Speech Translation System for Language Barrier Reduction

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Can You Give Me Another Word for Hyperbaric?: Improving Speech

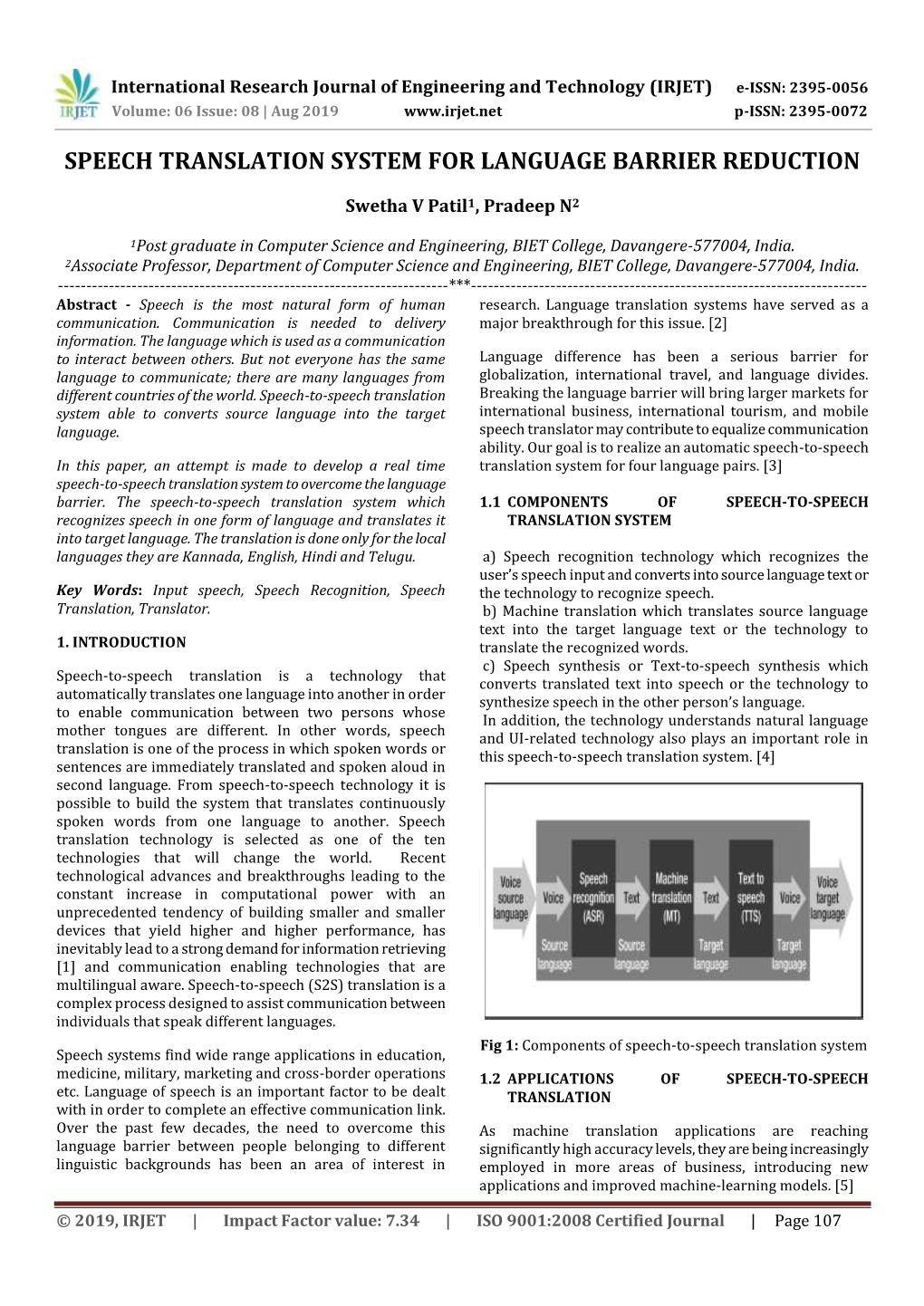

“Can You Give Me Another Word for Hyperbaric?”: Improving Speech Translation Using Targeted Clarification Questions. Necip Fazil Ayan1, Arindam Mandal1, Michael Frandsen1, Jing Zheng1, Peter Blasco1, Andreas Kathol1, Frédéric Béchet2, Benoit Favre2, Alex Marin3, Tom Kwiatkowski3, Mari Ostendorf3, Luke Zettlemoyer3, Philipp Salletmayr5∗, Julia Hirschberg4, Svetlana Stoyanchev4. 1 SRI International, Menlo Park, USA; 2 Aix-Marseille Université, Marseille, France; 3 University of Washington, Seattle, USA; 4 Columbia University, New York, USA; 5 Graz Institute of Technology, Austria.

ABSTRACT: We present a novel approach for improving communication success between users of speech-to-speech translation systems by automatically detecting errors in the output of automatic speech recognition (ASR) and statistical machine translation (SMT) systems. Our approach initiates system-driven targeted clarification about errorful regions in user input and repairs them given user responses. Our system has been evaluated by unbiased subjects in live mode, and results show improved success of communication between users of the system.

Index Terms: Speech translation, error detection, error correction, spoken dialog systems.

Our previous work on speech-to-speech translation systems has shown that there are seven primary sources of errors in translation:
• ASR named entity OOVs: Hi, my name is Colonel Zigman.
• ASR non-named entity OOVs: I want some pristine plates.
• Mispronunciations: I want to collect some +de-MOG-raf-ees about your family? (demographics)
• Homophones: Do you have any patients to try this medication? (patients vs. patience)
• MT OOVs: Where is your father-in-law?
• Word sense ambiguity: How many men are in your company? (organization vs. -

Using Languages in Legal Interpreting

Using Languages in Legal Interpreting. Editor’s note: In this issue of The Language Educator, we continue our series of articles focusing on different career opportunities available to language professionals and exploring how students might prepare for these jobs. Here we look at different careers in the legal field. By Patti Koning.

Legal interpreting is one of the fastest growing fields for foreign language specialists. In fact, the profession is having a tough time keeping up with the demand for certified legal interpreters and translators, due to the increase in the number of individuals with limited English language skills in the United States, along with law enforcement efforts against undocumented foreign nationals. Federal and state guidelines mandate that legal interpreters be provided for any speaker with limited English skills in a courtroom proceeding, court-ordered mediation, or other court-ordered programs such as diversion and anger management classes. This includes defen-

“This job gives me the opportunity to see all sides of a courtroom proceeding. A legal interpreter is a neutral party, but you are still providing a tremendous service to the community and to the court,” she explains. “It’s also a lot of fun. There is always something new - you are dealing with different people and issues constantly. It really keeps you on your toes.” That neutrality can pose a challenge for people new to the field. “If you interpret, that is the only role you can play. You aren’t a social worker, friend, advocate, or advisor and that distance is something that has to be learned,” explains Lois Feuerle, a NAJIT director. -

Piracy Or Productivity: Unlawful Practices in Anime Fansubbing

Aalto University, School of Science and Technology, Faculty of Information and Natural Sciences, Degree Programme of Computer Science and Engineering. Teemu Mäntylä: Piracy or productivity: unlawful practices in anime fansubbing. Master's thesis, Espoo, June 3, 2010. Supervisor: Professor Tapio Takala. Instructor: -

Abstract. Piracy or productivity: unlawful practices in anime fansubbing. Over a short period of time, Japanese animation or anime has grown explosively in popularity worldwide. In the United States this growth has been based on copyright infringement, where fans have subtitled anime series and released them as fansubs. In the absence of official releases fansubs have created the current popularity of anime, which companies can now benefit from. From the beginning the companies have tolerated and even encouraged the fan activity, partly because the fans have followed their own rules, intended to stop the distribution of fansubs after official licensing. The work explores the history and current situation of fansubs, and seeks to explain how these practices adopted by fans have arisen, why both fans and companies accept them and act according to them, and whether the situation is sustainable. Keywords: Japanese animation, anime, fansub, copyright, piracy

Tiivistelmä (Finnish abstract, translated). Piracy or productivity: unlawful practices in anime fansubbing. The worldwide popularity of Japanese animation, or anime, has grown explosively in a short time. In the United States this growth has been based on copyright infringement, where fans have subtitled anime series themselves and released them as fansubs. In the absence of official releases, fansubs have created the current popularity of anime, which companies can now exploit. From the beginning the companies have tolerated and even encouraged the fans' activity, partly because the fans have followed their own rules, which are intended to stop the distribution of fansubs after official licensing. -

A Survey of Voice Translation Methodologies - Acoustic Dialect Decoder

International Conference On Information Communication & Embedded Systems (ICICES-2016). A Survey of Voice Translation Methodologies - Acoustic Dialect Decoder. Hans Krupakar, Keerthika Rajvel, Bharathi B, Angel Deborah S, Vallidevi Krishnamurthy. Dept. of Computer Science and Engineering, SSN College of Engineering.

Abstract: Speech Translation has always been about giving source text/audio input and waiting for system to give translated output in desired form. In this paper, we present the Acoustic Dialect Decoder (ADD) – a voice to voice ear-piece translation device. We introduce and survey the recent advances made in the field of Speech Engineering, to employ in the ADD, particularly focusing on the three major processing steps of Recognition, Translation and Synthesis. We tackle the problem of machine understanding of natural language by

[...] in order to make the process of communication amongst humans better, easier and efficient. However, the existing methods, including Google voice translators, typically handle the process of translation in a non-automated manner. This makes the process of translation of word(s) and/or sentences from one language to another, slower and more tedious. We wish to make that process automatic – have a device do what a human translator does, inside our ears. -

Incorporating Fansubbers Into Corporate Capitalism on Viki.Com

“A Community Unlike Any Other”: Incorporating Fansubbers into Corporate Capitalism on Viki.com. Taylore Nicole Woodhouse. TC 660H, Plan II Honors Program, The University of Texas at Austin, Spring 2018. Supervising Professor: Dr. Suzanne Scott, Department of Radio-Television-Film. Second Reader: Dr. Youjeong Oh, Department of Asian Studies.

ABSTRACT. Author: Taylore Nicole Woodhouse. Title: “A Community Unlike Any Other”: Incorporating Fansubbers into Corporate Capitalism on Viki.com. Supervising Professors: Dr. Suzanne Scott and Dr. Youjeong Oh.

Viki.com, founded in 2008, is a streaming site that offers Korean (and other East Asian) television programs with subtitles in a variety of languages. Unlike other K-drama distribution sites that serve audiences outside of South Korea, Viki utilizes fan-volunteers, called fansubbers, as laborers to produce its subtitles. Fan subtitling and distribution of foreign language media in the United States is a rich fan practice dating back to the 1980s, and Viki is the first corporate entity that has harnessed the productive power of fansubbers. In this thesis, I investigate how Viki has been able to capture the enthusiasm and productive capacity of fansubbers. Particularly, I examine how Viki has been able to monetize fansubbing while still staying competitive with sites that employ trained, professional translators. I argue that Viki has succeeded in courting fansubbers as laborers by co-opting the concept of the “fan community.” I focus on how Viki strategically speaks about the community and builds its site to facilitate the functioning of its community so as to encourage fansubbers to view themselves as semi-professional laborers instead of amateur fans. -

Presenting to Elementary School Students Sample Script #1

Presenting to Elementary School Students. These sample presentations, tips, and exercises can be adapted for your needs. If you do use any of these materials, please be sure to acknowledge the author’s contribution appropriately.

Sample Script #1. Please acknowledge: Lillian Clementi. Please note that this presentation incorporates a number of ideas contributed by other people. Special thanks to Barbara Bell and Amanda Ennis.

Length. The typical elementary school presentation is about 20 minutes, though you may have less for Career Day or more if you're doing a special presentation for a language class. There are several interactive exercises to choose from at the end: adapt the script to your needs by adding or eliminating material.

Level. This is pitched largely to third or fourth grade. I usually start with the more basic exercises and then go on to the next if I have time and the group seems to be following me. For younger children, simply make them aware of other languages and focus on getting the first two or three points across. For fifth graders, you may want to make the presentation a little more sophisticated by incorporating some of the material from the middle school page.

Logistics. It's helpful to transfer the script to index cards (numbered so they're easy to put back in order if they're dropped!). Because they're easier to hold than sheets of paper, they allow you to move around the room more freely, and you can simply reshuffle them or eliminate cards if you need to change your material at the last minute. -

Learning Speech Translation from Interpretation

Learning Speech Translation from Interpretation. Dissertation approved by the Department of Informatics of the Karlsruhe Institute of Technology (KIT) for the academic degree of Doctor of Engineering, by Matthias Paulik from Karlsruhe. Date of oral examination: May 21, 2010. First reviewer: Prof. Dr. Alexander Waibel. Second reviewer: Prof. Dr. Tanja Schultz.

I hereby declare that I have written this thesis independently and have used no sources or aids other than those indicated, that I have marked as such all passages taken verbatim or in substance from other works, and that I have observed the statutes of KIT, formerly Universität Karlsruhe (TH), for safeguarding good scientific practice in their currently valid version. Karlsruhe, May 21, 2010. Matthias Paulik

Abstract. The basic objective of this thesis is to examine the extent to which automatic speech translation can benefit from an often available but ignored resource, namely human interpreter speech. The main contribution of this thesis is a novel approach to speech translation development, which makes use of that resource. The performance of the statistical models employed in modern speech translation systems depends heavily on the availability of vast amounts of training data. State-of-the-art systems are typically trained on: (1) hundreds, sometimes thousands of hours of manually transcribed speech audio; (2) bi-lingual, sentence-aligned text corpora of manual translations, often comprising tens of millions of words; and (3) monolingual text corpora, often comprising hundreds of millions of words. The acquisition of such enormous data resources is highly time-consuming and expensive, rendering the development of deployable speech translation systems prohibitive to all but a handful of economically or politically viable languages. -

Is 42 the Answer to Everything in Subtitling-Oriented Speech Translation?

Is 42 the Answer to Everything in Subtitling-oriented Speech Translation? Alina Karakanta (Fondazione Bruno Kessler, University of Trento, Trento - Italy), Matteo Negri (Fondazione Bruno Kessler, Trento - Italy), Marco Turchi (Fondazione Bruno Kessler, Trento - Italy).

Abstract. Subtitling is becoming increasingly important for disseminating information, given the enormous amounts of audiovisual content becoming available daily. Although Neural Machine Translation (NMT) can speed up the process of translating audiovisual content, large manual effort is still required for transcribing the source language, and for spotting and segmenting the text into proper subtitles. Creating proper subtitles in terms of timing and segmentation highly depends on information present in the audio (utterance duration, natural pauses). In this work, we explore two methods for applying Speech Translation (ST) to subtitling: a) a direct end-to-end and b) a classical cascade approach.

[...] content, still rely heavily on human effort. In a typical multilingual subtitling workflow, a subtitler first creates a subtitle template (Georgakopoulou, 2019) by transcribing the source language audio, timing and adapting the text to create proper subtitles in the source language. These source language subtitles (also called captions) are already compressed and segmented to respect the subtitling constraints of length, reading speed and proper segmentation (Cintas and Remael, 2007; Karakanta et al., 2019). In this way, the work of an NMT system is already simplified, since it only needs to translate matching the length of the source text (Matusov et al., 2019; Lakew et al., 2019). However, the essence of a good subtitle goes beyond matching a prede-

Breaking Through the Language Barrier – Bringing 'Dead'

Museum Management and Curatorship, ISSN: 0964-7775 (Print) 1872-9185 (Online). Journal homepage: http://www.tandfonline.com/loi/rmmc20

Breaking through the language barrier – bringing ‘dead’ languages to life through sensory and narrative engagement. Abigail Baker and Alison Cooley, Department of Classics and Ancient History, University of Warwick, Coventry, UK.

To cite this article: Abigail Baker & Alison Cooley (2018): Breaking through the language barrier – bringing ‘dead’ languages to life through sensory and narrative engagement, Museum Management and Curatorship, DOI: 10.1080/09647775.2018.1501601. To link to this article: https://doi.org/10.1080/09647775.2018.1501601. © 2018 The Author(s). Published by Informa UK Limited, trading as Taylor & Francis Group. Published online: 28 Jul 2018.

ARTICLE HISTORY: Received 7 December 2017; Accepted 14 July 2018. KEYWORDS: Museums; interpretation; epigraphy; storytelling; inscriptions; senses.

ABSTRACT: Ancient inscriptions can be difficult to understand and off-putting to museum audiences, but they are packed with personal stories and vivid information about the people who made them. This article argues that overcoming the language barrier presented by these objects can offer a deep sense of engagement with the ancient world and explores possible ways of achieving this. It looks at examples of effective approaches from a range of European museums with a particular emphasis on bringing out the sensory, social, and narrative dimensions of these objects. -

Speech Translation Into Pakistan Sign Language

Master Thesis, Computer Science. Thesis No: MCS-2010-24, 2012. Speech Translation into Pakistan Sign Language. Ahmed Abdul Haseeb, Asim Ilyas. School of Computing, Blekinge Institute of Technology, SE – 371 79 Karlskrona, Sweden.

This thesis is submitted to the School of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Computer Science. The thesis is equivalent to 20 weeks of full time studies.

Contact Information: Authors: Ahmed Abdul Haseeb, Asim Ilyas. University advisor: Prof. Sara Eriksén. School of Computing, Blekinge Institute of Technology, SE – 371 79 Karlskrona, Sweden. Internet: www.bth.se/com. Phone: +46 457 38 50 00. Fax: +46 457 271 25.

ABSTRACT. Context: Communication is a primary human need and language is the medium for this. Most people have the ability to listen and speak and they use different languages like Swedish, Urdu and English etc. to communicate. Hearing impaired people use signs to communicate. Pakistan Sign Language (PSL) is the preferred language of the deaf in Pakistan. Currently, human PSL interpreters are required to facilitate communication between the deaf and hearing; they are not always available, which means that communication among the deaf and other people may be impaired or nonexistent. In this situation, a system with voice recognition as an input and PSL as an output will be highly helpful. Objectives: As part of this thesis, we explore challenges faced by deaf people in everyday life while interacting with the unimpaired. -

Looking Beyond the Knocked-Down Language Barrier

TranscUlturAl, vol. 9.2 (2017), 86-108. http://ejournals.library.ualberta.ca/index.php/TC

No Linguistic Borders Ahead? Looking Beyond the Knocked-down Language Barrier. Tomáš Svoboda, Charles University, Prague.

1. Introduction. Our times are those of change. The paradigm-shifting technology of Machine Translation (MT) clearly comes with the potential of bringing down the so-called language barrier. This involves a vision of people using electronic devices to capture the spoken or written word, those devices then rendering the text or utterance from one language into another automatically. Already today, people use their smartphones exactly for that purpose, albeit with restricted functionality: they use a publicly available MT service with their smartphone, typing in a sentence in one language, have it translate the text, and show the on-screen result to the other party in the conversation, who then reads the translated message, and, in time, responds in the same way. This rather cumbersome procedure is quite easy to enhance by voice recognition and voice synthesis systems. For example, the Google Translate service allows for speech being dictated into the device, then speech recognition software converting the spoken text into written text, which is then fed into the translation engine. The latter transforms the text material, and speech synthesis software enables the message to be read aloud by the device. What is here today, wrapped in rather a primitive interface, is the precursor of future wearable devices (some of which have been referred to as SATS), or even augmented-reality tools, which will better respect the ergonomics of human bodies and, eventually, become part of either smart clothing, or even implanted into our own organisms. -

Between Flexibility and Consistency: Joint Generation of Captions And

Between Flexibility and Consistency: Joint Generation of Captions and Subtitles. Alina Karakanta1,2, Marco Gaido1,2, Matteo Negri1, Marco Turchi1. 1 Fondazione Bruno Kessler, Via Sommarive 18, Povo, Trento - Italy; 2 University of Trento, Italy. {akarakanta,mgaido,negri,turchi}@fbk.eu

Abstract. Speech translation (ST) has lately received growing interest for the generation of subtitles without the need for an intermediate source language transcription and timing (i.e. captions). However, the joint generation of source captions and target subtitles does not only bring potential output quality advantages when the two decoding processes inform each other, but it is also often required in multilingual scenarios. In this work, we focus on ST models which generate consistent captions-subtitles in terms of structure and lexical content. We further introduce new metrics for evaluating subtitling consistency. Our findings show that joint decoding leads to increased performance and consistency between the generated cap-

[...] material but also between each other, for example in the number of blocks (pieces of time-aligned text) they occupy, their length and segmentation. Consistency is vital for user experience, for example in order to elicit the same reaction among multilingual audiences, or to facilitate the quality assurance process in the localisation industry. Previous work in ST for subtitling has focused on generating interlingual subtitles (Matusov et al., 2019; Karakanta et al., 2020a), a) without considering the necessity of obtaining captions consistent with the target subtitles, and b) without examining whether the joint generation leads to improvements in quality. We hypothesise that knowledge sharing between the tasks of transcription and translation could lead to such improvements.