COMPRESSION: the Good, the Bad and the Ugly

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Survey Paper on Different Speech Compression Techniques

Vol-2 Issue-5 2016, IJARIIE, ISSN (O) 2395-4396

Kanawade Pramila R. (1), Prof. Gundal Shital S. (2)
(1) M.E. Electronics, Department of Electronics Engineering, Amrutvahini College of Engineering, Sangamner, Maharashtra, India.
(2) HOD in Electronics Department, Department of Electronics Engineering, Amrutvahini College of Engineering, Sangamner, Maharashtra, India.

ABSTRACT This paper describes the different types of speech compression techniques. Speech compression can be divided into two main types: lossless and lossy compression. The survey covers waveform-based, parametric-based, and hybrid speech compression. Compression means reducing the size of data with the available memory in mind. Speech compression means compressing voiced signals for applications such as high-quality databases of speech signals, multimedia applications, music databases, and internet applications. Today speech compression is very useful in everyday life. The main aim of speech compression is to compress any type of audio that is transferred over a communication channel, because channel bandwidth and data storage capacity are limited and a low bit rate is required. Both lossless and lossy techniques reduce the number of bits in the original information. With lossless compression there is no loss of the original information, while with lossy compression some bits are lost.

Keywords: Bit rate, Compression, Waveform-based speech compression, Parametric-based speech compression, Hybrid based speech compression.

1. INTRODUCTION Speech compression is used in the encoding system.

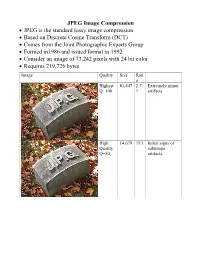

JPEG Image Compression

JPEG is the standard lossy image compression format, based on the Discrete Cosine Transform (DCT). It comes from the Joint Photographic Experts Group, which was formed in 1986 and issued the format in 1992.

Consider an image of 73,242 pixels with 24-bit color. Uncompressed, it requires 219,726 bytes.

| Image quality | Size (bytes) | Ratio | Comment |
|---|---|---|---|
| Highest (Q=100) | 81,447 | 2.7:1 | Extremely minor artifacts |
| High (Q=50) | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

JPEG: how it works

Color translation: RGB goes to Y′CBCR, a luma component and two chroma components. Y is brightness, CB is B-Y, and CR is R-Y.

Downsampling (chroma subsampling): the resolution of the chroma data is reduced by a factor of 2 or 3, because the eye is less sensitive to fine color details than to brightness.

Block splitting: each channel is broken into blocks of 8x8 (no subsampling), 16x8 (most common at medium compression), or 16x16. Remaining areas of incomplete blocks must be filled in. This gives the values for each block.

DCT centering: center the data about 0. The range is now -128 to 127 and the middle is zero.

Discrete cosine transform: apply the 2D DCT formula, which creates a new matrix. The top-left entry (the largest) is the DC coefficient, the constant component, which gives the basic hue for the block. The remaining 63 entries are AC coefficients. The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns.
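The centering and DCT steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the excerpt's own code: the helper names `level_shift` and `dct2` are hypothetical, the loop is the naive textbook form rather than a fast transform, and the scaling assumes the usual orthonormal 8×8 DCT-II convention.

```python
import math

N = 8  # JPEG block size


def level_shift(block):
    """Center 8-bit samples (0..255) about zero, giving the range -128..127."""
    return [[sample - 128 for sample in row] for row in block]


def dct2(block):
    """Naive 2D DCT-II of an N x N block with orthonormal scaling (assumed convention)."""
    def alpha(k):
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = (2 / N) * alpha(u) * alpha(v) * total
    return out


# A perfectly flat block: every sample is 200, so 72 after centering.
flat = [[200] * N for _ in range(N)]
coeffs = dct2(level_shift(flat))

dc = coeffs[0][0]  # top-left entry: the constant (DC) component
largest_ac = max(abs(coeffs[u][v])
                 for u in range(N) for v in range(N) if (u, v) != (0, 0))
print(round(dc, 6), largest_ac < 1e-9)
```

For a flat block all of the energy lands in the DC coefficient (8 × 72 = 576 under this scaling) and the 63 AC coefficients are zero up to rounding, which is why smooth image regions compress so well.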

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space

A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept

Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Digital Video Quality Handbook (May 2013)

Executive Summary

Under the direction of the Department of Homeland Security (DHS) Science and Technology Directorate (S&T), First Responders Group (FRG), Office for Interoperability and Compatibility (OIC), the Johns Hopkins University Applied Physics Laboratory (JHU/APL) worked with the Security Industry Association (including Steve Surfaro) and members of the Video Quality in Public Safety (VQiPS) Working Group to develop the May 2013 Video Quality Handbook. This document provides voluntary guidance for providing levels of video quality in public safety applications for network video surveillance. Several video surveillance use cases are presented to help illustrate how to relate video component and system performance to the intended application of video surveillance, while meeting the basic requirements of federal, state, tribal and local government authorities. Characteristics of video surveillance equipment are described in terms of how they may influence the design of video surveillance systems.

In order for the video surveillance system to meet the needs of the user, the technology provider must consider the following factors that impact video quality: 1) Device categories; 2) Component and system performance level; 3) Verification of intended use; 4) Component and system performance specification; and 5) Best fit and link to use case(s).

An appendix is also provided that presents content related to topics not covered in the original document (especially information related to video standards) and updates the material as needed to reflect innovation and changes in the video environment. The emphasis is on the implications of digital video data being exchanged across networks with large numbers of components or participants.


arXiv:2004.10531v1 [cs.OH] 8 Apr 2020

ROOT I/O compression improvements for HEP analysis

Oksana Shadura (1), Brian Paul Bockelman (2), Philippe Canal (3), Danilo Piparo (4), and Zhe Zhang (1)
(1) University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States
(2) Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States
(3) Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States
(4) CERN, Meyrin 1211, Geneve, Switzerland

Abstract. We overview recent changes in the ROOT I/O system that increase its performance and improve its interaction with other data analysis ecosystems. The newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques have the potential to significantly improve experiments' software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically increased during the LHC era. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly: there are significant trade-offs between the increased CPU cost for reading and writing files and the reduced storage space.

1 Introduction

In past years, LHC experiments have been commissioned and now manage about an exabyte of storage for analysis purposes, approximately half of which is used for archival purposes and half for traditional disk storage. Meanwhile, for HL-LHC, storage requirements per year are expected to increase by a factor of 10 [1]. Looking at these predictions, we would like to state that storage will remain one of the major cost drivers and at the same time one of the bottlenecks for HEP computing.
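The trade-off between CPU cost and storage space mentioned in the abstract can be observed with a small experiment. The sketch below does not use ROOT and makes no claim about which algorithms ROOT ships; it assumes Python's standard `zlib` and `lzma` modules as stand-ins, and both the `measure` helper and the synthetic payload are hypothetical.

```python
import lzma
import time
import zlib


def measure(name, compress, decompress, data):
    """Return (name, compressed size, seconds to compress, seconds to decompress)."""
    start = time.perf_counter()
    packed = compress(data)
    middle = time.perf_counter()
    restored = decompress(packed)
    end = time.perf_counter()
    if restored != data:
        raise ValueError(f"{name}: round trip was not lossless")
    return name, len(packed), middle - start, end - middle


# Synthetic, fairly repetitive payload standing in for real event data (assumption).
payload = b"".join(b"event %06d pt=%05d\n" % (i, (i * 37) % 1000)
                   for i in range(20_000))

candidates = [
    ("zlib level 1", lambda d: zlib.compress(d, 1), zlib.decompress),
    ("zlib level 9", lambda d: zlib.compress(d, 9), zlib.decompress),
    ("lzma preset 6", lambda d: lzma.compress(d, preset=6), lzma.decompress),
]

results = [measure(name, comp, decomp, payload) for name, comp, decomp in candidates]
for name, size, t_comp, t_decomp in results:
    print(f"{name:14s} {size:8d} bytes  ratio {len(payload) / size:5.1f}  "
          f"compress {t_comp * 1e3:7.1f} ms  decompress {t_decomp * 1e3:7.1f} ms")
```

The absolute numbers depend on the machine and on the data, so the useful output is the comparison between rows: stronger settings usually shrink the file further but cost more CPU time, which is the reason the authors warn against applying compression blindly.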

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

Rody Kossen - Consultant
@r_kossen, rody-kossen-186b4b40, www.rodykossen.com

Agenda
> The basics
> H264 – The magician
> Other codecs
> Hardware support (NVIDIA)

The basics

Let's start with some terms: YCbCr, RGB, Bit Depth, YUV

Colors

Visible colors

Color Bit-Depth = Amount of different colors
Used in 2 ways:
> The number of bits used to indicate the color of a single pixel, for example 24 bit
> Number of bits used for each color component of a single pixel (Red Green Blue), for example 8 bit
Can be combined with Alpha, which describes transparency
Most commonly used:
> High Color – 16 bit = 5 bits per color + 1 unused bit = 32,768 colors = 2^15
> True Color – 24 bit Color + 8 bit Alpha = 8 bits per color = 16,777,216 colors
> Deep color – 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors

Color Gamut
Describes the subset of colors which can be displayed in relation to the human eye
Standardized gamut:
> Rec.709 (Blu-ray)
> Rec.2020 (Ultra HD Blu-ray)
> Adobe RGB
> sRGB

Compression
Lossy
> Irreversible compression
> Used to reduce data size
> Examples: MP3, JPEG, MPEG-4
Lossless
> Reversible compression
> Examples: ZIP, PNG, FLAC or Dolby TrueHD
Visually Lossless
> Irreversible compression
> Difference can't be seen by the Human Eye

YCbCr Encoding
RGB uses Red Green Blue values to describe a color
YCbCr is a different way of storing color:
> Y = Luma or Brightness of the Color
> Cr = Chroma difference
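The color counts quoted for each bit depth follow directly from the number of bits per color component. A minimal check in Python, where the function name `color_count` is only an illustrative choice:

```python
def color_count(bits_per_channel, channels=3):
    """Number of distinct colors when each of `channels` components has the given bit depth."""
    return 2 ** (bits_per_channel * channels)


high_color = color_count(5)    # 15 color bits stored in a 16-bit pixel
true_color = color_count(8)    # 24 color bits
deep_color = color_count(10)   # 30 color bits

print(high_color, true_color, deep_color)
```

Alpha bits are left out of the calculation on purpose: they describe transparency and do not add distinct colors.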

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec?

Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions.

A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing.

The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream.

Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much.

Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format.

Instant Savings!*

Incredibly Easy! ® Was $64995** 18-55 NOW! VR Kit Mother’s Day D3100 ** Kit Includes 18-55mm VR Zoom-NIKKOR® Lens $ 95 549 * AFTER 14.2 UP TO 3 3" GUIDE MODE MEGAPIXELS FRAMES PER SECOND LCD MONITOR EASE OF USE $100 * Nikon Instant Savings 1080p INSTANT SAVINGS! HDVIDEO WITH FULL-TIME AUTOFOCUS White EXTRA INSTANT SAVINGS! Was $89990** Was $64995** Was $24995** NOW! NOW! NOW! ** $ 95** $ 95** $ 90 549 149 699 White Gift Pack Includes AFTER AFTER $100 $100 AFTER 1 NIKKOR VR 10mm-30mm * * Instant Savings + Instant Savings = $ Plum Zoom Lens, Red Leather 200 D3100 KIT INCLUDES 18-55MM DX VR BUY AN ADDITIONAL TELEPHOTO 55-200MM DX VR Camera Case & 8GB Memory Card Instant Savings* ZOOM-NIKKOR LENS. ZOOM-NIKKOR LENS AND SAVE $100 INSTANTLY. Gift Pack Includes Camera Case & 4GB Memory Card J1 White Gift Pack Was $74995** AFTER NOW! $ New! 200 ** Instant $ 95 Savings* Gift Pack 549 16 6x WIDE 3" HI-RES TOUCH Nikon MEGAPIXELS OPTICAL ZOOM LCD DISPLAY HD VIDEO $ 95** J1200182 KIT J1200182 KIT Was 219 AFTER $ NOW! 90 **

Sunset Drive • Waterbury Center, VT. Located off Route …, across from the Cold Hollow Cider Mill. Everyday Low Prices. In-Store Specials. Have used equipment? Put it to good use. Sell or trade in your equipment today. 802-244-0883, www.gmcamera.com

* For details regarding Nikon’s Instant Savings Offers described in this flyer, please visit: nikonusa.com/mothersdaykitoffers. Instant Savings offers on the COOLPIX S4300 Gift Pack, Nikon 1 J1 White Gift Pack, COOLPIX L26, COOLPIX L810, COOLPIX P310, COOLPIX S6300 and COOLPIX S9300 are effective from 5/6/12 (or earlier) through 5/12/12. Instant Savings offers on the COOLPIX S30 and COOLPIX S3300 are effective from 5/6/12 through 5/19/12.

USB DisplayPort Dual View HDBaseT™ 2.0 KVM Extender (4K@100m for Single View)

CE924

The ATEN CE924 USB DisplayPort Dual View HDBaseT™ 2.0 KVM Extender integrates the latest HDBaseT™ 2.0 technologies to deliver 4K video, stereo audio, USB, and RS-232 signals. HDBaseT™ 2.0 guarantees the most reliable transmission on the market and provides long-reach capability that extends Full HD 1080P signals up to 150 meters. In addition, the CE924 supports a single 4K display or dual 1080p displays for DisplayPort video output at distances up to 100 m over a single Cat 6 / 2L-2910 Cat 6 cable. With an easy cable installation supporting various signals, the CE924 is ideal for applications where convenient remote access is required – such as transportation control centers, medical facilities, industrial warehouses, and extended workstations.

Features

Allows dual view DisplayPort access to a computer and KVM control from a remote console
Supports HDBaseT 2.0 technology
- Extends video, audio, USB, and RS-232 signals via a single Cat6 / 6a / 2L-2910 Cat 6 cable
- Enhanced bit error detection and correction to resist signal interference during high-quality video transmissions
- Status detection and LED indication for HDBaseT™ signal transmission on the remote unit
EDID Buffer for smooth power-up and the highest quality display
Single category cable to transmit AV and control signal
- HDBaseT Standard mode up to 4K @ 100 m (via Cat 6 / 6a / 2L-2910); (single view only; dual-view resolution up to 1080p)
- HDBaseT Long-Reach mode up to 1080P @ 150 m

Canon XA25.Pdf

XA25 Professional Camcorder

The XA25 is a compact, high-performance professional camcorder designed specifically for “run-and-gun,” ENG-style shoots with enhanced I/O connectivity. The XA25 offers a unique combination of high-precision optics, outstanding image processing, multiple recording formats, flexible connectivity and intuitive user features. But its compact design does not mean compromised functionality – this small and lightweight camcorder meets the quality and performance standards necessary for use in a wide range of applications, including news gathering, law enforcement and military, education and documentary, among others.

A powerful, all-new Genuine Canon 20x HD Video Lens with SuperRange Optical Image Stabilization and a new 8-Blade Circular Aperture extends user creativity, while a new 2.91 Megapixel Full HD CMOS Sensor and new DIGIC DV 4 Image Processor capture superb images with outstanding clarity. The camcorder features both MP4 (up to 35 Mbps) and AVCHD (up to 28 Mbps) codecs at up to 1080/50p resolution for virtually blur-free, high-quality capture of fast-moving subjects. Dual-band, built-in Wi-Fi® technology allows easy FTP file transfer and upload to the internet.

A 3.5-inch OLED Touch Panel Display with the equivalent of 1.23 million dots of resolution offers a high contrast ratio, a wide-view angle and high-speed response, while a pair of SDHC/SDXC-compatible card slots enable Relay Recording, double-slot recording, and all-new Dual Recording, which enables the XA25 to capture video in different recording formats simultaneously. An HD/SD-SDI port allows uncompressed video with embedded audio and timecode data to be output to video editors, recorders, news trucks, workstations and other digital cinema/TV hardware.

On the Accuracy of Video Quality Measurement Techniques

Deepthi Nandakumar (Amazon Video, Bangalore, India), Yongjun Wu (Amazon Video, Seattle, USA), Hai Wei (Amazon Video, Seattle, USA), Avisar Ten-Ami (Amazon Video, Seattle, USA)

Abstract. With the massive growth of Internet video streaming, it is critical to accurately measure video quality subjectively and objectively, especially HD and UHD video which is bandwidth intensive. We summarize the creation of a database of 200 clips, with 20 unique sources tested across a variety of devices. By classifying the test videos into 2 distinct quality regions SD and HD, we show that the high correlation claimed by objective video quality metrics is led mostly by videos in the SD quality region. We perform detailed correlation analysis and statistical hypothesis testing of the HD subjective quality scores, and establish that the commonly used ACR methodology of subjective testing is unable to capture significant quality differences, leading to poor measurement accuracy for both subjective and objective …

… and therefore measuring and optimizing for video quality in these high quality ranges is an imperative for streaming service providers. In this study, we lay specific emphasis on the measurement accuracy of subjective and objective video quality scores in this high quality range.

Globally, a healthy mix of devices with different screen sizes and form factors is projected to contribute to IP traffic in 2021 [1], ranging from smartphones (44%), to tablets (6%), PCs (19%) and TVs (24%). It is therefore necessary for streaming service providers to quantify the viewing experience based on the device, and possibly optimize the encoding and delivery process accordingly.

PROFESSIONAL VIDEO 315 800-947-1175 | 212-444-6675 Blackmagic • Canon

XA10 Professional HD Camcorder

Ultra-compact, the XA10 shares nearly all the functionality of the XF100, but in an even smaller, run-and-gun form factor. 64GB internal flash drive and two SDXC-compatible card slots allow non-stop recording. Able to capture AVCHD video at bitrates up to 24Mbps, the camcorder’s native 1920 x 1080 CMOS sensor also lets you choose 60i, 24p, PF30, and PF24 frame rates for customizing the look of your footage.

Pocket Cinema Camera

Pocket Cinema Camera is a true Super 16 digital film camera that’s small enough to keep with you at all times. Remarkably compact (5 x 2.6 x 1.5”) and lightweight (12.5 oz) with a magnesium alloy chassis, it features 13 stops of dynamic range, Super 16 sensor size, and records 1080HD lossless CinemaDNG RAW and Apple ProRes 422 (HQ) files to fast SDXC cards, so you can immediately edit or color correct your media on your laptop. Active Micro Four Thirds lens mount can

VIDEO TAPE

Fuji Film
PRO-T120 VHS Video Cassette (FUPROT120) 3.29
DVC-60 Mini DV Cassette (FUDVC60) 3.35
DVC-80 Mini DV Cassette (FUDVC80) 7.99
HDV Cassette, 63 Minute (FUHDVDVM63) 6.99
DV141HD63S HDV (FUDV14163S) 7.95

Maxell
DV-60 Mini DV Cassette (MADVM60SE) 3.99
M-DV63PRO Mini DV Cassette (MADVM63PRO) 5.50
T-120 VHS Cassette (MAGXT120) 2.39
STD-160 VHS Cassette (MAGXT160) 2.69
STD-180 VHS Cassette (MAGXT180) 3.09
HG-T120 VHS Cassette (MAHGT120) 1.99
HG-T160 VHS Video Cassette (MAHGT160) 2.59