Image and Video Compression Coding Theory Contents

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Evaluation of Color Differences Across Different Devices Craitishia Lewis Clemson University, [email protected]

Clemson University, TigerPrints, All Theses, 12-2013. What You See Isn't Always What You Get: An Evaluation of Color Differences Across Different Devices. Craitishia Lewis, Clemson University, [email protected]. Follow this and additional works at: https://tigerprints.clemson.edu/all_theses. Part of the Communication Commons.

Recommended Citation: Lewis, Craitishia, "What You See Isn't Always What You Get: An Evaluation of Color Differences Across Different Devices" (2013). All Theses. 1808. https://tigerprints.clemson.edu/all_theses/1808

This Thesis is brought to you for free and open access by the Theses at TigerPrints. It has been accepted for inclusion in All Theses by an authorized administrator of TigerPrints. For more information, please contact [email protected].

A Thesis Presented to The Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Master of Science, Graphic Communications. By Craitishia Lewis, December 2013. Accepted by: Dr. Samuel Ingram, Committee Chair; Kern Cox; Dr. Eric Weisenmiller; Dr. Russell Purvis.

ABSTRACT The objective of this thesis was to examine color differences between different digital devices such as phones, tablets, and monitors. New technology has always been the catalyst for growth and change within the printing industry. With gadgets like the iPhone and the iPad becoming increasingly popular in recent years, printers have yet another technological advancement to consider. Soft proofing strategies use color management technology that allows the client to view their proof on a monitor as a duplication of how the finished product will appear on a printed piece of paper. -

Color Models

Color Models. Jian Huang, CS456.

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
– Additive (RGB) or subtractive (CMYK)
– Separation of luminance and chromaticity
– Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l'Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• The irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of color representation of a display device

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is 'bloody red' in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates. -
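The slide excerpt above names the RGB/CMY conversion and the xyY chromaticity diagram without giving formulas. The following is a minimal sketch, assuming components normalized to the range 0 to 1 and the usual textbook definitions (CMY as the complement of RGB, and x = X/(X+Y+Z), y = Y/(X+Y+Z)). The function names are illustrative and do not come from the slides.

```python
def rgb_to_cmy(r, g, b):
    """Subtractive complement: each dye absorbs one additive primary."""
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy; the mapping is its own inverse."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


def xyz_to_xyy(X, Y, Z):
    """Project CIE XYZ onto the chromaticity plane, keeping luminance Y.

    Scaling (X, Y, Z) by a common factor changes Y but not (x, y), which is
    why one color at varying intensity lands on a single chromaticity point.
    """
    total = X + Y + Z
    if total == 0:
        raise ValueError("chromaticity is undefined for X + Y + Z == 0")
    return (X / total, Y / total, Y)


if __name__ == "__main__":
    # Pure red needs no cyan dye, but full magenta and yellow.
    print(rgb_to_cmy(1.0, 0.0, 0.0))
    # Same chromaticity at two intensities.
    print(xyz_to_xyy(0.2, 0.3, 0.5), xyz_to_xyy(0.4, 0.6, 1.0))
```

Red mapping to full magenta plus yellow agrees with the dye table in the excerpt: magenta absorbs green and yellow absorbs blue, so only red is reflected.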

Package 'Colorscience'

Package ‘colorscience’, October 29, 2019.

Type: Package. Title: Color Science Methods and Data. Version: 1.0.8. Encoding: UTF-8. Date: 2019-10-29. Maintainer: Glenn Davis <[email protected]>. Description: Methods and data for color science - color conversions by observer, illuminant, and gamma. Color matching functions and chromaticity diagrams. Color indices, color differences, and spectral data conversion/analysis. License: GPL (>= 3). Depends: R (>= 2.10), Hmisc, pracma, sp. Enhances: png. LazyData: yes. Author: Jose Gama [aut], Glenn Davis [aut, cre]. Repository: CRAN. NeedsCompilation: no. Date/Publication: 2019-10-29 18:40:02 UTC.

R topics documented: ASTM.D1925.YellownessIndex, ASTM.E313.Whiteness, ASTM.E313.YellownessIndex, Berger59.Whiteness, BVR2XYZ, cccie31, cccie64, CCT2XYZ, CentralsISCCNBS, CheckColorLookup, ChromaticAdaptation, chromaticity.diagram, chromaticity.diagram.color, CIE.Whiteness, CIE1931xy2CIE1960uv, CIE1931xy2CIE1976uv, CIE1931XYZ2CIE1931xyz, CIE1931XYZ2CIE1960uv, CIE1931XYZ2CIE1976uv, CIE1960UCS2CIE1964, CIE1960UCS2xy, CIE1976chroma, CIE1976hueangle, CIE1976uv2CIE1931xy, CIE1976uv2CIE1960uv, CIE1976uvSaturation, CIELabtoDIN99, CIEluminanceY2NCSblackness, CIETint, ciexyz31, ciexyz64, CMY2CMYK, CMY2RGB, CMYK2CMY, ColorBlockFromMunsell, compuphaseDifferenceRGB, conversionIlluminance, conversionLuminance, createIsoTempLinesTable, daylightcomponents, deltaE1976 -

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article. An Improved SPSIM Index for Image Quality Assessment. Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus. Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.) * Correspondence: [email protected]; Tel.: +48-32-2371066

Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.

Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures

Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518

1. Introduction. Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods. -

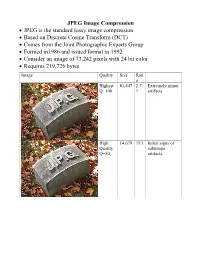

JPEG Image Compression

JPEG Image Compression. JPEG is the standard lossy image compression format. It is based on the Discrete Cosine Transform (DCT) and comes from the Joint Photographic Experts Group, which was formed in 1986 and issued the format in 1992.

Consider an image of 73,242 pixels with 24-bit color. Uncompressed, it requires 219,726 bytes.

| Image quality | Size (bytes) | Ratio | Comment |
| Highest (Q=100) | 81,447 | 2.7:1 | Extremely minor artifacts |
| High (Q=50) | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

JPEG: How it works

Begin with a color translation: RGB goes to Y′CBCR (luma and two chroma components). Y is brightness, CB is B-Y, CR is R-Y.

Downsampling (chroma subsampling): chroma data resolution is reduced by a factor of 2 or 3, because the eye is less sensitive to fine color details than to brightness.

Block splitting: each channel is broken into 8x8 blocks (no subsampling), or 16x8 (most common at medium compression), or 16x16. The remaining areas of incomplete blocks must be filled in. This gives the values.

DCT, centering: center the data about 0. The range is now -128 to 127, and the middle is zero.

Discrete cosine transform formula: apply as a 2D DCT using the formula, which creates a new matrix. The top left (largest) entry is the DC coefficient, the constant component, which gives the basic hue for the block. The remaining 63 are AC coefficients.

Discrete cosine transform: the DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns. -
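The excerpt above refers to "the formula" for the 2D DCT without reproducing it. As a rough illustration, the sketch below applies the centering step (subtracting 128) and then the standard orthonormal 8×8 DCT-II used in baseline JPEG, and also reproduces the size arithmetic from the example image. It is written in pure Python for clarity rather than speed, and all function names are illustrative assumptions, not part of the slides.

```python
import math

N = 8  # JPEG block size; the 0.25 scale factor below assumes N == 8


def level_shift(block):
    """Center 8-bit samples about zero: 0..255 becomes -128..127."""
    return [[v - 128 for v in row] for row in block]


def dct_2d(block):
    """Standard 8x8 DCT-II (orthonormal scaling), computed directly."""
    def c(k):
        return 1.0 / math.sqrt(2.0) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = 0.25 * c(u) * c(v) * total
    return out


def raw_size_bytes(pixels, bits_per_pixel=24):
    """Uncompressed size, e.g. 73,242 pixels at 24 bits per pixel."""
    return pixels * bits_per_pixel // 8


def compression_ratio(raw_bytes, compressed_bytes):
    return raw_bytes / compressed_bytes


if __name__ == "__main__":
    # A flat block has only a DC coefficient; all 63 AC terms vanish.
    flat = [[138] * N for _ in range(N)]
    coeffs = dct_2d(level_shift(flat))
    print(round(coeffs[0][0], 6))
    raw = raw_size_bytes(73242)
    print(raw, round(compression_ratio(raw, 14679), 1))
```

A flat block is a convenient sanity check: after centering, every sample equals the same constant, so all the energy ends up in the top-left DC coefficient, matching the description of the DC term as the constant component.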

Energy-Efficient Design of the Secure Better Portable

Energy-Efficient Design of the Secure Better Portable Graphics Compression Architecture for Trusted Image Communication in the IoT. Umar Albalawi, Computer Science and Engineering, University of North Texas, USA, Email: [email protected]. Saraju P. Mohanty, Computer Science and Engineering, University of North Texas, USA, Email: [email protected]. Elias Kougianos, Engineering Technology, University of North Texas, USA, Email: [email protected].

Abstract—Energy consumption has become a major concern in portable applications. This paper proposes an energy-efficient design of the Secure Better Portable Graphics Compression (SBPG) Architecture. The architecture proposed in this paper is suitable for imaging in the Internet of Things (IoT) as the main concentration is on the energy efficiency. The novel contributions of this paper are divided into two parts. One is the energy efficient SBPG architecture, which offers encryption and watermarking, a double layer protection to address most of the issues related to privacy, security and digital rights management. The other novel contribution is the Secure Digital Camera integrated with the SBPG architecture. The combination of these two gives the best

[...] it. On the other hand, researchers from the software field investigate how the software itself and its different uses can influence energy consumption. An efficient software is capable of adapting to the requirements of everyday usage while saving as much energy as possible. Software engineers contribute to improving energy consumption by designing frameworks and tools used in the process of energy metering and profiling [2]. As a specific type of data, images can have a long life if stored properly. However, images require a large storage space. The process of storing an image starts with its compression. -

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING Color space A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position. -

Digital Cinematography Camera F35 F23

Digital Cinematography Camera F35 F23. www.sony.com/professional

ADVANCING THE ART OF DIGITAL IMAGING. CineAlta, a name that proudly symbolizes the bond between cinematography and high-resolution digital imaging, distinguishes Sony’s family of 24P acquisition products and systems. The emergence of Sony’s CineAlta™ products marked the beginning of a new era in movie, commercial and television production applications. Since their introduction, CineAlta products, beginning with the groundbreaking HDW-F900, Sony’s first 24P-capable HDCAM™ camcorder, and the HDC-F950 full-bandwidth 4:4:4 (RGB) portable camera, have been globally accepted as a viable creative alternative to 24-frame film origination. Working closely with the creative community over time, Sony has created CineAlta acquisition systems designed specifically to meet the cinematographer’s needs. This collaboration has led to an array of highly sophisticated digital acquisition systems that offer comprehensive feature sets and workflows specifically designed to maximize on-set efficiencies, flexibility and creative freedom. Consequently, the name CineAlta has come to define the industry standards for quality and flexibility in 24-frame digital cinematography.

Expand Your Creative Possibilities With a Choice of Film-style Digital Cinematography Cameras. Sony has proudly introduced two new powerful film-style digital cinematography cameras to the CineAlta acquisition lineup. The F35 and F23 cameras combine the proven technology used in previous CineAlta acquisition models

Both the F35 and F23 provide an uncompromising design that allows direct docking with Sony’s SRW-1 portable HDCAM-SR™ recorder. It’s also possible to use the F23 or the F35 in combination, for even more creative freedom. -

Opus, a Free, High-Quality Speech and Audio Codec

Opus, a free, high-quality speech and audio codec. Jean-Marc Valin, Koen Vos, Timothy B. Terriberry, Gregory Maxwell. 29 January 2014. Xiph.Org & Mozilla.

What is Opus?
● New highly-flexible speech and audio codec
– Works for most audio applications
● Completely free
– Royalty-free licensing
– Open-source implementation
● IETF RFC 6716 (Sep. 2012)

Why a New Audio Codec? http://xkcd.com/927/ http://imgs.xkcd.com/comics/standards.png

Why Should You Care?
● Best-in-class performance within a wide range of bitrates and applications
● Adaptability to varying network conditions
● Will be deployed as part of WebRTC
● No licensing costs
● No incompatible flavours

History
● Jan. 2007: SILK project started at Skype
● Nov. 2007: CELT project started
● Mar. 2009: Skype asks IETF to create a WG
● Feb. 2010: WG created
● Jul. 2010: First prototype of SILK+CELT codec
● Dec. 2011: Opus surpasses Vorbis and AAC
● Sep. 2012: Opus becomes RFC 6716
● Dec. 2013: Version 1.1 of libopus released

Applications and Standards (2010), application: codec
● VoIP with PSTN: AMR-NB
● Wideband VoIP/videoconference: AMR-WB
● High-quality videoconference: G.719
● Low-bitrate music streaming: HE-AAC
● High-quality music streaming: AAC-LC
● Low-delay broadcast: AAC-ELD
● Network music performance: (no codec listed)

Applications and Standards (2013), application: codec
● VoIP with PSTN: Opus
● Wideband VoIP/videoconference: Opus
● High-quality videoconference: Opus
● Low-bitrate music streaming: Opus
● High-quality music streaming: Opus
● Low-delay -

Improved Lossy Image Compression with Priming and Spatially Adaptive Bit Rates for Recurrent Networks

Improved Lossy Image Compression with Priming and Spatially Adaptive Bit Rates for Recurrent Networks. Nick Johnston, Damien Vincent, David Minnen, Michele Covell, Saurabh Singh, Troy Chinen, Sung Jin Hwang, Joel Shor, George Toderici. {nickj, damienv, dminnen, covell, saurabhsingh, tchinen, sjhwang, joelshor, gtoderici}@google.com, Google Research.

Abstract. We propose a method for lossy image compression based on recurrent, convolutional neural networks that outperforms BPG (4:2:0), WebP, JPEG2000, and JPEG as measured by MS-SSIM. We introduce three improvements over previous research that lead to this state-of-the-art result using a single model. First, we modify the recurrent architecture to improve spatial diffusion, which allows the network to more effectively capture and propagate image information through the network’s hidden state. Second, in addition to lossless entropy coding, we use a spatially adaptive bit allocation algorithm to more efficiently use the limited number of bits to encode visually complex image regions.

[...] information from the current residual and combines it with context stored in the hidden state of the recurrent layers. By saving the bits from the quantized bottleneck after each iteration, the model generates a progressive encoding of the input image. Our method provides a significant increase in compression performance over previous models due to three improvements. First, by “priming” the network, that is, running several iterations before generating the binary codes (in the encoder) or a reconstructed image (in the decoder), we expand the spatial context, which allows the network to represent more complex representations in early iterations. Second, we add support for spatially adaptive bit rates -

Optical Network Technologies for Future Digital Cinema

Hindawi Publishing Corporation, Advances in Optical Technologies, Volume 2016, Article ID 8164308, 8 pages, http://dx.doi.org/10.1155/2016/8164308

Review Article. Optical Network Technologies for Future Digital Cinema. Sajid Nazir (1) and Mohammad Kaleem (2). (1) School of Engineering, London South Bank University, 103 Borough Road, London SE1 0AA, UK. (2) Department of Electrical Engineering, COMSATS Institute of Information Technology, Islamabad, Pakistan. Correspondence should be addressed to Sajid Nazir; [email protected]. Received 9 May 2016; Revised 30 October 2016; Accepted 15 November 2016. Academic Editor: Giancarlo C. Righini. Copyright © 2016 S. Nazir and M. Kaleem. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Digital technology has transformed the information flow and support infrastructure for numerous application domains, such as cellular communications. Cinematography, traditionally a film-based medium, has embraced digital technology, leading to innovative transformations in its work flow. Digital cinema supports transmission of high resolution content enabled by the latest advancements in optical communications and video compression. In this paper we provide a survey of the optical network technologies for supporting this bandwidth intensive traffic class. We also highlight the significance and benefits of the state of the art in optical technologies that support the digital cinema work flow.

1. Introduction. The transformation to digital cinema is taking place through

[...] its real-time nature. The data generated by a single frame in ultrahigh definition (UHD) format is enormous and cannot be supported over today’s Internet infrastructure. -

Growing Importance of Color in a World of Displays and Data Color In

Growing Importance of Color in a World of Displays and Data

Color in displays

Standards for color space and their importance

The television and monitor display industry, through numerous standards bodies, including the International Telecommunication Union (ITU) and International Commission on Illumination (CIE), collaborates to create standards for interoperability, delivery, and image quality, providing a basis for delivering value to the consumers and businesses that purchase and rely on these displays. In addition to industry-wide standards, proprietary standards developed by commercial entities, such as Adobe RGB and Dolby Vision, drive innovation and customer value. The benefits of standards for color are tangible. They provide the means for:
• Color information to be device- and application-independent
• Maintaining color fidelity through the creation, editing, mastering, and broadcasting process
• Reproducing color accurately and consistently across diverse display devices

By defining a uniform color space, encoding system, and broadcast specification relative to an ideal “reference display,” content can be created almost anywhere and transmitted to virtually any device. In order for that content to be shown correctly and in full detail, the display rendering it should be able to reproduce the same color space. While down-conversion to lesser color spaces is possible, the resulting image quality will be reduced. It benefits a consumer, whenever possible and within reasonable spending, to purchase a display with the closest conformance to standards so that they are assured of the best experience in terms of interoperability and image quality. The Color IQ optic that is referenced as Annex 3 of Exemption 39(b) allows displays to meet these standards, and does so at a lower system cost and higher energy efficiency than alternative solutions.