An Improved SPSIM Index for Image Quality Assessment

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Models

Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
  – Additive (RGB) or subtractive (CMYK)
  – Separation of luminance and chromaticity
  – Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l'Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• Irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of color representation of a display device

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is 'bloody red' in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black): CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|---|---|---|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
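The slide excerpt mentions converting between RGB and CMY but does not show the formula. A minimal sketch, assuming the commonly used complement relation on components normalized to [0, 1] (the function names are illustrative, not taken from the slides):

```python
def rgb_to_cmy(r, g, b):
    """Convert normalized RGB (each in [0, 1]) to CMY by taking complements.

    Assumption: the simple relation C = 1 - R, M = 1 - G, Y = 1 - B.
    Real printing pipelines use device profiles instead.
    """
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy under the same complement assumption."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


# Pure cyan dye absorbs red and reflects green and blue.
print(cmy_to_rgb(1.0, 0.0, 0.0))  # (0.0, 1.0, 1.0)
```

This agrees with the absorbs/reflects listing above: full cyan removes the red component and leaves green and blue untouched.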

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space

A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model.

In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept

Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Robust Pulse-Rate from Chrominance-Based Rppg Gerard De Haan and Vincent Jeanne

IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, VOL. ?, NO. ?, MONTH 2013

Robust pulse-rate from chrominance-based rPPG

Gerard de Haan and Vincent Jeanne

Abstract: Remote photoplethysmography (rPPG) enables contactless monitoring of the blood volume pulse using a regular camera. Recent research focused on improved motion robustness, but the proposed blind source separation techniques (BSS) in RGB color space show limited success. We present an analysis of the motion problem, from which far superior chrominance-based methods emerge. For a population of 117 stationary subjects, we show our methods to perform in 92% good agreement (±1.96σ) with contact PPG, with RMSE and standard deviation both a factor of two better than BSS-based methods. In a fitness setting using a simple spectral peak detector, the obtained pulse-rate for modest motion (bike) improves from 79% to 98% correct, and for vigorous motion (stepping) from less than 11% to more than 48% correct. We expect the greatly improved robustness to considerably widen the application scope of the technology.

... PPG signal into two independent signals built as a linear combination of two color channels [8]. One combination approximated the clean pulse-signal, the other the motion artifact, and the energy in the pulse-signal was minimized to optimize the combination. Poh et al. extended this work proposing a linear combination of all three color channels defining three independent signals with Independent Component Analysis (ICA) using non-Gaussianity as the criterion for independence [5]. Lewandowska et al. varied this concept defining three independent linear combinations of the color channels with Principal Component Analysis (PCA) [6]. With both Blind Source Separation (BSS) techniques, the

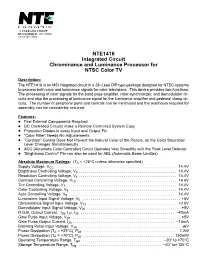

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

Description: The NTE1416 is an MSI integrated circuit in a 28-Lead DIP type package designed for NTSC systems to process both color and luminance signals for color televisions. This device provides two functions: the processing of color signals for the band pass amplifier, color synchronizer, and demodulator circuits, and the processing of the luminance signal for the luminance amplifier and pedestal clamp circuits. The number of peripheral parts and controls can be minimized and the manhours required for assembly can be considerably reduced.

Features:
• Few External Components Required
• DC Controlled Circuits make a Remote Controlled System Easy
• Protection Diodes in every Input and Output Pin
• "Color Killer" Needs No Adjustments
• "Contrast" Control Does Not Prevent the Natural Color of the Picture, as the Color Saturation Level Changes Simultaneously
• ACC (Automatic Color Controller) Circuit Operates Very Smoothly with the Peak Level Detector
• "Brightness Control" Pin can also be used for ABL (Automatic Beam Limiter)

Absolute Maximum Ratings: (TA = +25°C unless otherwise specified)

| Parameter | Rating |
|---|---|
| Supply Voltage, VCC | 14.4V |
| Brightness Controlling Voltage, V3 | 14.4V |
| Resolution Controlling Voltage, V4 | 14.4V |
| Contrast Controlling Voltage, V10 | 14.4V |
| Tint Controlling Voltage, V7 | 14.4V |
| Color Controlling Voltage, V9 | 14.4V |
| Auto Controlling Voltage, V8 | 14.4V |
| Luminance Input Signal Voltage, V5 | +5V |
| Chrominance Signal Input Voltage, V13 | +2.5V |
| Demodulator Input Signal Voltage, V25 | +5V |
| R.G.B. Output Current, I26, I27, I28 | –40mA |
| Gate Pulse Input Voltage, V20 | +5V |
| Gate Pulse Output Current, I20 | … |

Creating 4K/UHD Content Poster

Colorimetry

The television color specification is based on standards defined by the CIE (Commission Internationale de L'Éclairage) in 1931. The CIE specified an idealized set of primary XYZ tristimulus values. This set is a group of all-positive values converted from R'G'B' where Y is proportional to the luminance of the additive mix. This specification is used as the basis for color within 4K/UHDTV1 that supports both ITU-R BT.709 and BT.2020.

Figure A2. Using a 100% color bar signal to show conversion of RGB levels from 700 mv (100%) to 0mv (0%) for each color component with a color bar split field BT.2020 and BT.709 test signal. The WFM8300 was configured for BT.709 colorimetry as shown in the video session display.

Table A1: Illuminant (Ill.) Value

| Source | X / Y |
|---|---|
| Illuminant A: Tungsten Filament Lamp, 2854°K | x = 0.4476, y = 0.4075 |

Image Format / SMPTE Standards

Square Division separates the image into quad links for distribution. UHDTV 1: 3840x2160 (4x1920x1080)

Table B1: SMPTE Standards

| Standard | Title |
|---|---|
| ST 125 | SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems |
| ST 240 | Television – 1125-Line High-Definition Production Systems – Signal Parameters |
| ST 259 | Television – SDTV Digital Signal/Data – Serial Digital Interface |
| ST 272 | Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space |
| ST 274 | Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for Multiple Picture Rates |
| ST 296 | 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface |
| ST 299-0/1/2 | 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite |

Demosaicking: Color Filter Array Interpolation [Exploring the Imaging

[Bahadir K. Gunturk, John Glotzbach, Yucel Altunbasak, Ronald W. Schafer, and Russel M. Mersereau]

Demosaicking: Color Filter Array Interpolation [Exploring the imaging process and the correlations among three color planes in single-chip digital cameras]

Digital cameras have become popular, and many people are choosing to take their pictures with digital cameras instead of film cameras. When a digital image is recorded, the camera needs to perform a significant amount of processing to provide the user with a viewable image. This processing includes correction for sensor nonlinearities and nonuniformities, white balance adjustment, compression, and more. An important part of this image processing chain is color filter array (CFA) interpolation or demosaicking.

A color image requires at least three color samples at each pixel location. Computer images often use red (R), green (G), and blue (B). A camera would need three separate sensors to completely measure the image. In a three-chip color camera, the light entering the camera is split and projected onto each spectral sensor. Each sensor requires its proper driving electronics, and the sensors have to be registered precisely. These additional requirements add a large expense to the system. Thus, many cameras use a single sensor covered with a CFA. The CFA allows only one color to be measured at each pixel. This means that the camera must estimate the missing two color values at each pixel. This estimation process is known as demosaicking. Several patterns exist for the filter array.
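To make the "one color per pixel" constraint concrete, here is a minimal sketch that simulates what a single-sensor camera records. It assumes an RGGB Bayer layout, which is one widely used CFA pattern; the excerpt does not say which pattern the article uses, and the function names are illustrative.

```python
def bayer_channel(row, col):
    """Return the channel an RGGB Bayer CFA measures at (row, col).

    Assumed layout (repeating 2x2 tile):
        R G
        G B
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(image):
    """Keep only the CFA-selected sample at each pixel.

    image: list of rows, each row a list of (r, g, b) tuples.
    Returns a list of rows of single values; the other two values
    per pixel are what demosaicking has to estimate.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]


flat = [[(10, 20, 30)] * 2 for _ in range(2)]
print(mosaic(flat))  # [[10, 20], [20, 30]]
```

Two thirds of the color data is discarded at capture time, which is why the quality of the interpolation step matters so much.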

Measuring Perceived Color Difference Using YIQ Color Space

Programación Matemática y Software (2010) Vol. 2, No. 2. ISSN: 2007-3283. Received: 17 August 2010. Accepted: 25 November 2010. Published online: 30 December 2010.

Measuring perceived color difference using YIQ NTSC transmission color space in mobile applications

Yuriy Kotsarenko, Fernando Ramos. Tecnológico de Monterrey, Campus Cuernavaca.

Resumen (translated): In this work, several formulas are introduced for measuring the difference between colors in a perceptual way, using the YIQ color space. The classical formulas and their derivatives, which use the CIELAB and CIELUV spaces, require many arithmetic transformations of input values that are commonly defined by red, green, and blue components, and are therefore too heavy to implement on mobile devices. The alternative formulas proposed in this work, based on the YIQ color space, are simple and fast to compute, even in real time. The work includes a comparison between the classical and the proposed formulas using two different groups of experiments. The first group focuses on evaluating the perceived difference using different formulas, while the second group measures the performance of each formula to determine its speed when processing images. The experimental results indicate that the proposed formulas are very close in perceptual terms to those of CIELAB and CIELUV but are significantly faster, which makes them good candidates for measuring color differences on mobile devices and in real-time applications.

Abstract: Alternative color difference formulas are presented for measuring the perceived difference between two color samples defined in YIQ color space.

AN9717: YCbCr to RGB Considerations (Multimedia)

Application Note AN9717, March 1997. Author: Keith Jack

Introduction

Many video ICs now generate 4:2:2 YCbCr video data. The YCbCr color space was developed as part of ITU-R BT.601 (formerly CCIR 601) during the development of a world-wide digital component video standard.

Some of these video ICs also generate digital RGB video data, using lookup tables to assist with the YCbCr to RGB conversion. By understanding the YCbCr to RGB conversion process, the lookup tables can be eliminated, resulting in a substantial cost savings.

This application note covers some of the considerations for converting the YCbCr data to RGB data without the use of lookup tables. The process basically consists of three steps:

Converting 4:2:2 to 4:4:4 YCbCr

Prior to converting YCbCr data to R´G´B´ data, the 4:2:2 YCbCr data must be converted to 4:4:4 YCbCr data. For the YCbCr to RGB conversion process, each Y sample must have a corresponding Cb and Cr sample.

Figure 1 illustrates the positioning of YCbCr samples for the 4:4:4 format. Each sample has a Y, a Cb, and a Cr value. Each sample is typically 8 bits (consumer applications) or 10 bits (professional editing applications) per component.

Figure 2 illustrates the positioning of YCbCr samples for the 4:2:2 format. For every two horizontal Y samples, there is one Cb and Cr sample. Each sample is typically 8 bits (consumer applications) or 10 bits (professional editing applications) per component.
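The 4:2:2 layout described here (one Cb and Cr pair for every two horizontal Y samples) can be expanded to 4:4:4 in several ways. The sketch below uses plain sample replication, which is the simplest option and an assumption on my part; the excerpt does not show the method the application note recommends, and the function name is illustrative.

```python
def upsample_422_to_444(y, cb, cr):
    """Expand one scan line from 4:2:2 to 4:4:4 by repeating chroma.

    y:  list of luma samples for the line
    cb: list of Cb samples, half as long as y
    cr: list of Cr samples, half as long as y
    Returns a list of (Y, Cb, Cr) tuples, one per luma sample.

    Assumption: each chroma pair is shared by two adjacent luma
    samples and is simply copied; interpolation filters would give
    smoother results.
    """
    if len(y) != 2 * len(cb) or len(cb) != len(cr):
        raise ValueError("expected len(y) == 2 * len(cb) == 2 * len(cr)")
    return [(y[i], cb[i // 2], cr[i // 2]) for i in range(len(y))]


line = upsample_422_to_444([16, 32, 48, 64], [100, 110], [120, 130])
print(line)
# [(16, 100, 120), (32, 100, 120), (48, 110, 130), (64, 110, 130)]
```

After this step every Y sample has its own Cb and Cr value, which is the precondition the note states for the YCbCr to RGB conversion.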

Improved Television Systems: NTSC and Beyond

By William F. Schreiber

After a discussion of the limits to received image quality in NTSC and a review of various proposals for improvement, it is concluded that the current system is capable of significant increase in spatial and temporal resolution, and that most of these improvements can be made in a compatible manner. Newly designed systems, for the sake of maximum utilization of channel capacity, should use many of the techniques proposed for improving NTSC, such as high-rate cameras and displays, but should use the component, rather than composite, technique for color multiplexing. A preference is expressed for noncompatible new systems, both for increased design flexibility and on the basis of likely consumer behavior. Some sample systems are described that achieve very high quality in the present 6-MHz channels, full "HDTV" at the CCIR rate of 216 Mbits/sec, or "better-than-35mm" at about 500 Mbits/sec. Possibilities for even higher efficiency using motion compensation are described.

... excellent results. Demonstrations have been made showing good motion rendition with very few frames per second [2], elimination of interline flicker by up-conversion [3], and improved separation of luminance and chrominance by means of comb filters. No doubt the most important element in creating interest in this subject was the demonstration of the Japanese high-definition television system in 1981, a development that took more than ten years [5]. Orchestrated by NHK, with contributions from many Japanese companies, images have been produced that are comparable to 35mm theater quality.

Convolutional Color Constancy

Jonathan T. Barron

Abstract

Color constancy is the problem of inferring the color of the light that illuminated a scene, usually so that the illumination color can be removed. Because this problem is underconstrained, it is often solved by modeling the statistical regularities of the colors of natural objects and illumination. In contrast, in this paper we reformulate the problem of color constancy as a 2D spatial localization task in a log-chrominance space, thereby allowing us to apply techniques from object detection and structured prediction to the color constancy problem. By directly learning how to discriminate between correctly white-balanced images and poorly white-balanced images, our model is able to improve performance on standard benchmarks by nearly 40%.

... assumes that the illuminant color is the average color of all image pixels, thereby implicitly assuming that object reflectances are, on average, gray [12]. This simple idea can be generalized to modeling gradient information or using generalized norms instead of a simple arithmetic mean [4, 36], modeling the spatial distribution of colors with a filter bank [13], modeling the distribution of color histograms [21], or implicitly reasoning about the moments of colors using PCA [14]. Other models assume that the colors of natural objects lie with some gamut [3, 23]. Most of these models can be thought of as statistical, as they either assume some distribution of colors or they learn some distribution of colors from training data. This connection to learning and statistics is sometimes made more explicit, often in a
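The baseline described in the excerpt, where the illuminant is taken to be the average color of all pixels, can be sketched in a few lines. This is the simple gray-world idea only, not the paper's convolutional method; the function names and the choice to rescale toward the mean of the three channel averages are assumptions made for illustration.

```python
def gray_world_illuminant(pixels):
    """Estimate the illuminant as the per-channel mean of all pixels.

    pixels: non-empty list of (r, g, b) tuples with linear intensities.
    """
    n = len(pixels)
    return tuple(sum(p[k] for p in pixels) / n for k in range(3))


def white_balance(pixels):
    """Scale each channel so the image average becomes gray.

    Assumption: every channel mean is non-zero, and the target gray
    level is the mean of the three channel averages.
    """
    illum = gray_world_illuminant(pixels)
    gray = sum(illum) / 3.0
    gains = tuple(gray / channel for channel in illum)
    return [tuple(p[k] * gains[k] for k in range(3)) for p in pixels]


reddish = [(2.0, 1.0, 1.0), (4.0, 3.0, 1.0)]
print(gray_world_illuminant(reddish))  # (3.0, 2.0, 1.0)
balanced = white_balance(reddish)
```

After balancing, the three channel means are equal, which is exactly the "reflectances are, on average, gray" assumption being enforced.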

Measuring Perceived Color Difference Using YIQ NTSC Transmission Color Space in Mobile Applications

Programación Matemática y Software (2010) Vol. 2, No. 2. Rights Reservation Office (Dirección de Reservas de Derecho): 04-2009-011611475800-102

Measuring perceived color difference using YIQ NTSC transmission color space in mobile applications

Yuriy Kotsarenko, Fernando Ramos. Tecnológico de Monterrey, Campus Cuernavaca.

Resumen (translated): In this work, several formulas are introduced for measuring the difference between colors in a perceptual way, using the YIQ color space. The classical formulas and their derivatives, which use the CIELAB and CIELUV spaces, require many arithmetic transformations of input values that are commonly defined by red, green, and blue components, and are therefore too heavy to implement on mobile devices. The alternative formulas proposed in this work, based on the YIQ color space, are simple and fast to compute, even in real time. The work includes a comparison between the classical and the proposed formulas using two different groups of experiments. The first group focuses on evaluating the perceived difference using different formulas, while the second group measures the performance of each formula to determine its speed when processing images. The experimental results indicate that the proposed formulas are very close in perceptual terms to those of CIELAB and CIELUV but are significantly faster, which makes them good candidates for measuring color differences on mobile devices and in real-time applications.

Abstract. Alternative color difference formulas are presented for measuring the perceived difference between two color samples defined in YIQ color space.

Color Image Enhancement with Saturation Adjustment Method

Journal of Applied Science and Engineering, Vol. 17, No. 4, pp. 341-352 (2014). DOI: 10.6180/jase.2014.17.4.01

Color Image Enhancement with Saturation Adjustment Method

Jen-Shiun Chiang1, Chih-Hsien Hsia2*, Hao-Wei Peng1 and Chun-Hung Lien3

1 Department of Electrical Engineering, Tamkang University, Tamsui, Taiwan 251
2 Department of Electrical Engineering, Chinese Culture University, Taipei, Taiwan 111
3 Commercialization and Service Center, Industrial Technology Research Institute, Taipei, Taiwan 106

Abstract

In the traditional color adjustment approach, luminance and saturation are adjusted separately. This approach over-saturates colors very easily and makes the image look unnatural. In this study, we use the concept of exposure compensation to simulate brightness changes and to find the relationship among luminance, saturation, and hue. The simulation indicates that saturation changes with the change of luminance, and it also shows that there are certain relationships between the color variation model and the YCbCr color model. Building on these observations, we also take human vision characteristics into account to propose a new saturation method that enhances the visual effect of an image. As a result, the proposed approach makes the image more vivid and improves its contrast. Most important of all, unlike the conventional approach, our approach prevents over-saturation and makes the adjusted image look natural.

Key Words: Color Adjustment, Human Vision, Color Image Processing, YCbCr, Over-Saturation

1. Introduction

With the advanced development of technology, the

... over-saturated images. An over-saturated image looks unnatural due to the loss of color details in the high-saturation areas of the image.