Convolutional Color Constancy

Recommended publications

Color Models

Color Models. Jian Huang, CS456. Main Color Spaces: • CIE XYZ, xyY • RGB, CMYK • HSV (Munsell, HSL, IHS) • Lab, UVW, YUV, YCrCb, Luv. Differences in Color Spaces: • What is the use? For display, editing, computation, compression, …? • Several key (very often conflicting) features may be sought after: – Additive (RGB) or subtractive (CMYK) – Separation of luminance and chromaticity – Equal distances between colors are equally perceivable. CIE Standard: • CIE: International Commission on Illumination (Commission Internationale de l’Éclairage) • Human-perception-based standard (1931), established with a color matching experiment • Standard observer: a composite of a group of 15 to 20 people. CIE Experiment Result: • Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm. CIE Color Space: • 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves • Y: roughly corresponds to the luminous efficiency characteristic of the human eye. CIE xyY Space: • Irregular 3D volume shape is difficult to understand • Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point). Color Gamut: • The range of color representation of a display device. RGB (monitors): • The de facto standard. The RGB Cube: • RGB color space is perceptually non-linear • RGB space is a subset of the colors humans can perceive • Con: what is ‘bloody red’ in RGB? CMY(K): printing • Cyan, Magenta, Yellow (Black) – CMY(K) • A subtractive color model. Dye color (absorbs / reflects): cyan (red / blue and green); magenta (green / blue and red); yellow (blue / red and green); black (all / none). RGB and CMY: • Converting between RGB and CMY. HSV: • This color model is based on polar coordinates, not Cartesian coordinates. -
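The excerpt above mentions converting between RGB and CMY without giving the formulas. A minimal sketch in Python, assuming components normalized to the range 0 to 1 and the common idealized complement relation (C = 1 − R, and so on); the function names are hypothetical and not taken from the slides, and real printing pipelines use device profiles rather than this simple model:

```python
def rgb_to_cmy(r, g, b):
    """Idealized subtractive complement: each dye absorbs one additive primary."""
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy under the same idealized assumption."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


def cmy_to_cmyk(c, m, y):
    """Pull the shared gray component out into a separate black (K) channel."""
    k = min(c, m, y)
    if k == 1.0:
        # Pure black: avoid dividing by zero and use black ink only.
        return (0.0, 0.0, 0.0, 1.0)
    scale = 1.0 - k
    return ((c - k) / scale, (m - k) / scale, (y - k) / scale, k)


if __name__ == "__main__":
    # Cyan in RGB is "no red, full green and blue", so only the cyan dye is needed.
    print(rgb_to_cmy(0.0, 1.0, 1.0))
    print(cmy_to_cmyk(*rgb_to_cmy(0.0, 0.0, 0.0)))
```

This matches the absorbs/reflects table in the excerpt: cyan dye absorbs red and reflects green and blue, and black absorbs everything.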

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article. An Improved SPSIM Index for Image Quality Assessment. Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus. Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland. * Correspondence: Tel.: +48-32-2371066. Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518 Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications. Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures. 1. Introduction: Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods. -

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING Color space A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position. -

Robust Pulse-Rate from Chrominance-Based rPPG (Gerard de Haan and Vincent Jeanne)

IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, VOL. ?, NO. ?, MONTH 2013. Robust pulse-rate from chrominance-based rPPG. Gerard de Haan and Vincent Jeanne. Abstract: Remote photoplethysmography (rPPG) enables contactless monitoring of the blood volume pulse using a regular camera. Recent research focused on improved motion robustness, but the proposed blind source separation techniques (BSS) in RGB color space show limited success. We present an analysis of the motion problem, from which far superior chrominance-based methods emerge. For a population of 117 stationary subjects, we show our methods to perform in 92% good agreement (±1.96σ) with contact PPG, with RMSE and standard deviation both a factor of two better than BSS-based methods. In a fitness setting using a simple spectral peak detector, the obtained pulse-rate for modest motion (bike) improves from 79% to 98% correct, and for vigorous motion (stepping) from less than 11% to more than 48% correct. We expect the greatly improved robustness to considerably widen the application scope of the technology. [Introduction, excerpt] … PPG signal into two independent signals built as a linear combination of two color channels [8]. One combination approximated the clean pulse-signal, the other the motion artifact, and the energy in the pulse-signal was minimized to optimize the combination. Poh et al. extended this work proposing a linear combination of all three color channels defining three independent signals with Independent Component Analysis (ICA) using non-Gaussianity as the criterion for independence [5]. Lewandowska et al. varied this concept defining three independent linear combinations of the color channels with Principal Component Analysis (PCA) [6]. With both Blind Source Separation (BSS) techniques, the -

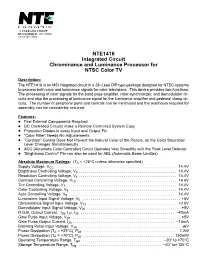

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

NTE1416 Integrated Circuit: Chrominance and Luminance Processor for NTSC Color TV. Description: The NTE1416 is an MSI integrated circuit in a 28-lead DIP type package designed for NTSC systems to process both color and luminance signals for color televisions. This device provides two functions: the processing of color signals for the band pass amplifier, color synchronizer, and demodulator circuits, and the processing of the luminance signal for the luminance amplifier and pedestal clamp circuits. The number of peripheral parts and controls can be minimized and the man-hours required for assembly can be considerably reduced. Features: • Few external components required • DC controlled circuits make a remote controlled system easy • Protection diodes in every input and output pin • “Color Killer” needs no adjustments • “Contrast” control does not prevent the natural color of the picture, as the color saturation level changes simultaneously • ACC (Automatic Color Controller) circuit operates very smoothly with the peak level detector • “Brightness Control” pin can also be used for ABL (Automatic Beam Limiter). Absolute Maximum Ratings (TA = +25°C unless otherwise specified): Supply Voltage, VCC: 14.4V; Brightness Controlling Voltage, V3: 14.4V; Resolution Controlling Voltage, V4: 14.4V; Contrast Controlling Voltage, V10: 14.4V; Tint Controlling Voltage, V7: 14.4V; Color Controlling Voltage, V9: 14.4V; Auto Controlling Voltage, V8: 14.4V; Luminance Input Signal Voltage, V5: +5V; Chrominance Signal Input Voltage, V13: +2.5V; Demodulator Input Signal Voltage, V25: +5V; R.G.B. Output Current, I26, I27, I28: –40mA; Gate Pulse Input Voltage, V20: +5V; Gate Pulse Output Current, I20 -

Demosaicking: Color Filter Array Interpolation [Exploring the Imaging

[Bahadir K. Gunturk, John Glotzbach, Yucel Altunbasak, Ronald W. Schafer, and Russel M. Mersereau] Demosaicking: Color Filter Array Interpolation [Exploring the imaging process and the correlations among three color planes in single-chip digital cameras] Digital cameras have become popular, and many people are choosing to take their pictures with digital cameras instead of film cameras. When a digital image is recorded, the camera needs to perform a significant amount of processing to provide the user with a viewable image. This processing includes correction for sensor nonlinearities and nonuniformities, white balance adjustment, compression, and more. An important part of this image processing chain is color filter array (CFA) interpolation or demosaicking. A color image requires at least three color samples at each pixel location. Computer images often use red (R), green (G), and blue (B). A camera would need three separate sensors to completely measure the image. In a three-chip color camera, the light entering the camera is split and projected onto each spectral sensor. Each sensor requires its proper driving electronics, and the sensors have to be registered precisely. These additional requirements add a large expense to the system. Thus, many cameras use a single sensor covered with a CFA. The CFA allows only one color to be measured at each pixel. This means that the camera must estimate the missing two color values at each pixel. This estimation process is known as demosaicking. Several patterns exist for the filter array. -

Improved Television Systems: NTSC and Beyond

Improved Television Systems: NTSC and Beyond. By William F. Schreiber. After a discussion of the limits to received image quality in NTSC and a review of various proposals for improvement, it is concluded that the current system is capable of significant increase in spatial and temporal resolution, and that most of these improvements can be made in a compatible manner. Newly designed systems, for the sake of maximum utilization of channel capacity, should use many of the techniques proposed for improving NTSC, such as high-rate cameras and displays, but should use the component, rather than composite, technique for color multiplexing. A preference is expressed for noncompatible new systems, both for increased design flexibility and on the basis of likely consumer behavior. Some sample systems are described that achieve very high quality in the present 6-MHz channels, full "HDTV" at the CCIR rate of 216 Mbits/sec, or "better-than-35mm" at about 500 Mbits/sec. Possibilities for even higher efficiency using motion compensation are described. [Introduction, excerpt] … excellent results. Demonstrations have been made showing good motion rendition with very few frames per second [2], elimination of interline flicker by up-conversion [3], and improved separation of luminance and chrominance by means of comb filters [4]. No doubt the most important element in creating interest in this subject was the demonstration of the Japanese high-definition television system in 1981, a development that took more than ten years [5]. Orchestrated by NHK, with contributions from many Japanese companies, images have been produced that are comparable to 35mm theater quality. -

Basics of Video

Basics of Video. Yao Wang, Polytechnic University, Brooklyn, NY 11201. Video Basics, Yao Wang, 2013. Outline: • Color perception and specification (review on your own) • Video capture and display (review on your own) • Analog raster video • Analog TV systems • Digital video. Analog Video: • Video raster • Progressive vs. interlaced raster • Analog TV systems. Raster Scan: • Real-world scene is a continuous 3-D signal (temporal, horizontal, vertical) • Analog video is stored in the raster format – Sampling in time: consecutive sets of frames (to render motion properly, >=30 frame/s is needed) – Sampling in vertical direction: a frame is represented by a set of scan lines (number of lines depends on maximum vertical frequency and viewing distance; 525 lines in the NTSC system) – Video raster = 1-D signal consisting of scan lines from successive frames. Progressive and Interlaced Scans: [Figure: progressive frame and interlaced frame (field 1, field 2), with horizontal and vertical retrace] Interlaced scan is developed to provide a trade-off between temporal and vertical resolution, for a given, fixed data rate (number of lines/sec). Waveform and Spectrum of an Interlaced Raster: [Figure: (a) waveform showing horizontal retrace, vertical retrace between fields, and blanking, black, and white levels; (b) spectrum |Ψ(f)| with frequency axis marked 0, f_l, 2f_l, 3f_l, f_max] Color -

Color Spaces Analysis for Luminance & Chrominance Signals AS NTSC

Journal of Engineering and Development, Vol. 15, No. 4, Des 2011, ISSN 1813-7822. Color Spaces Analysis for Luminance & Chrominance Signals as NTSC-TV System. Riyad Mitieb Mahmood, Assistant Lecturer, Electrical Eng. Dept. Abstract: There are many regulations related to television and video broadcast signals in various countries around the world, especially the countries developed in technology and communication systems, including the United States and Japan, both of which use the NTSC system. So this system was chosen in this research to analyze the color space, which enables us to know the nature of this system and the possibility of converting to other working systems (PAL, SECAM, ...) to ensure the exchange of information and video signals through global direct broadcasting systems and the Internet. The research has included analysis of the colors according to an international standard (International Commission on Illumination), which represents the evolution of the Maxwell triangle for the process of mixing colors, and thus determines the color space of this system for the parameters (I, Q, Y) and calculates the quantitative color values as well as the grayscale value according to mathematical equations and illustrations. After that, those results were simulated on an image selected for the test and analyzed in MATLAB, which confirmed that the analysis produces correct color outcomes that fit the selected image. Keywords: color’s system, Commission International (CIE), National Television System Committee (NTSC). Arabic title (translated): Color Space Analyses for Luminance and Chrominance Signals of the NTSC Television System. -
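The abstract above refers to computing the (Y, I, Q) parameters and a grayscale value from color images but the excerpt does not show the equations. As a rough illustration only, Python's standard `colorsys` module provides an RGB to YIQ conversion; its coefficients are an assumption here and may differ slightly from those used in the paper. The helper name `luma_and_chroma` is hypothetical:

```python
import colorsys


def luma_and_chroma(r, g, b):
    """Split a normalized RGB color (0..1) into NTSC-style luminance and chrominance.

    Uses colorsys.rgb_to_yiq from the standard library; Y is the grayscale
    (luminance) value, I and Q carry the chrominance.
    """
    y, i, q = colorsys.rgb_to_yiq(r, g, b)
    return {"Y": y, "I": i, "Q": q}


if __name__ == "__main__":
    # A neutral gray has (near) zero chrominance: only Y is non-zero.
    print(luma_and_chroma(0.5, 0.5, 0.5))
    # A saturated red has moderate luminance and non-zero chrominance.
    print(luma_and_chroma(1.0, 0.0, 0.0))
```

Keeping only the Y component gives a grayscale version of the image, which is what a monochrome NTSC receiver would display.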

Chrominance Edge Preserving Grayscale Transformation with Approximate First Principal Component for Color Edge Detection

CHROMINANCE EDGE PRESERVING GRAYSCALE TRANSFORMATION WITH APPROXIMATE FIRST PRINCIPAL COMPONENT FOR COLOR EDGE DETECTION. Salih Dikbas, Tarik Arici, and Yucel Altunbasak. School of Electrical and Computer Engineering, Georgia Institute of Technology, Atlanta, Georgia 30332–0250. Email: {salih, tariq, yucel}@ece.gatech.edu. ABSTRACT: Edges that are visible in color images may not be detected in the corresponding grayscale image. This is due to the neighboring objects having different hues but the same intensities. Hence, a color edge preserving grayscale conversion algorithm is proposed that helps detect color edges using only the luminance component. The algorithm calculates an approximation to the first principal component to form a new set of luminance coefficients instead of using the conventional luminance coefficients. This method can be directly applied to all existing grayscale edge detectors for color edge detection. Processing only one channel instead of three channels results in lower computational complexity compared to other color edge detectors. [Introduction, excerpt] … be critical for subsequent techniques that rely on edges in an image; in some cases the additional information provided by color is of utmost importance. Multi-dimensional nature of color makes it more challenging to detect edges in color images, and often increases the computational complexity threefold compared to grayscale edge detection; hence, color edge detection algorithms accept from the beginning that all of the efforts are to find the remaining 10% of the edges. Importance of color edge detection also becomes more apparent in low contrast images [5]. Color edge detection techniques fall into two main categories. Techniques in the first group [6–10] calculate gradients in each color component separately, then either fuses -

Gain Correction Apparatus for Color Picture Information Signals Reproduced from a Record Medium (United States Patent 3,585,280)

United States Patent 3,585,280. [72] Inventor: Renville H. McMann, Jr., New Canaan, Conn. [21] Appl. No.: 832,404. [22] Filed: June 11, 1969. [45] Patented: June 15, 1971. [73] Assignee: Columbia Broadcasting System, Inc., New York, N.Y. [54] GAIN CORRECTION APPARATUS FOR COLOR PICTURE INFORMATION SIGNALS REPRODUCED FROM A RECORD MEDIUM. 5 Claims, 1 Drawing Fig. [52] U.S. Cl.: 178/5.2, 178/5.4. [51] Int. Cl.: H04n 9/48. [50] Field of Search: 178/5.2, 5.4, 5.2 A, 5.2 D, 7.2, 6.7, 6.7 A, … [56] References Cited, UNITED STATES PATENTS: 3,479,447, 11/1969, Goldmark et al., 178/5.2. Primary Examiner: Robert L. Richardson. Attorney: Brumbaugh, Graves, Donohue & Raymond. ABSTRACT: In the illustrative embodiment of the invention described herein, the superimposed pilot signal of a color information signal is supplied to a detector which produces a pulse each time the pilot signal crosses its zero axis. The pulses are delayed in time to align the pulses with the pilot signal at 90° from the axis crossing points and the delayed pulses are thereafter utilized to enable a sampling gate to which the pilot signal is also supplied. The output signal from the gate is smoothed and then supplied across a potentiometer to a variable gain amplifier to which the color carrier signal components of the color information signal are supplied to eliminate amplitude fluctuations in the color carrier signal -

Appendix G: the Pantone “Our Color Wheel” Compared to the Chromaticity Diagram (2016) 1

Appendix G: The Pantone “Our color Wheel” compared to the Chromaticity Diagram (2016). There is considerable interest in the conversion of Pantone identified color numbers to other numbers within the CIE and ISO Standards. Unfortunately, most of these Standards are not based on any theoretical foundation and have evolved since the late 1920s based on empirical relationships agreed to by committees. As a general rule, these Standards have all assumed Grassmann’s Law of linearity in the visual realm. Unfortunately, this fundamental assumption is not appropriate and has never been confirmed. The visual system of all biological neural systems relies upon logarithmic summing and differencing. A particular goal has been to define precisely the border between colors occurring in the local language and vernacular. An example is the border between yellow and orange. Because of the logarithmic summations used in the neural circuits of the eye and the positions of perceived yellow and orange relative to the photoreceptors of the eye, the problem of defining the transition wavelength between these two colors is particularly acute. The perceived response is particularly sensitive to stimulus intensity in the spectral region from 560 to about 580 nanometers. This Appendix relies upon the Chromaticity Diagram (2016) developed within this work. It has previously been described as The New Chromaticity Diagram, or the New Chromaticity Diagram of Research. It is in fact a foundation document that is theoretically supportable and in turn supports a wide variety of less well founded Hering, Munsell, and various RGB and CMYK representations of the human visual spectrum.