Computing Chromatic Adaptation

Recommended publications

Understanding Color and Gamut Poster

Understanding Colors and Gamut (www.tektronix.com/video). Understanding High Definition Video Poster: This poster provides graphical reference to understanding high definition video. To order your free copy of this poster, please visit: www.tek.com/poster/understanding-hd-and-3g-sdi-video-poster. MPEG Poster: MPEG-2 Transport Stream (ISO/IEC 13818-1 International Standard); Advanced Television Systems Committee (ATSC) Program and System Information Protocol (PSIP) for Terrestrial Broadcast and Cable (Doc. A/65B and A/69), covering the System Time Table (STT), Rating Region Table (RRT) and Directed Channel Change Table (DCCT); ISO/IEC 13818-2 Video Levels and Profiles (4:2:0 and 4:2:2). [Diagram residue: ISO/IEC 13818-1 transport packet and PES packet syntax diagram; syntax tables for system_time_table_section(), rating_region_table_section() and directed_channel_change_table_section().]

Color Models

Color Models. Jian Huang, CS456. Main Color Spaces • CIE XYZ, xyY • RGB, CMYK • HSV (Munsell, HSL, IHS) • Lab, UVW, YUV, YCrCb, Luv. Differences in Color Spaces • What is the use? For display, editing, computation, compression, …? • Several key (very often conflicting) features may be sought after: – Additive (RGB) or subtractive (CMYK) – Separation of luminance and chromaticity – Equal distances between colors are equally perceivable. CIE Standard • CIE: International Commission on Illumination (Commission Internationale de l’Éclairage). • Human-perception-based standard (1931), established with a color matching experiment • Standard observer: a composite of a group of 15 to 20 people. CIE Experiment Result • Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm. CIE Color Space • 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves • Y: roughly corresponds to the luminous efficiency characteristic of the human eye. CIE xyY Space • The irregular 3D volume shape is difficult to understand • Chromaticity diagram (the same color at varying intensity, Y, should always end up at the same point). Color Gamut • The range of color representation of a display device. RGB (monitors) • The de facto standard. The RGB Cube • RGB color space is perceptually non-linear • RGB space is a subset of the colors humans can perceive • Con: what is ‘bloody red’ in RGB? CMY(K): printing • Cyan, Magenta, Yellow (Black) – CMY(K) • A subtractive color model (dye color: absorbs / reflects): cyan: red / blue and green; magenta: green / blue and red; yellow: blue / red and green; black: all / none. RGB and CMY • Converting between RGB and CMY. HSV • This color model is based on polar coordinates, not Cartesian coordinates.
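
The slides name two conversions without showing them: RGB to CMY, and the projection of XYZ onto the chromaticity diagram. The following is a minimal sketch, assuming components normalized to [0, 1] and the idealized complement relation between additive and subtractive primaries (real inks deviate from it). The function names are hypothetical and do not come from the slides.

```python
def rgb_to_cmy(rgb):
    """Idealized subtractive complement: each dye absorbs one additive primary."""
    r, g, b = rgb
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(cmy):
    """Inverse of rgb_to_cmy under the same idealized assumption."""
    c, m, y = cmy
    return (1.0 - c, 1.0 - m, 1.0 - y)


def xyz_to_xyY(xyz):
    """Project CIE XYZ onto chromaticity (x, y) while keeping luminance Y."""
    X, Y, Z = xyz
    total = X + Y + Z
    if total == 0.0:
        raise ValueError("chromaticity is undefined when X + Y + Z = 0")
    return (X / total, Y / total, Y)


if __name__ == "__main__":
    # Pure red absorbs nothing in cyan terms and needs full magenta and yellow.
    print(rgb_to_cmy((1.0, 0.0, 0.0)))
    # The same color at two intensities lands on the same (x, y) point.
    print(xyz_to_xyY((0.2, 0.4, 0.4)))
    print(xyz_to_xyY((0.4, 0.8, 0.8)))
```

Scaling X, Y and Z by a common factor leaves x and y unchanged, which is why the chromaticity diagram can ignore intensity.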

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article. An Improved SPSIM Index for Image Quality Assessment. Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus. Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland. * Correspondence: Tel.: +48-32-2371066. Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications. Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures. Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518. 1. Introduction: Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING. Color space: A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept: Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Robust Pulse-Rate from Chrominance-Based rPPG

IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, VOL. ?, NO. ?, MONTH 2013. Robust pulse-rate from chrominance-based rPPG. Gerard de Haan and Vincent Jeanne. Abstract: Remote photoplethysmography (rPPG) enables contactless monitoring of the blood volume pulse using a regular camera. Recent research focused on improved motion robustness, but the proposed blind source separation techniques (BSS) in RGB color space show limited success. We present an analysis of the motion problem, from which far superior chrominance-based methods emerge. For a population of 117 stationary subjects, we show our methods to perform in 92% good agreement (±1.96σ) with contact PPG, with RMSE and standard deviation both a factor of two better than BSS-based methods. In a fitness setting using a simple spectral peak detector, the obtained pulse-rate for modest motion (bike) improves from 79% to 98% correct, and for vigorous motion (stepping) from less than 11% to more than 48% correct. We expect the greatly improved robustness to considerably widen the application scope of the technology. [Excerpt from the introduction:] PPG signal into two independent signals built as a linear combination of two color channels [8]. One combination approximated the clean pulse-signal, the other the motion artifact, and the energy in the pulse-signal was minimized to optimize the combination. Poh et al. extended this work proposing a linear combination of all three color channels defining three independent signals with Independent Component Analysis (ICA) using non-Gaussianity as the criterion for independence [5]. Lewandowska et al. varied this concept defining three independent linear combinations of the color channels with Principal Component Analysis (PCA) [6]. With both Blind Source Separation (BSS) techniques, the

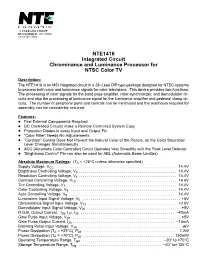

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV. Description: The NTE1416 is an MSI integrated circuit in a 28–Lead DIP type package designed for NTSC systems to process both color and luminance signals for color televisions. This device provides two functions: the processing of color signals for the band pass amplifier, color synchronizer, and demodulator circuits, and the processing of the luminance signal for the luminance amplifier and pedestal clamp circuits. The number of peripheral parts and controls can be minimized and the man-hours required for assembly can be considerably reduced. Features: • Few External Components Required • DC Controlled Circuits make a Remote Controlled System Easy • Protection Diodes in every Input and Output Pin • “Color Killer” Needs No Adjustments • “Contrast” Control Does Not Prevent the Natural Color of the Picture, as the Color Saturation Level Changes Simultaneously • ACC (Automatic Color Controller) Circuit Operates Very Smoothly with the Peak Level Detector • “Brightness Control” Pin can also be used for ABL (Automatic Beam Limiter). Absolute Maximum Ratings (TA = +25°C unless otherwise specified): Supply Voltage, VCC: 14.4V; Brightness Controlling Voltage, V3: 14.4V; Resolution Controlling Voltage, V4: 14.4V; Contrast Controlling Voltage, V10: 14.4V; Tint Controlling Voltage, V7: 14.4V; Color Controlling Voltage, V9: 14.4V; Auto Controlling Voltage, V8: 14.4V; Luminance Input Signal Voltage, V5: +5V; Chrominance Signal Input Voltage, V13: +2.5V; Demodulator Input Signal Voltage, V25: +5V; R.G.B. Output Current, I26, I27, I28: –40mA; Gate Pulse Input Voltage, V20: +5V; Gate Pulse Output Current, I20 .

Creating 4K/UHD Content Poster

Creating 4K/UHD Content. Colorimetry: The television color specification is based on standards defined by the CIE (Commission Internationale de L’Éclairage) in 1931. The CIE specified an idealized set of primary XYZ tristimulus values. This set is a group of all-positive values converted from R’G’B’ where Y is proportional to the luminance of the additive mix. This specification is used as the basis for color within 4K/UHDTV1 that supports both ITU-R BT.709 and BT.2020. Figure A2: Using a 100% color bar signal to show conversion of RGB levels from 700 mV (100%) to 0 mV (0%) for each color component with a color bar split field BT.2020 and BT.709 test signal. The WFM8300 was configured for BT.709 colorimetry as shown in the video session display. Table A1, Illuminant (Ill.) Value (Source: x / y): Illuminant A: Tungsten Filament Lamp, 2854°K: x = 0.4476, y = 0.4075. Image Format / SMPTE Standards: Square Division separates the image into quad links for distribution. UHDTV 1: 3840x2160 (4x1920x1080). Table B1, SMPTE Standards: ST 125: SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems; ST 240: Television – 1125-Line High-Definition Production Systems – Signal Parameters; ST 259: Television – SDTV Digital Signal/Data – Serial Digital Interface; ST 272: Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space; ST 274: Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for Multiple Picture Rates; ST 296: 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface; ST 299-0/1/2: 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite.

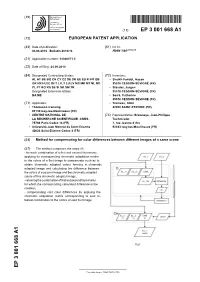

Method for Compensating for Color Differences Between Different Images of a Same Scene

(11) EP 3 001 668 A1 (12) EUROPEAN PATENT APPLICATION (43) Date of publication: 30.03.2016 Bulletin 2016/13 (51) Int Cl.: H04N 1/60 (2006.01) (21) Application number: 14306471.5 (22) Date of filing: 24.09.2014 (84) Designated Contracting States: AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR. Designated Extension States: BA ME (71) Applicants: • Thomson Licensing, 92130 Issy-les-Moulineaux (FR) • CENTRE NATIONAL DE LA RECHERCHE SCIENTIFIQUE -CNRS-, 75794 Paris Cedex 16 (FR) • Université Jean Monnet de Saint-Etienne, 42023 Saint-Etienne Cedex 2 (FR) (72) Inventors: • Sheikh Faridul, Hasan, 35576 CESSON-SÉVIGNÉ (FR) • Stauder, Jurgen, 35576 CESSON-SÉVIGNÉ (FR) • Serré, Catherine, 35576 CESSON-SÉVIGNÉ (FR) • Tremeau, Alain, 42000 SAINT-ETIENNE (FR) (74) Representative: Browaeys, Jean-Philippe, Technicolor, 1, rue Jeanne d’Arc, 92443 Issy-les-Moulineaux (FR) (54) Method for compensating for color differences between different images of a same scene (57) The method comprises the steps of: - for each combination of a first and second illuminants, applying its corresponding chromatic adaptation matrix to the colors of a first image to compensate such as to obtain chromatic adapted colors forming a chromatic adapted image and calculating the difference between the colors of a second image and the chromatic adapted colors of this chromatic adapted image, - retaining the combination of first and second illuminants for which the corresponding calculated difference is the smallest, - compensating said color differences by applying the chromatic adaptation matrix corresponding to said retained combination to the colors of said first image.
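
The three steps in the patent abstract amount to an exhaustive search over illuminant pairs. The sketch below illustrates that search under several assumptions that are not taken from the patent: colors are XYZ triples already in pixel-to-pixel correspondence, the chromatic adaptation matrix is a plain diagonal XYZ scaling (a deliberate simplification), the candidate set holds three nominal white points, and the difference is a sum of squared XYZ differences. All names are hypothetical.

```python
from itertools import permutations

# Nominal XYZ white points with Y = 1 (commonly quoted 2-degree observer values).
WHITE_POINTS = {
    "A": (1.09850, 1.0, 0.35585),
    "D50": (0.96422, 1.0, 0.82521),
    "D65": (0.95047, 1.0, 1.08883),
}


def adaptation_matrix(src_white, dst_white):
    """Diagonal XYZ-scaling matrix; an assumed stand-in for the patent's CAT."""
    return [
        [dst_white[i] / src_white[i] if i == j else 0.0 for j in range(3)]
        for i in range(3)
    ]


def adapt_image(matrix, colors):
    """Apply a 3x3 matrix to every XYZ color in a list."""
    return [
        tuple(sum(matrix[i][j] * c[j] for j in range(3)) for i in range(3))
        for c in colors
    ]


def color_difference(colors_a, colors_b):
    """Sum of squared component differences over corresponding colors."""
    return sum(
        (a - b) ** 2
        for ca, cb in zip(colors_a, colors_b)
        for a, b in zip(ca, cb)
    )


def best_illuminant_pair(first_image, second_image):
    """Try every ordered pair of distinct illuminants; keep the smallest difference."""
    best = None
    for src, dst in permutations(WHITE_POINTS, 2):
        matrix = adaptation_matrix(WHITE_POINTS[src], WHITE_POINTS[dst])
        diff = color_difference(adapt_image(matrix, first_image), second_image)
        if best is None or diff < best[0]:
            best = (diff, src, dst, matrix)
    return best


if __name__ == "__main__":
    first = [(0.2, 0.3, 0.4), (0.5, 0.4, 0.1), (0.9, 1.0, 1.0)]
    # Synthesize a second image by adapting the first from D65 to A.
    second = adapt_image(adaptation_matrix(WHITE_POINTS["D65"], WHITE_POINTS["A"]), first)
    diff, src, dst, matrix = best_illuminant_pair(first, second)
    print(src, dst, diff)
    compensated = adapt_image(matrix, first)  # final compensation step
```

The cost grows with the square of the number of candidate illuminants, so a real implementation would likely keep the candidate set small.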

Khronos Data Format Specification

Khronos Data Format Specification. Andrew Garrard. Version 1.2, Revision 1, 2019-03-31. License Information: Copyright (C) 2014-2019 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. This version of the Data Format Specification is published and copyrighted by Khronos, but is not a Khronos ratified specification. Accordingly, it does not fall within the scope of the Khronos IP policy, except to the extent that sections of it are normatively referenced in ratified Khronos specifications. Such references incorporate the referenced sections into the ratified specifications, and bring those sections into the scope of the policy for those specifications. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible.

Spectral Primary Decomposition for Rendering with RGB Reflectance

Eurographics Symposium on Rendering (DL-only Track) (2019), T. Boubekeur and P. Sen (Editors). Spectral Primary Decomposition for Rendering with sRGB Reflectance. Ian Mallett and Cem Yuksel, University of Utah. [Figure 1 panels: Ground Truth; Our Method; Meng et al. 2015; D65 Environment; Lambertian Plane; error maps labeled Error (Noise & Imprecision) and Error (Color Distortion); ΔE CIE76 color bar with tick labels 0.0 and 35.] Figure 1: Spectral rendering of a texture containing the entire sRGB gamut as the Lambertian albedo for a plane under a D65 environment. In this configuration, ideally, rendered sRGB pixels should match the texture’s values. Prior work by Meng et al. [MSHD15] produces noticeable color distortion, whereas our method produces no error beyond numerical precision and Monte Carlo sampling noise (the magnitude of the ΔE induced by this noise varies with the image because sRGB is perceptually nonlinear). Contemporary work [JH19] is also nearly able to achieve this, but at a significant implementation and memory cost. Abstract: Spectral renderers, as compared to RGB renderers, are able to simulate light transport that is closer to reality, capturing light behavior that is impossible to simulate with any three-primary decomposition. However, spectral rendering requires spectral scene data (e.g. textures and material properties), which is not widely available, severely limiting the practicality of spectral rendering. Unfortunately, producing a physically valid reflectance spectrum from a given sRGB triple has been a challenging problem, and indeed until very recently constructing a spectrum without colorimetric round-trip error was thought to be impossible. In this paper, we introduce a new procedure for efficiently generating a reflectance spectrum from any given sRGB input data.

Painting with Light: a Technical Overview of Implementing HDR Support in Krita

Painting with Light: A Technical Overview of Implementing HDR Support in Krita. Executive Summary: Krita, a leading open source application for artists, has become one of the first digital painting programs to achieve true high dynamic range (HDR) capability. With more than two million downloads per year, the program enables users to create and manipulate illustrations, concept art, comics, matte paintings, game art, and more. With HDR, Krita now opens the door for professionals and amateurs to a vastly extended range of colors and luminance. The pioneering approach of the Krita team can be leveraged by developers to add HDR to other applications. Krita and Intel engineers have also worked together to enhance Krita performance through Intel® multithreading technology, while Intel’s built-in support of HDR provides further acceleration. This white paper gives a high-level description of the development process to equip Krita with HDR. A Revolution in the Display of Color and Brightness: Before the arrival of HDR, displays were calibrated to comply with the D65 standard of whiteness, corresponding to average midday light in Western and Northern Europe. Possibilities of storing color, contrast, or other information about each pixel were limited. Encoding was limited to a depth of 8 bits; some laptop screens only handled 6 bits per pixel. By comparison, HDR standards typically define 10 bits per pixel, with some rising to 16. This extra capacity for storing information allows HDR displays to go beyond the basic sRGB color space used previously and display the colors of Recommendation ITU-R BT.2020 (Rec. 2020, also known as BT.2020).

Chromatic Adaptation Transform by Spectral Reconstruction

Chromatic Adaptation Transform by Spectral Reconstruction. Scott A. Burns, University of Illinois at Urbana-Champaign. February 28, 2019. Note to readers: This version of the paper is a preprint of a paper to appear in Color Research and Application in October 2019 (Citation: Burns SA. Chromatic adaptation transform by spectral reconstruction. Color Res Appl. 2019;44(5):682-693). The full text of the final version is available courtesy of Wiley Content Sharing initiative at: https://rdcu.be/bEZbD. The final published version differs substantially from the preprint shown here, as follows. The claims of negative tristimulus values being “failures” of a CAT are removed, since in some circumstances such as with “supersaturated” colors, it may be reasonable for a CAT to produce such results. The revised version simply states that in certain applications, tristimulus values outside the spectral locus or having negative values are undesirable. In these cases, the proposed method will guarantee that the destination colors will always be within the spectral locus. Abstract: A color appearance model (CAM) is an advanced colorimetric tool used to predict color appearance under a wide variety of viewing conditions. A chromatic adaptation transform (CAT) is an integral part of a CAM. Its role is to predict “corresponding colors,” that is, a pair of colors that have the same color appearance when viewed under different illuminants, after partial or full adaptation to each illuminant. Modern CATs perform well when applied to a limited range of illuminant pairs and a limited range of source (test) colors. However, they can fail if operated outside these ranges.
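
For orientation, a conventional matrix-based CAT of the kind the abstract contrasts itself with can be sketched as follows. This is not the spectral-reconstruction method of the paper; it is a von Kries-type transform using the widely published Bradford cone-response matrix, and it assumes full adaptation and XYZ white points normalized to Y = 1. The function names are hypothetical, and `has_negative_component` merely flags the kind of out-of-range result the note to readers discusses.

```python
# Bradford cone-response matrix (widely published values).
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a, b):
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def inverse_3x3(m):
    """Invert a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0.0:
        raise ValueError("matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def cat_matrix(src_white, dst_white, cone=BRADFORD):
    """Von Kries-type CAT: inverse(cone) * diag(dst_cone / src_cone) * cone."""
    src_cone = mat_vec(cone, src_white)
    dst_cone = mat_vec(cone, dst_white)
    gains = [
        [dst_cone[i] / src_cone[i] if i == j else 0.0 for j in range(3)]
        for i in range(3)
    ]
    return mat_mul(inverse_3x3(cone), mat_mul(gains, cone))


def has_negative_component(xyz):
    """Flag adapted tristimulus values with any negative component."""
    return any(v < 0.0 for v in xyz)


if __name__ == "__main__":
    d65 = (0.95047, 1.0, 1.08883)  # nominal white points, 2-degree observer
    d50 = (0.96422, 1.0, 0.82521)
    m = cat_matrix(d65, d50)
    adapted = mat_vec(m, (0.3, 0.4, 0.2))
    print(adapted, has_negative_component(adapted))
```

By construction the source white maps onto the destination white, which gives a quick sanity check for any implementation of this form.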