A Short Guide to Choosing a Digital Format for Video Archiving Masters

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Data Compression: Dictionary-Based Coding

Heiko Schwarz (Freie Universität Berlin), Data Compression: Dictionary-Based Coding (lecture slides). [Figure: a sliding window around the cursor, with a search buffer of N already-coded symbols and a look-ahead buffer of L not-yet-coded symbols.] "We know the past but cannot control it. We control the future but..."

Last lecture: predictive lossless coding. Prediction is a simple and effective way to exploit dependencies between neighboring symbols or samples. The optimal predictor is the conditional mean, which requires storing large tables. Affine and linear prediction have a simple structure and allow low-complexity implementations; the optimal prediction parameters are given by the solution of the Yule-Walker equations, and the approach works very well for real signals (e.g., audio, images). Efficient lossless coding for real-world signals combines affine/linear prediction (often with a block-adaptive choice of prediction parameters) with entropy coding of the prediction errors (e.g., arithmetic coding). Using the marginal pmf often already yields good results, which can be improved by using conditional pmfs with simple conditions.

Dictionary-based coding: coding of text files. Text files contain a very high amount of dependencies. Affine prediction does not work because it requires linear dependencies. Higher-order conditional coding should work well but is far too complex in terms of memory. Alternative: do not code single characters, but words or phrases. Example, English texts: the Oxford English Dictionary lists fewer than 230 000 words (including obsolete words); on average, a word contains about 6 characters; the average codeword length per character would be limited by 1
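The search buffer and look-ahead buffer mentioned in the excerpt can be illustrated with a small sliding-window coder. The sketch below is an assumption-laden illustration, not the lecture's own algorithm: it assumes an LZ77-style output of (offset, length, next symbol) triples, and the function names and default buffer sizes are hypothetical.

```python
def lz77_encode(data: str, search_size: int = 16, lookahead_size: int = 8):
    """Encode text as (offset, length, next_symbol) triples (LZ77-style sketch)."""
    triples = []
    cursor = 0
    while cursor < len(data):
        best_offset, best_length = 0, 0
        # The search buffer holds at most `search_size` already-coded symbols.
        window_start = max(0, cursor - search_size)
        # Always leave one symbol to transmit explicitly as next_symbol.
        max_length = min(lookahead_size, len(data) - cursor - 1)
        for start in range(window_start, cursor):
            length = 0
            # A match may run past the cursor into the look-ahead buffer.
            while (length < max_length
                   and data[start + length] == data[cursor + length]):
                length += 1
            if length > best_length:
                best_offset, best_length = cursor - start, length
        triples.append((best_offset, best_length, data[cursor + best_length]))
        cursor += best_length + 1
    return triples


def lz77_decode(triples):
    """Rebuild the text from (offset, length, next_symbol) triples."""
    out = []
    for offset, length, next_symbol in triples:
        start = len(out) - offset
        for i in range(length):
            # Copying symbol by symbol lets a match overlap its own output.
            out.append(out[start + i])
        out.append(next_symbol)
    return "".join(out)


print(lz77_encode("aaaa"))  # [(0, 0, 'a'), (1, 2, 'a')]
```

The exhaustive search over the window keeps the sketch short; practical coders typically use hash chains or similar structures to find matches faster.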

A Survey Paper on Different Speech Compression Techniques

Vol-2 Issue-5 2016, IJARIIE, ISSN (O) 2395-4396. Kanawade Pramila R. (1), Prof. Gundal Shital S. (2). (1) M.E. Electronics, Department of Electronics Engineering, Amrutvahini College of Engineering, Sangamner, Maharashtra, India. (2) HOD, Department of Electronics Engineering, Amrutvahini College of Engineering, Sangamner, Maharashtra, India. ABSTRACT: This paper describes the different types of speech compression techniques. Speech compression can be divided into two main types: lossless and lossy. The survey covers waveform-based, parametric-based, and hybrid speech compression. Compression means reducing the size of data with memory size in mind. Speech compression means compressing voice signals for applications such as high-quality speech databases, multimedia applications, music databases, and internet applications. Speech compression is very useful today. Its main aim is to compress audio that is transferred over a communication channel, because channel bandwidth and data storage capacity are limited and low bit rates are required. Both lossless and lossy techniques reduce the number of bits in the original information. With lossless compression none of the original information is lost, whereas with lossy compression some bits are lost. Keywords: bit rate, compression, waveform-based speech compression, parametric-based speech compression, hybrid speech compression. 1. INTRODUCTION: Speech compression is used in the encoding system.

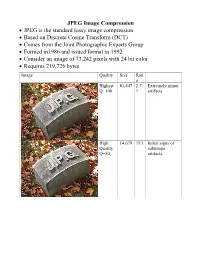

JPEG Image Compression

JPEG is the standard for lossy image compression. It is based on the Discrete Cosine Transform (DCT) and comes from the Joint Photographic Experts Group, which was formed in 1986 and issued the format in 1992. Consider an image of 73,242 pixels with 24-bit color, which requires 219,726 bytes uncompressed:

| Quality | Size (bytes) | Ratio | Comments |
|---|---|---|---|
| Highest (Q=100) | 81,447 | 2.7:1 | Extremely minor artifacts |
| High (Q=50) | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

How JPEG works. Begin with a color translation: RGB goes to Y′CBCR, one luma and two chroma components, where Y is brightness, CB is B-Y, and CR is R-Y. Downsampling (chroma subsampling): the chroma data resolution is reduced by a factor of 2 or 3, because the eye is less sensitive to fine color details than to brightness. Block splitting: each channel is broken into 8x8 blocks (no subsampling), 16x8 blocks (most common at medium compression), or 16x16 blocks; the remaining areas of incomplete blocks must be filled in. This gives the values for each block. DCT centering: center the data about 0, so the range is now -128 to 127 and the middle is zero. Discrete cosine transform: apply the 2D DCT formula to create a new matrix. The top-left (largest) entry is the DC coefficient, the constant component, which gives the basic hue for the block; the remaining 63 entries are AC coefficients. The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns.
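The centering and 2D DCT steps described in the excerpt can be sketched in a few lines. This is a minimal illustration that assumes the standard JPEG normalization of the type-II DCT (a factor of 1/4 and 1/√2 scaling on the zero-frequency terms); the function names are hypothetical, and the direct quadruple loop is chosen for readability rather than speed.

```python
import math


def level_shift(block):
    """Center 8-bit samples about zero: 0..255 becomes -128..127."""
    return [[sample - 128 for sample in row] for row in block]


def dct_2d_8x8(block):
    """Direct 2D type-II DCT of an 8x8 block (JPEG normalization assumed)."""
    n = 8

    def alpha(k):
        # The zero-frequency basis function gets the extra 1/sqrt(2) factor.
        return 1.0 / math.sqrt(2.0) if k == 0 else 1.0

    coeffs = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            coeffs[u][v] = 0.25 * alpha(u) * alpha(v) * total
    return coeffs


# A flat block has only a constant component, so all 63 AC terms vanish.
flat = [[130] * 8 for _ in range(8)]
coeffs = dct_2d_8x8(level_shift(flat))
print(round(coeffs[0][0], 6))  # 16.0
```

For the flat block, every shifted sample equals 2, so the DC coefficient is 0.25 × 0.5 × 64 × 2 = 16 and the block is described by the top-left entry alone.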

Download Media Player Codec Pack Version 4.1 Media Player Codec Pack

Media Player Codec Pack. Description: In Microsoft Windows 10 it is not possible to set all file associations using an installer. Microsoft chose to block changes of file associations with the introduction of their Zune players. Third-party codecs are also blocked in some instances, preventing some files from playing in the Zune players. A simple workaround for this problem is to switch playback of video and music files to Windows Media Player manually:

1. In the Start menu, click "Settings".
2. In the "Windows Settings" window, click "System".
3. On the "System" pane, click "Default apps".
4. On the "Choose default applications" pane, click "Films & TV" under "Video Player".
5. On the "Choose an application" pop-up menu, click "Windows Media Player" to set Windows Media Player as the default player for video files.

Footnote: The same method can be used to apply file associations for music, by clicking "Groove Music" under "Media Player" instead of changing Video Player in step 4.

Media Player Codec Pack Plus. Codecs explained: A codec is a piece of software on either a device or computer capable of encoding and/or decoding video and/or audio data from files, streams and broadcasts. The word codec is a portmanteau of "compressor-decompressor". Compression types that you will be able to play include: x264 | x265 | h.265 | HEVC | 10bit x265 | 10bit x264 | AVCHD | AVC | DivX | XviD | MP4 | MPEG4 | MPEG2 and many more. File types you will be able to play include: .bdmv | .evo | .hevc | .mkv | .avi | .flv | .webm | .mp4 | .m4v | .m4a | .ts | .ogm | .ac3 | .dts | .alac | .flac | .ape | .aac | .ogg | .ofr | .mpc | .3gp and many more.

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space. A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept. Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Video Codec Requirements and Evaluation Methodology

Video Codec Requirements and Evaluation Methodology. draft-filippov-netvc-requirements-01. Alexey Filippov, Huawei Technologies. www.huawei.com

Contents:
- An overview of applications
- Requirements
- Evaluation methodology
- Conclusions

Applications:
- Internet Protocol Television (IPTV)
- Video conferencing
- Video sharing
- Screencasting
- Game streaming
- Video monitoring / surveillance

Internet Protocol Television (IPTV). Basic requirements: random access to pictures (the Random Access Period (RAP) should be kept small enough, approximately 1-15 seconds); temporal (frame-rate) scalability; error robustness. Optional requirements: resolution and quality (SNR) scalability.

| Resolution | Frame rate, fps | Picture access mode |
|---|---|---|
| 2160p (4K), 3840x2160 | 60 | RA |
| 1080p, 1920x1080 | 24, 50, 60 | RA |
| 1080i, 1920x1080 | 30 (60 fields per second) | RA |
| 720p, 1280x720 | 50, 60 | RA |
| 576p (EDTV), 720x576 | 25, 50 | RA |
| 576i (SDTV), 720x576 | 25, 30 | RA |
| 480p (EDTV), 720x480 | 50, 60 | RA |
| 480i (SDTV), 720x480 | 25, 30 | RA |

Video conferencing. Basic requirements: delay should be kept as low as possible (the preferable and maximum delay values should be less than 100 ms and 350 ms, respectively).


arXiv:2004.10531v1 [cs.OH] 8 Apr 2020

ROOT I/O compression improvements for HEP analysis. Oksana Shadura (1), Brian Paul Bockelman (2), Philippe Canal (3), Danilo Piparo (4), and Zhe Zhang (1). (1) University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States. (2) Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States. (3) Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States. (4) CERN, Meyrin 1211, Geneve, Switzerland.

Abstract. We overview recent changes in the ROOT I/O system that increase its performance and improve its interaction with other data analysis ecosystems. The newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques have the potential to significantly improve experiments' software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically increased during the LHC era. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly: there are significant trade-offs between the increased CPU cost for reading and writing files and the reduced storage space.

1 Introduction. In past years the LHC experiments were commissioned, and they now manage about an exabyte of storage for analysis purposes, approximately half of which is used for archival purposes and half for traditional disk storage. Meanwhile, for HL-LHC, storage requirements per year are expected to increase by a factor of 10 [1]. Looking at these predictions, we would like to state that storage will remain one of the major cost drivers and at the same time one of the bottlenecks for HEP computing.

Making Speech Recognition Work on the Web Christopher J. Varenhorst

Making Speech Recognition Work on the Web, by Christopher J. Varenhorst. Submitted to the Department of Electrical Engineering and Computer Science on May 20, 2011, in partial fulfillment of the requirements for the degree of Masters of Engineering in Computer Science and Engineering at the Massachusetts Institute of Technology. © Massachusetts Institute of Technology 2011. All rights reserved. Certified by: James R. Glass, Principal Research Scientist, Thesis Supervisor. Certified by: Scott Cyphers, Research Scientist, Thesis Supervisor. Accepted by: Christopher J. Terman, Chairman, Department Committee on Graduate Students.

Abstract. We present an improved Audio Controller for the Web-Accessible Multimodal Interface toolkit, a system that provides a simple way for developers to add speech recognition to web pages. Our improved system offers increased usability and performance for users and greater flexibility for developers. Tests performed showed a 36% increase in recognition response time in the best possible networking conditions. Preliminary tests show a markedly improved user experience. The new Wowza platform also provides a means of upgrading other Audio Controllers easily.

Contents: 1 Introduction and Background; 1.1 WAMI - Web Accessible Multimodal Toolkit; 1.1.1 Existing Java applet; 1.2 SALT

Microsoft Powerpoint

Development of Multimedia WebApp on Tizen Platform

Outline:
1. HTML Multimedia
2. Multimedia Playing with HTML5 Tags: (1) HTML5 Video, (2) HTML5 Audio, (3) HTML Plug-ins, (4) HTML YouTube, (5) Accessing Media Streams and Playing, (6) Multimedia Contents Mgmt, (7) Capturing Images
3. Multimedia Processing Web Device API

1. HTML Multimedia

What is multimedia?
- Multimedia comes in many different formats. It can be almost anything you can hear or see.
- Examples: pictures, music, sound, videos, records, films, animations, and more.
- Web pages often contain multimedia elements of different types and formats.

Multimedia formats
- Multimedia elements (like sounds or videos) are stored in media files.
- The most common way to discover the type of a file is to look at the file extension. When a browser sees the file extension .htm or .html, it will treat the file as an HTML file. The .xml extension indicates an XML file, and the .css extension indicates a style sheet file. Pictures are recognized by extensions like .gif, .png and .jpg.
- Multimedia files also have their own formats and different extensions like .swf, .wav, .mp3, .mp4, .mpg, .wmv, and .avi.

2. Multimedia Playing with HTML5 Tags

(1) HTML5 Video
- Some of the popular video container formats include the following: Audio Video Interleave (.avi), Flash Video (.flv), MPEG 4 (.mp4), Matroska (.mkv), Ogg (.ogv).
- Browser support
- Common video formats (Format, File, Description): MPEG; .mpg, .mpeg; MPEG. Developed by the Moving Pictures Expert Group. The first popular video format on the web.

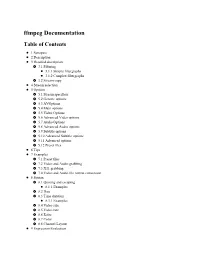

Ffmpeg Documentation Table of Contents

ffmpeg Documentation Table of Contents 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding -

Fast Cosine Transform to Increase Speed-Up and Efficiency of Karhunen-Loève Transform for Lossy Image Compression

Fast Cosine Transform to increase speed-up and efficiency of Karhunen-Loève Transform for lossy image compression. Mario Mastriani and Juliana Gambini.

Abstract. In this work, we present a comparison between two techniques of image compression. In the first case, the image is divided in blocks which are collected according to zig-zag scan. In the second one, we apply the Fast Cosine Transform to the image, and then the transformed image is divided in blocks which are collected according to zig-zag scan too. Later, in both cases, the Karhunen-Loève transform is applied to mentioned blocks. On the other hand, we present three new metrics based on eigenvalues for a better comparative evaluation of the techniques. Simulations show that the combined version is the best, with minor Mean Absolute Error (MAE) and Mean Squared Error (MSE), higher Peak Signal to Noise Ratio (PSNR) and better image quality.

Several authors have tried to combine the DCT with the KLT but with questionable success [1], with particular interest to multispectral imagery [30, 32, 34]. In all cases, the KLT is used to decorrelate in the spectral domain. All images are first decomposed into blocks, and each block uses its own KLT instead of one single matrix for the whole image. In this paper, we use the KLT for a decorrelation between sub-blocks resulting of the applications of a DCT with zig-zag scan, that is to say, in the spectral domain. We introduce in this paper an appropriate sequence, decorrelating first the data in the spatial domain using the DCT

© 2019 Jimi Jones

© 2019 Jimi Jones SO MANY STANDARDS, SO LITTLE TIME: A HISTORY AND ANALYSIS OF FOUR DIGITAL VIDEO STANDARDS BY JIMI JONES DISSERTATION Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Library and Information Science in the Graduate College of the University of Illinois at Urbana-Champaign, 2019 Urbana, Illinois Doctoral Committee: Associate Professor Jerome McDonough, Chair Associate Professor Lori Kendall Assistant Professor Peter Darch Professor Howard Besser, New York University ABSTRACT This dissertation focuses on standards for digital video - the social aspects of their design and the sociotechnical forces that drive their development and adoption. This work is a history and analysis of how the MXF, JPEG 2000, FFV1 and Matroska standards have been adopted and/or adapted by libraries and archives of different sizes. Well-funded institutions often have the resources to develop tailor-made specifications for the digitization of their analog video objects. Digital video standards and specifications of this kind are often derived from the needs of the cinema production and television broadcast realms in the United States and may be unsuitable for smaller memory institutions that are resource-poor and/or lack staff with the knowledge to implement these technologies. This research seeks to provide insight into how moving image preservation professionals work with - and sometimes against - broadcast and film production industries in order to produce and/or implement standards governing video formats and encodings. This dissertation describes the transition of four digital video standards from niches to widespread use in libraries and archives. It also examines the effects these standards produce on cultural heritage video preservation by interviewing people who implement the standards as well as people who develop them.