Merging RGB And

Recommended publications

Color Models

Color Models. Jian Huang, CS456.

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (and very often conflicting) features may be sought after:
– Additive (RGB) or subtractive (CMYK)
– Separation of luminance and chromaticity
– Perceptual uniformity: equal distances between colors are perceived as equal differences

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l'Éclairage)
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• The irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should always end up at the same point)

Color Gamut
• The range of colors a display device can represent

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is 'bloody red' in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black): CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|---|---|---|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
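The "RGB and CMY" slide mentions converting between the two models but the excerpt does not show the formula. A minimal sketch, assuming the common idealized relation in which each CMY component is the complement of one RGB component on a 0 to 1 scale (the function names are illustrative, not taken from the slides):

```python
def rgb_to_cmy(r, g, b):
    """Idealized conversion: each dye amount is the complement of one primary."""
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy under the same idealized assumption."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


# Pure cyan dye absorbs red and reflects green and blue,
# matching the "absorbs / reflects" table in the excerpt.
cyan_as_rgb = cmy_to_rgb(1.0, 0.0, 0.0)
print(cyan_as_rgb)  # (0.0, 1.0, 1.0)
```

Real printing pipelines use device profiles rather than this simple complement, so the sketch only illustrates the additive versus subtractive relationship.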

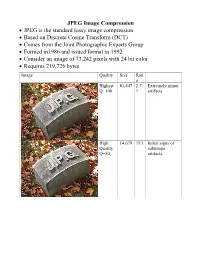

JPEG Image Compression

JPEG Image Compression
• JPEG is the standard for lossy image compression
• Based on the Discrete Cosine Transform (DCT)
• Comes from the Joint Photographic Experts Group, formed in 1986; the format was issued in 1992

Consider an image of 73,242 pixels with 24-bit color. Uncompressed, it requires 219,726 bytes.

| Image quality | Size (bytes) | Compression ratio | Comment |
|---|---|---|---|
| Highest (Q=100) | 81,447 | 2.7:1 | Extremely minor artifacts |
| High (Q=50) | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

JPEG: How it works
• Begin with a color translation: RGB goes to Y′CBCR, one luma and two chroma components
• Y is brightness, CB is B-Y, CR is R-Y

Downsampling (chroma subsampling)
• Chroma data resolution is reduced by a factor of 2 or 3
• The eye is less sensitive to fine color details than to brightness

Block splitting
• Each channel is broken into blocks: 8x8 (no subsampling), or 16x8 (most common at medium compression), or 16x16
• Must fill in the remaining areas of incomplete blocks
• This gives the values

DCT: centering
• Center the data about 0
• The range is now -128 to 127; the middle is zero

Discrete cosine transform formula
• Apply as a 2D DCT using the formula; this creates a new matrix
• The top-left entry (the largest) is the DC coefficient, the constant component; it gives the basic hue for the block
• The remaining 63 are AC coefficients

Discrete cosine transform
• The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns.
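The size arithmetic, the centering step, and the 2D DCT described above can be sketched in a few lines. This is an illustration only: it assumes the usual JPEG-style DCT-II scaling (a factor of 1/4 and 1/√2 for the zero-frequency terms), which the excerpt does not spell out, and the flat test block is made up.

```python
import math

N = 8  # JPEG block size

# 24-bit color means 3 bytes per pixel.
uncompressed = 73_242 * 3
print(uncompressed)                      # 219726
print(round(uncompressed / 81_447, 1))   # 2.7, the "Highest" ratio


def level_shift(block):
    """Center 8-bit samples about zero: 0..255 becomes -128..127."""
    return [[value - 128 for value in row] for row in block]


def dct_2d(block):
    """Direct (slow) 2D DCT-II of an 8x8 block with JPEG-style scaling."""
    def scale(k):
        return 1.0 / math.sqrt(2.0) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[u][v] = 0.25 * scale(u) * scale(v) * total
    return out


# A flat block has only a DC coefficient; all 63 AC coefficients vanish.
flat = [[138] * N for _ in range(N)]
coeffs = dct_2d(level_shift(flat))
print(round(coeffs[0][0], 6))  # 80.0
```

Production encoders use fast factorized DCTs; the quadruple loop here is chosen only because it mirrors the defining formula.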

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space

A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model.

In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept

Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Camera Raw Workflows

RAW WORKFLOWS: FROM CAMERA TO POST. Copyright 2007, Jason Rodriguez, Silicon Imaging, Inc.

Introduction
• What is a RAW file format, and what cameras shoot to these formats?
• How does working with RAW file-format cameras change the way I shoot?
• What changes are happening inside the camera that I need to be aware of, and what happens when I go into post?
• What are the available post paths? Is there just one, or are there many ways to reach my end goals?
• What post tools support RAW file format workflows?
• How do RAW codecs like CineForm RAW enable me to work faster and with more efficiency?

What is a RAW file?
• In simplest terms, it is the native digital data off the sensor's A/D converter with no further destructive DSP processing applied
• It is derived from a photometrically linear data source, or can be reconstructed to produce data that directly corresponds to the light that was captured by the sensor at the time of exposure (i.e., LOG->Lin reverse LUT)
• Photometrically linear: a 1:1 relationship between photons and digital values, so a doubling of light means a doubling of the digitally encoded value
• In a film analogy, it would be termed a "digital negative" because it is a latent representation of the light that was captured by the sensor (up to the limit of the full-well capacity of the sensor)
• "RAW" cameras include Thomson Viper, Arri D-20, Dalsa Evolution 4K, Silicon Imaging SI-2K, Red One, Vision Research Phantom, noXHD, Reel-Stream
• "Quasi-RAW" cameras include the Panavision Genesis

In-Camera Processing
• Most non-RAW cameras on the market record to 8-bit YUV formats

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

The video codecs behind modern remote display protocols. Rody Kossen, Consultant (@r_kossen, rody-kossen-186b4b40, www.rodykossen.com).

Agenda
• The basics
• H264 – The magician
• Other codecs
• Hardware support (NVIDIA)

The basics: let's start with some terms
• YCbCr, RGB, Bit Depth, YUV

Color Bit-Depth = the number of different colors. The term is used in 2 ways:
> The number of bits used to indicate the color of a single pixel, for example 24 bit
> The number of bits used for each color component of a single pixel (Red, Green, Blue), for example 8 bit

It can be combined with Alpha, which describes transparency. Most commonly used:
> High Color – 16 bit = 5 bits per color + 1 unused bit = 32,768 colors = 2^15
> True Color – 24 bit Color + 8 bit Alpha = 8 bits per color = 16,777,216 colors
> Deep Color – 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors

Color Gamut describes the subset of colors which can be displayed in relation to the human eye. Standardized gamuts:
> Rec.709 (Blu-ray)
> Rec.2020 (Ultra HD Blu-ray)
> Adobe RGB
> sRGB

Compression
• Lossy > Irreversible compression > Used to reduce data size > Examples: MP3, JPEG, MPEG-4
• Lossless > Reversible compression > Examples: ZIP, PNG, FLAC or Dolby TrueHD
• Visually Lossless > Irreversible compression > The difference can't be seen by the human eye

YCbCr Encoding
• RGB uses Red, Green, and Blue values to describe a color
• YCbCr is a different way of storing color: > Y = Luma or Brightness of the Color > Cr = Chroma difference
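The color counts quoted for High Color, True Color, and Deep Color follow directly from the bits per component. A short check, assuming three color components per pixel and ignoring the alpha or unused bits (the helper name is illustrative):

```python
def color_count(bits_per_component, components=3):
    """Number of distinct colors for a given per-component bit depth."""
    return 2 ** (bits_per_component * components)


print(color_count(5))   # 32768       (High Color, 2^15)
print(color_count(8))   # 16777216    (True Color, 2^24)
print(color_count(10))  # 1073741824  (Deep Color, about 1.073 billion)
```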

Yasser Syed & Chris Seeger Comcast/NBCU

Usage of Video Signaling Code Points for Automating UHD and HD Production-to-Distribution Workflows. Yasser Syed & Chris Seeger, Comcast/NBCU. Comcast TPX, VIPER Architecture.

Simpler Times - Delivering to TVs
[Figure: SD 720×486 (601) and HD 1920×1080, 1080i (709)]
• SD - HD Conversions
• Resolution, Standard Dynamic Range and 601/709 Color Spaces
• 4:3 - 16:9 Conversions
• 4:2:0 - 8-bit YUV video

What is UHD / 4K, HFR, HDR, WCG?
[Figure: higher resolution (4K), "more pixels"; higher frame rate (60p), "faster pixels"; high dynamic range and wide color gamut (brighter and darker pixels, more colorful pixels), "better pixels"; enabled by Dolby Vision]

Volume of Scripted Workflows is Growing
Not considering:
• Live Events (news/sports)
• Localized events but with wider distributions
• User-generated content

More Formats to Distribute to More Devices
[Figure labels: Standard Definition, Broadcast/Cable, IPTV, WiFi, DVDs/Files]
• More display devices: TVs, Tablets, Mobile Phones, Laptops
• More display formats: SD, HD, HDR, 4K, 8K, 10-bit, 8-bit, 4:2:2, 4:2:0
• More distribution paths: Broadcast/Cable, IPTV, WiFi, Laptops
• Integration/Compositing at receiving device

Signal Normalization
[Diagram: automated logic for conversion in; SDR BT.709, HLG BT.2100, and PQ BT.2100 sources feed a normalized compositing timeline/switcher/transcoder in PQ-BT.2100; labels: Requires Conversion, Convert, Native]
• Compositing, grading, editing depends on proper signal normalization of all source files (i.e. - Still Graphics & Titling, Bugs, Tickers, Lower-Thirds, weather graphics, etc.)
• ALL content must be moved into a single color volume space.
• Transformation from different colourspaces (BT.601, BT.709, BT.2020) and transfer functions (Gamma 2.4, PQ, HLG)
• Correct signaling allows automation of conversion settings.

Color Images, Color Spaces and Color Image Processing

Color images, color spaces and color image processing. Ole-Johan Skrede, 08.03.2017. INF2310 - Digital Image Processing, Department of Informatics, The Faculty of Mathematics and Natural Sciences, University of Oslo. After original slides by Fritz Albregtsen.

today's lecture
∙ Color, color vision and color detection
∙ Color spaces and color models
∙ Transitions between color spaces
∙ Color image display
∙ Look up tables for colors
∙ Color image printing
∙ Pseudocolors and fake colors
∙ Color image processing
∙ Sections in Gonzalez & Woods:
∙ 6.1 Color Fundamentals
∙ 6.2 Color Models
∙ 6.3 Pseudocolor Image Processing
∙ 6.4 Basics of Full-Color Image Processing
∙ 6.5.5 Histogram Processing
∙ 6.6 Smoothing and Sharpening
∙ 6.7 Image Segmentation Based on Color

motivation
∙ We can differentiate between thousands of colors
∙ Colors make it easy to distinguish objects
∙ Visually
∙ And digitally
∙ We need to:
∙ Know what color space to use for different tasks
∙ Transit between color spaces
∙ Store color images rationally and compactly
∙ Know techniques for color image printing

the color of the light from the sun: spectral exitance

The light from the sun can be modeled with the spectral exitance of a black surface (the radiant exitance of a surface per unit wavelength)

$$M(\lambda) = \frac{2\pi h c^2}{\lambda^5} \, \frac{1}{\exp\left(\frac{hc}{\lambda k T}\right) - 1}$$

where
∙ h ≈ 6.626 070 04 × 10^−34 m^2 kg s^−1 is the Planck constant.
∙ c = 299 792 458 m s^−1 is the speed of light.
∙ λ [m] is the radiation wavelength.
∙ k ≈ 1.380 648 52 × 10^−23 m^2 kg s^−2 K^−1 is the Boltzmann constant.
∙ T [K] is the surface temperature of the radiating body.

Figure 1: Spectral exitance of a black body surface for different …
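To make the spectral exitance formula concrete, the sketch below evaluates it with the constants listed on the slide and locates the wavelength of maximum exitance. The temperature of 5778 K is an assumption made here for illustration (a commonly quoted effective temperature for the sun); it is not stated in the excerpt, and the function name and 1 nm scan grid are likewise illustrative.

```python
import math

H = 6.62607004e-34   # Planck constant [m^2 kg s^-1], as given on the slide
C = 299_792_458.0    # speed of light [m s^-1]
K = 1.38064852e-23   # Boltzmann constant [m^2 kg s^-2 K^-1]


def spectral_exitance(wavelength_m, temperature_k):
    """Black-body spectral exitance M(lambda) in W m^-2 per metre of wavelength."""
    prefactor = 2.0 * math.pi * H * C**2 / wavelength_m**5
    return prefactor / math.expm1(H * C / (wavelength_m * K * temperature_k))


SUN_TEMPERATURE_K = 5778.0  # assumed value, not taken from the slides

# Scan 100 nm to 3000 nm in 1 nm steps and pick the peak.
peak_nm = max(range(100, 3001),
              key=lambda nm: spectral_exitance(nm * 1e-9, SUN_TEMPERATURE_K))
print(peak_nm)  # falls in the visible range, close to 500 nm
```

The peak landing in the visible band is consistent with Wien's displacement law for a body at this temperature.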

Image Compression GIF (Graphics Interchange Format) LZW (Lempel

Image Compression
• GIF (Graphics Interchange Format)
• PNG (Portable Network Graphics)/MNG (Multiple-image Network Graphics)
• JPEG (Joint Photographic Experts Group)

GIF (Graphics Interchange Format)
• Introduced by Compuserve in 1987 (GIF87a); multiple-image in one file/application specific metadata support added in 1989 (GIF89a)
• LZW (Lempel-Ziv-Welch) compression replaced earlier RLE (Run Length Encoding) B&W version
– Patented by Compuserve/Unisys (has run out in US, will run out in June 2004 in Europe)
• Maximum of 256 colours (from a palette) including a "transparent" colour
• Optional interlacing feature
• http://www.w3.org/Graphics/GIF/spec-gif89a.txt

LZW (Lempel-Ziv-Welch)
• Most of this method was invented and published by Lempel and Ziv in 1978 (LZ78 algorithm)
• A few details and improvements were later given by Welch in 1984 (variable increasing index sizes, efficient dictionary data structure)
• Achieves approx. 50% compression on large English texts
• Superseded by DEFLATE and Burrows-Wheeler transform methods

LZW Algorithm
• Dictionary initially contains all possible one byte codes (256 entries)
• Input is taken one byte at a time to find the longest initial string present in the dictionary
• The code for that string is output, then the string is extended with one more input byte, b
• A new entry is added to the table mapping the extended string to the next unused code
• The process repeats, starting from byte b
• The number of bits in an output code, and hence the maximum number of entries in the table, is fixed
• once
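The LZW steps listed above translate almost line for line into code. The sketch below is a simplified illustration: it emits codes as plain integers and lets the table grow without bound, whereas the slides note that the code width (and so the table size) is fixed in practice. The function names are illustrative.

```python
def lzw_compress(data: bytes) -> list[int]:
    """Encode bytes as a list of LZW codes (unbounded table, integer output)."""
    table = {bytes([i]): i for i in range(256)}  # all one-byte strings
    next_code = 256
    current = b""
    codes = []
    for value in data:
        candidate = current + bytes([value])
        if candidate in table:
            current = candidate               # keep extending the match
        else:
            codes.append(table[current])      # output longest known string
            table[candidate] = next_code      # add the extended string
            next_code += 1
            current = bytes([value])          # restart from byte b
    if current:
        codes.append(table[current])
    return codes


def lzw_decompress(codes: list[int]) -> bytes:
    """Rebuild the original bytes, reconstructing the table on the fly."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = table[codes[0]]
    out = bytearray(previous)
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == next_code:
            # Code refers to the entry being defined right now.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code: {code}")
        out += entry
        table[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return bytes(out)


codes = lzw_compress(b"ABABABA")
print(codes)                  # [65, 66, 256, 258]
print(lzw_decompress(codes))  # b'ABABABA'
```

Seven input bytes become four codes here because the repeated "AB" and "ABA" strings are replaced by the table entries 256 and 258.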

ColorNet: Investigating the Importance of Color Spaces for Image Classification (arXiv:1902.00267v1 [cs.CV], 1 Feb 2019)

ColorNet: Investigating the importance of color spaces for image classification. Shreyank N Gowda (Computer Science Department, Tsinghua University, Beijing 10084, China) and Chun Yuan (Graduate School at Shenzhen, Tsinghua University, Shenzhen 518055, China).

Abstract. Image classification is a fundamental application in computer vision. Recently, deeper networks and highly connected networks have shown state of the art performance for image classification tasks. Most datasets these days consist of a finite number of color images. These color images are taken as input in the form of RGB images and classification is done without modifying them. We explore the importance of color spaces and show that color spaces (essentially transformations of original RGB images) can significantly affect classification accuracy. Further, we show that certain classes of images are better represented in particular color spaces and for a dataset with a highly varying number of classes such as CIFAR and Imagenet, using a model that considers multiple color spaces within the same model gives excellent levels of accuracy. Also, we show that such a model, where the input is preprocessed into multiple color spaces simultaneously, needs far fewer parameters to obtain high accuracy for classification. For example, our model with 1.75M parameters significantly outperforms DenseNet 100-12 that has 12M parameters and gives results comparable to Densenet-BC-190-40 that has 25.6M parameters for classification of four competitive image classification datasets namely: CIFAR-10, CIFAR-100, SVHN and Imagenet. Our model essentially takes an RGB image as input, simultaneously converts the image into 7 different color spaces and uses these as inputs to individual densenets.

Basics of Video

Analog and Digital Video Basics. Nimrod Peleg. Update: May. 2006.

Video Compression: list of topics
• Analog and Digital Video Concepts
• Block-Based Motion Estimation
• Resolution Conversion
• H.261: A Standard for VideoConferencing
• MPEG-1: A Standard for CD-ROM Based App.
• MPEG-2 and HDTV: All Digital TV
• H.263: A Standard for VideoPhone
• MPEG-4: Content-Based Description

Analog Video Signal: Raster Scan

Odd and Even Scan Lines

Analog Video Signal: Image line

Analog Video Standards
• All video standards are in
• Almost any color can be reproduced by mixing the 3 additive primaries: R (red), G (green), B (blue)
• 3 main different representations:
– Composite
– Component or S-Video (Y/C)

Composite Video

Component Analog Video
• Each primary is considered as a separate monochromatic video signal
• Basic presentation: R G B
• Other RGB based:
– YIQ
– YCrCb
– YUV
– HSI

Composite Video Signal
Encoding the Chrominance over Luminance into one signal (saving bandwidth):
– NTSC (National Television System Committee): North America, Japan
– PAL (Phase Alternating Line): Europe (including Israel)
– SECAM (Séquentiel Couleur à Mémoire): France, Russia and more

Analog Standards Comparison

| | NTSC | PAL/SECAM |
|---|---|---|
| Defined | 1952 | 1960 |
| Scan lines (per frame/per field) | 525/262.5 | 625/312.5 |
| Active horiz. lines | 480 | 576 |
| Subcarrier freq. | 3.58 MHz | 4.43 MHz |
| Interlacing | 2:1 | 2:1 |
| Aspect ratio | 4:3 | 4:3 |
| Horiz. resol. (pel/line) | 720 | 720 |
| Frames/sec | 29.97 | 25 |
| Component color | TUV | YCbCr |

Analog Video Equipment
• Cameras (Vidicon, Film, CCD)
• Video Tapes (magnetic):
– Betacam, VHS, SVHS, U-matic, 8mm ...

Chroma Subsampling Influence on the Perceived Video Quality for Compressed Sequences in High Resolutions

DIGITAL IMAGE PROCESSING AND COMPUTER GRAPHICS, VOLUME: 15 | NUMBER: 4 | 2017 | SPECIAL ISSUE

Chroma Subsampling Influence on the Perceived Video Quality for Compressed Sequences in High Resolutions. Miroslav UHRINA, Juraj BIENIK, Tomas MIZDOS. Department of Multimedia and Information-Communication Technologies, Faculty of Electrical Engineering, University of Zilina, Univerzitna 1, 01026, Zilina, Slovak Republic. DOI: 10.15598/aeee.v15i4.2414

Abstract. This paper deals with the influence of chroma subsampling on perceived video quality measured by subjective metrics. The evaluation was done for two most used video codecs H.264/AVC and H.265/HEVC. Eight types of video sequences with Full HD and Ultra HD resolutions depending on content were tested. The experimental results showed that observers did not see the difference between unsubsampled and subsampled sequences, so using subsampled videos is preferable even 50 % of the amount of data can be saved. Also, the minimum bitrates to achieve the good and fair quality by each codec and resolution were de-

2. State of the Art

Although many research activities focus on objective and subjective video quality assessment, only a few of them analyze the quality affected by chroma subsampling. In the paper [1], the efficiency of the chroma subsampling for sequences with HDR content using only objective metrics is assessed. The paper [2] presents a novel chroma subsampling strategy for compressing mosaic videos with arbitrary RGB-CFA structures in H.264/AVC and High Efficiency Video Coding (HEVC).
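The "50 % of the amount of data" figure in the abstract matches the raw sample count of 4:2:0 subsampling relative to unsubsampled 4:4:4 video. A back-of-the-envelope sketch, assuming 8-bit samples and the standard sample counts per pixel for each scheme (the excerpt itself does not name the schemes tested, so the table below is an assumption for illustration):

```python
# Average samples stored per pixel: one luma sample plus the chroma share.
SAMPLES_PER_PIXEL = {
    "4:4:4": 3.0,   # full-resolution Cb and Cr
    "4:2:2": 2.0,   # chroma halved horizontally
    "4:2:0": 1.5,   # chroma halved horizontally and vertically
}


def raw_frame_bytes(width, height, scheme, bits_per_sample=8):
    """Uncompressed size of one frame in bytes."""
    return int(width * height * SAMPLES_PER_PIXEL[scheme] * bits_per_sample / 8)


def saving_vs_444(scheme):
    """Fraction of raw data saved relative to 4:4:4."""
    return 1.0 - SAMPLES_PER_PIXEL[scheme] / SAMPLES_PER_PIXEL["4:4:4"]


print(raw_frame_bytes(1920, 1080, "4:4:4"))  # 6220800
print(raw_frame_bytes(1920, 1080, "4:2:0"))  # 3110400
print(saving_vs_444("4:2:0"))                # 0.5
```

These are raw, pre-codec sizes; the bitrate saving after H.264/AVC or H.265/HEVC encoding depends on content and encoder settings.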

Color Spaces

Chapter 3: Color Spaces

A color space is a mathematical representation of a set of colors. The three most popular color models are RGB (used in computer graphics); YIQ, YUV, or YCbCr (used in video systems); and CMYK (used in color printing). However, none of these color spaces are directly related to the intuitive notions of hue, saturation, and brightness. This resulted in the temporary pursuit of other models, such as HSI and HSV, to simplify programming, processing, and end-user manipulation. All of the color spaces can be derived from the RGB information supplied by devices such as cameras and scanners.

RGB Color Space

The red, green, and blue (RGB) color space is widely used throughout computer graphics. Red, green, and blue are three primary additive colors (individual components are added together to form a desired color) and are represented by a three-dimensional, Cartesian coordinate system (Figure 3.1). The indicated diagonal of the cube, with equal amounts of each primary component, represents various gray levels. Table 3.1 contains the RGB values for 100% amplitude, 100% saturated color bars, a common video test signal.

Figure 3.1. The RGB Color Cube. (Corner labels: black, blue, cyan, green, magenta, red, yellow, white.)

| | Nominal Range | White | Yellow | Cyan | Green | Magenta | Red | Blue | Black |
|---|---|---|---|---|---|---|---|---|---|
| R | 0 to 255 | 255 | 255 | 0 | 0 | 255 | 255 | 0 | 0 |
| G | 0 to 255 | 255 | 255 | 255 | 255 | 0 | 0 | 0 | 0 |
| B | 0 to 255 | 255 | 0 | 255 | 0 | 255 | 0 | 255 | 0 |

Table 3.1.
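The color bar values in Table 3.1 follow a regular on/off pattern: across the eight bars, green is on for the first four, red alternates in pairs, and blue alternates every bar. A small sketch that regenerates the table from that pattern (the function and constant names are illustrative, not from the book):

```python
BAR_NAMES = ["White", "Yellow", "Cyan", "Green",
             "Magenta", "Red", "Blue", "Black"]


def color_bars(peak=255):
    """Return {bar name: (R, G, B)} for 100% amplitude, 100% saturated bars."""
    bars = {}
    for index, name in enumerate(BAR_NAMES):
        green = peak if index < 4 else 0        # on for the first four bars
        red = peak if index % 4 < 2 else 0      # on, on, off, off, repeating
        blue = peak if index % 2 == 0 else 0    # on, off, repeating
        bars[name] = (red, green, blue)
    return bars


for name, rgb in color_bars().items():
    print(f"{name:8s} {rgb}")
```

White and Black sit at opposite ends of the gray diagonal of the RGB cube, where all three components are equal.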