Color Space Conversion in Hardware for Multimedia Applications

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

What You See Isn't Always What You Get: An Evaluation of Color Differences Across Different Devices

What You See Isn't Always What You Get: An Evaluation of Color Differences Across Different Devices. Craitishia Lewis, Clemson University, [email protected]. Clemson University TigerPrints, All Theses, 12-2013.

Recommended Citation: Lewis, Craitishia, "What You See Isn't Always What You Get: An Evaluation of Color Differences Across Different Devices" (2013). All Theses. 1808. https://tigerprints.clemson.edu/all_theses/1808

A Thesis Presented to the Graduate School of Clemson University in Partial Fulfillment of the Requirements for the Degree Master of Science, Graphic Communications. By Craitishia Lewis, December 2013. Accepted by: Dr. Samuel Ingram (Committee Chair), Kern Cox, Dr. Eric Weisenmiller, Dr. Russell Purvis.

ABSTRACT: The objective of this thesis was to examine color differences between different digital devices such as phones, tablets, and monitors. New technology has always been the catalyst for growth and change within the printing industry. With gadgets like the iPhone and the iPad becoming increasingly popular in recent years, printers have yet another technological advancement to consider. Soft proofing strategies use color management technology that allows the client to view their proof on a monitor as a duplication of how the finished product will appear on a printed piece of paper.

Color Models

Color Models. Jian Huang, CS456.

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
– Additive (RGB) or subtractive (CMYK)
– Separation of luminance and chromaticity
– Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l'Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• Irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of color representation of a display device

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is 'bloody red' in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|---|---|---|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
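The slides above name the RGB/CMY conversion, but the formula itself did not survive extraction. A minimal sketch, assuming the usual idealized complement relation (each subtractive primary is one minus the additive primary it absorbs) on components normalized to [0, 1]; the function names are illustrative, and real printing inks need device profiles rather than this simple rule:

```python
def rgb_to_cmy(rgb):
    """Idealized conversion: C = 1 - R, M = 1 - G, Y = 1 - B (all in [0, 1])."""
    r, g, b = rgb
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(cmy):
    """Inverse of rgb_to_cmy under the same idealized assumption."""
    c, m, y = cmy
    return (1.0 - c, 1.0 - m, 1.0 - y)


# Pure red needs no cyan (cyan absorbs red) and full magenta and yellow.
red_as_cmy = rgb_to_cmy((1.0, 0.0, 0.0))
print(red_as_cmy)
```

This agrees with the dye table in the slides: cyan is the dye that absorbs red, so a pure red patch uses none of it.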

Package 'Colorscience'

Package 'colorscience', October 29, 2019
Type: Package
Title: Color Science Methods and Data
Version: 1.0.8
Encoding: UTF-8
Date: 2019-10-29
Maintainer: Glenn Davis <[email protected]>
Description: Methods and data for color science - color conversions by observer, illuminant, and gamma. Color matching functions and chromaticity diagrams. Color indices, color differences, and spectral data conversion/analysis.
License: GPL (>= 3)
Depends: R (>= 2.10), Hmisc, pracma, sp
Enhances: png
LazyData: yes
Author: Jose Gama [aut], Glenn Davis [aut, cre]
Repository: CRAN
NeedsCompilation: no
Date/Publication: 2019-10-29 18:40:02 UTC

R topics documented: ASTM.D1925.YellownessIndex, ASTM.E313.Whiteness, ASTM.E313.YellownessIndex, Berger59.Whiteness, BVR2XYZ, cccie31, cccie64, CCT2XYZ, CentralsISCCNBS, CheckColorLookup, ChromaticAdaptation, chromaticity.diagram, chromaticity.diagram.color, CIE.Whiteness, CIE1931xy2CIE1960uv, CIE1931xy2CIE1976uv, CIE1931XYZ2CIE1931xyz, CIE1931XYZ2CIE1960uv, CIE1931XYZ2CIE1976uv, CIE1960UCS2CIE1964, CIE1960UCS2xy, CIE1976chroma, CIE1976hueangle, CIE1976uv2CIE1931xy, CIE1976uv2CIE1960uv, CIE1976uvSaturation, CIELabtoDIN99, CIEluminanceY2NCSblackness, CIETint, ciexyz31, ciexyz64, CMY2CMYK, CMY2RGB, CMYK2CMY, ColorBlockFromMunsell, compuphaseDifferenceRGB, conversionIlluminance, conversionLuminance, createIsoTempLinesTable, daylightcomponents, deltaE1976

Photometric Modelling for Efficient Lighting and Display Technologies

PHOTOMETRIC MODELLING FOR EFFICIENT LIGHTING AND DISPLAY TECHNOLOGIES. A Master's Thesis by Sinan GENÇ, December 2016, Abdullah Gül University.

A thesis submitted to the Department of Electrical and Computer Engineering and the Graduate School of Engineering and Science of Abdullah Gül University in partial fulfillment of the requirements for the degree of Master of Science. By Sinan GENÇ, December 2016.

SCIENTIFIC ETHICS COMPLIANCE: I hereby declare that all information in this document has been obtained in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all materials and results that are not original to this work. Sinan GENÇ

REGULATORY COMPLIANCE: M.Sc. thesis titled "PHOTOMETRIC MODELLING FOR EFFICIENT LIGHTING AND DISPLAY TECHNOLOGIES" has been prepared in accordance with the Thesis Writing Guidelines of the Abdullah Gül University, Graduate School of Engineering & Science. Prepared by: Sinan GENÇ. Advisor: Asst. Prof. Evren MUTLUGÜN. Head of the Electrical and Computer Engineering Program: Assoc. Prof. Vehbi Çağrı GÜNGÖR.

ACCEPTANCE AND APPROVAL: M.Sc. thesis titled "PHOTOMETRIC MODELLING FOR EFFICIENT LIGHTING AND DISPLAY TECHNOLOGIES" and prepared by Sinan GENÇ has been accepted by the jury in the Electrical and Computer Engineering Graduate Program at Abdullah Gül University, Graduate School of Engineering & Science. 26/12/2016. JURY: Prof. Bülent YILMAZ, Assoc. Prof. M. Serdar ÖNSES, Asst.

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article. An Improved SPSIM Index for Image Quality Assessment. Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus. Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.). * Correspondence: [email protected]; Tel.: +48-32-2371066

Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.

Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures

Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518

1. Introduction. Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

JPEG Image Compression

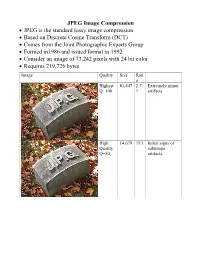

JPEG Image Compression
• JPEG is the standard lossy image compression
• Based on the Discrete Cosine Transform (DCT)
• Comes from the Joint Photographic Experts Group
• Formed in 1986 and issued the format in 1992

Consider an image of 73,242 pixels with 24-bit color
• Requires 219,726 bytes

| Image quality | Q | Size (bytes) | Ratio | Comment |
|---|---|---|---|---|
| Highest | 100 | 81,447 | 2.7:1 | Extremely minor artifacts |
| High | 50 | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

JPEG: How it works

Begin with a color translation
• RGB goes to Y′CBCR
• Luma and two Chroma colors
• Y is brightness
• CB is B-Y
• CR is R-Y

Downsample or Chroma Subsampling
• Chroma data resolutions reduced by 2 or 3
• Eye is less sensitive to fine color details than to brightness

Block splitting
• Each channel broken into 8x8 blocks (no subsampling)
• Or 16x8 (most common at medium compression)
• Or 16x16
• Must fill in remaining areas of incomplete blocks

This gives the values

DCT - centering
• Center the data about 0
• Range is now -128 to 127
• Middle is zero

Discrete cosine transform formula
• Apply as 2D DCT using the formula
• Creates a new matrix
• Top left (largest) is the DC coefficient: the constant component
• Gives basic hue for the block
• Remaining 63 are AC coefficients

Discrete cosine transform
• The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns.
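The color translation, centering, and 2D DCT steps listed in the slides can be sketched in a few lines. This is an illustration under stated assumptions, not the slides' own code: the color matrix is the full-range JFIF variant of Y′CbCr, the DCT is the orthonormal DCT-II, and the names `rgb_to_ycbcr` and `dct_8x8` are made up for this example.

```python
import math


def rgb_to_ycbcr(r, g, b):
    """Full-range (JFIF-style, assumed) conversion of 8-bit R'G'B' to Y'CbCr."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def dct_8x8(block):
    """Center an 8x8 block of 0..255 samples about zero, then apply a 2D DCT-II."""
    n = 8
    shifted = [[v - 128 for v in row] for row in block]  # range becomes -128..127

    def alpha(k):
        # Orthonormal scaling: the k = 0 (DC) basis function is weighted differently.
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += (shifted[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * total
    return out


# A flat block has only a DC term; every AC coefficient is (numerically) zero.
coeffs = dct_8x8([[255] * 8 for _ in range(8)])
print(round(coeffs[0][0], 3), round(coeffs[0][1], 3))
```

The quadruple loop is deliberately naive so that it mirrors the formula; practical encoders use separable or fast DCT variants.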

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space

A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept

Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Camera Raw Workflows

RAW WORKFLOWS: FROM CAMERA TO POST. Copyright 2007, Jason Rodriguez, Silicon Imaging, Inc.

Introduction
• What is a RAW file format, and what cameras shoot to these formats?
• How does working with RAW file-format cameras change the way I shoot?
• What changes are happening inside the camera I need to be aware of, and what happens when I go into post?
• What are the available post paths? Is there just one, or are there many ways to reach my end goals?
• What post tools support RAW file format workflows?
• How do RAW codecs like CineForm RAW enable me to work faster and with more efficiency?

What is a RAW file?
• In simplest terms, it is the native digital data off the sensor's A/D converter with no further destructive DSP processing applied
• Derived from a photometrically linear data source, or can be reconstructed to produce data that directly correspond to the light that was captured by the sensor at the time of exposure (i.e., LOG->Lin reverse LUT)

Photometrically Linear
• 1:1 photons to digital values
• Doubling of light means doubling of digitally encoded value

What is a RAW file?
• In a film analogy, it would be termed a "digital negative" because it is a latent representation of the light that was captured by the sensor (up to the limit of the full-well capacity of the sensor)
• "RAW" cameras include Thomson Viper, Arri D-20, Dalsa Evolution 4K, Silicon Imaging SI-2K, Red One, Vision Research Phantom, noXHD, Reel-Stream
• "Quasi-RAW" cameras include the Panavision Genesis

In-Camera Processing
• Most non-RAW cameras on the market record to 8-bit YUV formats

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

The video codecs behind modern remote display protocols. Rody Kossen, Consultant. [email protected], @r_kossen, rody-kossen-186b4b40, www.rodykossen.com

Agenda
> The basics
> H264 – The magician
> Other codecs
> Hardware support (NVIDIA)

The basics

Let's start with some terms: YCbCr, RGB, Bit Depth, YUV

Colors

Visible colors

Color Bit-Depth = Amount of different colors
Used in 2 ways:
> The number of bits used to indicate the color of a single pixel, for example 24 bit
> Number of bits used for each color component of a single pixel (Red Green Blue), for example 8 bit
Can be combined with Alpha, which describes transparency
Most commonly used:
> High Color – 16 bit = 5 bits per color + 1 unused bit = 32,768 colors = 2^15
> True Color – 24 bit Color + 8 bit Alpha = 8 bits per color = 16,777,216 colors
> Deep color – 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors

Color Gamut
Describes the subset of colors which can be displayed in relation to the human eye
Standardized gamut:
> Rec.709 (Blu-ray)
> Rec.2020 (Ultra HD Blu-ray)
> Adobe RGB
> sRGB

Compression
Lossy
> Irreversible compression
> Used to reduce data size
> Examples: MP3, JPEG, MPEG-4
Lossless
> Reversible compression
> Examples: ZIP, PNG, FLAC or Dolby TrueHD
Visually Lossless
> Irreversible compression
> Difference can't be seen by the Human Eye

YCbCr Encoding
RGB uses Red Green Blue values to describe a color
YCbCr is a different way of storing color:
> Y = Luma or Brightness of the Color
> Cr = Chroma difference
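The color counts quoted for each bit depth follow directly from the number of bits per color component. A small sketch of that arithmetic, assuming three color components per pixel and ignoring alpha and unused bits; `color_count` is an illustrative name, not something from the slides:

```python
def color_count(bits_per_component, components=3):
    """Number of distinct colors representable with the given bits per component."""
    return 2 ** (bits_per_component * components)


# High Color (5 bits), True Color (8 bits), Deep Color (10 bits per component).
for name, bits in [("High Color", 5), ("True Color", 8), ("Deep Color", 10)]:
    print(f"{name}: {bits} bits per component -> {color_count(bits):,} colors")
```

Alpha bits are left out because transparency does not add distinguishable colors; it only changes how a pixel is blended with what is behind it.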

Creating 4K/UHD Content Poster

Creating 4K/UHD Content

Colorimetry
The television color specification is based on standards defined by the CIE (Commission Internationale de L'Éclairage) in 1931. The CIE specified an idealized set of primary XYZ tristimulus values. This set is a group of all-positive values converted from R'G'B' where Y is proportional to the luminance of the additive mix. This specification is used as the basis for color within 4K/UHDTV1 that supports both ITU-R BT.709 and BT.2020.

Figure A2. Using a 100% color bar signal to show conversion of RGB levels from 700 mV (100%) to 0 mV (0%) for each color component with a color bar split field BT.2020 and BT.709 test signal. The WFM8300 was configured for BT.709 colorimetry as shown in the video session display.

Table A1: Illuminant (Ill.) Value

| Source | x | y |
|---|---|---|
| Illuminant A: Tungsten Filament Lamp, 2854 K | 0.4476 | 0.4075 |

Image Format / SMPTE Standards
Square Division separates the image into quad links for distribution. UHDTV 1: 3840x2160 (4x1920x1080)

Table B1: SMPTE Standards

| Standard | Title |
|---|---|
| ST 125 | SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems |
| ST 240 | Television – 1125-Line High-Definition Production Systems – Signal Parameters |
| ST 259 | Television – SDTV Digital Signal/Data – Serial Digital Interface |
| ST 272 | Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space |
| ST 274 | Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for Multiple Picture Rates |
| ST 296 | 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface |
| ST 299-0/1/2 | 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite |

Yasser Syed & Chris Seeger Comcast/NBCU

Usage of Video Signaling Code Points for Automating UHD and HD Production-to-Distribution Workflows. Yasser Syed & Chris Seeger, Comcast/NBCU. Comcast TPX, VIPER Architecture.

Simpler Times - Delivering to TVs
[Figure: SD (720 x 486, 601) and HD (1920 x 1080, 1080i, 709) formats]
• SD - HD Conversions
• Resolution, Standard Dynamic Range and 601/709 Color Spaces
• 4:3 - 16:9 Conversions
• 4:2:0 - 8-bit YUV video

What is UHD / 4K, HFR, HDR, WCG?
• Higher resolution (4K): more pixels
• Higher frame rate (60p): faster pixels
• High dynamic range and wide color gamut: better pixels (brighter and darker pixels, more colorful pixels)
• Enabled by Dolby Vision

Volume of Scripted Workflows is Growing
Not considering:
• Live Events (news/sports)
• Localized events but with wider distributions
• User-generated content

More Formats to Distribute to More Devices
Standard Definition, Broadcast/Cable, IPTV, WiFi, DVDs/Files
• More display devices: TVs, Tablets, Mobile Phones, Laptops
• More display formats: SD, HD, HDR, 4K, 8K, 10-bit, 8-bit, 4:2:2, 4:2:0
• More distribution paths: Broadcast/Cable, IPTV, WiFi, Laptops
• Integration/Compositing at receiving device

Signal Normalization
• Compositing, grading, editing depends on proper signal normalization of all source files (i.e. Still Graphics & Titling, Bugs, Tickers, Lower-Thirds, weather graphics, etc.)
• ALL content must be moved into a single color volume space.
• Transformation from different colourspaces (BT.601, BT.709, BT.2020) and transfer functions (Gamma 2.4, PQ, HLG)
• Correct signaling allows automation of conversion settings.
[Diagram: Automated logic for conversion in. Inputs: SDR BT.709, HLG BT.2100, PQ BT.2100; labels: Requires Conversion, Convert, Native; output: Normalized Compositing Timeline/Switcher/Transcoder, PQ-BT.2100]

AN9717: YCbCr to RGB Considerations (Multimedia)

YCbCr to RGB Considerations. Application Note AN9717, March 1997. Author: Keith Jack

Introduction

Many video ICs now generate 4:2:2 YCbCr video data. The YCbCr color space was developed as part of ITU-R BT.601 (formerly CCIR 601) during the development of a world-wide digital component video standard.

Some of these video ICs also generate digital RGB video data, using lookup tables to assist with the YCbCr to RGB conversion. By understanding the YCbCr to RGB conversion process, the lookup tables can be eliminated, resulting in a substantial cost savings.

This application note covers some of the considerations for converting the YCbCr data to RGB data without the use of lookup tables. The process basically consists of three steps:

Converting 4:2:2 to 4:4:4 YCbCr

Prior to converting YCbCr data to R´G´B´ data, the 4:2:2 YCbCr data must be converted to 4:4:4 YCbCr data. For the YCbCr to RGB conversion process, each Y sample must have a corresponding Cb and Cr sample.

Figure 1 illustrates the positioning of YCbCr samples for the 4:4:4 format. Each sample has a Y, a Cb, and a Cr value. Each sample is typically 8 bits (consumer applications) or 10 bits (professional editing applications) per component.

Figure 2 illustrates the positioning of YCbCr samples for the 4:2:2 format. For every two horizontal Y samples, there is one Cb and Cr sample. Each sample is typically 8 bits (consumer applications) or 10 bits (professional editing applications) per component.
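The 4:2:2 to 4:4:4 step and the conversion it feeds can be sketched without lookup tables. The following is a rough illustration only, under several assumptions that are not taken from the application note: chroma samples are co-sited with the even luma samples, missing chroma is filled by averaging horizontal neighbours, and the matrix uses the commonly quoted 8-bit BT.601 studio-range coefficients (Y in 16..235, Cb/Cr centered on 128). Function names are hypothetical.

```python
def upsample_422_to_444(chroma_row):
    """Produce one chroma value per luma sample from a 4:2:2 chroma row."""
    out = []
    for i, c in enumerate(chroma_row):
        nxt = chroma_row[i + 1] if i + 1 < len(chroma_row) else c  # repeat at edge
        out.append(c)                    # sample co-sited with an even luma sample
        out.append((c + nxt + 1) // 2)   # interpolated value for the odd luma sample
    return out


def ycbcr_to_rgb(y, cb, cr):
    """8-bit studio-range Y'CbCr to 8-bit R'G'B' (assumed BT.601 coefficients)."""
    def clamp(v):
        return max(0, min(255, int(round(v))))

    r = 1.164 * (y - 16) + 1.596 * (cr - 128)
    g = 1.164 * (y - 16) - 0.813 * (cr - 128) - 0.392 * (cb - 128)
    b = 1.164 * (y - 16) + 2.017 * (cb - 128)
    return clamp(r), clamp(g), clamp(b)


# Four luma samples share two Cb and two Cr samples in 4:2:2.
luma = [16, 100, 180, 235]
cb = upsample_422_to_444([128, 128])
cr = upsample_422_to_444([128, 128])
pixels = [ycbcr_to_rgb(y, u, v) for y, u, v in zip(luma, cb, cr)]
print(pixels[0], pixels[-1])
```

Averaging neighbours is the simplest interpolation; plain sample replication or a longer filter would also give each Y sample its own Cb and Cr value, with different trade-offs between cost and chroma sharpness.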