Visual Computing Systems Stanford CS348K, Spring 2020 Lecture

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Kulkarni Uta 2502M 11649.Pdf

IMPLEMENTATION OF A FAST INTER-PREDICTION MODE DECISION IN H.264/AVC VIDEO ENCODER by AMRUTA KIRAN KULKARNI Presented to the Faculty of the Graduate School of The University of Texas at Arlington in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE IN ELECTRICAL ENGINEERING THE UNIVERSITY OF TEXAS AT ARLINGTON May 2012 ACKNOWLEDGEMENTS First and foremost, I would like to take this opportunity to offer my gratitude to my supervisor, Dr. K.R. Rao, who invested his precious time in me and has been a constant support throughout my thesis with his patience and profound knowledge. His motivation and enthusiasm helped me in all the time of research and writing of this thesis. His advising and mentoring have helped me complete my thesis. Besides my advisor, I would like to thank the rest of my thesis committee. I am also very grateful to Dr. Dongil Han for his continuous technical advice and financial support. I would like to acknowledge my research group partner, Santosh Kumar Muniyappa, for all the valuable discussions that we had together. It helped me in building confidence and motivated towards completing the thesis. Also, I thank all other lab mates and friends who helped me get through two years of graduate school. Finally, my sincere gratitude and love go to my family. They have been my role model and have always showed me right way. Last but not the least; I would like to thank my husband Amey Mahajan for his emotional and moral support. April 20, 2012 ii ABSTRACT IMPLEMENTATION OF A FAST INTER-PREDICTION MODE DECISION IN H.264/AVC VIDEO ENCODER Amruta Kulkarni, M.S The University of Texas at Arlington, 2011 Supervising Professor: K.R. -

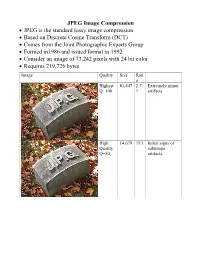

JPEG Image Compression

JPEG Image Compression JPEG is the standard lossy image compression Based on Discrete Cosine Transform (DCT) Comes from the Joint Photographic Experts Group Formed in1986 and issued format in 1992 Consider an image of 73,242 pixels with 24 bit color Requires 219,726 bytes Image Quality Size Rati o Highest 81,447 2.7: Extremely minor Q=100 1 artifacts High 14,679 15:1 Initial signs of Quality subimage Q=50 artifacts Medium 9,407 23:1 Stronger Q artifacts; loss of high frequency information Low 4,787 46:1 Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking ") Lowest 1,523 144: Extreme loss of 1 color and detail; the leaves are nearly unrecognizable JPEG How it works Begin with a color translation RGB goes to Y′CBCR Luma and two Chroma colors Y is brightness CB is B-Y CR is R-Y Downsample or Chroma Subsampling Chroma data resolutions reduced by 2 or 3 Eye is less sensitive to fine color details than to brightness Block splitting Each channel broken into 8x8 blocks no subsampling Or 16x8 most common at medium compression Or 16x16 Must fill in remaining areas of incomplete blocks This gives the values DCT - centering Center the data about 0 Range is now -128 to 127 Middle is zero Discrete cosine transform formula Apply as 2D DCT using the formula Creates a new matrix Top left (largest) is the DC coefficient constant component Gives basic hue for the block Remaining 63 are AC coefficients Discrete cosine transform The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns. -

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING Color space A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position. -

1. in the New Document Dialog Box, on the General Tab, for Type, Choose Flash Document, Then Click______

1. In the New Document dialog box, on the General tab, for type, choose Flash Document, then click____________. 1. Tab 2. Ok 3. Delete 4. Save 2. Specify the export ________ for classes in the movie. 1. Frame 2. Class 3. Loading 4. Main 3. To help manage the files in a large application, flash MX professional 2004 supports the concept of _________. 1. Files 2. Projects 3. Flash 4. Player 4. In the AppName directory, create a subdirectory named_______. 1. Source 2. Flash documents 3. Source code 4. Classes 5. In Flash, most applications are _________ and include __________ user interfaces. 1. Visual, Graphical 2. Visual, Flash 3. Graphical, Flash 4. Visual, AppName 6. Test locally by opening ________ in your web browser. 1. AppName.fla 2. AppName.html 3. AppName.swf 4. AppName 7. The AppName directory will contain everything in our project, including _________ and _____________ . 1. Source code, Final input 2. Input, Output 3. Source code, Final output 4. Source code, everything 8. In the AppName directory, create a subdirectory named_______. 1. Compiled application 2. Deploy 3. Final output 4. Source code 9. Every Flash application must include at least one ______________. 1. Flash document 2. AppName 3. Deploy 4. Source 10. In the AppName/Source directory, create a subdirectory named __________. 1. Source 2. Com 3. Some domain 4. AppName 11. In the AppName/Source/Com directory, create a sub directory named ______ 1. Some domain 2. Com 3. AppName 4. Source 12. A project is group of related _________ that can be managed via the project panel in the flash. -

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

The video codecs behind modern remote display protocols Rody Kossen 1 Rody Kossen - Consultant [email protected] @r_kossen rody-kossen-186b4b40 www.rodykossen.com 2 Agenda The basics H264 – The magician Other codecs Hardware support (NVIDIA) The basics 4 Let’s start with some terms YCbCr RGB Bit Depth YUV 5 Colors 6 7 8 9 Visible colors 10 Color Bit-Depth = Amount of different colors Used in 2 ways: > The number of bits used to indicate the color of a single pixel, for example 24 bit > Number of bits used for each color component of a single pixel (Red Green Blue), for example 8 bit Can be combined with Alpha, which describes transparency Most commonly used: > High Color – 16 bit = 5 bits per color + 1 unused bit = 32.768 colors = 2^15 > True Color – 24 bit Color + 8 bit Alpha = 8 bits per color = 16.777.216 colors > Deep color – 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors 11 Color Bit-Depth 12 Color Gamut Describes the subset of colors which can be displayed in relation to the human eye Standardized gamut: > Rec.709 (Blu-ray) > Rec.2020 (Ultra HD Blu-ray) > Adobe RGB > sRBG 13 Compression Lossy > Irreversible compression > Used to reduce data size > Examples: MP3, JPEG, MPEG-4 Lossless > Reversable compression > Examples: ZIP, PNG, FLAC or Dolby TrueHD Visually Lossless > Irreversible compression > Difference can’t be seen by the Human Eye 14 YCbCr Encoding RGB uses Red Green Blue values to describe a color YCbCr is a different way of storing color: > Y = Luma or Brightness of the Color > Cr = Chroma difference -

Discrete Cosine Transform for 8X8 Blocks with CUDA

Discrete Cosine Transform for 8x8 Blocks with CUDA Anton Obukhov [email protected] Alexander Kharlamov [email protected] October 2008 Document Change History Version Date Responsible Reason for Change 0.8 24.03.2008 Alexander Kharlamov Initial release 0.9 25.03.2008 Anton Obukhov Added algorithm-specific parts, fixed some issues 1.0 17.10.2008 Anton Obukhov Revised document structure October 2008 2 Abstract In this whitepaper the Discrete Cosine Transform (DCT) is discussed. The two-dimensional variation of the transform that operates on 8x8 blocks (DCT8x8) is widely used in image and video coding because it exhibits high signal decorrelation rates and can be easily implemented on the majority of contemporary computing architectures. The key feature of the DCT8x8 is that any pair of 8x8 blocks can be processed independently. This makes possible fully parallel implementation of DCT8x8 by definition. Most of CPU-based implementations of DCT8x8 are firmly adjusted for operating using fixed point arithmetic but still appear to be rather costly as soon as blocks are processed in the sequential order by the single ALU. Performing DCT8x8 computation on GPU using NVIDIA CUDA technology gives significant performance boost even compared to a modern CPU. The proposed approach is accompanied with the sample code “DCT8x8” in the NVIDIA CUDA SDK. October 2008 3 1. Introduction The Discrete Cosine Transform (DCT) is a Fourier-like transform, which was first proposed by Ahmed et al . (1974). While the Fourier Transform represents a signal as the mixture of sines and cosines, the Cosine Transform performs only the cosine-series expansion. -

Analysis Application for H.264 Video Encoding

IT 10 061 Examensarbete 30 hp November 2010 Analysis Application for H.264 Video Encoding Ying Wang Institutionen för informationsteknologi Department of Information Technology Abstract Analysis Application for H.264 Video Encoding Ying Wang Teknisk- naturvetenskaplig fakultet UTH-enheten A video analysis application ERANA264(Ericsson Research h.264 video Besöksadress: ANalysis Application) is developed in Ångströmlaboratoriet Lägerhyddsvägen 1 this project. Erana264 is a tool that Hus 4, Plan 0 analyzes H.264 encoded video bitstreams, extracts the encoding information and Postadress: parameters, analyzes them in different Box 536 751 21 Uppsala stages and displays the results in a user friendly way. The intention is that Telefon: such an application would be used during 018 – 471 30 03 development and testing of video codecs. Telefax: The work is implemented on top of 018 – 471 30 00 existing H.264 encoder/decoder source code (C/C++) developed at Ericsson Hemsida: Research. http://www.teknat.uu.se/student Erana264 consists of three layers. The first layer is the H.264 decoder previously developed in Ericsson Research. By using the decoder APIs, the information is extracted from the bitstream and is sent to the higher layers. The second layer visualizes the different decoding stages, uses overlay to display some macro block and picture level information and provides a set of play back functions. The third layer analyzes and presents the statistics of prominent parameters in video compression process, such as video quality measurements, motion vector distribution, picture bit distribution etc. Key words: H.264, Video compression, Bitstream analysis, Video encoding Handledare: Zhuangfei Wu and Clinton Priddle Ämnesgranskare: Cris Luengo Examinator: Anders Jansson IT10061 Tryckt av: Reprocentralen ITC Acknowledgements Fist of all, I am heartily thankful to my supervisors, Fred Wu and Clinton Priddle, whose encouragement, supervision and support from the preliminary to the concluding level enabled me to develop an understanding of the subject. -

Multiple Reference Motion Compensation: a Tutorial Introduction and Survey Contents

Foundations and TrendsR in Signal Processing Vol. 2, No. 4 (2008) 247–364 c 2009 A. Leontaris, P. C. Cosman and A. M. Tourapis DOI: 10.1561/2000000019 Multiple Reference Motion Compensation: A Tutorial Introduction and Survey By Athanasios Leontaris, Pamela C. Cosman and Alexis M. Tourapis Contents 1 Introduction 248 1.1 Motion-Compensated Prediction 249 1.2 Outline 254 2 Background, Mosaic, and Library Coding 256 2.1 Background Updating and Replenishment 257 2.2 Mosaics Generated Through Global Motion Models 261 2.3 Composite Memories 264 3 Multiple Reference Frame Motion Compensation 268 3.1 A Brief Historical Perspective 268 3.2 Advantages of Multiple Reference Frames 270 3.3 Multiple Reference Frame Prediction 271 3.4 Multiple Reference Frames in Standards 277 3.5 Interpolation for Motion Compensated Prediction 281 3.6 Weighted Prediction and Multiple References 284 3.7 Scalable and Multiple-View Coding 286 4 Multihypothesis Motion-Compensated Prediction 290 4.1 Bi-Directional Prediction and Generalized Bi-Prediction 291 4.2 Overlapped Block Motion Compensation 294 4.3 Hypothesis Selection Optimization 296 4.4 Multihypothesis Prediction in the Frequency Domain 298 4.5 Theoretical Insight 298 5 Fast Multiple-Frame Motion Estimation Algorithms 301 5.1 Multiresolution and Hierarchical Search 302 5.2 Fast Search using Mathematical Inequalities 303 5.3 Motion Information Re-Use and Motion Composition 304 5.4 Simplex and Constrained Minimization 306 5.5 Zonal and Center-biased Algorithms 307 5.6 Fractional-pixel Texture Shifts or Aliasing -

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE Optimized AV1 Inter

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE Optimized AV1 Inter Prediction using Binary classification techniques A graduate project submitted in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering by Alex Kit Romero May 2020 The graduate project of Alex Kit Romero is approved: ____________________________________ ____________ Dr. Katya Mkrtchyan Date ____________________________________ ____________ Dr. Kyle Dewey Date ____________________________________ ____________ Dr. John J. Noga, Chair Date California State University, Northridge ii Dedication This project is dedicated to all of the Computer Science professors that I have come in contact with other the years who have inspired and encouraged me to pursue a career in computer science. The words and wisdom of these professors are what pushed me to try harder and accomplish more than I ever thought possible. I would like to give a big thanks to the open source community and my fellow cohort of computer science co-workers for always being there with answers to my numerous questions and inquiries. Without their guidance and expertise, I could not have been successful. Lastly, I would like to thank my friends and family who have supported and uplifted me throughout the years. Thank you for believing in me and always telling me to never give up. iii Table of Contents Signature Page ................................................................................................................................ ii Dedication ..................................................................................................................................... -

Video Coding Standards

Module 8 Video Coding Standards Version 2 ECE IIT, Kharagpur Lesson 23 MPEG-1 standards Version 2 ECE IIT, Kharagpur Lesson objectives At the end of this lesson, the students should be able to : 1. Enlist the major video coding standards 2. State the basic objectives of MPEG-1 standard. 3. Enlist the set of constrained parameters in MPEG-1 4. Define the I- P- and B-pictures 5. Present the hierarchical data structure of MPEG-1 6. Define the macroblock modes supported by MPEG-1 23.0 Introduction In lesson 21 and lesson 22, we studied how to perform motion estimation and thereby temporally predict the video frames to exploit significant temporal redundancies present in the video sequence. The error in temporal prediction is encoded by standard transform domain techniques like the DCT, followed by quantization and entropy coding to exploit the spatial and statistical redundancies and achieve significant video compression. The video codecs therefore follow a hybrid coding structure in which DPCM is adopted in temporal domain and DCT or other transform domain techniques in spatial domain. Efforts to standardize video data exchange via storage media or via communication networks are actively in progress since early 1980s. A number of international video and audio standardization activities started within the International Telephone Consultative Committee (CCITT), followed by the International Radio Consultative Committee (CCIR), and the International Standards Organization / International Electrotechnical Commission (ISO/IEC). An experts group, known as the Motion Pictures Expects Group (MPEG) was established in 1988 in the framework of the Joint ISO/IEC Technical Committee with an objective to develop standards for coded representation of moving pictures, associated audio, and their combination for storage and retrieval of digital media. -

Image Compression GIF (Graphics Interchange Format) LZW (Lempel

GIF (Graphics Interchange Image Compression Format) • GIF (Graphics Interchange Format) • Introduced by Compuserve in 1987 (GIF87a), multiple-image in one file/application specific • PNG (Portable Network Graphics)/MNG metadata support added in 1989 (GIF89a) (Multiple-image Network Graphics) • LZW (Lempel-Ziv-Welch) compression replaced • JPEG (Joint Pictures Experts Group) earlier RLE (Run Length Encoding) B&W version – Patented by Compuserve/Unisys (has run out in US, will run out in June 2004 in Europe) • Maximum of 256 colours (from a palette) including a “transparent” colour • Optional interlacing feature • http://www.w3.org/Graphics/GIF/spec-gif89a.txt LZW (Lempel-Ziv-Welch) LZW Algorithm • Most of this method was invented and published • Dictionary initially contains all possible one byte codes by Lempel and Ziv in 1978 (LZ78 algorithm) (256 entries) • Input is taken one byte at a time to find the longest initial • A few details and improvements were later given string present in the dictionary by Welch in 1984 (variable increasing index sizes, • The code for that string is output, then the string is efficient dictionary data structure) extended with one more input byte, b • Achieves approx. 50% compression on large • A new entry is added to the table mapping the extended English texts string to the next unused code • superseded by DEFLATE and Burrows-Wheeler • The process repeats, starting from byte b transform methods • The number of bits in an output code, and hence the maximum number of entries in the table is fixed • once -

Basics of Video

Analog and Digital Video Basics Nimrod Peleg Update: May. 2006 1 Video Compression: list of topics • Analog and Digital Video Concepts • Block-Based Motion Estimation • Resolution Conversion • H.261: A Standard for VideoConferencing • MPEG-1: A Standard for CD-ROM Based App. • MPEG-2 and HDTV: All Digital TV • H.263: A Standard for VideoPhone • MPEG-4: Content-Based Description 2 1 Analog Video Signal: Raster Scan 3 Odd and Even Scan Lines 4 2 Analog Video Signal: Image line 5 Analog Video Standards • All video standards are in • Almost any color can be reproduced by mixing the 3 additive primaries: R (red) , G (green) , B (blue) • 3 main different representations: – Composite – Component or S-Video (Y/C) 6 3 Composite Video 7 Component Analog Video • Each primary is considered as a separate monochromatic video signal • Basic presentation: R G B • Other RGB based: – YIQ – YCrCb – YUV – HSI To Color Spaces Demo 8 4 Composite Video Signal Encoding the Chrominance over Luminance into one signal (saving bandwidth): – NTSC (National TV System Committee) North America, Japan – PAL (Phased Alternation Line) Europe (Including Israel) – SECAM (Systeme Electronique Color Avec Memoire) France, Russia and more 9 Analog Standards Comparison NTSC PAL/SECAM Defined 1952 1960 Scan Lines/Field 525/262.5 625/312.5 Active horiz. lines 480 576 Subcarrier Freq. 3.58MHz 4.43MHz Interlacing 2:1 2:1 Aspect ratio 4:3 4:3 Horiz. Resol.(pel/line) 720 720 Frames/Sec 29.97 25 Component Color TUV YCbCr 10 5 Analog Video Equipment • Cameras – Vidicon, Film, CCD) • Video Tapes (magnetic): – Betacam, VHS, SVHS, U-matic, 8mm ...