Hardware Implementation of a High Speed Deblocking Filter for Theh

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MPEG Video in Software: Representation, Transmission, and Playback

High Speed Networking and Multimedia Computing, IS&T/SPIE Symp. on Elec. Imaging Sci. & Tech., San Jose, CA, February 1994.

MPEG Video in Software: Representation, Transmission, and Playback. Lawrence A. Rowe, Ketan D. Patel, Brian C Smith, and Kim Liu. Computer Science Division - EECS, University of California, Berkeley, CA 94720 ([email protected])

Abstract: A software decoder for MPEG-1 video was integrated into a continuous media playback system that supports synchronized playing of audio and video data stored on a file server. The MPEG-1 video playback system supports forward and backward play at variable speeds and random positioning. Sending and receiving side heuristics are described that adapt to frame drops due to network load and the available decoding capacity of the client workstation. A series of experiments show that the playback system adds a small overhead to the stand alone software decoder and that playback is smooth when all frames or very few frames can be decoded. Between these extremes, the system behaves reasonably but can still be improved.

1.0 Introduction: As processor speed increases, real-time software decoding of compressed video is possible. We developed a portable software MPEG-1 video decoder that can play small-sized videos (e.g., 160 x 120) in real-time and medium-sized videos within a factor of two of real-time on current workstations [1]. We also developed a system to deliver and play synchronized continuous media streams (e.g., audio, video, images, animation, etc.) on a network [2]. Initially, this system supported 8kHz 8-bit audio and hardware-assisted motion JPEG compressed video streams.

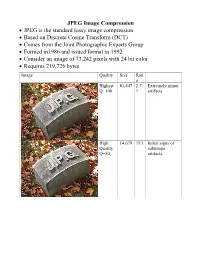

JPEG Image Compression

JPEG Image Compression

JPEG is the standard lossy image compression. It is based on the Discrete Cosine Transform (DCT) and comes from the Joint Photographic Experts Group, which formed in 1986 and issued the format in 1992.

Consider an image of 73,242 pixels with 24 bit color. It requires 219,726 bytes.

| Image quality | Size (bytes) | Ratio | Comment |
|---|---|---|---|
| Highest (Q=100) | 81,447 | 2.7:1 | Extremely minor artifacts |
| High (Q=50) | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

JPEG: How it works

Color translation: RGB goes to Y′CBCR, a luma and two chroma components. Y is brightness, CB is B-Y, CR is R-Y.

Downsampling (chroma subsampling): chroma data resolution is reduced by a factor of 2 or 3, because the eye is less sensitive to fine color details than to brightness.

Block splitting: each channel is broken into 8x8 blocks with no subsampling, or 16x8 (most common at medium compression), or 16x16. Remaining areas of incomplete blocks must be filled in. This gives the values.

DCT centering: center the data about 0. The range is now -128 to 127 and the middle is zero.

Discrete cosine transform: apply the 2D DCT using the formula, which creates a new matrix. The top left (largest) entry is the DC coefficient, the constant component, which gives the basic hue for the block. The remaining 63 are AC coefficients. The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns.
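The color translation, centering, and 2D DCT steps in the excerpt above can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own code: the function names `rgb_to_ycbcr` and `dct_8x8` are hypothetical, the conversion constants are the usual full-range JFIF ones (the slides only say that CB and CR are derived from B-Y and R-Y), and the DCT is the orthonormal DCT-II written as a direct quadruple loop for clarity rather than speed.

```python
import math

def rgb_to_ycbcr(r, g, b):
    """Full-range JFIF-style conversion for 8-bit RGB (assumed constants)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b   # scaled B - Y
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b   # scaled R - Y
    return y, cb, cr

def dct_8x8(block):
    """Orthonormal 2D DCT-II of an 8x8 block (8 rows of 8 numbers)."""
    def scale(k):
        return math.sqrt(0.5) if k == 0 else 1.0
    out = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            total = 0.0
            for x in range(8):
                for y in range(8):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[u][v] = 0.25 * scale(u) * scale(v) * total
    return out

# A flat block of luma 255, centered about zero by subtracting 128.
flat = [[255 - 128] * 8 for _ in range(8)]
coeffs = dct_8x8(flat)
# For a constant block only the DC coefficient (top left) is non-zero.
print(round(coeffs[0][0], 3))
```

With this normalization the DC coefficient of a constant block is eight times the centered sample value, and all 63 AC coefficients vanish up to floating-point error, which matches the description of the DC term as the constant component of the block.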

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING Color space A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position. -

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

The video codecs behind modern remote display protocols. Rody Kossen, Consultant. [email protected] @r_kossen rody-kossen-186b4b40 www.rodykossen.com

Agenda: The basics; H264, the magician; Other codecs; Hardware support (NVIDIA).

The basics. Let's start with some terms: YCbCr, RGB, Bit Depth, YUV. Colors. Visible colors.

Color Bit-Depth = amount of different colors. Used in 2 ways:
> The number of bits used to indicate the color of a single pixel, for example 24 bit
> Number of bits used for each color component of a single pixel (Red Green Blue), for example 8 bit
Can be combined with Alpha, which describes transparency. Most commonly used:
> High Color: 16 bit = 5 bits per color + 1 unused bit = 32,768 colors = 2^15
> True Color: 24 bit Color + 8 bit Alpha = 8 bits per color = 16,777,216 colors
> Deep color: 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors

Color Gamut: describes the subset of colors which can be displayed in relation to the human eye. Standardized gamut:
> Rec.709 (Blu-ray)
> Rec.2020 (Ultra HD Blu-ray)
> Adobe RGB
> sRGB

Compression:
Lossy
> Irreversible compression
> Used to reduce data size
> Examples: MP3, JPEG, MPEG-4
Lossless
> Reversible compression
> Examples: ZIP, PNG, FLAC or Dolby TrueHD
Visually Lossless
> Irreversible compression
> Difference can't be seen by the human eye

YCbCr Encoding: RGB uses Red Green Blue values to describe a color. YCbCr is a different way of storing color:
> Y = Luma or Brightness of the Color
> Cr = Chroma difference
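The color counts quoted for High Color, True Color and Deep Color in the slides above follow directly from the number of color bits per pixel. A small check, where the helper name `color_count` is an assumption made for this sketch and the alpha bits are excluded because they describe transparency rather than color:

```python
def color_count(color_bits_per_pixel):
    """Number of distinct colors representable with the given color bits."""
    return 2 ** color_bits_per_pixel

high_color = color_count(3 * 5)    # 5 bits per component, 1 bit unused
true_color = color_count(3 * 8)    # 8 bits per component
deep_color = color_count(3 * 10)   # 10 bits per component

print(high_color, true_color, deep_color)
```

The three values are 2^15, 2^24 and 2^30, which correspond to the 32,768, 16,777,216 and roughly 1.073 billion colors listed in the slides.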

HERO6 Black Manual

USER MANUAL 1 JOIN THE GOPRO MOVEMENT facebook.com/GoPro youtube.com/GoPro twitter.com/GoPro instagram.com/GoPro TABLE OF CONTENTS TABLE OF CONTENTS Your HERO6 Black 6 Time Lapse Mode: Settings 65 Getting Started 8 Time Lapse Mode: Advanced Settings 69 Navigating Your GoPro 17 Advanced Controls 70 Map of Modes and Settings 22 Connecting to an Audio Accessory 80 Capturing Video and Photos 24 Customizing Your GoPro 81 Settings for Your Activities 26 Important Messages 85 QuikCapture 28 Resetting Your Camera 86 Controlling Your GoPro with Your Voice 30 Mounting 87 Playing Back Your Content 34 Removing the Side Door 5 Using Your Camera with an HDTV 37 Maintenance 93 Connecting to Other Devices 39 Battery Information 94 Offloading Your Content 41 Troubleshooting 97 Video Mode: Capture Modes 45 Customer Support 99 Video Mode: Settings 47 Trademarks 99 Video Mode: Advanced Settings 55 HEVC Advance Notice 100 Photo Mode: Capture Modes 57 Regulatory Information 100 Photo Mode: Settings 59 Photo Mode: Advanced Settings 61 Time Lapse Mode: Capture Modes 63 YOUR HERO6 BLACK YOUR HERO6 BLACK 1 2 4 4 3 11 2 12 5 9 6 13 7 8 4 10 4 14 6 1. Shutter Button [ ] 6. Latch Release Button 10. Speaker 2. Camera Status Light 7. USB-C Port 11. Mode Button [ ] 3. Camera Status Screen 8. Micro HDMI Port 12. Battery 4. Microphone (cable not included) 13. microSD Card Slot 5. Side Door 9. Touch Display 14. Battery Door For information about mounting items that are included in the box, see Mounting (page 87). -

The Evolution of Premium Vascular Ultrasound

Ultrasound EPIQ 5 The evolution of premium vascular ultrasound Philips EPIQ 5 ultrasound system The new challenges in global healthcare Unprecedented advances in premium ultrasound performance can help address the strains on overburdened hospitals and healthcare systems, which are continually being challenged to provide a higher quality of care cost-effectively. The goal is quick and accurate diagnosis the first time and in less time. Premium ultrasound users today demand improved clinical information from each scan, faster and more consistent exams that are easier to perform, and allow for a high level of confidence, even for technically difficult patients. 2 Performance More confidence in your diagnoses even for your most difficult cases EPIQ 5 is the new direction for premium vascular ultrasound, featuring an exceptional level of clinical performance to meet the challenges of today’s most demanding practices. Our most powerful architecture ever applied to vascular ultrasound EPIQ performance touches all aspects of acoustic acquisition and processing, allowing you to truly experience the evolution to a more definitive modality. Carotid artery bulb Superficial varicose veins 3 The evolution in premium vascular ultrasound Supported by our family of proprietary PureWave transducers and our leading-edge Anatomical Intelligence, this platform offers our highest level of premium performance. Key trends in global ultrasound • The need for more definitive premium • A demand to automate most operator ultrasound with exceptional image functions -

A Deblocking Filter Hardware Architecture for the High Efficiency

A Deblocking Filter Hardware Architecture for the High Efficiency Video Coding Standard. Cláudio Machado Diniz1, Muhammad Shafique2, Felipe Vogel Dalcin1, Sergio Bampi1, Jörg Henkel2. 1Informatics Institute, PPGC, Federal University of Rio Grande do Sul (UFRGS), Porto Alegre, Brazil. 2Chair for Embedded Systems (CES), Karlsruhe Institute of Technology (KIT), Germany. {cmdiniz, fvdalcin, bampi}@inf.ufrgs.br; {muhammad.shafique, henkel}@kit.edu

Abstract: The new deblocking filter (DF) tool of the next generation High Efficiency Video Coding (HEVC) standard is one of the most time consuming algorithms in video decoding. In order to achieve real-time performance at low-power consumption, we developed a hardware accelerator for this filter. This paper proposes a high throughput hardware architecture for HEVC deblocking filter employing hardware reuse to accelerate filtering decision units with a low area cost. Our architecture achieves either higher or equivalent throughput (4096x2048 @ 60 fps) with 5X-6X lower area compared to state-of-the-art deblocking filter architectures.

Keywords: HEVC coding; Deblocking Filter; Hardware

... encoder configuration: (i) Random Access (RA) configuration with Group of Pictures (GOP) equal to 8; (ii) Intra period for each video sequence is defined as in [8] depending upon the specific frame rate of the video sequence, e.g. 24, 30, 50 or 60 frames per second (fps); (iii) each sequence is encoded with four different Quantization Parameter (QP) values QP={22,27,32,37} as defined in the HEVC Common Test Conditions [8]. Fig. 1 shows the accumulated execution time (in % of total decoding time) of all functions included in C++ class TComLoopFilter that implement the DF in HEVC decoder software. DF contributes up to 5%-18% of the total decoding time, depending on video sequence and QP.

An Efficient Pipeline Architecture for Deblocking Filter in H.264/AVC

IEICE TRANS. INF. & SYST., VOL.E90–D, NO.1, JANUARY 2007, p. 99. PAPER, Special Section on Advanced Image Technology. An Efficient Pipeline Architecture for Deblocking Filter in H.264/AVC. Chung-Ming CHEN†a), Member and Chung-Ho CHEN†b), Nonmember

SUMMARY: In this paper, we study and analyze the computational complexity of deblocking filter in H.264/AVC baseline decoder based on SimpleScalar/ARM simulator. The simulation result shows that the memory reference, content activity check operations, and filter operations are known to be very time consuming in the decoder of this new video coding standard. In order to improve overall system performance, we propose a novel processing order with efficient VLSI architecture which simultaneously processes the horizontal filtering of vertical edge and vertical filtering of horizontal edge. As a result, the memory performance of the proposed architecture is improved by four times when compared to the software implementation. Moreover, the system performance of our design significantly outperforms the previous proposals.

key words: deblocking filter, H.264/AVC, video coding

1. Introduction: Video compression is a critical component in today's multimedia systems. The limited transmission bandwidth or storage capacity for applications such as DVD, digital television, or internet video streaming emphasizes the demand for higher video compression rates. To achieve this demand, the new video coding standard Recommendation H.264 of

Fig. 1 Block diagram of H.264/AVC.

As our experiment result indicates, the operation of the deblocking filter is the most time consuming part of the H.264/AVC video decoder. The block-based structure of the H.264/AVC architecture produces artifacts known as blocking artifacts.

Variable Block-Based Deblocking Filter for H.264/AVC

VARIABLE BLOCK-BASED DEBLOCKING FILTER FOR H.264/AVC. Seung-Ho Shin, Young-Joon Chai, Kyu-Sik Jang, Tae-Yong Kim. GSAIM, Chung-Ang University, Seoul, Korea

ABSTRACT: The deblocking filter in H.264/AVC is used on low-end terminals to a limited extent due to computational complexity. In this paper, Variable block-based deblocking filter that efficiently eliminates the blocking artifacts occurred during the compression of low-bit rates digital motion pictures is suggested. Blocking artifacts are plaid images appear on the block boundaries due to DCT and quantization. In the method proposed in this paper, the image's spatial correlational characteristics are extracted by using the variable block information of motion compensation; the filtering is divided into 4 modes according to the characteristics, and adaptive filtering is executed in the divided regions. The proposed deblocking method eliminates the blocking artifacts, prevents excessive

... macroblocks up to 4x4 blocks, it is possible to decrease artifacts on the boundaries between 4x4 blocks. In the process of decreasing the blocking artifacts, however, the actual images' edges may erroneously be blurred. And it may not be used on low-end terminals due to complex computation and large memory capacity. Despite such shortcomings, however, the deblocking filter can be said to be the most essential technology in enhancing the subjective image quality. The general opinion of the subjective image quality test proves that there is distinguishing difference in the image qualities with and without the deblocking filter used [6].

In this paper, we suggest a block-based deblocking filter by using the variable block information of the motion compensation.

Analysis of Video Compression Using DCT

Imperial Journal of Interdisciplinary Research (IJIR), Vol-3, Issue-3, 2017, ISSN: 2454-1362, http://www.onlinejournal.in. Analysis of Video Compression using DCT. Pranavi Patil1, Sanskruti Patil2 & Harshala Shelke3 & Prof. Anand Sankhe4. 1,2,3 Bachelor of Engineering in computer, Mumbai University. 4 Professor, Department of Computer Science & Engineering, Mumbai University, Maharashtra, India

Abstract: Video is most useful media to represent the great information. Videos, images are the most essential approaches to represent data. Now this year, all the communications are done on such media. The central problem for the media is its large size. Also this large data contains a lot of redundant information. The huge usage of digital multimedia leads to inoperable growth of data flow through various mediums. Nowadays, to solve the problem of bandwidth requirement in communication, multimedia data is a big challenge. The main concept in the present paper is about the Discrete Cosine Transform (DCT) algorithm for compressing video's size.

Keywords: Compression, DCT, PSNR, MSE, Redundancy.

... where in order to get the best possible compression efficiency, it considers certain level of distortion.

ii. Lossless Compression: The lossless compression technique is generally focused on decreasing the compressed output video's bit rate without any alteration of the frame. The decompressed bit-stream matches the original bit-stream.

2. Video Compression: The main unit behind the video compression approach is the video encoder found at the transmitter side, which encodes the video that is to be transmitted in form of bits, and also the video decoder positioned at the receiver side, which rebuilds the video in its original form based on the bit sequence

Image Compression GIF (Graphics Interchange Format) LZW (Lempel

Image Compression
• GIF (Graphics Interchange Format)
• PNG (Portable Network Graphics)/MNG (Multiple-image Network Graphics)
• JPEG (Joint Photographic Experts Group)

GIF (Graphics Interchange Format)
• Introduced by Compuserve in 1987 (GIF87a), multiple-image in one file/application specific metadata support added in 1989 (GIF89a)
• LZW (Lempel-Ziv-Welch) compression replaced earlier RLE (Run Length Encoding) B&W version
  – Patented by Compuserve/Unisys (has run out in US, will run out in June 2004 in Europe)
• Maximum of 256 colours (from a palette) including a "transparent" colour
• Optional interlacing feature
• http://www.w3.org/Graphics/GIF/spec-gif89a.txt

LZW (Lempel-Ziv-Welch)
• Most of this method was invented and published by Lempel and Ziv in 1978 (LZ78 algorithm)
• A few details and improvements were later given by Welch in 1984 (variable increasing index sizes, efficient dictionary data structure)
• Achieves approx. 50% compression on large English texts
• Superseded by DEFLATE and Burrows-Wheeler transform methods

LZW Algorithm
• Dictionary initially contains all possible one byte codes (256 entries)
• Input is taken one byte at a time to find the longest initial string present in the dictionary
• The code for that string is output, then the string is extended with one more input byte, b
• A new entry is added to the table mapping the extended string to the next unused code
• The process repeats, starting from byte b
• The number of bits in an output code, and hence the maximum number of entries in the table is fixed
• once
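The LZW steps listed in the slides above can be sketched as follows. This is a simplified illustration under stated assumptions: the function names `lzw_compress` and `lzw_decompress` are hypothetical, the dictionary is allowed to grow without limit (the slides note that real implementations fix the code width and therefore the table size), codes are returned as a plain list of integers rather than packed into bits, and the decoder is added here only to show that the output is reversible.

```python
def lzw_compress(data):
    """Return the list of LZW codes for a bytes object."""
    # Dictionary initially contains all 256 one-byte strings.
    dictionary = {bytes([i]): i for i in range(256)}
    next_code = 256
    current = b""
    codes = []
    for value in data:
        candidate = current + bytes([value])
        if candidate in dictionary:
            current = candidate            # keep extending the match
        else:
            codes.append(dictionary[current])
            dictionary[candidate] = next_code   # next unused code
            next_code += 1
            current = bytes([value])       # restart from byte b
    if current:
        codes.append(dictionary[current])
    return codes

def lzw_decompress(codes):
    """Rebuild the original bytes from a list of LZW codes."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:
            # Code refers to the entry the encoder created in this very step.
            entry = previous + previous[:1]
        else:
            raise ValueError("invalid LZW code")
        out.append(entry)
        dictionary[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(out)

codes = lzw_compress(b"ABABABA")
print(codes)
print(lzw_decompress(codes))
```

For the input `ABABABA` the encoder emits four codes for seven bytes: the two literals, then the codes of the dictionary entries for `AB` and `ABA` that it built along the way.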

Deblocking Scheme for JPEG-Coded Images Using Sparse Representation and All Phase Biorthogonal Transform

Journal of Communications Vol. 11, No. 12, December 2016. Deblocking Scheme for JPEG-Coded Images Using Sparse Representation and All Phase Biorthogonal Transform. Liping Wang, Chengyou Wang, and Xiao Zhou. School of Mechanical, Electrical and Information Engineering, Shandong University, Weihai 264209, China. Email: [email protected]; {wangchengyou, zhouxiao}@sdu.edu.cn

Abstract: For compressed images, a major drawback is that those images will exhibit severe blocking artifacts at very low bit rates due to adopting Block-Based Discrete Cosine Transform (BDCT). In this paper, a novel deblocking scheme using sparse representation is proposed. A new transform called All Phase Biorthogonal Transform (APBT) was proposed in recent years. APBT has the similar energy compaction property with Discrete Cosine Transform (DCT). It has very good column properties, high frequency attenuation characteristics, low frequency energy aggregation, and so on. In this paper, we use it to generate the over-completed dictionary for sparse coding. For Orthogonal Matching Pursuit (OMP), we select an adaptive residual threshold by combining blind image blocking assessment. Experimental results show that this new scheme is effective in image deblocking and can avoid over-blurring of edges and textures.

The image deblocking algorithm aims to alleviate blocking artifacts and improve visual quality of compressed images. Deblocking methods can be mainly divided into two categories: in-loop processing methods and post-processing methods. The in-loop deblocking processing methods not only avoid the propagation of blocking artifacts between adjacent frames, but also can enhance coding efficiency. For example, H.264/AVC [2] adopts the in-loop deblocking processing. Some researchers have designed the in-loop deblocking filter [4]. Numerous experimental results manifest that the in-loop deblocking filter can provide both objective and subjective improvement compared with the compressed