Color Space Adaptation for Video Coding – Adrià Arrufat 1


Recommended publications


Color Models

Color Models. Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
  – Additive (RGB) or subtractive (CMYK)
  – Separation of luminance and chromaticity
  – Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l’Éclairage).
• Human perception based standard (1931), established with color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to luminous efficiency characteristic of human eye

CIE xyY Space
• Irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color of the varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of color representation of a display device

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is ‘bloody red’ in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|---|---|---|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article

An Improved SPSIM Index for Image Quality Assessment

Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus

Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.)
* Correspondence: [email protected]; Tel.: +48-32-2371066

Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.

Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures

Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518

1. Introduction

Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

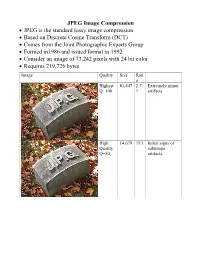

JPEG Image Compression

JPEG Image Compression
• JPEG is the standard lossy image compression
• Based on Discrete Cosine Transform (DCT)
• Comes from the Joint Photographic Experts Group
• Formed in 1986 and issued format in 1992

Consider an image of 73,242 pixels with 24 bit color. Requires 219,726 bytes.

| Image quality | Size (bytes) | Ratio | Comment |
|---|---|---|---|
| Highest (Q=100) | 81,447 | 2.7:1 | Extremely minor artifacts |
| High (Q=50) | 14,679 | 15:1 | Initial signs of subimage artifacts |
| Medium | 9,407 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low | 4,787 | 46:1 | Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking") |
| Lowest | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

JPEG: How it works

Begin with a color translation
• RGB goes to Y′CBCR: luma and two chroma colors
• Y is brightness
• CB is B-Y
• CR is R-Y

Downsample or Chroma Subsampling
• Chroma data resolutions reduced by 2 or 3
• Eye is less sensitive to fine color details than to brightness

Block splitting
• Each channel broken into 8x8 blocks (no subsampling)
• Or 16x8 (most common at medium compression)
• Or 16x16
• Must fill in remaining areas of incomplete blocks
• This gives the values

DCT - centering
• Center the data about 0
• Range is now -128 to 127
• Middle is zero

Discrete cosine transform formula
• Apply as 2D DCT using the formula
• Creates a new matrix
• Top left (largest) is the DC coefficient, the constant component
• Gives basic hue for the block
• Remaining 63 are AC coefficients

Discrete cosine transform
• The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns.
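The arithmetic and the centering/DCT steps in the excerpt above can be checked with a small sketch. It recomputes the raw size and the compression ratios from the quoted byte counts, then applies the level shift and a 2D DCT to one 8×8 block. The function names are illustrative, and the orthonormal DCT-II normalization is an assumption, since the excerpt mentions "the formula" without reproducing it.

```python
import math

# Figures quoted in the excerpt: 73,242 pixels at 24 bits per pixel.
PIXELS = 73_242
RAW_BYTES = PIXELS * 24 // 8

# Compressed sizes in bytes, as listed in the excerpt's quality table.
COMPRESSED_SIZES = {
    "Highest": 81_447,
    "High": 14_679,
    "Medium": 9_407,
    "Low": 4_787,
    "Lowest": 1_523,
}


def compression_ratio(compressed_bytes: int) -> float:
    """Ratio of the uncompressed size to a compressed size."""
    return RAW_BYTES / compressed_bytes


def level_shift(block):
    """Center 8-bit samples about zero (0..255 becomes -128..127)."""
    return [[value - 128 for value in row] for row in block]


def dct_2d(block):
    """Naive 2D DCT-II of a square block (assumed orthonormal scaling)."""
    n = len(block)

    def alpha(k):
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += (
                        block[x][y]
                        * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                        * math.cos((2 * y + 1) * v * math.pi / (2 * n))
                    )
            out[u][v] = alpha(u) * alpha(v) * total
    return out


if __name__ == "__main__":
    for name, size in COMPRESSED_SIZES.items():
        print(f"{name}: {compression_ratio(size):.1f}:1")
    # A flat block has only a DC (top-left) coefficient after the transform.
    flat = [[200] * 8 for _ in range(8)]
    coeffs = dct_2d(level_shift(flat))
    print(round(coeffs[0][0], 6))
```

A flat block is used deliberately: all of its energy lands in the top-left coefficient, which illustrates why the excerpt calls the DC coefficient the constant component.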

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space

A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept

Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Robust Pulse-Rate from Chrominance-Based Rppg Gerard De Haan and Vincent Jeanne

IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, VOL. ?, NO. ?, MONTH 2013

Robust pulse-rate from chrominance-based rPPG

Gerard de Haan and Vincent Jeanne

Abstract: Remote photoplethysmography (rPPG) enables contactless monitoring of the blood volume pulse using a regular camera. Recent research focused on improved motion robustness, but the proposed blind source separation techniques (BSS) in RGB color space show limited success. We present an analysis of the motion problem, from which far superior chrominance-based methods emerge. For a population of 117 stationary subjects, we show our methods to perform in 92% good agreement (±1.96σ) with contact PPG, with RMSE and standard deviation both a factor of two better than BSS-based methods. In a fitness setting using a simple spectral peak detector, the obtained pulse-rate for modest motion (bike) improves from 79% to 98% correct, and for vigorous motion (stepping) from less than 11% to more than 48% correct. We expect the greatly improved robustness to considerably widen the application scope of the technology.

[...] PPG signal into two independent signals built as a linear combination of two color channels [8]. One combination approximated the clean pulse-signal, the other the motion artifact, and the energy in the pulse-signal was minimized to optimize the combination. Poh et al. extended this work proposing a linear combination of all three color channels defining three independent signals with Independent Component Analysis (ICA) using non-Gaussianity as the criterion for independence [5]. Lewandowska et al. varied this concept defining three independent linear combinations of the color channels with Principal Component Analysis (PCA) [6]. With both Blind Source Separation (BSS) techniques, the [...]

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

The video codecs behind modern remote display protocols

Rody Kossen, Consultant. [email protected] @r_kossen rody-kossen-186b4b40 www.rodykossen.com

Agenda
• The basics
• H264 – The magician
• Other codecs
• Hardware support (NVIDIA)

The basics

Let’s start with some terms
• YCbCr
• RGB
• Bit Depth
• YUV

Colors

Visible colors

Color Bit-Depth = Amount of different colors
• Used in 2 ways:
  – The number of bits used to indicate the color of a single pixel, for example 24 bit
  – Number of bits used for each color component of a single pixel (Red Green Blue), for example 8 bit
• Can be combined with Alpha, which describes transparency
• Most commonly used:
  – High Color – 16 bit = 5 bits per color + 1 unused bit = 32,768 colors = 2^15
  – True Color – 24 bit Color + 8 bit Alpha = 8 bits per color = 16,777,216 colors
  – Deep color – 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors

Color Gamut
• Describes the subset of colors which can be displayed in relation to the human eye
• Standardized gamut:
  – Rec.709 (Blu-ray)
  – Rec.2020 (Ultra HD Blu-ray)
  – Adobe RGB
  – sRGB

Compression
• Lossy
  – Irreversible compression
  – Used to reduce data size
  – Examples: MP3, JPEG, MPEG-4
• Lossless
  – Reversible compression
  – Examples: ZIP, PNG, FLAC or Dolby TrueHD
• Visually Lossless
  – Irreversible compression
  – Difference can’t be seen by the human eye

YCbCr Encoding
• RGB uses Red Green Blue values to describe a color
• YCbCr is a different way of storing color:
  – Y = Luma or Brightness of the Color
  – Cr = Chroma difference
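The color counts quoted in the bit-depth slide above follow directly from the number of bits per color component. As a minimal sketch, assuming three color channels and ignoring alpha and unused bits (the function name is illustrative, not from the slides):

```python
def colors_for_bits(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given per-channel bit depth."""
    return 2 ** (bits_per_channel * channels)


if __name__ == "__main__":
    # High Color (5 bits), True Color (8 bits), Deep Color (10 bits).
    for label, bits in [("High Color", 5), ("True Color", 8), ("Deep Color", 10)]:
        print(f"{label}: {colors_for_bits(bits):,} colors")
```

Alpha and padding bits are left out on purpose: they change the storage size per pixel but not the number of representable colors.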

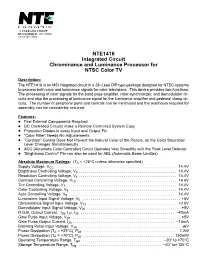

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

Description: The NTE1416 is an MSI integrated circuit in a 28-Lead DIP type package designed for NTSC systems to process both color and luminance signals for color televisions. This device provides two functions: the processing of color signals for the band pass amplifier, color synchronizer, and demodulator circuits, and also the processing of the luminance signal for the luminance amplifier and pedestal clamp circuits. The number of peripheral parts and controls can be minimized and the manhours required for assembly can be considerably reduced.

Features:
• Few External Components Required
• DC Controlled Circuits make a Remote Controlled System Easy
• Protection Diodes in every Input and Output Pin
• “Color Killer” Needs No Adjustments
• “Contrast” Control Does Not Prevent the Natural Color of the Picture, as the Color Saturation Level Changes Simultaneously
• ACC (Automatic Color Controller) Circuit Operates Very Smoothly with the Peak Level Detector
• “Brightness Control” Pin can also be used for ABL (Automatic Beam Limiter)

Absolute Maximum Ratings: (TA = +25°C unless otherwise specified)
• Supply Voltage, VCC: 14.4V
• Brightness Controlling Voltage, V3: 14.4V
• Resolution Controlling Voltage, V4: 14.4V
• Contrast Controlling Voltage, V10: 14.4V
• Tint Controlling Voltage, V7: 14.4V
• Color Controlling Voltage, V9: 14.4V
• Auto Controlling Voltage, V8: 14.4V
• Luminance Input Signal Voltage, V5: +5V
• Chrominance Signal Input Voltage, V13: +2.5V
• Demodulator Input Signal Voltage, V25: +5V
• R.G.B. Output Current, I26, I27, I28: -40mA
• Gate Pulse Input Voltage, V20: +5V
• Gate Pulse Output Current, I20: …

Tracking and Automation of Images by Colour Based

Vol 11, Issue 8, August 2020. ISSN NO: 0377-9254

TRACKING AND AUTOMATION OF IMAGES BY COLOUR BASED PROCESSING

N Alekhya 1, K Venkanna Naidu 2 and M. SunilKumar 3

1 PG student, D.N.R College of Engineering, ECE, JNTUK, INDIA
2 Associate Professor, D.N.R College of Engineering, ECE, JNTUK, INDIA
3 Assistant Professor, Sir CRR College of Engineering, EEE, JNTUK, INDIA

[email protected], [email protected], [email protected]

Abstract: Nowadays all application sectors are mostly moving toward automation processing and sensing, for example image processing in the medical field, in industrial process lines, object detection and ranging applications, satellite imaging processing, military imaging, etc. In each and every application area the raw images are to be captured and processed for human visual inspection or digital image processing systems in automation applications. In this proposed system the video is converted into frames and divided into sub-bands, the background is subtracted, and then the object is identified and tracked in the frames from the video. This work presents a [...]

[...] image analysis involves maneuvering the image data to conclude exactly the information compulsory to help answer a computer imaging problem.

Digital image processing methods stem from two principal application areas: improvement of pictorial information for human interpretation, and processing of image data for tasks such as storage, transmission, and extraction of pictorial information.

The remaining paper is structured as follows. Section 2 deals with the existing method of Image Processing. Section 3 deals with the proposed method of Image Processing. Section 4 deals with the results and discussions.

Video Compression for New Formats

YEAR 2016. THESIS / UNIVERSITÉ DE RENNES 1, under the seal of the Université Bretagne Loire, for the degree of DOCTOR OF THE UNIVERSITÉ DE RENNES 1. Specialization: Signal Processing and Telecommunications. Ecole doctorale Matisse. Presented by Philippe Bordes. Prepared at research unit UMR 6074 - INRIA (Institut National Recherche en Informatique et Automatique).

Adapting Video Compression to new formats

Thesis defended in Rennes on 18 January 2016 before a jury composed of:
• Thomas SIKORA, Professor [Tech. Universität Berlin] / reviewer
• François-Xavier COUDOUX, Professor [Université de Valenciennes] / reviewer
• Olivier DEFORGES, Professor [Institut d'Electronique et de Télécommunications de Rennes] / examiner
• Marco CAGNAZZO, Professor [Telecom Paris Tech] / examiner
• Philippe GUILLOTEL, Distinguished Scientist [Technicolor] / examiner
• Christine GUILLEMOT, Research Director [INRIA Bretagne et Atlantique] / thesis supervisor

Adapting Video Compression to new formats

Summary in French: Adapting Video Compression to New Formats

Introduction

The field of video compression stands at the crossroads of the advent of new display technologies, the arrival of new connected devices, and the deployment of new services and applications (video on demand, networked gaming, YouTube and amateur video…). Some of these technologies are not really new, but they have recently progressed to a level of maturity such that they now represent a considerable market, while gradually changing our habits and the way we consume video in general. Display technologies have evolved rapidly from plasma, LED, LCD, and LED-backlit LCD to, now, OLED and Quantum Dots. This evolution has made it possible to increase display brightness and to widen the range of displayable colors.

Demosaicking: Color Filter Array Interpolation [Exploring the Imaging

[Bahadir K. Gunturk, John Glotzbach, Yucel Altunbasak, Ronald W. Schafer, and Russel M. Mersereau]

Demosaicking: Color Filter Array Interpolation

[Exploring the imaging process and the correlations among three color planes in single-chip digital cameras]

Digital cameras have become popular, and many people are choosing to take their pictures with digital cameras instead of film cameras. When a digital image is recorded, the camera needs to perform a significant amount of processing to provide the user with a viewable image. This processing includes correction for sensor nonlinearities and nonuniformities, white balance adjustment, compression, and more. An important part of this image processing chain is color filter array (CFA) interpolation or demosaicking.

A color image requires at least three color samples at each pixel location. Computer images often use red (R), green (G), and blue (B). A camera would need three separate sensors to completely measure the image. In a three-chip color camera, the light entering the camera is split and projected onto each spectral sensor. Each sensor requires its proper driving electronics, and the sensors have to be registered precisely. These additional requirements add a large expense to the system. Thus, many cameras use a single sensor covered with a CFA. The CFA allows only one color to be measured at each pixel. This means that the camera must estimate the missing two color values at each pixel. This estimation process is known as demosaicking. Several patterns exist for the filter array.

22926-22936 Page 22926 the Color Features Based on Computer Vision

Umapathy Eaganathan* et al. / International Journal of Pharmacy & Technology. ISSN: 0975-766X, CODEN: IJPTFI. Available Online through www.ijptonline.com. Research Article.

ANALYSIS OF VARIOUS COLOR MODELS IN MEDICAL IMAGE PROCESSING

Umapathy Eaganathan*, Faculty in Computing, Asia Pacific University, Bukit Jalil, Kuala Lumpur, 57000, Malaysia.

Received on: 20.10.2016. Accepted on: 25.11.2016.

Abstract

Color is a visual element that human beings perceive in everyday activities. Inspecting color manually may be a tedious job for a human being, whereas current digital systems and technologies help to inspect even the color of individual pixels accurately for given objects. Researchers around the world have carried out various studies to identify diseased spots, damaged spots, pattern recognition, and graphical identification. Color plays a vital role in image processing and geographical information systems. This article explains different color models such as RGB, YCbCr, HSV, HSI, and CIELab in medical image processing, and it additionally explores how these color models can be derived from the basic color model RGB. Further, this study also discusses the suitable color models that can be used in medical image processing.

Keywords: Image Processing, Medical Images, RGB Color, Image Segmentation, Pattern Recognition, Color Models

1. Introduction

Nowadays in the medical health system, images play a vital role throughout the process of medical disease management, intensive treatment, surgical procedures and clinical research. In medical image processing such as neuro-imaging, bio-imaging, and medical visualisation, the imaging modalities are based on digital images and may vary depending on the diagnosis stage. The main improvement of medical imaging systems is that they work faster than the traditional disease identification system.

Improved Television Systems: NTSC and Beyond

Improved Television Systems: NTSC and Beyond

By William F. Schreiber

After a discussion of the limits to received image quality in NTSC and a review of various proposals for improvement, it is concluded that the current system is capable of significant increase in spatial and temporal resolution, and that most of these improvements can be made in a compatible manner. Newly designed systems, for the sake of maximum utilization of channel capacity, should use many of the techniques proposed for improving NTSC, such as high-rate cameras and displays, but should use the component, rather than composite, technique for color multiplexing. A preference is expressed for noncompatible new systems, both for increased design flexibility and on the basis of likely consumer behavior. Some sample systems are described that achieve very high quality in the present 6-MHz channels, full "HDTV" at the CCIR rate of 216 Mbits/sec, or "better-than-35mm" at about 500 Mbits/sec. Possibilities for even higher efficiency using motion compensation are described.

[...] excellent results. Demonstrations have been made showing good motion rendition with very few frames per second [2], elimination of interline flicker by up-conversion [3], and improved separation of luminance and chrominance by means of comb filters [4]. No doubt the most important element in creating interest in this subject was the demonstration of the Japanese high-definition television system in 1981, a development that took more than ten years [5]. Orchestrated by NHK, with contributions from many Japanese companies, images have been produced that are comparable to 35mm theater quality.