Video Compression for New Formats

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Package 'Colorscience'

Package ‘colorscience’ October 29, 2019 Type Package Title Color Science Methods and Data Version 1.0.8 Encoding UTF-8 Date 2019-10-29 Maintainer Glenn Davis <[email protected]> Description Methods and data for color science - color conversions by observer, illuminant, and gamma. Color matching functions and chromaticity diagrams. Color indices, color differences, and spectral data conversion/analysis. License GPL (>= 3) Depends R (>= 2.10), Hmisc, pracma, sp Enhances png LazyData yes Author Jose Gama [aut], Glenn Davis [aut, cre] Repository CRAN NeedsCompilation no Date/Publication 2019-10-29 18:40:02 UTC R topics documented: ASTM.D1925.YellownessIndex . .5 ASTM.E313.Whiteness . .6 ASTM.E313.YellownessIndex . .7 Berger59.Whiteness . .7 BVR2XYZ . .8 cccie31 . .9 cccie64 . 10 CCT2XYZ . 11 CentralsISCCNBS . 11 CheckColorLookup . 12 1 2 R topics documented: ChromaticAdaptation . 13 chromaticity.diagram . 14 chromaticity.diagram.color . 14 CIE.Whiteness . 15 CIE1931xy2CIE1960uv . 16 CIE1931xy2CIE1976uv . 17 CIE1931XYZ2CIE1931xyz . 18 CIE1931XYZ2CIE1960uv . 19 CIE1931XYZ2CIE1976uv . 20 CIE1960UCS2CIE1964 . 21 CIE1960UCS2xy . 22 CIE1976chroma . 23 CIE1976hueangle . 23 CIE1976uv2CIE1931xy . 24 CIE1976uv2CIE1960uv . 25 CIE1976uvSaturation . 26 CIELabtoDIN99 . 27 CIEluminanceY2NCSblackness . 28 CIETint . 28 ciexyz31 . 29 ciexyz64 . 30 CMY2CMYK . 31 CMY2RGB . 32 CMYK2CMY . 32 ColorBlockFromMunsell . 33 compuphaseDifferenceRGB . 34 conversionIlluminance . 35 conversionLuminance . 36 createIsoTempLinesTable . 37 daylightcomponents . 38 deltaE1976 -

Tracking and Automation of Images by Colour Based

Vol 11, Issue 8,August/ 2020 ISSN NO: 0377-9254 TRACKING AND AUTOMATION OF IMAGES BY COLOUR BASED PROCESSING N Alekhya 1, K Venkanna Naidu 2 and M.SunilKumar 3 1PG student, D.N.R College of Engineering, ECE, JNTUK, INDIA 2 Associate Professor D.N.R College of Engineering, ECE, JNTUK, INDIA 3Assistant Professor Sir CRR College of Engineering , EEE, JNTUK, INDIA [email protected], [email protected] ,[email protected] Abstract— Now a day all application sectors are mostly image analysis involves maneuver the moving for the automation processing and image data to conclude exactly the information sensing . for example image processing in compulsory to help to answer a computer imaging medical field ,in industrial process lines , object problem. detection and Ranging application, satellite Digital image processing methods stems from two imaging Processing ,Military imaging etc, In principal application areas: improvement of each and every application area the raw images pictorial information for human interpretation, and are to be captured and to be processed for processing of image data for tasks such as storage, human visual inspection or digital image transmission, and extraction of pictorial processing systems. Automation applications In information this proposed system the video is converted into The remaining paper is structured as follows. frames and then it is get divided into sub bands Section 2 deals with the existing method of Image and then background is get subtracted, then the Processing. Section 3 deals with the proposed object is get identified and then it is tracked in method of Image Processing. Section 4 deals the the framed from the video .This work presents a results and discussions. -

SAF7115 Multistandard Video Decoder with Super-Adaptive Comb Filter

SAF7115 Multistandard video decoder with super-adaptive comb filter, scaler and VBI data read-back via I2C-bus Rev. 01 — 15 October 2008 Product data sheet 1. General description The SAF7115 is a video capture device that, due to its improved comb filter performance and 10-bit video output capabilities, is suitable for various applications such as In-car video reception, In-car entertainment or In-car navigation. The SAF7115 is a combination of a two channel analog preprocessing circuit and a high performance scaler. The two channel analog preprocessing circuit includes source-selection, an anti-aliasing filter and Analog-to-Digital Converter (ADC) per channel, an automatic clamp and gain control, two Clock Generation Circuits (CGC1 and CGC2) and a digital multi standard decoder that contains two-dimensional chrominance/luminance separation utilizing an improved adaptive comb filter. The high performance scaler has variable horizontal and vertical up and down scaling and a brightness/contrast/saturation control circuit. The decoder is based on the principle of line-locked clock decoding and is able to decode the color of PAL, SECAM and NTSC signals into ITU-601 compatible color component values. The SAF7115 accepts CVBS or S-video (Y/C) from TV or VCR sources as analog inputs, including weak and distorted signals. The expansion port (X-port) for digital video (bi-directional half duplex, D1 compatible) can be used to either output unscaled video using 10-bit or 8-bit dithered resolution or to connect to other external digital video sources for reuse of the SAF7115 scaler features. The enhanced image port (I-port) of the SAF7115 supports 8-bit and 16-bit wide output data with auxiliary reference data for interfacing, e.g. -

22926-22936 Page 22926 the Color Features Based on Computer Vision

Umapathy Eaganathan* et al. /International Journal of Pharmacy & Technology ISSN: 0975-766X CODEN: IJPTFI Available Online through Research Article www.ijptonline.com ANALYSIS OF VARIOUS COLOR MODELS IN MEDICAL IMAGE PROCESSING Umapathy Eaganathan* Faculty in Computing, Asia Pacific University, Bukit Jalil, Kuala Lumpur, 57000, Malaysia. Received on: 20.10.2016 Accepted on: 25.11.2016 Abstract Color is a visual element for humanbeing to perceive in everyday activities. Inspecting the color manually may be a tedious job to the humanbeing whereas the current digital systems and technologies help to inspect even the pixels color accurately for the given objects. Various researches carried out by the researchers over the world to identify diseased spots, damaged spots, pattern recognition, and graphical identification. Color plays a vital role in image processing and geographical information systems. This article explains about different color models such as RGB, YCbCr, HSV, HSI, CIELab in medical image processing and it additionally explores how these color models can be derived from the basic color model RGB. Further this study will also discuss the suitable color models that can be used in medical image processing. Keywords : Image Processing, Medical Images, RGB Color, Image Segmentation, Pattern Recognition, Color Models 1. Introduction Nowadays in medical health system the images playing a vital role throughout the process of medical disease management, intensive treatment, surgical procedures and clinical researches. In medical image processing such as neuro-imaging, bio-imaging, medical visualisations the imaging modalities based on digital images and may vary depends upon the diagnosis stage. Main improvements of medical imaging systems working faster than the traditional disease identification system. -

AN9717: Ycbcr to RGB Considerations (Multimedia)

YCbCr to RGB Considerations TM Application Note March 1997 AN9717 Author: Keith Jack Introduction Converting 4:2:2 to 4:4:4 YCbCr Many video ICs now generate 4:2:2 YCbCr video data. The Prior to converting YCbCr data to R´G´B´ data, the 4:2:2 YCbCr color space was developed as part of ITU-R BT.601 YCbCr data must be converted to 4:4:4 YCbCr data. For the (formerly CCIR 601) during the development of a world-wide YCbCr to RGB conversion process, each Y sample must digital component video standard. have a corresponding Cb and Cr sample. Some of these video ICs also generate digital RGB video Figure 1 illustrates the positioning of YCbCr samples for the data, using lookup tables to assist with the YCbCr to RGB 4:4:4 format. Each sample has a Y, a Cb, and a Cr value. conversion. By understanding the YCbCr to RGB conversion Each sample is typically 8 bits (consumer applications) or 10 process, the lookup tables can be eliminated, resulting in a bits (professional editing applications) per component. substantial cost savings. Figure 2 illustrates the positioning of YCbCr samples for the This application note covers some of the considerations for 4:2:2 format. For every two horizontal Y samples, there is converting the YCbCr data to RGB data without the use of one Cb and Cr sample. Each sample is typically 8 bits (con- lookup tables. The process basically consists of three steps: sumer applications) or 10 bits (professional editing applica- tions) per component. -

Application No. AU 2020201212 A1 (19) AUSTRALIAN PATENT OFFICE

(12) STANDARD PATENT APPLICATION (11) Application No. AU 2020201212 A1 (19) AUSTRALIAN PATENT OFFICE (54) Title Adaptive color space transform coding (51) International Patent Classification(s) H04N 19/122 (2014.01) H04N 19/196 (2014.01) H04N 19/186 (2014.01) H04N 19/46 (2014.01) (21) Application No: 2020201212 (22) Date of Filing: 2020.02.20 (43) Publication Date: 2020.03.12 (43) Publication Journal Date: 2020.03.12 (62) Divisional of: 2017265177 (71) Applicant(s) Apple Inc. (72) Inventor(s) TOURAPIS, Alexandras (74) Agent / Attorney FPA Patent Attorneys Pty Ltd, ANZ Tower 161 Castlereagh Street, Sydney, NSW, 2000, AU 1002925143 ABSTRACT 2020 An apparatus for decoding video data comprising: a means for decoding encoded video data to determine transformed residual sample data and to determine color transform parameters Feb for a current image area, wherein the color transform parameters specify inverse color 20 transform coefficients with a predefined precision; a means for determining a selected inverse color transform for the current image area from the color transform parameters; a means for performing the selected inverse color transform on the transformed residual 1212 sample data to produce inverse transformed residual sample data; and a means for combining the inverse transformed residual sample data with motion predicted image data to generate restored image data for the current image area of an output video. 1002925143 ADAPTIVE COLOR SPACE TRANSFORM CODING 2020 Feb PRIORITY CLAIM 20 [01] The present application claims priority to U.S. Patent Application No. 13/940,025, filed on July 11, 2013, which is a Continuation-in-Part of U.S. -

AMSA Meat Color Measurement Guidelines

AMSA Meat Color Measurement Guidelines Revised December 2012 American Meat Science Association http://www.m eatscience.org AMSA Meat Color Measurement Guidelines Revised December 2012 American Meat Science Association 201 West Springfi eld Avenue, Suite 1202 Champaign, Illinois USA 61820 800-517-2672 [email protected] http://www.m eatscience.org iii CONTENTS Technical Writing Committee .................................................................................................................... v Preface ..............................................................................................................................................................vi Section I: Introduction ................................................................................................................................. 1 Section II: Myoglobin Chemistry ............................................................................................................... 3 A. Fundamental Myoglobin Chemistry ................................................................................................................ 3 B. Dynamics of Myoglobin Redox Form Interconversions ........................................................................... 3 C. Visual, Practical Meat Color Versus Actual Pigment Chemistry ........................................................... 5 D. Factors Affecting Meat Color ............................................................................................................................... 6 E. Muscle -

12-Bit, Integrated, Multiformat SDTV/HDTV Video Decoder and RGB Graphics Digitizer

12-Bit, Integrated, Multiformat SDTV/HDTV Video Decoder and RGB Graphics Digitizer Data Sheet ADV7403 FEATURES GENERAL DESCRIPTION 4 Noise Shaped Video (NSV)® 12-bit analog-to-digital The ADV7403 is a high quality, single chip, multiformat video converters (ADCs) sampling up to 140 MHz (140 MHz decoder and graphics digitizer. This multiformat decoder supports speed grade only) the conversion of PAL, NTSC, and SECAM standards in the Mux with 12 analog input channels form of composite or S-Video into a digital ITU-R BT.656 format. SCART fast blank support The ADV7403 also supports the decoding of a component Internal antialias filters RGB/YPrPb video signal into a digital YCrCb or RGB pixel output NTSC/PAL/SECAM color standards support stream. The support for component video includes standards 525p/625p component progressive scan support such as 525i, 625i, 525p, 625p, 720p, 1080i, 1250i, and many 720p/1080i component HDTV support other HD and SMPTE standards. Graphic digitization is also Digitizes RGB graphics up to 1280 × 1024 at 75 Hz (SXGA) supported by the ADV7403; it is capable of digitizing RGB (140 MHz speed grade only) graphics signals from VGA to SXGA rates and converting them 24-bit digital input port supports data from DVI/HDMI into a digital RGB or YCrCb pixel output stream. SCART and receiver IC overlay functionality are enabled by the ability of the ADV7403 Any-to-any, 3 × 3 color-space conversion matrix to simultaneously process CVBS and standard definition RGB Industrial temperature range: −40°C to +85°C signals. The fast blank pin controls the mixing of these signals. -

SAA7114 PAL/NTSC/SECAM Video Decoder with Adaptive PAL/NTSC Comb filter, VBI Data Slicer and High Performance Scaler Rev

SAA7114 PAL/NTSC/SECAM video decoder with adaptive PAL/NTSC comb filter, VBI data slicer and high performance scaler Rev. 03 — 17 January 2006 Product data sheet 1. General description The SAA7114 is a video capture device for applications at the image port of Video Graphics Array (VGA) controllers. The SAA7114 is a combination of a two-channel analog preprocessing circuit including source selection, anti-aliasing filter and Analog-to-Digital Converter (ADC), an automatic clamp and gain control, a Clock Generation Circuit (CGC), a digital multistandard decoder containing two-dimensional chrominance/luminance separation by an adaptive comb filter and a high performance scaler, including variable horizontal and vertical up and downscaling and a brightness, contrast and saturation control circuit. It is a highly integrated circuit for desktop video and similar applications. The decoder is based on the principle of line-locked clock decoding and is able to decode the color of PAL, SECAM and NTSC signals into ITU 601 compatible color component values. The SAA7114 accepts CVBS or S-video (Y/C) as analog inputs from TV or VCR sources, including weak and distorted signals. An expansion port (X port) for digital video (bidirectional half duplex, D1 compatible) is also supported to connect to MPEG or a video phone codec. At the so called image port (I port) the SAA7114 supports 8-bit or 16-bit wide output data with auxiliary reference data for interfacing to VGA controllers. The target application for the SAA7114 is to capture and scale video images, to be provided as a digital video stream through the image port of a VGA controller, for display via the frame buffer of the VGA, or for capture to system memory. -

Ycbcr and XYZ Colour Image Edge Detection

GNANATHEJA RAKESH V, T SREENIVASULU REDDY / International Journal of Engineering Research and Applications (IJERA) ISSN: 2248-9622 www.ijera.com Vol. 2, Issue 2,Mar-Apr 2012, pp.152-156 YCoCg color Image Edge detection GNANATHEJA RAKESH V T SREENIVASULU REDDY M.Tech Student, Department of EEE Associate Professor of ECE, Sri Venkateswara University College of Engineering, Sri Venkateswara University College of Engineering, Tirupati Tirupati Abstract: Edges are prominent features in images gray value images when neighboring objects have and their analysis and detection are an essential goal different hues but equal intensities. Such objects can not in computer vision and image processing. Indeed, be distinguished in gray value images. They are treated identifying and localizing edges are a low level task in like one big object in the scene. This is not significant if a variety of applications such as 3-D reconstruction, obstacle avoidness is the task of the vision system. shape recognition, image compression, enhancement, Opposed to this, the capability of distinguishing between and restoration. This paper presents a comparative one big object and two (or several) small objects may study on different approaches to edge detection of become crucial for the task of object grasping or even in colour images. The approaches are based on 2-D image segmentation. Additionally, The common transforming the RGB image to YUV and YCoCg shortcomings of the RGB image edge detection and back to RGB colour model. Edges are detected arithmetic are the low speed and the color losses after the by Laplacian operator and Gradient Operator. The each component of the image is processed. -

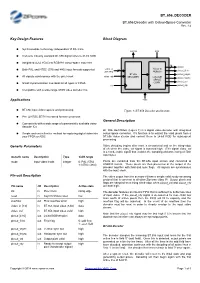

BT 656 DECODER BT.656 Decoder with Colour-Space Converter Key

BT_656_DECODER BT.656 Decoder with Colour-Space Converter Rev. 1.4 Key Design Features Block Diagram ● Synthesizable, technology independent VHDL Core ● Converts industry standard BT.656 digital video to 24-bit RGB ● Integrated 4:2:2 YCbCr to RGB888 colour-space converter ● Both PAL and NTSC (576i and 480i) input formats supported ● All signals synchronous with the pixel clock ● Small implementation size ideal for all types of FPGA ● Compatible with a wide range of SD video decoder ICs Applications ● BT.656 input video capture and processing Figure 1: BT.656 Decoder architecture ● PAL & NTSC SDTV interlaced format conversion General Description ● Connectivity with a wide range of commercially available video decoder ICs BT_656_DECODER (Figure 1) is a digital video decoder with integrated ● Simple and cost-effective method for capturing digital video into colour-space converter. It's function is to extract the valid pixels from a your FPGA or ASIC BT.656 video stream and convert them to 24-bit RGB for subsequent processing. Generic Parameters Video decoding begins after reset is de-asserted and on the rising-edge of clk when the video_val signal is asserted high. (The signal video_val is a clock-enable signal that enables the sampling and processing of each input byte). Generic name Description Type Valid range mode Input video mode integer 0: PAL (576i) Pixels are extracted from the BT.656 input stream and converted to 1: NTSC (480i) RGB888 format. These pixels are then presented at the output of the decoder together with field and sync flags. All signals are synchronous with the input clock. -

Colour Image Segmentation Using Perceptual Colour Difference Saliency Algorithm

COLOUR IMAGE SEGMENTATION USING PERCEPTUAL COLOUR DIFFERENCE SALIENCY ALGORITHM By TAIWO, TUNMIKE BUKOLA (21557473) Submitted in fulfilment of the requirements of the MASTERS DEGREE IN INFORMATION AND COMMUNICATION TECHNNOLOGY in the DEPARTMENT OF INFORMATION TECHNOLOGY in the FACULTY OF ACCOUNTING AND INFORMATICS at DURBAN UNIVERSITY OF TECHNOLOGY July 2017 DECLARATION I, Taiwo, Tunmike Bukola hereby declares that this dissertation is my own work and has not been previously submitted in any form to any other university or institution of higher learning by other persons or myself. I further declare that all the sources of information used in this dissertation have been acknowledged. _________________________ ____________________ Taiwo, Tunmike Bukola Date Approved for final submission Supervisor: _______________________ ______________________ Professor O. O. Olugbara Date PhD (Computer Science) Co-Supervisor: __________________ ______________________ Dr. Delene Heukelman Date DTech (Information Technology) ii DEDICATION This dissertation is dedicated to my family for their support, encouragement and motivation throughout the period of this study. iii ACKNOWLEDGEMENTS First and foremost, my profound appreciation goes to the Almighty Jehovah, the giver of life and wisdom, for His limitless love, inspiration and strength throughout the period of this study. I return to Him all the glory, honour and adoration. I am particularly grateful to my supervisor, Prof. Oludayo Olugbara. A humble genius in image processing research field, his mentorship on both professional and personal levels was tremendous. Since the beginning of this study, he has always been there not only as my supervisor, but a father and guardian. I wouldn’t be writing this today if I had a different supervisor. I really appreciate all the valuable time he spent in helping me with programming and new concepts with reference to my research work.