Lecture 6: Compression II This Week's Schedule

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

On Computational Complexity of Motion Estimation Algorithms in MPEG-4 Encoder

On Computational Complexity of Motion Estimation Algorithms in MPEG-4 Encoder Muhammad Shahid This thesis report is presented as a part of degree of Master of Science in Electrical Engineering Blekinge Institute of Technology, 2010 Supervisor: Tech Lic. Andreas Rossholm, ST-Ericsson Examiner: Dr. Benny Lovstrom, Blekinge Institute of Technology Abstract Video Encoding in mobile equipments is a computationally demanding fea- ture that requires a well designed and well developed algorithm. The op- timal solution requires a trade off in the encoding process, e.g. motion estimation with tradeoff between low complexity versus high perceptual quality and efficiency. The present thesis works on reducing the complexity of motion estimation algorithms used for MPEG-4 video encoding taking SLIMPEG motion estimation algorithm as reference. The inherent prop- erties of video like spatial and temporal correlation have been exploited to test new techniques of motion estimation. Four motion estimation algo- rithms have been proposed. The computational complexity and encoding quality have been evaluated. The resulting encoded video quality has been compared against the standard Full Search algorithm. At the same time, reduction in computational complexity of the improved algorithm is com- pared against SLIMPEG which is already about 99 % more efficient than Full Search in terms of computational complexity. The fourth proposed algorithm, Adaptive SAD Control, offers a mechanism of choosing trade off between computational complexity and encoding quality in a dynamic way. Acknowledgements It is a matter of great pleasure to express my deepest gratitude to my ad- visors Dr. Benny L¨ovstr¨om and Andreas Rossholm for all their guidance, support and encouragement throughout my thesis work. -

Kulkarni Uta 2502M 11649.Pdf

IMPLEMENTATION OF A FAST INTER-PREDICTION MODE DECISION IN H.264/AVC VIDEO ENCODER by AMRUTA KIRAN KULKARNI Presented to the Faculty of the Graduate School of The University of Texas at Arlington in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE IN ELECTRICAL ENGINEERING THE UNIVERSITY OF TEXAS AT ARLINGTON May 2012 ACKNOWLEDGEMENTS First and foremost, I would like to take this opportunity to offer my gratitude to my supervisor, Dr. K.R. Rao, who invested his precious time in me and has been a constant support throughout my thesis with his patience and profound knowledge. His motivation and enthusiasm helped me in all the time of research and writing of this thesis. His advising and mentoring have helped me complete my thesis. Besides my advisor, I would like to thank the rest of my thesis committee. I am also very grateful to Dr. Dongil Han for his continuous technical advice and financial support. I would like to acknowledge my research group partner, Santosh Kumar Muniyappa, for all the valuable discussions that we had together. It helped me in building confidence and motivated towards completing the thesis. Also, I thank all other lab mates and friends who helped me get through two years of graduate school. Finally, my sincere gratitude and love go to my family. They have been my role model and have always showed me right way. Last but not the least; I would like to thank my husband Amey Mahajan for his emotional and moral support. April 20, 2012 ii ABSTRACT IMPLEMENTATION OF A FAST INTER-PREDICTION MODE DECISION IN H.264/AVC VIDEO ENCODER Amruta Kulkarni, M.S The University of Texas at Arlington, 2011 Supervising Professor: K.R. -

Music 422 Project Report

Music 422 Project Report Jatin Chowdhury, Arda Sahiner, and Abhipray Sahoo October 10, 2020 1 Introduction We present an audio coder that seeks to improve upon traditional coding methods by implementing block switching, spectral band replication, and gain-shape quantization. Block switching improves on coding of transient sounds. Spectral band replication exploits low sensitivity of human hearing towards high frequencies. It fills in any missing coded high frequency content with information from the low frequencies. We chose to do gain-shape quantization in order to explicitly code the energy of each band, preserving the perceptually important spectral envelope more accurately. 2 Implementation 2.1 Block Switching Block switching, as introduced by Edler [1], allows the audio coder to adaptively change the size of the block being coded. Modern audio coders typically encode frequency lines obtained using the Modified Discrete Cosine Transform (MDCT). Using longer blocks for the MDCT allows forbet- ter spectral resolution, while shorter blocks have better time resolution, due to the time-frequency trade-off known as the Fourier uncertainty principle [2]. Due to their poor time resolution, using long MDCT blocks for an audio coder can result in “pre-echo” when the coder encodes transient sounds. Block switching allows the coder to use long MDCT blocks for normal signals being encoded and use shorter blocks for transient parts of the signal. In our coder, we implement the block switching algorithm introduced in [1]. This algorithm consists of four types of block windows: long, short, start, and stop. Long and short block windows are simple sine windows, as defined in [3]: (( ) ) 1 π w[n] = sin n + (1) 2 N where N is the length of the block. -

A Survey Paper on Different Speech Compression Techniques

Vol-2 Issue-5 2016 IJARIIE-ISSN (O)-2395-4396 A Survey Paper on Different Speech Compression Techniques Kanawade Pramila.R1, Prof. Gundal Shital.S2 1 M.E. Electronics, Department of Electronics Engineering, Amrutvahini College of Engineering, Sangamner, Maharashtra, India. 2 HOD in Electronics Department, Department of Electronics Engineering , Amrutvahini College of Engineering, Sangamner, Maharashtra, India. ABSTRACT This paper describes the different types of speech compression techniques. Speech compression can be divided into two main types such as lossless and lossy compression. This survey paper has been written with the help of different types of Waveform-based speech compression, Parametric-based speech compression, Hybrid based speech compression etc. Compression is nothing but reducing size of data with considering memory size. Speech compression means voiced signal compress for different application such as high quality database of speech signals, multimedia applications, music database and internet applications. Today speech compression is very useful in our life. The main purpose or aim of speech compression is to compress any type of audio that is transfer over the communication channel, because of the limited channel bandwidth and data storage capacity and low bit rate. The use of lossless and lossy techniques for speech compression means that reduced the numbers of bits in the original information. By the use of lossless data compression there is no loss in the original information but while using lossy data compression technique some numbers of bits are loss. Keyword: - Bit rate, Compression, Waveform-based speech compression, Parametric-based speech compression, Hybrid based speech compression. 1. INTRODUCTION -1 Speech compression is use in the encoding system. -

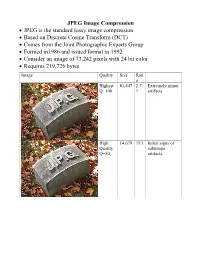

JPEG Image Compression

JPEG Image Compression JPEG is the standard lossy image compression Based on Discrete Cosine Transform (DCT) Comes from the Joint Photographic Experts Group Formed in1986 and issued format in 1992 Consider an image of 73,242 pixels with 24 bit color Requires 219,726 bytes Image Quality Size Rati o Highest 81,447 2.7: Extremely minor Q=100 1 artifacts High 14,679 15:1 Initial signs of Quality subimage Q=50 artifacts Medium 9,407 23:1 Stronger Q artifacts; loss of high frequency information Low 4,787 46:1 Severe high frequency loss leads to obvious artifacts on subimage boundaries ("macroblocking ") Lowest 1,523 144: Extreme loss of 1 color and detail; the leaves are nearly unrecognizable JPEG How it works Begin with a color translation RGB goes to Y′CBCR Luma and two Chroma colors Y is brightness CB is B-Y CR is R-Y Downsample or Chroma Subsampling Chroma data resolutions reduced by 2 or 3 Eye is less sensitive to fine color details than to brightness Block splitting Each channel broken into 8x8 blocks no subsampling Or 16x8 most common at medium compression Or 16x16 Must fill in remaining areas of incomplete blocks This gives the values DCT - centering Center the data about 0 Range is now -128 to 127 Middle is zero Discrete cosine transform formula Apply as 2D DCT using the formula Creates a new matrix Top left (largest) is the DC coefficient constant component Gives basic hue for the block Remaining 63 are AC coefficients Discrete cosine transform The DCT transforms an 8×8 block of input values to a linear combination of these 64 patterns. -

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING Color space A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept. Understanding the concept Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position. -

1. in the New Document Dialog Box, on the General Tab, for Type, Choose Flash Document, Then Click______

1. In the New Document dialog box, on the General tab, for type, choose Flash Document, then click____________. 1. Tab 2. Ok 3. Delete 4. Save 2. Specify the export ________ for classes in the movie. 1. Frame 2. Class 3. Loading 4. Main 3. To help manage the files in a large application, flash MX professional 2004 supports the concept of _________. 1. Files 2. Projects 3. Flash 4. Player 4. In the AppName directory, create a subdirectory named_______. 1. Source 2. Flash documents 3. Source code 4. Classes 5. In Flash, most applications are _________ and include __________ user interfaces. 1. Visual, Graphical 2. Visual, Flash 3. Graphical, Flash 4. Visual, AppName 6. Test locally by opening ________ in your web browser. 1. AppName.fla 2. AppName.html 3. AppName.swf 4. AppName 7. The AppName directory will contain everything in our project, including _________ and _____________ . 1. Source code, Final input 2. Input, Output 3. Source code, Final output 4. Source code, everything 8. In the AppName directory, create a subdirectory named_______. 1. Compiled application 2. Deploy 3. Final output 4. Source code 9. Every Flash application must include at least one ______________. 1. Flash document 2. AppName 3. Deploy 4. Source 10. In the AppName/Source directory, create a subdirectory named __________. 1. Source 2. Com 3. Some domain 4. AppName 11. In the AppName/Source/Com directory, create a sub directory named ______ 1. Some domain 2. Com 3. AppName 4. Source 12. A project is group of related _________ that can be managed via the project panel in the flash. -

Video Coding Standards

Video coding standards Video signals represent sequences of images or frames which can be transmitted with a rate from 15 to 60 frames per second (fps), that provides the illusion of motion in the displayed signal. Unlike images they contain the so-called temporal redundancy. Temporal redundancy arises from repeated objects in consecutive frames of the video sequence. Such objects can remain, they can move horizontally, vertically, or any combination of directions (translation movement), they can fade in and out, and they can disappear from the image as they move out of view. Temporal redundancy Motion compensation A motion compensation technique is used to compensate the temporal redundancy of video sequence. The main idea of the this method is to predict the displacement of group of pixels (usually block of pixels) from their position in the previous frame. Information about this displacement is represented by motion vectors which are transmitted together with the DCT coded difference between the predicted and the original images. Motion compensation Image VLC DCT Quantizer Buffer - Dequantizer Coded DCT coefficients. IDCT Motion compensated predictor Motion Motion vectors estimation Decoded VL image Buffer Dequantizer IDCT decoder Motion compensated predictor Motion vectors Motion compensation Previous frame Current frame Set of macroblocks of the previous frame used to predict the selected macroblock of the current frame Motion compensation Previous frame Current frame Macroblocks of previous frame used to predict current frame Motion compensation Each 16x16 pixel macroblock in the current frame is compared with a set of macroblocks in the previous frame to determine the one that best predicts the current macroblock. -

The Video Codecs Behind Modern Remote Display Protocols Rody Kossen

The video codecs behind modern remote display protocols Rody Kossen 1 Rody Kossen - Consultant [email protected] @r_kossen rody-kossen-186b4b40 www.rodykossen.com 2 Agenda The basics H264 – The magician Other codecs Hardware support (NVIDIA) The basics 4 Let’s start with some terms YCbCr RGB Bit Depth YUV 5 Colors 6 7 8 9 Visible colors 10 Color Bit-Depth = Amount of different colors Used in 2 ways: > The number of bits used to indicate the color of a single pixel, for example 24 bit > Number of bits used for each color component of a single pixel (Red Green Blue), for example 8 bit Can be combined with Alpha, which describes transparency Most commonly used: > High Color – 16 bit = 5 bits per color + 1 unused bit = 32.768 colors = 2^15 > True Color – 24 bit Color + 8 bit Alpha = 8 bits per color = 16.777.216 colors > Deep color – 30 bit Color + 10 bit Alpha = 10 bits per color = 1.073 billion colors 11 Color Bit-Depth 12 Color Gamut Describes the subset of colors which can be displayed in relation to the human eye Standardized gamut: > Rec.709 (Blu-ray) > Rec.2020 (Ultra HD Blu-ray) > Adobe RGB > sRBG 13 Compression Lossy > Irreversible compression > Used to reduce data size > Examples: MP3, JPEG, MPEG-4 Lossless > Reversable compression > Examples: ZIP, PNG, FLAC or Dolby TrueHD Visually Lossless > Irreversible compression > Difference can’t be seen by the Human Eye 14 YCbCr Encoding RGB uses Red Green Blue values to describe a color YCbCr is a different way of storing color: > Y = Luma or Brightness of the Color > Cr = Chroma difference -

An Overview of Block Matching Algorithms for Motion Vector Estimation

Proceedings of the Second International Conference on Research in DOI: 10.15439/2017R85 Intelligent and Computing in Engineering pp. 217–222 ACSIS, Vol. 10 ISSN 2300-5963 An Overview of Block Matching Algorithms for Motion Vector Estimation Sonam T. Khawase1, Shailesh D. Kamble2, Nileshsingh V. Thakur3, Akshay S. Patharkar4 1PG Scholar, Computer Science & Engineering, Yeshwantrao Chavan College of Engineering, India 2Computer Science & Engineering, Yeshwantrao Chavan College of Engineering, India 3Computer Science & Engineering, Prof Ram Meghe College of Engineering & Management, India 4Computer Technology, K.D.K. College of Engineering, India [email protected], [email protected],[email protected], [email protected] Abstract–In video compression technique, motion estimation is one of the key components because of its high computation complexity involves in finding the motion vectors (MV) between the frames. The purpose of motion estimation is to reduce the storage space, bandwidth and transmission cost for transmission of video in many multimedia service applications by reducing the temporal redundancies while maintaining a good quality of the video. There are many motion estimation algorithms, but there is a trade-off between algorithms accuracy and speed. Among all of these, block-based motion estimation algorithms are most robust and versatile. In motion estimation, a variety of fast block based matching algorithms has been proposed to address the issues such as reducing the number of search/checkpoints, computational cost, and complexities etc. Due to its simplicity, the Fig. 1. Classification of Frames block-based technique is most popular. Motion estimation is only known for video coding process but for solving real life redundancies. -

Discrete Cosine Transform for 8X8 Blocks with CUDA

Discrete Cosine Transform for 8x8 Blocks with CUDA Anton Obukhov [email protected] Alexander Kharlamov [email protected] October 2008 Document Change History Version Date Responsible Reason for Change 0.8 24.03.2008 Alexander Kharlamov Initial release 0.9 25.03.2008 Anton Obukhov Added algorithm-specific parts, fixed some issues 1.0 17.10.2008 Anton Obukhov Revised document structure October 2008 2 Abstract In this whitepaper the Discrete Cosine Transform (DCT) is discussed. The two-dimensional variation of the transform that operates on 8x8 blocks (DCT8x8) is widely used in image and video coding because it exhibits high signal decorrelation rates and can be easily implemented on the majority of contemporary computing architectures. The key feature of the DCT8x8 is that any pair of 8x8 blocks can be processed independently. This makes possible fully parallel implementation of DCT8x8 by definition. Most of CPU-based implementations of DCT8x8 are firmly adjusted for operating using fixed point arithmetic but still appear to be rather costly as soon as blocks are processed in the sequential order by the single ALU. Performing DCT8x8 computation on GPU using NVIDIA CUDA technology gives significant performance boost even compared to a modern CPU. The proposed approach is accompanied with the sample code “DCT8x8” in the NVIDIA CUDA SDK. October 2008 3 1. Introduction The Discrete Cosine Transform (DCT) is a Fourier-like transform, which was first proposed by Ahmed et al . (1974). While the Fourier Transform represents a signal as the mixture of sines and cosines, the Cosine Transform performs only the cosine-series expansion. -

CHAPTER 10 Basic Video Compression Techniques Contents

Multimedia Network Lab. CHAPTER 10 Basic Video Compression Techniques Multimedia Network Lab. Prof. Sang-Jo Yoo http://multinet.inha.ac.kr The Graduate School of Information Technology and Telecommunications, INHA University http://multinet.inha.ac.kr Multimedia Network Lab. Contents 10.1 Introduction to Video Compression 10.2 Video Compression with Motion Compensation 10.3 Search for Motion Vectors 10.4 H.261 10.5 H.263 The Graduate School of Information Technology and Telecommunications, INHA University 2 http://multinet.inha.ac.kr Multimedia Network Lab. 10.1 Introduction to Video Compression A video consists of a time-ordered sequence of frames, i.e.,images. Why we need a video compression CIF (352x288) : 35 Mbps HDTV: 1Gbps An obvious solution to video compression would be predictive coding based on previous frames. Compression proceeds by subtracting images: subtract in time order and code the residual error. It can be done even better by searching for just the right parts of the image to subtract from the previous frame. Motion estimation: looking for the right part of motion Motion compensation: shifting pieces of the frame The Graduate School of Information Technology and Telecommunications, INHA University 3 http://multinet.inha.ac.kr Multimedia Network Lab. 10.2 Video Compression with Motion Compensation Consecutive frames in a video are similar – temporal redundancy Frame rate of the video is relatively high (30 frames/sec) Camera parameters (position, angle) usually do not change rapidly. Temporal redundancy is exploited so that not every frame of the video needs to be coded independently as a new image. The difference between the current frame and other frame(s) in the sequence will be coded – small values and low entropy, good for compression.