The Perception and Measurement of Human-Robot Trust

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Humanoid Robot

NAIN 1.0 – A HUMANOID ROBOT by Shivam Shukla (1406831124) Shubham Kumar (1406831131) Shashank Bhardwaj (1406831117) Department of Electronics & Communication Engineering Meerut Institute of Engineering & Technology Meerut, U.P. (India)-250005 May, 2018 NAIN 1.0 – HUMANOID ROBOT by Shivam Shukla (1406831124) Shubham Kumar (1406831131) Shashank Bhardwaj (1406831117) Submitted to the Department of Electronics & Communication Engineering in partial fulfillment of the requirements for the degree of Bachelor of Technology in Electronics & Communication Meerut Institute of Engineering & Technology, Meerut Dr. A.P.J. Abdul Kalam Technical University, Lucknow May, 2018 DECLARATION I hereby declare that this submission is my own work and that, to the best of my knowledge and belief, it contains no material previously published or written by another person nor material which to a substantial extent has been accepted for the award of any other degree or diploma of the university or other institute of higher learning except where due acknowledgment has been made in the text. Signature Signature Name: Mr. Shivam Shukla Name: Mr. Shashank Bhardwaj Roll No. 1406831124 Roll No. 1406831117 Date: Date: Signature Name: Mr. Shubham Kumar Roll No. 1406831131 Date: ii CERTIFICATE This is to certify that Project Report entitled “Humanoid Robot” which is submitted by Shivam Shukla (1406831124), Shashank Bhardwaj (1406831117), Shubahm Kumar (1406831131) in partial fulfillment of the requirement for the award of degree B.Tech in Department of Electronics & Communication Engineering of Gautam Buddh Technical University (Formerly U.P. Technical University), is record of the candidate own work carried out by him under my/our supervision. The matter embodied in this thesis is original and has not been submitted for the award of any other degree. -

The Tip of a Hospitality Revolution (5:48 Mins) Listening Comprehension with Pre-Listening and Post-Listening Exercises Pre-List

Listening comprehension worksheet by Carol Richards The tip of a hospitality revolution (5:48 mins) World and Press • 1st April issue 2021• page 10 page 1 of 8 Listening comprehension with pre-listening and post-listening exercises This worksheet and the original report are in American English. Pre-listening 1. Match the robot with its description. Write your answer on the lines below. a) iCub b) ASIMO c) welding robot d) Cheetah e) l’automa cavaliere f) TOPIO ____ automaton knight designed by Leonardo da Vinci in 1495; could be moved by using a system of cables and pulleys ____ built for artificial intelligence research; can learn from mistakes, crawl, walk; has basic cognitive skills ____ plays table tennis against human opponents ____ most often used in the automobile industry; can either be programmed to weld pre- chosen positions or use machine vision ____ four-legged military robot, can move at 28mph, jump over things, use climb stairs ____ could walk, recognize faces, respond to questions, and interact with people; first model built in 2000; also built to provide companionship; size: 4 feet, 3 inches (1.30 cm) © 2021 Carl Ed. Schünemann KG. All rights reserved. Copies of this material may only be produced by subscribers for use in their own lessons. The tip of a hospitality revolution World and Press • April 1 / 2021 • page 10 page 2 of 8 2. Vocabulary practice Match the terms on the left with their German meanings on the right. Write your answers in the grid below. a) billion A Abfindung b) competitive advantage B auf etw. -

Design of a Drone with a Robotic End-Effector

Design of a Drone with a Robotic End-Effector Suhas Varadaramanujan, Sawan Sreenivasa, Praveen Pasupathy, Sukeerth Calastawad, Melissa Morris, Sabri Tosunoglu Department of Mechanical and Materials Engineering Florida International University Miami, Florida 33174 [email protected], [email protected] , [email protected], [email protected], [email protected], [email protected] ABSTRACT example, the widely-used predator drone for military purposes is the MQ-1 by General Atomics which is remote controlled, UAVs The concept presented involves a combination of a quadcopter typically fall into one of six functional categories (i.e. target and drone and an end-effector arm, which is designed with the decoy, reconnaissance, combat, logistics, R&D, civil and capability of lifting and picking fruits from an elevated position. commercial). The inspiration for this concept was obtained from the swarm robots which have an effector arm to pick small cubes, cans to even With the advent of aerial robotics technology, UAVs became more collecting experimental samples as in case of space exploration. sophisticated and led to development of quadcopters which gained The system as per preliminary analysis would contain two popularity as mini-helicopters. A quadcopter, also known as a physically separate components, but linked with a common quadrotor helicopter, is lifted by means of four rotors. In operation, algorithm which includes controlling of the drone’s positions along the quadcopters generally use two pairs of identical fixed pitched with the movement of the arm. propellers; two clockwise (CW) and two counterclockwise (CCW). They use independent variation of the speed of each rotor to achieve Keywords control. -

Surveillance Robot Controlled Using an Android App Project Report Submitted in Partial Fulfillment of the Requirements for the Degree Of

Surveillance Robot controlled using an Android app Project Report Submitted in partial fulfillment of the requirements for the degree of Bachelor of Engineering by Shaikh Shoeb Maroof Nasima (Roll No.12CO92) Ansari Asgar Ali Shamshul Haque Shakina (Roll No.12CO106) Khan Sufiyan Liyaqat Ali Kalimunnisa (Roll No.12CO81) Mir Ibrahim Salim Farzana (Roll No.12CO82) Supervisor Prof. Kalpana R. Bodke Co-Supervisor Prof. Amer Syed Department of Computer Engineering, School of Engineering and Technology Anjuman-I-Islam’s Kalsekar Technical Campus Plot No. 2 3, Sector -16, Near Thana Naka, Khanda Gaon, New Panvel, Navi Mumbai. 410206 Academic Year : 2014-2015 CERTIFICATE Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam’s Kalsekar Technical Campus Khanda Gaon,New Panvel, Navi Mumbai. 410206 This is to certify that the project entitled “Surveillance Robot controlled using an Android app” is a bonafide work of Shaikh Shoeb Maroof Nasima (12CO92), Ansari Asgar Ali Shamshul Haque Shakina (12CO106), Khan Sufiyan Liyaqat Ali Kalimunnisa (12CO81), Mir Ibrahim Salim Farzana (12CO82) submitted to the University of Mumbai in partial ful- fillment of the requirement for the award of the degree of “Bachelor of Engineering” in De- partment of Computer Engineering. Prof. Kalpana R. Bodke Prof. Amer Syed Supervisor/Guide Co-Supervisor/Guide Prof. Tabrez Khan Dr. Abdul Razak Honnutagi Head of Department Director Project Approval for Bachelor of Engineering This project I entitled Surveillance robot controlled using an android application by Shaikh Shoeb Maroof Nasima, Ansari Asgar Ali Shamshul Haque Shakina, Mir Ibrahim Salim Farzana,Khan Sufiyan Liyaqat Ali Kalimunnisa is approved for the degree of Bachelor of Engineering in Department of Computer Engineering. -

Humanoid Robots (Figures 41, 42)

A Roboethics Framework for the Development and Introduction of Social Assistive Robots in Elderly Care Antonio M. M. C. Espingardeiro Salford Business School University of Salford, Manchester, UK Submitted in Partial Fulfilment of the Requirements of the Degree of Doctor of Philosophy, September 2013 TABLE OF CONTENTS Chapter 1 - Introduction ......................................................................................................................................... 1 Chapter 2 - Literature review ................................................................................................................................. 9 2.1. Ethics in the digital world ................................................................................................................................ 9 2.2. Exploratory work in roboethics ..................................................................................................................... 14 2.2. Roboethics rules and guidance ...................................................................................................................... 18 2.3. “In-situ” practical workshops with SARs ........................................................................................................ 23 2.4. Summary ........................................................................................................................................................ 24 Chapter 3 - Human robotics interactions and ethical principles ......................................................................... -

Robotic Art and Cultural Imagination

http://dx.doi.org/10.14236/ewic/EVAC18.48 Robotic Art and Cultural Imagination Bojana Romic School of Arts and Communication Nordenskiöldsgatan 1, 211 19 Malmö, Sweden [email protected] In this article, I aim to accentuate the importance of the cultural imagination about robots, observing it 'as a mixed register of fantasy and an actual practice' (Kakoudaki 2007, 165). I emphasise the field of robotic art, which, I argue, is in a fluid state of exchange with other areas of robotic research, equally benefiting from the larger context of the cultural imagination about robots. Furthermore, I discuss artworks that offer a valuable commentary on robots even though they are not defined as robotic art in a narrow sense (Penny 2013), given that they feature only the representation of robots or robot-like characters. Nevertheless, these artworks contribute to the circulation of symbolic registers that revolve around the multifaceted figure of a robot. Robot. Robotic Art. Cultural imagination. Cultural robotics. 1. INTRODUCTION imagination: I do not probe deeper into ideological, religious and societal reasons for imaginaries that In recent years, there has been an ongoing debate are characteristic of particular societies (e.g. about the notion and (possible) function of robotic Japanese, US, Korean, etc.). Instead, I focus on culture. With the development of the new generation phenomenological aspects of cultural imagination in of drones, as well as the advancement in social and robotic art in general. health robotics, questions about the robot culture seem to open a variety of discussions (Kim & Kim 2013, Samani et al. 2013, Šabanović et al. -

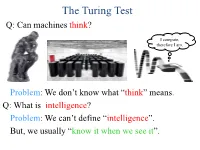

The Turing Test Q: Can Machines Think?

The Turing Test Q: Can machines think? I compute, therefore I am. Problem: We don’t know what ―think‖ means. Q: What is intelligence? Problem: We can’t define ―intelligence‖. But, we usually ―know it when we see it‖. The Turing Test Q: Which of the following can think? The Turing Test Q: Can you distinguish a machine from a person? ≡ Can a machine impersonate a person? The Turing Test • The first deep investigation into whether machines can ―behave intelligently‖ • Helped usher in field of AI • Decoupled ―intelligence‖ from ―human‖ • Based ―intelligence‖ on I/O, not entity’s ―look and feel‖ • Proposed a practical, formal test for intelligence • Definitions & test are operational & easily implementable • Turing test variants: ―immortality‖, ―fly-on-wall‖, ―meta‖, ―reverse‖, ―subject matter expert‖, ―compression‖, ―minimum intelligent signal‖ Turing Test Milestones Turk (von Kempelen), 1770: • Played a strong game of chess • Toured the world for 84 years • Generated much interest in automata • Was a hoax (hidden human operator) Turing Test Milestones Eliza (Weizenbaum), 1966: • First ―chatterbot‖ • Named after ―Eliza Doolittle‖ of Shaw’s Pygmalion • Simulated Rogerian psychotherapist • Often convinced people it is human • Influence computer games (e.g., Dungeon, Adventure) • Inspired Arthur C. Clarke’s HAL 9000 • Chatterbots appear today in chatrooms and dating services • Chatterbot competitions (Turing Test format): – Loebner Prize – Chatterbox Challenge, 2010 Turing Test Milestones Elbot, 2008: • Won 2008 Loebner Prize • Fooled 3 of 12 judges (Turing: >30%) • Typical excerpt: Judge: What's your favourite novel? Elbot: I often read telephone books, or dadaist poetry: Brä brä brä Judge: Typical computer! Elbot: You tend towards generalizations. -

Communicating Simulated Emotional States of Robots by Expressive Movements

Middlesex University Research Repository An open access repository of Middlesex University research http://eprints.mdx.ac.uk Sial, Sara Baber (2013) Communicating simulated emotional states of robots by expressive movements. Masters thesis, Middlesex University. [Thesis] Final accepted version (with author’s formatting) This version is available at: https://eprints.mdx.ac.uk/12312/ Copyright: Middlesex University Research Repository makes the University’s research available electronically. Copyright and moral rights to this work are retained by the author and/or other copyright owners unless otherwise stated. The work is supplied on the understanding that any use for commercial gain is strictly forbidden. A copy may be downloaded for personal, non-commercial, research or study without prior permission and without charge. Works, including theses and research projects, may not be reproduced in any format or medium, or extensive quotations taken from them, or their content changed in any way, without first obtaining permission in writing from the copyright holder(s). They may not be sold or exploited commercially in any format or medium without the prior written permission of the copyright holder(s). Full bibliographic details must be given when referring to, or quoting from full items including the author’s name, the title of the work, publication details where relevant (place, publisher, date), pag- ination, and for theses or dissertations the awarding institution, the degree type awarded, and the date of the award. If you believe that any material held in the repository infringes copyright law, please contact the Repository Team at Middlesex University via the following email address: [email protected] The item will be removed from the repository while any claim is being investigated. -

Sensorimotor Control of Octopus-Inspired Soft Robots

Zurich Open Repository and Archive University of Zurich Main Library Strickhofstrasse 39 CH-8057 Zurich www.zora.uzh.ch Year: 2013 Learning from the octopus: sensorimotor control of octopus-inspired soft robots Li, Tao Posted at the Zurich Open Repository and Archive, University of Zurich ZORA URL: https://doi.org/10.5167/uzh-91630 Dissertation Published Version Originally published at: Li, Tao. Learning from the octopus: sensorimotor control of octopus-inspired soft robots. 2013, University of Zurich, Faculty of Economics. Department of Informatics Learning from the Octopus: Sensorimotor Control of Octopus-Inspired Soft Robots A dissertation submitted to the Faculty of Economics, Business Administration and Information Technology of the University of Zurich for the degree of Doctor of Science (Ph.D.) by Tao Li from China Accepted on the recommendation of Prof. Dr. Rolf Pfeifer Prof. Akio Ishiguro, Ph.D. 2013 The Faculty of Economics, Business Administration and Information Technol- ogy of the University of Zurich herewith permits the publication of the afore- mentioned dissertation without expressing any opinion on the views contained therein. Zurich, October 23, 2013 Head of the Ph.D. committee for informatics: Prof. Abraham Bernstein, Ph.D Abstract Soft robotics is one of the most promising, yet challenging, research topics in bio-inspired robotics. In terms of morphological and behavioral flexibility as well as interaction safety, soft robots have significant advantages over traditional articulated rigid robots. However, it is difficult to achieve autonomous sensorimotor control of a soft robot by conventional engineering approaches primar- ily because of its complex nonlinear soft body dynamics. Most soft robots are currently controlled only in an open-loop scheme. -

PRE-HISPANIC CENTRAL MEXICAN HISTORIOGRAPHY One of Tlie

www.senado2010.gob.mx PRE-HISPANIC CENTRAL MEXICAN HISTORIOGRAPHY H. B. NICIIOLSON Los indígenas no sólo de MCnico, sino de toda Mesoamhica, poseían una ver- dadera vocación histórica y relataban y esnibían historia . No me parece justo, después de quemarles a los indios sus historias, declarar que no las escribían. INTRODUCTION One of tlie leading diagnostics of the Mesoamerican Area Co-Tradition was the detailed recording of past events over relatively long time spans. 'í'his "cliroiiiclc conciousness", as 1 have elsewliere (Nicholson 1955b) refcrred to it, was much more fully developed here than in any other aboriginal New World region. Even many Old World cultures assigned to a substantially higlier rung on the ladder of cultural complexity cannot offer historical records nearly as rich or extending over such long periods. Students interested in the historical aspect of WIesoamerican studies Iiave always intensively utilized these native chronicles, but less attention has been directed to the native concepts of history and to transinission media and techniques. In a preliminary paper, deliver- cd orally 9 years ago an published only in brief abstract form (Ni- cholson 1963), 1 hriefly discussed the former aspect. Mesoamerican concepts of history -which would include consideration of why such a strong interest in history fluorished in this are- deserve much more analysis tlian they have yet re~eived.~However: this paper will iiot be concerned with concepts but rather will focus on the methods employed to transmit knowledge of the past aud the kinds of events recorded -its historiography, if you wili- in one Meso- amcrican subarea, Central Mexico. -

Inter·American Tropical Tuna Commission

INTER·AMERICAN TROPICAL TUNA COMMISSION Special Report No.5 ORGANIZATION, FUNCTIONS, AND ACHIEVEMENTS OF THE t INTER-AMERICAN TROPICAL TUNA COMMISSION I ~. t I, ! by i ~ Clifford L. Peterson and William H. Bayliff Ii f I I t La Jolla, California 1985 TABLE OF CONTENTS INTRODUCTION. • 1 AREA COVERED BY THE CONVENTION. 1 SPECIES COVERED BY THE CONVENTION AND SUBSEQUENT INSTRUCTIONS 3 Yellowtin tuna • 3 Skipjack tuna. 3 Northern bluetin tuna. 4 Other tunas. 5 Billtishes 6 Baittishes 6 Dolphins • 7 ORGANIZATION. 7 Membership • 7 Languages. 8 Commissioners. 8 Meetings 8 Chairman and secretary 9 Voting • 9 Director of investigations and statf • 9 Headquarters and field laboratories. 10 Finance. 10 RESEARCH PROGRAM. 11 Fish • 11 Fishery statistics. 12 Biology of tunas and billtishes • 14 Biology ot baittishes • 16 Oceanography and meteorology. 16 Stock assessment. 17 Dolphins • • • 19 Data collection • 19 Population assessment • • 20 Attempts to reduce dolphin mortality. • 21 Interactions between dolphins and tunas • 22 REGULATIONS • 22 INTERGOVERNMENTAL MEETINGS. 26 RELATIONS WITH OTHER ORGANIZATIONS. • 27 International. • 27 National • 27 Intranational • • 28 PUBLICATIONS. • • 29 LITERATURE CITED. • 30 FIGURE. • 31 TABLES. • • • 32 APPENDIX 1. • • 35 APPENDIX 2. • 43 INTRODUCTION The Inter-American Tropical Tuna Commission (IATTC) operates under the authority and direction of a Convention originally entered into by the governments of Costa Rica and the United states. The Convention, which came into force in 1950, is open to the adherence by other governments whose nationals participate in the fisheries for tropical tunas in the eastern Pacific Ocean. The member nations of the Commission now are France. Japan, Nicaragua. Panama, and the United States. -

Erika Girdauskaitė ŢMONIŲ REAKCIJA Į ROBOTUS

VYTAUTO DIDŢIOJO UNIVERSITETAS INFORMATIKOS FAKULTETAS TAIKOMOSIOS INFORMATIKOS KATEDRA Erika Girdauskaitė ŢMONIŲ REAKCIJA Į ROBOTUS NAUDOJANT BIO-SIGNALUS IR APKLAUSAS Magistro baigiamasis darbas Taikomosios informatikos studijų programa, valstybinis kodas 621I13003 Informatikos studijų kryptis Vadovas Prof. Tomas Krilavičius _________ __________ (Moksl. laipsnis, vardas, pavardė) (Parašas) (Data) Apginta Doc. Dr. Daiva Vitkutė-Adţgauskienė _________ __________ (Fakulteto dekanas) (Parašas) (Data) Kaunas, 2015 TURINYS SANTRUMPŲ IR TERMINŲ ŢODYNAS .............................................................................................. 3 SANTRAUKA .......................................................................................................................................... 4 ABSTRACT .............................................................................................................................................. 5 1. ĮVADAS............................................................................................................................................ 6 2. ROBOTŲ ANALIZĖS TEORINIAI ASPEKTAI ............................................................................ 8 2.1. Robotų evoliucija ir ateities perspektyvos.................................................................................. 8 2.2. Roboto paţinimo literatūros analizė ........................................................................................... 9 2.3. Robotų sąveikos su ţmonėmis tyrimai ....................................................................................