A Humanoid Robot

Recommended publications

The Tip of a Hospitality Revolution (5:48 Mins) Listening Comprehension with Pre-Listening and Post-Listening Exercises Pre-List

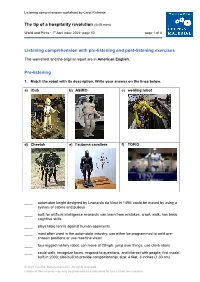

Listening comprehension worksheet by Carol Richards The tip of a hospitality revolution (5:48 mins) World and Press • 1st April issue 2021 • page 10 page 1 of 8 Listening comprehension with pre-listening and post-listening exercises This worksheet and the original report are in American English. Pre-listening 1. Match the robot with its description. Write your answer on the lines below. a) iCub b) ASIMO c) welding robot d) Cheetah e) l’automa cavaliere f) TOPIO ____ automaton knight designed by Leonardo da Vinci in 1495; could be moved by using a system of cables and pulleys ____ built for artificial intelligence research; can learn from mistakes, crawl, walk; has basic cognitive skills ____ plays table tennis against human opponents ____ most often used in the automobile industry; can either be programmed to weld pre-chosen positions or use machine vision ____ four-legged military robot, can move at 28 mph, jump over things, climb stairs ____ could walk, recognize faces, respond to questions, and interact with people; first model built in 2000; also built to provide companionship; size: 4 feet, 3 inches (1.30 m) © 2021 Carl Ed. Schünemann KG. All rights reserved. Copies of this material may only be produced by subscribers for use in their own lessons. 2. Vocabulary practice Match the terms on the left with their German meanings on the right. Write your answers in the grid below. a) billion A Abfindung b) competitive advantage B auf etw. -

An Introduction to the NASA Robotics Alliance Cadets Program

Session F An Introduction to the NASA Robotics Alliance Cadets Program David R. Schneider, Clare van den Blink NASA, DAVANNE, & Cornell University / Cornell University CIT [email protected], [email protected] Abstract The 2006 report National Defense Education and Innovation Initiative highlighted this nation’s growing need to revitalize undergraduate STEM education. In response, NASA has partnered with the DAVANNE Corporation to create the NASA Robotics Alliance Cadets Program to develop innovative, highly integrated and interactive curriculum to redesign the first two years of Mechanical Engineering, Electrical Engineering and Computer Science. This paper introduces the NASA Cadets Program and provides insight into the skill areas targeted by the program as well as the assessment methodology for determining the program’s effectiveness. The paper also offers a brief discussion on the capabilities of the program’s robotic platform and a justification for its design into the program. As an example of the integration of the robotic platform with the program’s methodologies, this paper concludes by outlining one of the first educational experiments of the NASA Cadets Program at Cornell University, to be implemented in the Spring 2007 semester. I. Introduction To be an engineer is to be a designer, a creator of new technology, and the everyday hero that solves society’s problems through innovative methods and products by making ideas become a reality. However, the opportunity to truly explore these key concepts of being an engineer is often withheld from most incoming engineering students until at least their junior year, causing many new students to lose motivation and potentially leave the program. -

Towards a Robot Learning Architecture

From: AAAI Technical Report WS-93-06. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved. Towards a Robot Learning Architecture Joseph O’Sullivan* School Of Computer Science, Carnegie Mellon University, Pittsburgh, PA 15213 email: josu][email protected] Abstract I summarize research toward a robot learning architecture intended to enable a mobile robot to learn a wide range of find-and-fetch tasks. In particular, this paper summarizes recent research in the Learning Robots Laboratory at Carnegie Mellon University on aspects of robot learning, and our current work toward integrating and extending this within a single architecture. In previous work we developed systems that learn action models for robot manipulation, learn cost-effective strategies for using sensors to approach and classify objects, learn models of sonar sensors for map building, learn reactive control strategies via rein- [Body text, first column] continuously improves its performance through learning. Such a robot must be capable of an autonomous existence during which its world knowledge is continuously refined from experience as well as from teachings. It is all too easy to assume that a learning agent has unrealistic initial capabilities such as a prepared environment map or perfect knowledge of its actions. To ground our research, we use a Heath/Zenith Hero 2000 robot (named simply "Hero"), a commercial wheeled mobile manipulator with a two finger hand, as a testbed on which success or failure is judged. In this paper, I describe the steps being taken to design and implement a learning robot agent. In Design Principles, an outline is stated of the believed requirements for a successful agent. -

Inverse Kinematics at the Anthropomorphic Robots, by a Trigonometric Method

American Journal of Engineering and Applied Sciences Original Research Paper Inverse Kinematics at the Anthropomorphic Robots, by a Trigonometric Method 1Relly Victoria V. Petrescu, 2Raffaella Aversa, 3Bilal Akash, 4Ronald B. Bucinell, 5Juan M. Corchado, 2Antonio Apicella and 1Florian Ion T. Petrescu 1ARoTMM-IFToMM, Bucharest Polytechnic University, Bucharest, (CE), Romania 2Advanced Material Lab, Department of Architecture and Industrial Design, Second University of Naples, 81031 Aversa (CE), Italy 3Dean of School of Graduate Studies and Research, American University of Ras Al Khaimah, UAE 4Union College, USA 5University of Salamanca, Spain Article history Received: 03-01-2017 Revised: 05-01-2017 Accepted: 27-04-2017 Corresponding Author: Florian Ion T. Petrescu ARoTMM-IFToMM, Bucharest Polytechnic University, Bucharest, (CE) Romania Email: [email protected] Abstract: A robot is a machine especially programmable one through a computer capable of performing a complex series of actions in the automatic mode. Robots may be guided by a control device or external control may be incorporated in the inside. Robots can be built to take human form, but most robots are machines designed to perform a task without taking into account the manner in which it looks. The branch of the technology which is concerned with the design, construction, operation and application of robots, as well as other information systems for their control, the feedback of the touch screen and processing information is quite robotic. These technologies do with automatic machine, which may take the place of the people in hazardous environments or manufacturing processes, or looks like people in appearance, behavior and/or cognitive. Many of the robots of today are inspired by nature which contributes to the field of robotics bio-inspired by it. -

Design of a Drone with a Robotic End-Effector

Design of a Drone with a Robotic End-Effector Suhas Varadaramanujan, Sawan Sreenivasa, Praveen Pasupathy, Sukeerth Calastawad, Melissa Morris, Sabri Tosunoglu Department of Mechanical and Materials Engineering Florida International University Miami, Florida 33174 [email protected], [email protected], [email protected], [email protected], [email protected], [email protected] ABSTRACT The concept presented involves a combination of a quadcopter drone and an end-effector arm, which is designed with the capability of lifting and picking fruits from an elevated position. The inspiration for this concept was obtained from the swarm robots which have an effector arm to pick small cubes, cans to even collecting experimental samples as in case of space exploration. The system as per preliminary analysis would contain two physically separate components, but linked with a common algorithm which includes controlling of the drone’s positions along with the movement of the arm. Keywords [Body text, second column] example, the widely-used predator drone for military purposes is the MQ-1 by General Atomics which is remote controlled, UAVs typically fall into one of six functional categories (i.e. target and decoy, reconnaissance, combat, logistics, R&D, civil and commercial). With the advent of aerial robotics technology, UAVs became more sophisticated and led to development of quadcopters which gained popularity as mini-helicopters. A quadcopter, also known as a quadrotor helicopter, is lifted by means of four rotors. In operation, the quadcopters generally use two pairs of identical fixed pitched propellers; two clockwise (CW) and two counterclockwise (CCW). They use independent variation of the speed of each rotor to achieve control. -

College4kids Summer Career Academies 2014

College4Kids Summer Career Academies 2014 at Piedmont Virginia Community College For Rising 3rd-9th Graders • June 16-Aug. 15, 2014 www.pvcc.edu/academies Explore your interests with new friends. Have fun while you learn! • Enjoy hands-on, project-based learning! For Rising 6th-9th Graders Acting for Film Lights! Camera! Action! Want to be a film actor? You’ll have a blast in this workshop learning script analysis, experiencing moment-to-moment acting techniques, performing for the camera and establishing a confident performance through the pursuit of dramatic intention. You’ll be assigned a scene from a Hollywood movie to develop a character, work with scene partners and perform on camera. Robert Wray is a teacher, playwright and actor, with appearances in numerous films and New York City theater productions. M-F 7/7-7/11 8:45-11:45 a.m. $125 Architecture If you could design your own building, what would it look like? Dream big, then design it in detail! Create architectural drawings by hand, build a physical 3-D model and then create a virtual model with Google SketchUp. “Great teacher. Best computer class yet.” – Computer Science Student Building a Computer From Parts Can you count to two? If yes, then you can build a complete computer system from parts and load the operating system. Learn about computer system fundamentals including the binary number system and logic gates, the elements of any computer system and operations at a basic level. M-F 6/16-6/20 12:30-3:30 p.m. $189 M-F 7/14-7/18 12:30-3:30 p.m. $189 Computer Numerical Control (CNC): Learn to Manufacture! -

Surveillance Robot Controlled Using an Android App Project Report Submitted in Partial Fulfillment of the Requirements for the Degree Of

Surveillance Robot controlled using an Android app Project Report Submitted in partial fulfillment of the requirements for the degree of Bachelor of Engineering by Shaikh Shoeb Maroof Nasima (Roll No.12CO92) Ansari Asgar Ali Shamshul Haque Shakina (Roll No.12CO106) Khan Sufiyan Liyaqat Ali Kalimunnisa (Roll No.12CO81) Mir Ibrahim Salim Farzana (Roll No.12CO82) Supervisor Prof. Kalpana R. Bodke Co-Supervisor Prof. Amer Syed Department of Computer Engineering, School of Engineering and Technology Anjuman-I-Islam’s Kalsekar Technical Campus Plot No. 2 3, Sector -16, Near Thana Naka, Khanda Gaon, New Panvel, Navi Mumbai. 410206 Academic Year : 2014-2015 CERTIFICATE Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam’s Kalsekar Technical Campus Khanda Gaon, New Panvel, Navi Mumbai. 410206 This is to certify that the project entitled “Surveillance Robot controlled using an Android app” is a bonafide work of Shaikh Shoeb Maroof Nasima (12CO92), Ansari Asgar Ali Shamshul Haque Shakina (12CO106), Khan Sufiyan Liyaqat Ali Kalimunnisa (12CO81), Mir Ibrahim Salim Farzana (12CO82) submitted to the University of Mumbai in partial fulfillment of the requirement for the award of the degree of “Bachelor of Engineering” in Department of Computer Engineering. Prof. Kalpana R. Bodke Prof. Amer Syed Supervisor/Guide Co-Supervisor/Guide Prof. Tabrez Khan Dr. Abdul Razak Honnutagi Head of Department Director Project Approval for Bachelor of Engineering This project entitled Surveillance robot controlled using an android application by Shaikh Shoeb Maroof Nasima, Ansari Asgar Ali Shamshul Haque Shakina, Mir Ibrahim Salim Farzana, Khan Sufiyan Liyaqat Ali Kalimunnisa is approved for the degree of Bachelor of Engineering in Department of Computer Engineering. -

POVERTY ALLEVIATION: a Role for Technology and Infrastructure?

Fondazione per la Collaborazione tra i Popoli Foundation for World Wide Cooperation Presidente Romano Prodi POVERTY ALLEVIATION: A Role for Technology and Infrastructure? CONCEPT NOTE ENERGY CONNECTIVITY (Communication and learning) HEALTH & FOOD ROMA, 2015 MAY The goal of the conference is twofold: to assess the results of technology-based poverty alleviation projects and to explore the social and political effects of this technology. In particular, we want to start floating an idea that may be out of the ‘mainstream’ of political thinking. We all agree that peace and security are crucial to work for poverty alleviation. The common approach of international aid agencies is to build institutional and governance reform. While this is indispensable, it should not be the only focus. While rushing to create multi-party, parliamentary systems, independent judiciaries and free press, we should not forget the human factor, i.e. the need to build trust and communication among different individuals who will need, quite simply, to work together in order to make those institutions function. Today, in the twenty-first century, some of the most exciting tools available for addressing these issues are technology and innovation. Even with the best of governance and a visionary leadership, if there is no inclusive development, a country cannot move forward. Throughout history technology has been a powerful instrument for economic and social development. Technology played a critical role in reducing poverty in vast areas of the world in the past and can play today a crucial function in the battle against poverty. -

Down-Line Loading on Hero Robot

University of Wollongong Research Online Department of Computing Science Working Paper Series Faculty of Engineering and Information Sciences 1984 Down-line loading on hero robot John Fulcher University of Wollongong, [email protected] Follow this and additional works at: https://ro.uow.edu.au/compsciwp Recommended Citation Fulcher, John, Down-line loading on hero robot, Department of Computing Science, University of Wollongong, Working Paper 84-13, 1984, 39p. https://ro.uow.edu.au/compsciwp/9 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Down-line loading on Hero robot John Fulcher Department of Computing Science University of Wollongong ABSTRACT Expansion of ET-18 (Hero 1.0) Educational Robot in order to provide down-line loading capability from the Unix time-shared operating system is described. This expansion has also enabled up-line saving of programs from Hero to Unix. These facilities are implemented in EPROM form (2716) in the optional ROM socket on Hero's CPU board. The program stored in EPROM is essentially the same as the Utility EPROM used on the expanded ET-3400 Microprocessor Trainers in the Department's Microcomputer Laboratory, with minor modifications to run on Hero. As a direct result of incorporating these facilities into Hero, the Unix down-line load program 'dll' has been upgraded (to allow for up-line save), and an r-file for the Unix general-purpose cross-assembler 'mac' has been written to allow for the use of Robot Interpreter commands (Hero utilizes unused M6800 op. -

Critical Review of Robots and Humans Interaction

http://www.inosr.net/inosr-applied-sciences/ Henry et al INOSR APPLIED SCIENCES 4(1): 22-29, 2018 ©INOSR PUBLICATIONS International Network Organization for Scientific Research ISSN: 2705-165X Critical Review of Robots and Humans Interaction Henry Anthony, Maddison Luna and Joseph Penelope Computer science Colombia University New York, USA ABSTRACT This article shows the critical review of robots and human interaction. A robot is a machine especially one programmable by a computer capable of carrying out a complex series of actions automatically. The idea of automata originates in the mythologies of many cultures around the world. Engineers and inventors from ancient civilizations, including Ancient China,[16] Ancient Greece, and Ptolemaic Egypt, attempted to build self-operating machines, some resembling animals and humans. The growing popularity of robotic automation across a wide range of sectors looks set to continue over the next few years, as businesses look to take advantage of the many benefits it offers. Manufacturers in the UK are starting to invest more in the technology in preparation for our departure from the EU, allowing them to maintain their competitive edge in the market. Naturally, not everyone has been convinced of the advantages robotic automation can deliver. There is still some cautiousness about adapting an existing production line, with some reasonable objections posed by those yet to try the technology. To address both sides of the discussion, we have put together a few brief advantages and disadvantages of using robotic automation. Keywords: Critical, review, Robots, Humans, interaction. INTRODUCTION A robot is a machine especially one programmable by a computer capable of carrying out a complex series of actions [Second column] robots, UAV drones such as General Atomics MQ-1 Predator, and even microscopic nano robots. -

Communicating Simulated Emotional States of Robots by Expressive Movements

Middlesex University Research Repository An open access repository of Middlesex University research http://eprints.mdx.ac.uk Sial, Sara Baber (2013) Communicating simulated emotional states of robots by expressive movements. Masters thesis, Middlesex University. [Thesis] Final accepted version (with author’s formatting) This version is available at: https://eprints.mdx.ac.uk/12312/ Copyright: Middlesex University Research Repository makes the University’s research available electronically. Copyright and moral rights to this work are retained by the author and/or other copyright owners unless otherwise stated. The work is supplied on the understanding that any use for commercial gain is strictly forbidden. A copy may be downloaded for personal, non-commercial, research or study without prior permission and without charge. Works, including theses and research projects, may not be reproduced in any format or medium, or extensive quotations taken from them, or their content changed in any way, without first obtaining permission in writing from the copyright holder(s). They may not be sold or exploited commercially in any format or medium without the prior written permission of the copyright holder(s). Full bibliographic details must be given when referring to, or quoting from full items including the author’s name, the title of the work, publication details where relevant (place, publisher, date), pagination, and for theses or dissertations the awarding institution, the degree type awarded, and the date of the award. If you believe that any material held in the repository infringes copyright law, please contact the Repository Team at Middlesex University via the following email address: [email protected]. The item will be removed from the repository while any claim is being investigated. -

Trade Marks Journal No: 1936 , 13/01/2020 Class 9 2829969 20/10

Trade Marks Journal No: 1936, 13/01/2020 Class 9 2829969 20/10/2014 L.A. INTERNATIONAL PVT. LTD. trading as L.A. INTERNATIONAL PVT. LTD. B-XXX-788, SHERPUR BYE PASS, LUDHIANA (PB.) MANUFACTURER & MERCHANTS Address for service in India/Agents address: PURI TRADE MARK CO. "BRAND HOUSE", 54-55, SUPER CYCLE MARKET, OPP. KWALITY KANDA, GILL ROAD, LUDHIANA-141003 (PUNJAB). Used Since: 01/04/2005 DELHI MOBILE & MOBILE PARTS, THEIR FITTINGS & ACCESSORIES RoadReady 2906450 20/02/2015 HERO MOTOCORP LTD. 34 BASANT LOK, VASANT VIHAR, NEW DELHI 110057 MANUFACTURERS AN INDIAN COMPANY INCORPORATED UNDER THE COMPANIES ACT, 1956 Address for service in India/Agents address: ANAND AND ANAND. B-41, NIZAMUDDIN EAST, NEW DELHI - 110 013. Proposed to be Used DELHI HELMETS AND HEAD PROTECTIVE GEARS IN CLASS 9. 2916801 04/03/2015 GE INTELLIGENT PLATFORMS, INC. Route 29 North and Route 606, Charlottesville Virginia 22911 Manufacturers & Merchants Address for service in India/Attorney address: K & S PARTNERS 109, SECTOR-44, GURGAON - 122 003, NATIONAL CAPITAL REGION, INDIA Proposed to be Used DELHI Computer software for empirical modeling and statistical analysis of data obtained from and the monitoring of process controls systems in the fields of chemical processes, manufacturing processes, instrumentation processes, continuous manufacturing processes, financial processes, biological processes, machine operations, and data sensor operations.