Stability and Static Noise Margin Analysis of Static Random Access Memory

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Chapter 3 Semiconductor Memories

Chapter 3 Semiconductor Memories
Jin-Fu Li, Department of Electrical Engineering, National Central University, Jungli, Taiwan
Advanced Reliable Systems (ARES) Lab.

Outline
• Introduction
• Random Access Memories
• Content Addressable Memories
• Read Only Memories
• Flash Memories

Overview of Memory Types
Semiconductor Memories
• Read/Write Memory or Random Access Memory (RAM)
  – Random Access Memory (RAM): Static RAM (SRAM), Dynamic RAM (DRAM), Register File, Ferroelectric RAM (FRAM), Magnetic RAM (MRAM)
  – Non-Random Access Memory: FIFO/LIFO, Shift Register, Content Addressable Memory (CAM)
• Read Only Memory (ROM): Mask (Fuse) ROM, Programmable ROM (PROM), Erasable PROM (EPROM), Electrically EPROM (EEPROM), Flash Memory

Memory Elements – Memory Architecture
Memory elements may be divided into the following categories:
• Random access memory
• Serial access memory
• Content addressable memory
[Figure: memory architecture. Labels: 2^(m+k) bits, 2^(n-k) words, row decoder, column decoder, k, column mux, sense amp, write buffers, n-bit address, 2^m-bit data I/Os]

1-D Memory Architecture
[Figure: select signals S0 to Sn-1 driving storage elements Word0 to Wordn-1 with m-bit input/output, shown without and with a decoder on address inputs A0 to Ak-1]
• n select signals: S0 to Sn-1
• n select signals are reduced to k address signals: A0 to Ak-1

Memory Architecture
[Figure: labels: row decoder (A0 ... Ak-1, S0 ... Sn-1), Word0 ... Wordni-1, column decoder (A0 ... Aj-1), sense amplifier, read/write circuit, m-bit input/output]
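The reduction from n select signals to k address signals described in the 1-D memory architecture slide can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the function name `decode_one_hot` is hypothetical, and the sketch assumes n = 2^k so that every address maps to exactly one select line.

```python
def decode_one_hot(address: int, k: int) -> list[int]:
    """Model a k-to-2**k decoder: return select signals S0..S(n-1).

    Assumption (not stated in the slides): n = 2**k, so each k-bit
    address asserts exactly one select line.
    """
    n = 1 << k  # number of select signals / words
    if not 0 <= address < n:
        raise ValueError(f"address {address} does not fit in {k} bits")
    return [1 if i == address else 0 for i in range(n)]


# Hypothetical example: 3 address bits (A0..A2) select one of 8 words.
selects = decode_one_hot(address=5, k=3)
print(selects)
```

Only the select line whose index equals the address is asserted, which is why k address pins are enough to choose among 2^k words.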

Error Detection and Correction Methods for Memories Used in System-On-Chip Designs

International Journal of Engineering and Advanced Technology (IJEAT), ISSN: 2249 – 8958, Volume-8, Issue-2S2, January 2019
Error Detection and Correction Methods for Memories used in System-on-Chip Designs
Gunduru Swathi Lakshmi, Neelima K, C. Subhas

ABSTRACT: Memory is the basic necessity in any SoC design. Memories are classified into single port memory and multiport memory. Multiport memory has ability to source more efficient execution of operation and high speed performance when compared to single port. Testing of semiconductor memories is increasing because of high density of current in the chips. Due to increase in embedded on chip memory and memory density, the number of faults grow exponentially. Error detection works on concept of redundancy where extra bits are added for original data to detect the error bits. Error correction is done in two forms: one is receiver itself corrects the data and other is receiver sends the error bits to sender through feedback. Error detection and correction can be done in two ways. One is Single bit and other is multiple bit.

Static Random Access Memory (SRAM): It consists of a latch or flip-flop to store each bit of memory and it does not require any refresh operation. SRAM is mostly used in cache memory and in hand-held devices. It has advantages like high speed and low power consumption. It has drawbacks like complex structure and high cost. So, it is not used for high capacity applications.

Read Only Memory (ROM): It is a non-volatile memory. It can only access data but cannot modify data. It is of low cost. Some applications of ROM are scanners, ID cards, Fax
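The abstract's statement that error detection relies on redundancy, with extra bits added to the original data, can be illustrated with the simplest such scheme, a single even-parity bit. This is a sketch under assumptions: the snippet does not say which codes the paper uses, and the function names `add_even_parity` and `parity_error` are hypothetical.

```python
def add_even_parity(data_bits: list[int]) -> list[int]:
    """Append one redundant bit so the stored word has an even number of 1s."""
    return data_bits + [sum(data_bits) % 2]


def parity_error(word: list[int]) -> bool:
    """Return True if the word (data + parity bit) has odd parity."""
    return sum(word) % 2 != 0


# Hypothetical 4-bit data word stored with its parity bit.
stored = add_even_parity([1, 0, 1, 1])

# Flip one bit to model a single-bit fault in the memory cell.
single_fault = stored.copy()
single_fault[2] ^= 1

# Flip two bits: a single parity bit cannot detect this case.
double_fault = stored.copy()
double_fault[0] ^= 1
double_fault[1] ^= 1

print(parity_error(stored), parity_error(single_fault), parity_error(double_fault))
```

A single parity bit detects any odd number of flipped bits but cannot locate or correct them, which is one reason memory designs often move to stronger codes when correction is required.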

Low Voltage, One-Time Programmable, Read-Only Memory

Atmel AT27LV512A
512K (64K x 8) Low Voltage, One-time Programmable, Read-only Memory

Features
• Fast read access time – 90ns
• Dual voltage range operation
  – Low voltage power supply range, 3.0V to 3.6V, or
  – Standard power supply range, 5V ± 10%
• Compatible with JEDEC standard Atmel® AT27C512R
• Low-power CMOS operation
  – 20µA max standby (less than 1µA, typical) for VCC = 3.6V
  – 29mW max active at 5MHz for VCC = 3.6V
• JEDEC standard package
  – 32-lead PLCC
• High-reliability CMOS technology
  – 2,000V ESD protection
  – 200mA latchup immunity
• Rapid programming algorithm – 100µs/byte (typical)
• CMOS- and TTL-compatible inputs and outputs
  – JEDEC standard for LVTTL
• Integrated product identification code
• Industrial temperature range
• Green (Pb/halide-free) packaging option

1. Description
The Atmel AT27LV512A is a high-performance, low-power, low-voltage, 524,288-bit, one-time programmable, read-only memory (OTP EPROM) organized as 64K by 8 bits. It requires only one supply in the range of 3.0 to 3.6V in normal read mode operation, making it ideal for fast, portable systems using battery power. The Atmel innovative design techniques provide fast speeds that rival 5V parts, while keeping the low power consumption of a 3.3V supply. At VCC = 3.0V, any byte can be accessed in less than 90ns. With a typical power dissipation of only 18mW at 5MHz and VCC = 3.3V, the AT27LV512A consumes less than one-fifth the power of a standard, 5V EPROM. Standby mode supply current is typically less than 1µA at 3.3V. The AT27LV512A is available in industry-standard, JEDEC-approved, one-time programmable (OTP) PLCC package.

Semiconductor Memories

Semiconductor Memories
Prof. MacDonald

Types of Memories
• Volatile Memories
  – require power supply to retain information
  – dynamic memories: use charge to store information and require refreshing
  – static memories: use feedback (latch) to store information, no refresh required
• Non-Volatile Memories
  – ROM (Mask)
  – EEPROM
  – FLASH – NAND or NOR
  – MRAM

Memory Hierarchy
• RF: 100pS, 100's of bytes
• L1 SRAM: 1nS, 10's of Kbytes
• L2 SRAM: 10nS, 100's of Kbytes
• L3 DRAM: 100nS, 100's of Mbytes
• Disks / Flash: 1us, Gbytes

Memory Hierarchy
• Large memories are slow
• Fast memories are small
• Memory hierarchy gives us illusion of large memory space with speed of small memory.
  – temporal locality
  – spatial locality

Register Files
• Fastest and most robust memory array
• Largest bit cell size
• Basically an array of large latches
• No sense amps – bits provide full rail data out
• Often multi-ported (i.e. 8 read ports, 2 write ports)
• Often used with ALUs in the CPU as source/destination
• Typically less than 10,000 bits
  – 32 32-bit fixed point registers
  – 32 60-bit floating point registers

SRAM
• Same process as logic so often combined on one die
• Smaller bit cell than register file – more dense but slower
• Uses sense amp to detect small bit cell output
• Fastest for reads and writes after register file
• Large per bit area costs
  – six transistors (single port), eight transistors (dual port)
• L1 and L2 Cache on CPU is always SRAM
• On-chip Buffers – (Ethernet buffer, LCD buffer)
• Typical sizes 16k by 32

Static Memory

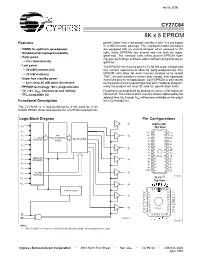

8K X 8 EPROM Features Powers Down Into a Low-Power Standby Mode

CY27C64 8K x 8 EPROM (fax id: 3006)

Features
• CMOS for optimum speed/power
• Windowed for reprogrammability
• High speed
  – 0 ns (commercial)
• Low power
  – 40 mW (commercial)
  – 30 mW (military)
• Super low standby power
  – Less than 85 mW when deselected
• EPROM technology 100% programmable
• 5V ± 10% VCC, commercial and military
• TTL-compatible I/O

Functional Description
The CY27C64 is a high-performance 8192 word by 8 bit CMOS PROM. When deselected, the CY27C64 automatically powers down into a low-power standby mode. It is packaged in a 600-mil-wide package. The reprogrammable packages are equipped with an erasure window; when exposed to UV light, these EPROMs are erased and can then be reprogrammed. The memory cells utilize proven EPROM floating-gate technology and byte-wide intelligent programming algorithms.

The EPROM cell requires only 12.5V for the super voltage and low-current requirements allow for gang programming. The EPROM cells allow for each memory location to be tested 100%, as each location is written into, erased, and repeatedly exercised prior to encapsulation. Each EPROM is also tested for AC performance to guarantee that after customer programming, the product will meet DC and AC specification limits.

Reading is accomplished by placing an active LOW signal on OE and CE. The contents of the memory location addressed by the address lines (A0 through A12) will become available on the output lines (O0 through O7).

[Figures: Logic Block Diagram; Pin Configurations (DIP/CerDIP top view, PLCC top view)]

Notes: 1.

Application Note 170 Adding Nonvolatile SRAM Into Embedded Systems

Application Note 170
Adding Nonvolatile SRAM into Embedded Systems
www.maxim-ic.com

INTRODUCTION
Dallas Semiconductor’s nonvolatile (NV) SRAMs are plastic encapsulated modules that combine an SRAM, a power control IC, and a battery to provide high performance NV memory. NV SRAMs are the only NV solution on the market that does not specify a maximum number of write cycles. Additionally, the interface remains the same as a standard SRAM, and the read and write access times remain similar to the SRAM used within the module. NV SRAMs are particularly well suited to microprocessor circuits because of their fast access times and the SRAM interface. External memory buses available on many microprocessors are intended for use with SRAM for data memory. Thus, due to the equivalent interfaces, NV SRAMs can simply replace an SRAM to provide NV storage in many applications. Often in the past, EPROM, EEPROM, and flash have been used for program memory space; however, NV SRAMs may also be used for program memory. This application note will show how to interface an NV SRAM to a microprocessor based system as either program or data memory, and list the advantages that using an NV SRAM will bring to the system compared to other NV memories currently available.

DIFFERENCE BETWEEN EPROM, EEPROM, FLASH AND NV SRAM
Although EPROM, EEPROM, Flash and NV SRAM are compatible NV storage solutions to some extent, accidentally choosing one that is a poor choice for a particular application can be crippling to a design. The main challenges that a microcontroller

17. Semiconductor Memories

17. Semiconductor Memories
Institute of Microelectronic Systems

Overview
• Introduction
• Read Only Memory (ROM)
• Nonvolatile Read/Write Memory (RWM)
• Static Random Access Memory (SRAM)
• Dynamic Random Access Memory (DRAM)
• Summary

Semiconductor Memory Classification
• Non-Volatile Memory
  – Read Only Memory (ROM): Mask-Programmable ROM, Programmable ROM
  – Read/Write Memory (RWM): EPROM, E2PROM, FLASH
• Volatile Memory
  – Read/Write Memory, Random Access: SRAM, DRAM
  – Read/Write Memory, Non-Random Access: FIFO, LIFO, Shift Register

EPROM - Erasable Programmable ROM
E2PROM - Electrically Erasable Programmable ROM
SRAM - Static Random Access Memory
DRAM - Dynamic Random Access Memory
FIFO - First-In First-Out
LIFO - Last-In First-Out

Random Access Memory Array Organization
Memory array
• Memory storage cells
• Address decoders
Each memory cell
• stores one bit of binary information ("0" or "1" logic)
• shares common connections with other cells: rows, columns

Read Only Memory - ROM
• Simple combinatorial Boolean network which produces a specific output for each input combination (address)
• "1" bit stored - absence of an active transistor
• "0" bit stored - presence of an active transistor
• Organized in arrays of 2^N words
• Typical applications:
  – store the microcoded instruction set of a microprocessor
  – store a portion of the operating system for PCs
  – store the fixed programs for

Memory Devices

Memory Devices
CEN433, King Saud University
Dr. Mohammed Amer Arafah

Types of Memory Devices
Two main types of memory:
• ROM (Read Only Memory)
  – Non-volatile data storage (remains valid after power off)
  – For permanent storage of system software and data
  – Can be PROM, EPROM or EEPROM (Flash) memory
• RAM (Random Access Memory)
  – Volatile data storage (data disappears after power off)
  – For temporary storage of application software and data
  – Can be SRAM (static) or DRAM (dynamic)

Memory Pin Connections
Address Inputs: Select the required location in memory. Address lines are numbered from A0 to as many as required to address all memory locations.
• Example: 12-bit address: A0-A11, 2^12 = 4K memory locations
• Today’s memory devices range in capacities up to 1G locations (30 address lines)
• Example: 4K memory: 12 bits: 000H-FFFH, e.g. from 40000H to 40FFFH. Decode this part for CS.
[Figure: memory block with address connections A0 ... AN, output or input/output connections O0 ... OM, and control inputs CE/, OE/, WE/]

Memory Pin Connections
Data Inputs/Outputs (RAM), Data Outputs (ROM)
• Number of lines = width of data storage, usually a byte D0-D7 (M=7)
• Wider processor data buses use multiple of such byte-wide memory devices, e.g. 64-bit: 8 x 8-bit devices
• Sometimes the total memory capacity is expressed in bits, e.g. a 64K x 8-bit = 512 Kbit

Memory Pin Connections
Control Inputs:
• Chip Enable (CE/), or Chip Select (CS/), or simply Select (S/): Select the memory device for READ or WRITE operations.
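The arithmetic in the pin-connection examples above (12 address lines giving 4K locations, 64K x 8-bit giving 512 Kbit, and a 4K device mapped at 40000H to 40FFFH) can be checked with a short Python sketch. The function names are hypothetical, and the chip-select rule shown (compare every address bit above the device's own address lines against the base address) is one common way to decode CS, assumed here rather than taken from the slides.

```python
def locations(address_lines: int) -> int:
    """Number of addressable locations for a given number of address lines."""
    return 1 << address_lines


def capacity_bits(num_locations: int, data_width: int) -> int:
    """Total capacity in bits for a locations x width device."""
    return num_locations * data_width


def chip_selected(address: int, base: int = 0x40000, address_lines: int = 12) -> bool:
    """Assumed CS decode: upper address bits must match those of the base."""
    return (address >> address_lines) == (base >> address_lines)


def device_offset(address: int, address_lines: int = 12) -> int:
    """Lower address bits, which go straight to the device pins A0..A11."""
    return address & ((1 << address_lines) - 1)


print(locations(12))                          # 4K locations
print(capacity_bits(64 * 1024, 8) // 1024)    # capacity in Kbit
print(chip_selected(0x40FFF), chip_selected(0x41000))
print(hex(device_offset(0x40ABC)))
```

With the base at 40000H, the upper bits (the "40" part) drive the chip select while the lower three hex digits, 000H to FFFH, address the 4K locations inside the device.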

Semiconductor Memories

SEMICONDUCTOR MEMORIES
Digital Integrated Circuits, Memory, © Prentice Hall 1995

Chapter Overview
• Memory Classification
• Memory Architectures
• The Memory Core
• Periphery
• Reliability

Semiconductor Memory Classification
• RWM
  – Random Access: SRAM, DRAM
  – Non-Random Access: FIFO, LIFO, Shift Register, CAM
• NVRWM: EPROM, E2PROM, FLASH
• ROM: Mask-Programmed, Programmable (PROM)

Memory Architecture: Decoders
[Figure: N words of M bits (Word 0 to Word N-1) selected by signals S0 to SN-1, shown without and with a decoder on address inputs A0 to AK-1; input-output of M bits]
• N words => N select signals: too many select signals
• Decoder reduces # of select signals: K = log2 N

Array-Structured Memory Architecture
Problem: ASPECT RATIO or HEIGHT >> WIDTH
[Figure: labels: 2^(L-K), bit line, word line, storage cell, row decoder (AK ... AL-1), M·2^K, sense amplifiers / drivers, column decoder (A0 ... AK-1), input-output (M bits)]
• Sense amplifiers / drivers: amplify swing to rail-to-rail amplitude
• Column decoder: selects appropriate word

Hierarchical Memory Architecture
[Figure: labels: row address, column address, block address, global data bus, control circuitry, block selector, global amplifier/driver, I/O]
Advantages:
1. Shorter wires within blocks
2. Block address activates only 1 block => power savings

Memory Timing: Definitions
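The two relations on the decoder and array-structure slides, K = log2 N address bits for N words and the split of an L-bit address into row bits (AK to AL-1) and column bits (A0 to AK-1), can be sketched as follows. The function names and the example values L = 10 and K = 2 are assumptions chosen for illustration.

```python
def address_bits(n_words: int) -> int:
    """Smallest K with 2**K >= n_words (K = log2 N when N is a power of two)."""
    if n_words < 1:
        raise ValueError("n_words must be positive")
    return (n_words - 1).bit_length()


def split_address(address: int, total_bits: int, column_bits: int) -> tuple[int, int]:
    """Split an L-bit address into (row, column).

    Following the slide's labelling, the low K bits (A0..AK-1) feed the
    column decoder and the remaining high bits (AK..AL-1) feed the row decoder.
    """
    if not 0 <= address < (1 << total_bits):
        raise ValueError("address does not fit in total_bits")
    row = address >> column_bits
    column = address & ((1 << column_bits) - 1)
    return row, column


# Hypothetical example: L = 10 address bits, K = 2 column bits.
row, column = split_address(0b1011011101, total_bits=10, column_bits=2)
print(address_bits(1024), row, column)
```

In this example the array has 2^(L-K) = 256 word lines, and each row holds 2^K = 4 words, so the row decoder needs 8 address bits and the column decoder needs 2.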

Introduction to Advanced Semiconductor Memories

CHAPTER 1
INTRODUCTION TO ADVANCED SEMICONDUCTOR MEMORIES

1.1. SEMICONDUCTOR MEMORIES OVERVIEW
The goal of Advanced Semiconductor Memories is to complement the material already covered in Semiconductor Memories. The earlier book covered the following topics: random access memory technologies (SRAMs and DRAMs) and their application to specific architectures; nonvolatile technologies such as the read-only memories (ROMs), programmable read-only memories (PROMs), and erasable PROMs in both ultraviolet erasable (UVPROM) and electrically erasable (EEPROM) versions; memory fault modeling and testing; memory design for testability and fault tolerance; semiconductor memory reliability; semiconductor memories radiation effects; advanced memory technologies; and high-density memory packaging technologies [1]. This section provides a general overview of the semiconductor memories topics that are covered in Semiconductor Memories.

In the last three decades of semiconductor memories' phenomenal growth, the DRAMs have been the largest volume volatile memory produced for use as main computer memories because of their high density and low cost per bit advantage. SRAM densities have generally lagged a generation behind the DRAM. However, the SRAMs offer low-power consumption and high-performance features, which makes them practical alternatives to the DRAMs. Nowadays, a vast majority of SRAMs are being fabricated in the NMOS and CMOS technologies (and a combination of two technologies, also referred to as the mixed-MOS) for commodity SRAMs.

[Figure: MOS Memory Market ($M), Non-Memory IC Market ($M), and Memory % of Total IC Market, 96 to 05*]

Year   MOS Memory Market ($M)   Non-Memory IC Market ($M)   Memory % of Total IC Market
96     36,019                   78,923                      31%
97     29,335                   90,198
98     22,994                   86,078
99     32,288                   97,930
00     49,112                   126,551
01*    51,646                   135,969
02*    56,541                   148,512
03*    70,958                   172,396
04*    94,541                   207,430
05*    132,007                  262,172

A Study About Non-Volatile Memories

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 29 July 2016 | doi:10.20944/preprints201607.0093.v1

Article
A Study about Non‐Volatile Memories
Dileep Kumar*
Department of Information Media, The University of Suwon, Hwaseong‐Si South Korea; [email protected]
* Correspondence: [email protected]; Tel.: +82‐31‐229‐8212

Abstract: This paper presents an upcoming nonvolatile memories (NVM) overview. Non‐volatile memory devices are electrically programmable and erasable to store charge in a location within the device and to retain that charge when voltage supply from the device is disconnected. The non‐volatile memory is typically a semiconductor memory comprising thousands of individual transistors configured on a substrate to form a matrix of rows and columns of memory cells. Non‐volatile memories are used in digital computing devices for the storage of data. In this paper we have given introduction including a brief survey on upcoming NVM's such as FeRAM, MRAM, CBRAM, PRAM, SONOS, RRAM, Racetrack memory and NRAM. In future Non‐volatile memory may eliminate the need for comparatively slow forms of secondary storage systems, which include hard disks.

Keywords: Non‐volatile Memories; NAND Flash Memories; Storage Memories
PACS: J0101

1. Introduction
Memory is divided into two main parts: volatile and nonvolatile. Volatile memory loses any data when the system is turned off; it requires constant power to remain viable. Most kinds of random access memory (RAM) fall into this category.

CSCI 4717/5717 Computer Architecture Basic Organization

CSCI 4717/5717 Computer Architecture
Topic: Internal Memory Details
Reading: Stallings, Sections 5.1 & 5.3

Basic Organization
Memory Cell Operation
• Represent two stable/semi-stable states representing 1 and 0
• Capable of being written to at least once
• Capable of being read multiple times

Semiconductor Memory Types
• Random Access Memory (RAM)
• Read Only Memory (ROM)
• Programmable Read Only Memory (PROM)
• Erasable Programmable Read Only Memory (EPROM)
• Electronically Erasable Programmable Read Only Memory (EEPROM)
• Flash Memory

Random Access Memory
• Misnomer (Last week we learned that the term Random Access Memory refers to accessing individual memory locations directly by address)
• RAM allows reading and writing (electrically) of data at the byte level
• Two types
  – Static RAM
  – Dynamic RAM
• Volatile

Read Only Memory (ROM)
• Sometimes can be erased for reprogramming, but might have odd requirements such as UV light or erasure only at the block level
• Sometimes require special device to program, i.e., processor can only read, not write
• Types
  – EPROM
  – EEPROM
  – Custom Masked ROM
  – OTPROM
  – FLASH

ROM Uses
• Permanent storage – nonvolatile
• Microprogramming
• Library subroutines
• Systems programs (BIOS)
• Function tables
• Embedded system code