Efficient Implementation of the Or

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Téléchargez La Plaquette De La

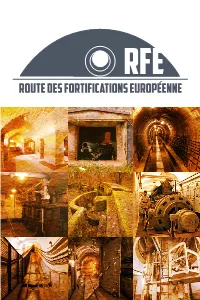

RFE ROUTE DES ROUTE DES FORTIFICATIONSFort de fortifications EUROPÉENNE Schoenenbourg europÉennes RFE ROUTE DES Fort de Mutzig fortifications Feste Kaiser Wilhelm II europÉennes SOMMAIRE Fortifications françaises de 1871 à 1918 Pages 6-9 La région Grand-Est possède sur son territoire un ensemble d’ouvrages fortifiés français et allemands Fort de Villey-le-Sec construits entre 1871 et 1945 véritablement ex- Ouvrage Séré de Rivières (1874-1914)- 54840 Villey-le-Sec ceptionnel. Fort d’Uxegney Ce patrimoine, témoin de deux confrontations armées Ouvrage Séré de Rivières (1884-1914) - 88390 Uxegney majeures en Europe et ainsi lieu de mémoire franco- allemand et européen, a déjà amplement démontré qu’il possède un potentiel pédagogique et qu’il constitue une formidable attraction touristique. Fortifications allemandes de 1871 à 1918 Pages 10-15 C’est pour ces raisons qu’après plus de quarante années de recherches et de publications historiques, de travail Feste Kaiser Wilhelm II de restauration des ouvrages et d’organisation de leur Fort de Mutzig (1893-1918) - 67190 Mutzig visite, neuf sites fortifiés qui accueillent 140 000 visiteurs par an ont décidé d’unir leurs efforts afin de se Feste Obergentringen faire connaître d’un public encore plus large : scolaires, Fort de Guentrange (1899-1906) - 57100 Thionville familles, vacanciers, professionnels du tourisme. Fort Wagner Fort de Verny (1904-1910) - 57420 Pommerieux Cette plaquette a pour but de vous faire découvrir ce patrimoine. Attachez vos ceintures, la visite de ces forts vous fera entrer -

La France Déploie 600 Soldats De Plus Le Grand Huit De Novak

Londres Niederbronn-les-Bains Attaque terroriste : trois Debout sur le zinc a rendu blessés, l’assaillant tué Page 5 hommage à Boris Vian Page 22 Haguenau Les Bleus surclassent Wissembourg l’Angleterre (24-17) en ouverture du www.dna.fr Tournoi des Lundi 3 Février 2020 six nations 1,10 € Page 34 Photo AFP/Martin BUREAU consommation Des boulangeries épinglées sur l’hygiène Une vie de labeur à transmettre Photo archives DNA/Michel FRISON Parmi les commerces, ce sont les boulangeries-pâtisseries qui ont les moins bons résultats en matière d’hygiène, selon une association. Page 2 Sahel La France déploie 600 soldats de plus Photo AFP/Daphné BENOIT Photo archives DNA/Laurent RÉA 3HIMSQB*gabbad+[A\C\K\N\A Face aux djihadistes du Sahel, Un tiers des agriculteurs a plus de 56 ans. Se pose pour eux la question de la transmission de leur les forces françaises de l’opération exploitation. Une démarche d’autant plus difficile qu’il s’agit du travail de toute une vie. Page 13 Barkhane vont passer de 4 500 à 5 100 hommes. Page 3 avec l’éditorial TENNIS BADMINTON Le grand huit Mulhouse : belle Pratique de Novak Djokovic moisson à domicile Hippisme Page 9 Jeux - Horoscope Page 10 Le Serbe a remporté Le Red Star Mulhouse Télévision - météo Pages 11 et 12 son huitième Open d’Australie s’est offert trois médailles d’or Chuchotements Page 14 à Melbourne. Il redevient et une d’argent lors des Sports Pages 23 à 44 numéro 1 mondial. Page 44 Photo AFP/Saeed KHAN championnats de France. -

Auf Den Spuren Eines Riesen… on the Paths of a Giant…

Un peu d'histoire A historical overview Suite à la guerre franco-allemande de 1870-1871, After the Franco-German l'Alsace et la Moselle sont rattachées au nouvel empire War of 1870-1871, the pm 4 to -1.30 pm 12.30 to am 10 allemand sous le nom de “Reischsland Elsass- Alsace and the Moselle : December and mid-october to June from Sundays on Also Open from Monday to Sat day : 9 to 12 am - 2 to 5 pm 5 to 2 - am 12 to 9 : ur day Sat to Monday from Open Lothringen”. Dès 1872, Français et Allemands fortifient were attached to the Ger- Uhr 16 bis 13.30 - Uhr 12.30 bis 10 n la nouvelle frontière afin de préparer une future guerre man Empire and this region : Dezember und mitte-Oktober bis Juni von tags Son Und jugée inévitable. became the "Reichsland Uhr 17 bis 14 - Uhr 12 bis 9 Elsass-Lothrigen". Since : Samstag bis Montag von geöffnet über Jahr ganze Das Horaires élargis selon saison selon élargis Horaires Une fortification impériale : 1872, France and Germany fortify their new border to 13h30-16h et 10h-12h30 : décembre en la décision de construire prepare for a future war, which is assumed to be inevita- et mi-octobre à Juin de dimanches les Egalement une fortification à Mutzig ble. 14h-17h et 9h-12h : Samedi au Lundi du l'année toute Ouvert [email protected] est prise en janvier 1893 par www.ot-molsheim-mutzig.com l'empereur Guillaume II. En An imperial Fort : the deci- 61 11 38 88 +33(0)3 lc elHtld il -72 MOLSHEIM F-67120 Ville de l'Hôtel de Place 9 liaison avec la ceinture for- sion to build the fort of 1 tifiée de Strasbourg, le Mutzig was taken in Janu- MOLSHEIM-MUTZIG DE groupe fortifié de l'empereur aura pour rôle de barrer la ary 1893 by the Emperor REGION LA DE TOURISME DE OFFICE plaine du Rhin contre toute offensive française. -

France Historical AFV Register

France Historical AFV Register Armored Fighting Vehicles Preserved in France Updated 24 July 2016 Pierre-Olivier Buan Neil Baumgardner For the AFV Association 1 TABLE OF CONTENTS INTRODUCTION....................................................................................................4 ALSACE.................................................................................................................5 Bas-Rhin / Lower Rhine (67)........................................................5 Haut-Rhin / Upper Rhine (68)......................................................10 AQUITAINE...........................................................................................................12 Dordogne (24) .............................................................................12 Gironde (33) ................................................................................13 Lot-et-Garonne (47).....................................................................14 AUVERGNE............................................................................................................15 Puy-de-Dôme (63)........................................................................15 BASSE-NORMANDIE / LOWER NORMANDY............................................................16 Calvados (14)...............................................................................16 Manche (50).................................................................................19 Orne (61).....................................................................................21 -

Valles De Vosges Centrales

L’ALSACE À VÉLO Radwandern im Elsass Cycling in Alsace Fietsen in de Elzas Boucles régionales LES VALLÉES DES VOSGES CENTRALES Regionale Fahrradrundwege Die Täler der mittleren Vogesen Regional circular cycle trails 17 The valleys of the middle Vosges Regionale fietstochten De valleien in de Midden-Vogezen 94 km - 1695 m alsaceavelo.fr D1004 Dossenheim- Kuttolsheim Kochersberg D143 Romanswiller Nordheim Obersteigen D143 Wasselonne Fessenheim-le-Bas Brechlingen Quatzenheim Souffel D224 D754 16 Engenthal-le-Bas D224 D824 30 D218 Cosswiller Marlenheim Hurtigheim Wangenbourg-Engenthal Freudeneck D1004 Wangen Furdenheim Kirchheim Ittenheim D75 Schneethal Handschuheim Westhoffen Odratzheim MOSELLE Scharrachbergheim- Wolfsthal Irmstett 22 Osthoffen Dahlenheim D30 Balbronn D218 D275 Bergbieten Soultz- D45 les-Bains D75 Wolxheim Ergersheim Flexbourg D422 Ernolsheim- Dangolsheim 30 Bru Bruche 12 che Avolsheim D218 Dachstein VVA Still D422 Oberhaslach Dinsheim-sur- Bruche Molsheim D30 D392 D30 33 Niederhaslach D392 Départ du circuit / Start der Tour / Heiligenberg Mutzig Start of trail / Vertrekpunt D218 Gresswiller Duttlenheim D1420 Sens du circuit / Richtung der Tour / 33 Direction of trail / Rijrichting Urmatt Dorlisheim Route / Straße / Road / Verharde weg D392 Voie à circulation restreinte/ Lutzelhouse Straße mit eingeschränktem Muhlbach- Verkehr / Restricted access road/ sur-Bruche Mollkirch Weg met beperkt verkeer Wisches Rosheim Parcours cyclables en site propre/ 21 Radweg im Gelände / Separate Laubenheim D704 Hersbach Les Bruyères D204 -

Fortress Study Group Library Catalogue

FSG LIBRARY CATALOGUE OCTOBER 2015 TITLE AUTHOR SOURCE PUBLISHER DATE PAGE COUNTRY CLASSIFICATION LENGTH "Gibraltar of the West Indies": Brimstone Hill, St Kitts Smith, VTC Fortress, no 6, 24-36 1990 West Indies J/UK/FORTRESS "Ludendorff" fortified group of the Oder-Warthe-Bogen front Kedryna, A & Jurga, R Fortress, no 17, 46-58 1993 Germany J/UK/FORTRESS "Other" coast artillery posts of southern California: Camp Haan, Berhow, MA CDSG News Volume 4, 1990 2 USA J/USA/CDSG 1 Camp Callan and Camp McQuaide Number 1, February 1990 100 Jahre Gotthard-Festung, 1885-1985 : Geschichte und Ziegler P GBC, Basel 1986 Switzerland B Bedeutung unserer Alpenfestung [100 years of the Gotthard Fortress, 1885-1985 : history and importance of our Alpine Fortress] 100 Jahre Gotthard-Festung, 1885-1985 : Geschichte und Ziegler P 1995 Switzerland B Bedeutung unserer Alpenfestung [100 years of the Gotthard Fortress, 1885-1985 : history and importance of our Alpine Fortress] 10thC castle on the Danube Popa, R Fortress, no 16, 16-24 1993 Bulgaria J/UK/FORTRESS 12-Inch Breech Loading Mortars Smith, BW CDSG Journal Volume 7, 1993 2 USA J/USA/CDSG 1 Issue 3, November 1993 13th Coast Artillery (Harbor Defense) Regiment Gaines, W CDSG Journal Volume 7, 1993 10 USA J/USA/CDSG 1 Issue 2, May 1993 14th Coast Artillery (Harbor Defense) Regiment, An Organizational Gaines, WC CDSG Journal Volume 9, 1995 17 USA J/USA/CDSG 2 History, The Issue 3, August 1995 16-Inch Batteries at San Francisco and The Evolution of The Smith B Coast Defense Journal 2001 68 USA J/USA/CDSG 2 Casemated 16-Inch Battery, The Volume 15, Issue 1, February 2001 180 Mm Coast Artillery Batteries Guarding Vladivostok,1932-1945 Kalinin, VI et al Coast Defense Journal 2002 25 Russia J/USA/CDSG 2 Part 2: Turret Batteries Volume 16, Issue 1, February 2002 180mm Coast Artillery Batteries Guarding Vladivostok, Russia, Kalinin, VI et al Coast Defense Journal 2001 53 Russia J/USA/CDSG 2 1932-1945: Part 1. -

URMATT (67) D'une Guerre À L'autre

Les documents de Mémorial-GenWeb _______________________________________________________________________ URMATT (67) D'une guerre à l'autre par Marie-Odette BINDEL-SCHNEIDER La guerre 1914-1918 Depuis 1870 le village avait passé, comme toute l'Alsace sous la suzeraineté allemande après le vote de l'Assemblée Nationale réunie à Bordeaux. Dès le 26 juillet 1914, des bruits relatifs à une guerre, circulent dans le village. Au cours de la journée du 1er au 2 août c'est la : Mobilisation Générale. Au cours de cette première guerre mondiale, tous les hommes valides entre 18 et 50 ans furent incorporés dans l'armée allemande et engagés sur différents fronts. En l'absence de la main d'œuvre habituelle les travaux agricoles sont assurés par les femmes, par les hommes de plus de 50 ans et par les écoliers. D'ailleurs, à maintes reprises les congés scolaires furent prolongés ou introduits pour permettre aux écoliers d'effectuer les travaux dans les champs. Les congés scolaires pour rentrer le foin : " d'Heuferien ", durent du 6 au 20 juin. Le 24 juillet 1916 débutent les congés pour la rentrée des céréales : " d'Ernteferien ". En 1917, les congés pour rentrer les récoltes : " d'Herbsferien ", s'étirent du 2 septembre au 21 octobre. Sœur Maximin, l'une des sœurs enseignantes en poste à Urmatt tient la traditionnelle " chronique de l'école : d'Schulkronik " et les lignes tracées de sa main nous apprennent donc l'essentiel de ces années de guerre. Bien vite, après le 1er août 1914, le bruit sourd des canons se fait entendre dans l'arrière de la vallée. -

Vidéo Sites De Mémoire Alsace/Vosges

Cahier des charges Tourisme de mémoire Création d’une vidéo de promotion des sites de mémoire Alsace/Vosges Client ALSACE DESTINATION TOURISME (ADT) Objet de la consultation Réalisation d’une vidéo de promotion des sites de mémoire Alsace/Vosges qui furent le théâtre de nombreux événements historiques, au cœur notamment de trois conflits majeurs depuis la Guerre de 1870 en passant par le premier et le second conflit mondial, jusqu’à la Paix d’aujourd’hui. Date limite de réception des offres Le 13 mai 2020 Contexte Alsace Destination Tourisme est née de la fusion des Comités Départementaux du Tourisme du Haut-Rhin et du Bas-Rhin. Cette nouvelle entité, créée le 1er juillet 2016, est le fruit d’une volonté commune des Conseils Départementaux de donner une impulsion nouvelle à leur action touristique, tant en matière de développement des territoires que d’accompagnement des projets, par la mise en place d’une organisation unique et performante associant tous les acteurs du tourisme. Alsace Destination Tourisme a entre autres pour mission de réaliser la promotion touristique de la destination Alsace, ainsi que celle du Massif des Vosges : STRATEGIE D’INNOVATION ET DE DEVELOPPEMENT TOURISTIQUE POUR L’ALSACE (2017-2021) S’inscrivant dans la compétence partagée du tourisme et du code du Tourisme, ADT met en œuvre sa Stratégie d’innovation et de développement touristique pour l’Alsace, portée et adoptée par les Conseils Départementaux. Cette stratégie est organisée autour de 5 défis : Innover, adapter et réinventer l’offre touristique afin de répondre aux attentes et modes de consommation en constante évolution Améliorer l’expérience client avant, pendant et après son séjour en Alsace, en s’appuyant sur des outils de médiation diversifiés et innovants Passer de l’information à la consommation Assurer une meilleure diffusion des flux des visiteurs sur l’ensemble du territoire Garantir la qualité et tenir la promesse. -

Alsace Lieu De Mémoires Terre Sans Frontière

ALSACE LIEU DE MÉMOIRES TERRE SANS FRONTIÈRE Das Elsass, Ort der Erinnerung, Land ohne Grenze Alsace: a place full of memories, a land without borders Une édition des ADT du Bas-Rhin et du Haut-Rhin [A] [B] [C] [D] 1 1 6 2 3 4 5 8 7 11 9 12 13 10 2 14 15 16 17 3 18 22 19 20 21 23 24 25 26 29 27 32 30 31 28 4 34 33 35 36 5 39 37 38 41 40 6 45 42 44 43 46 7 49 47 48 51 8 50 KdfWhYekhi fWii_eddWdj |igVkZghaÉ]^hid^gZ gXZciZYZaÉ6ahVXZ ;_d_dj[h[iiWdj[hIjh[_\pk]ZkhY^ Y^Z_c\ZgZ<ZhX]^X]iZYZh:ahVhh 7\WiY_dWj_d]`ekhd[o^cidi]ZgZXZci]^hidgnd[6ahVXZ Eg[VXZ$Kdgldgi$EgZ[VXZ 44 Monument des «Diables Rouges» 31 Centre européen du résistant déporté (Denkmal) ........................................................... p.12 ...[B6] (Europäisches Zentrum der deportierten Widerstands- 35 Musée «Hansi» (Museum) ................................ p.5 .....[B5] kämpfer / European centre for deported resistance 42 Monument des «Diables Bleus» (Denkmal) .. p.12 ...[B6] fighters) .............................................................. p.21 ...[B4] 34 Patrimoine du Val d’Argent (Naturerbe 32 Sentier des Passeurs (Weg der Fluchthelfer / AV\jZggZYZ&-,% vom Silbertal / Heritage of Val d’Argent) ....... p.13 ...[B5] Smuggler’s Trail) ............................................... p.21 ...[B-A4] 9Zg@g^Z\kdc&-,% 36 «Tête des Faux» (Buchenkopf) ...................... p.13 ...[B5] 51 Monument «Groupe Mobile d’Alsace» I]Z;gVcXd"Egjhh^VcLVgd[&-,% 39 Mémorial du Linge (Gedenkstätte (Denkmal) .......................................................... p.21 ...[B8] am Lingekopf / Linge memorial) .................... p.14 ...[B5] 50 Ferme des «Ebourbettes» (Hof / Farm) ........ p.22 ...[B8] 1 Champs de bataille du «Geisberg» 41 Circuit historique (Historischer (Schlachtfelder / Battlefields) ......................... -

Télécharger Le

TEMPS FORTS SPOTS WISSEMBOURG LE RHIN LÉGENDE 01 LA ROUTE DES VINS D’ALSACE STRASBOURG MON AMOUR / FÉVRIER INSTAGRAMABLES 3 LE CARNAVAL DE MULHOUSE / MARS LA ROUTE DES CRÊTES R PA CLEEBOURG LE PRINTEMPS D’ALSACE À OBERNAI / MARS É RC E L LE RHIN LE CHÂTEAU DU HAUT-KOENIGSBOURG GI N AT U R COLMAR FÊTE LE PRINTEMPS / AVRIL ON ES 02 1 LE MONT SAINTE-ODILE MOSELLE AL DES VOSG UNESCO LE CORTÈGE DU FEUILLU À L’ECOMUSÉE D’ALSACE / MAI LE DONON A4 MEISENTHAL D 03 LE PIQUE-NIQUE DES VIGNERONS ET LES APÉROS GOURMANDS / MAI U NORD VIGNOBLE LA CATHÉDRALE DE STRASBOURG POTERIE PISTEURS D’ÉTOILES À OBERNAI / MAI 05 LA PETITE VENISE À COLMAR MUSÉE BETSCHDORF LES PLUS BEAUX VILLAGES L A JOURNÉE DES CHÂTEAUX FORTS DANS TOUTE L’ALSACE / 1ER MAI 04 LA PETITE FRANCE À STRASBOURG LALIQUE DE FRANCE LE SLOW UP / JUIN LA MAISON DES PONTS COUVERTS À STRASBOURG Wingen-sur-Moder POTERIE HUNSPACH LA STREISSELHOCHZEIT DE SEEBACH / JUILLET LE PONT FORTIFIÉ DE KAYSERSBERG SOUFFLENHEIM MITTELBERGHEIM LE FESTIVAL INTERNATIONAL DE COLMAR / JUILLET D1063 LA TOUR DE GARDE DE RIQUEWIHR LA PETITE 06 BADEN-BADEN HUNAWIHR LE FESTIVAL DÉCIBULLES DANS LA VALLÉE DE VILLÉ / JUILLET LE M.U.R À MULHOUSE PIERRE HAGUENAU RIQUEWIHR LE FESTIVAL DU HOUBLON À HAGUENAU/ AOÛT EGUISHEIM LE CORSO FLEURI À SÉLESTAT / AOÛT A35 A4 FOIRE AUX VINS D’ALSACE À COLMAR / AOÛT SAVERNE D1340 CATHÉDRALE LES PLUS BEAUX DÉTOURS LA FÊTE DES MÉNÉTRIERS À RIBEAUVILLÉ / SEPTEMBRE 07 DE STRASBOURG DE FRANCE 08 LA FÊTE DES VENDANGES À BARR / SEPTEMBRE LA MA E RNE AU RHIN THANN ST-ART -

Alsace Lieu De Mémoires Terre Sans Frontière

ALSACE LIEU DE MÉMOIRES TERRE SANS FRONTIÈRE Das Elsass, Ort der Erinnerung, Land ohne Grenze Alsace: a place full of memories, a land without borders Une édition des ADT du Bas-Rhin et du Haut-Rhin [A] [B] [C] [D] 1 1 6 2 3 4 5 8 7 11 9 12 13 10 2 14 15 16 17 3 18 22 19 20 21 23 24 25 26 29 27 32 30 31 28 4 34 33 35 36 5 39 37 38 41 40 6 45 42 44 43 46 7 49 47 48 51 8 50 KdfWhYekhi fWii_eddWdj |igVkZghaÉ]^hid^gZ gXZciZYZaÉ6ahVXZ ;_d_dj[h[iiWdj[hIjh[_\pk]ZkhY^ Y^Z_c\ZgZ<ZhX]^X]iZYZh:ahVhh 7\WiY_dWj_d]`ekhd[o^cidi]ZgZXZci]^hidgnd[6ahVXZ Eg[VXZ$Kdgldgi$EgZ[VXZ 44 Monument des «Diables Rouges» 31 Centre européen du résistant déporté (Denkmal) ........................................................... p.12 ...[B6] (Europäisches Zentrum der deportierten Widerstands- 35 Musée «Hansi» (Museum) ................................ p.5 .....[B5] kämpfer / European centre for deported resistance 42 Monument des «Diables Bleus» (Denkmal) .. p.12 ...[B6] fighters) .............................................................. p.21 ...[B4] 34 Patrimoine du Val d’Argent (Naturerbe 32 Sentier des Passeurs (Weg der Fluchthelfer / AV\jZggZYZ&-,% vom Silbertal / Heritage of Val d’Argent) ....... p.13 ...[B5] Smuggler’s Trail) ............................................... p.21 ...[B-A4] 9Zg@g^Z\kdc&-,% 36 «Tête des Faux» (Buchenkopf) ...................... p.13 ...[B5] 51 Monument «Groupe Mobile d’Alsace» I]Z;gVcXd"Egjhh^VcLVgd[&-,% 39 Mémorial du Linge (Gedenkstätte (Denkmal) .......................................................... p.21 ...[B8] am Lingekopf / Linge memorial) .................... p.14 ...[B5] 50 Ferme des «Ebourbettes» (Hof / Farm) ........ p.22 ...[B8] 1 Champs de bataille du «Geisberg» 41 Circuit historique (Historischer (Schlachtfelder / Battlefields) ......................... -

A La Découverte Du Patrimoine Militaire Terrifortain

2020 2021 À la découverte du Patrimoine Militaire Territoire de Belfort SOMMAIRE I. Appartenir à un pays (autour de la guerre de 1870-1871) ................................................................. 2 II. Exposition Un Territoire de défense ................................................................................................. 4 III. Histoire d’une frontière, la naissance du Territoire de Belfort ........................................................... 5 IV. Concours du meilleur Petit Journal du Patrimoine Militaire Terrifortain ........................................... 7 V. Fort OTAN....................................................................................................................................... 9 VI. Visites des expositions du Musée d’Art et d’Histoire de Belfort : ................................................... 10 VII. Visites théâtralisées du Musée d’Histoire ...................................................................................... 11 VIII. Monuments de Mémoire .............................................................................................................. 13 IX. Parcours « Belfort en Résistance, 1870-1945 » ............................................................................... 15 X. Le système de fortification Séré de Rivières ................................................................................... 16 XI. Visite du Fort de Vézelois .............................................................................................................