Use of Classification Algorithms for the Ice Jams Forecasting Problem

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Periglacial Climate and Environment in Northern Eurasia

ARTICLE IN PRESS. Quaternary Science Reviews 23 (2004) 1333–1357. The periglacial climate and environment in northern Eurasia during the Last Glaciation. Hans W. Hubberten (a,*), Andrei Andreev (a), Valery I. Astakhov (b), Igor Demidov (c), Julian A. Dowdeswell (d), Mona Henriksen (e), Christian Hjort (f), Michael Houmark-Nielsen (g), Martin Jakobsson (h), Svetlana Kuzmina (i), Eiliv Larsen (j), Juha Pekka Lunkka (k), Astrid Lyså (j), Jan Mangerud (e), Per Möller (f), Matti Saarnisto (l), Lutz Schirrmeister (a), Andrei V. Sher (m), Christine Siegert (a), Martin J. Siegert (n), John Inge Svendsen (o). Affiliations: (a) Alfred Wegener Institute for Polar and Marine Research (AWI), Telegrafenberg A43, Potsdam D-14473, Germany; (b) Geological Faculty, St. Petersburg University, Universitetskaya 7/9, St. Petersburg 199034, Russian Federation; (c) Institute of Geology, Karelian Branch of Russian Academy of Sciences, Pushkinskaya 11, Petrozavodsk 125610, Russian Federation; (d) Scott Polar Research Institute and Department of Geography, University of Cambridge, Cambridge CB2 1ER, UK; (e) Department of Earth Science, University of Bergen, Allégt. 41, Bergen N-5007, Norway; (f) Quaternary Science, Department of Geology, Lund University, Geocenter II, Sölvegatan 12, Lund, Sweden; (g) Geological Institute, University of Copenhagen, Øster Voldgade 10, Copenhagen DK-1350, Denmark; (h) Center for Coastal and Ocean Mapping, Chase Ocean Engineering Lab, University of New Hampshire, Durham, NH 03824, USA; (i) Paleontological Institute, RAS, Profsoyuznaya ul., 123, Moscow 117868, Russia; (j) Geological Survey of Norway, PO Box 3006 Lade, Trondheim N-7002, Norway

Nepcon CB Public Summary Report V1.4 Main Audit Sokol Timber

NEPCon Evaluation of “Sokol Timber Company” Joint-Stock Company Compliance with the SBP Framework: Public Summary Report. Main (Initial) Audit. www.sbp-cert.org. Focusing on sustainable sourcing solutions. Completed in accordance with the CB Public Summary Report Template Version 1.4. For further information on the SBP Framework and to view the full set of documentation see www.sbp-cert.org. Document history: Version 1.0, published 26 March 2015; Version 1.1, published 30 January 2018; Version 1.2, published 4 April 2018; Version 1.3, published 10 May 2018; Version 1.4, published 16 August 2018. © Copyright The Sustainable Biomass Program Limited 2018. Table of Contents: 1 Overview; 2 Scope of the evaluation and SBP certificate; 3 Specific objective; 4 SBP Standards utilised; 4.1 SBP Standards utilised; 4.2 SBP-endorsed Regional Risk Assessment; 5 Description of Company, Supply Base and Forest Management; 5.1 Description of Company; 5.2 Description of Company’s Supply Base; 5.3 Detailed description of Supply Base; 5.4 Chain of Custody system; 6 Evaluation process; 6.1 Timing of evaluation activities; 6.2 Description of evaluation activities; 6.3 Process for consultation with stakeholders; 7 Results; 7.1 Main strengths and weaknesses; 7.2 Rigour of Supply Base Evaluation; 7.3 Compilation of data on Greenhouse Gas emissions; 7.4 Competency of involved personnel; 7.5 Stakeholder feedback; 7.6 Preconditions; 8 Review

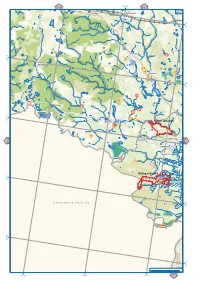

Atlas of High Conservation Value Areas, and Analysis of Gaps and Representativeness of the Protected Area Network in Northwest R

[Map sheet labels only: coordinate ticks (34°20'–36°40' E, 58°50'–59°30' N), sheet numbers (216–219, 236–239, 251–252), scale bars in km, and names of settlements, rivers and protected areas (zakazniks, protected mires, existing and planned nature monuments) around Efimovsky, Babaevo, Chagoda and Sazonovo, adjoining Novgorod Region.]

Interdisciplinary and Comparative Methodologies

The Retrospective Methods Network Newsletter. Interdisciplinary and Comparative Methodologies. № 14. Exploring Circum-Baltic Cultures and Beyond. Guest Editors: Joonas Ahola and Kendra Willson. Published by Folklore Studies / Department of Cultures, University of Helsinki, Helsinki. RMN Newsletter is a medium of contact and communication for members of the Retrospective Methods Network (RMN). The RMN is an open network which can include anyone who wishes to share in its focus. It is united by an interest in the problems, approaches, strategies and limitations related to considering some aspect of culture in one period through evidence from another, later period. Such comparisons range from investigating historical relationships to the utility of analogical parallels, and from comparisons across centuries to developing working models for the more immediate traditions behind limited sources. RMN Newsletter sets out to provide a venue and emergent discourse space in which individual scholars can discuss and engage in vital cross-disciplinary dialogue, present reports and announcements of their own current activities, and where information about events, projects and institutions is made available. RMN Newsletter is edited by Frog, Helen F. Leslie-Jacobsen, Joseph S. Hopkins, Robert Guyker and Simon Nygaard, published by: Folklore Studies / Department of Cultures, University of Helsinki, PO Box 59 (Unioninkatu 38 C 217), 00014 University of Helsinki, Finland. The open-access electronic edition of this publication is available on-line at: https://www.helsinki.fi/en/networks/retrospective-methods-network Interdisciplinary and Comparative Methodologies: Exploring Circum-Baltic Cultures and Beyond is a special issue organized and edited by Frog, Joonas Ahola and Kendra Willson. © 2019 RMN Newsletter; authors retain rights to reproduce their own works and to grant permission for the reproductions of those works.

Monuments of Church Architecture in Belozersk: Late Sixteenth to the Early Nineteenth Centuries

Russian History 44 (2017) 260-297, brill.com/ruhi. Monuments of Church Architecture in Belozersk: Late Sixteenth to the Early Nineteenth Centuries. William Craft Brumfield, Professor of Slavic Studies and Sizeler Professor of Jewish Studies, Department of Germanic and Slavic Studies, Tulane University, New Orleans, [email protected]. Abstract: The history of the community associated with the White Lake (Beloe Ozero) is a rich one. This article covers a brief overview of the developing community from medieval through modern times, and then focuses the majority of its attention on the church architecture of Belozersk. This rich tradition of material culture increases our knowledge about medieval and early modern Rus’ and Russia. Keywords: Beloozero – Belozersk – Russian Architecture – Church Architecture. The origins and early location of Belozersk (now a regional town in the center of Vologda oblast’) are subject to discussion, but it is incontestably one of the oldest recorded settlements among the eastern Slavs. “Beloozero” is mentioned in the Primary Chronicle (or Chronicle of Bygone Years; Povest’ vremennykh let) under the year 862 as one of the five towns granted to the Varangian brothers Riurik, Sineus and Truvor, invited (according to the chronicle) to rule over the eastern Slavs in what was then called Rus’.¹ ¹ The Chronicle text in contemporary Russian translation is as follows: “В год 6370 (862). И изгнали варягов за море, и не дали им дани, и начали сами собой владеть, и не было среди них правды, и встал род на род, и была у них усобица, и стали воевать друг с другом. И сказали: «Поищем сами себе князя, который бы владел нами и рядил по ряду и по закону».” [In English: “In the year 6370 (862). They drove the Varangians beyond the sea and gave them no tribute, and began to govern themselves; and there was no justice among them, and clan rose against clan, and there was strife among them, and they began to fight one another. And they said: ‘Let us seek a prince for ourselves, who would rule over us and judge according to order and law.’”]

Maps -- by Region Or Country -- Eastern Hemisphere -- Europe

G5702 EUROPE. REGIONS, NATURAL FEATURES, ETC.: Alps see G6035+; .B3 Baltic Sea; .B4 Baltic Shield; .C3 Carpathian Mountains; .C6 Coasts/Continental shelf; .G4 Genoa, Gulf of; .G7 Great Alföld; .P9 Pyrenees; .R5 Rhine River; .S3 Scheldt River; .T5 Tisza River

G5722 WESTERN EUROPE. REGIONS, NATURAL FEATURES, ETC.: .A7 Ardennes; .A9 Autoroute E10; .F5 Flanders; .G3 Gaul; .M3 Meuse River

G5741.S BRITISH ISLES. HISTORY: .S1 General; .S2 To 1066; .S3 Medieval period, 1066-1485; .S33 Norman period, 1066-1154; .S35 Plantagenets, 1154-1399; .S37 15th century; .S4 Modern period, 1485-; .S45 16th century: Tudors, 1485-1603; .S5 17th century: Stuarts, 1603-1714; .S53 Commonwealth and protectorate, 1660-1688; .S54 18th century; .S55 19th century; .S6 20th century; .S65 World War I; .S7 World War II

G5742 BRITISH ISLES. GREAT BRITAIN. REGIONS, NATURAL FEATURES, ETC.: .C6 Continental shelf; .I6 Irish Sea; .N3 National Cycle Network

G5752 ENGLAND. REGIONS, NATURAL FEATURES, ETC.: .A3 Aire River; .A42 Akeman Street; .A43 Alde River; .A7 Arun River; .A75 Ashby Canal; .A77 Ashdown Forest; .A83 Avon, River [Gloucestershire-Avon]; .A85 Avon, River [Leicestershire-Gloucestershire]; .A87 Axholme, Isle of; .A9 Aylesbury, Vale of; .B3 Barnstaple Bay; .B35 Basingstoke Canal; .B36 Bassenthwaite Lake; .B38 Baugh Fell; .B385 Beachy Head; .B386 Belvoir, Vale of; .B387 Bere, Forest of; .B39 Berkeley, Vale of; .B4 Berkshire Downs; .B42 Beult, River; .B43 Bignor Hill; .B44 Birmingham and Fazeley Canal; .B45 Black Country; .B48 Black Hill; .B49 Blackdown Hills; .B493 Blackmoor [Moor]; .B495 Blackmoor Vale; .B5 Bleaklow Hill; .B54 Blenheim Park; .B6 Bodmin Moor; .B64 Border Forest Park; .B66 Bourne Valley; .B68 Bowland, Forest of; .B7 Breckland; .B715 Bredon Hill; .B717 Brendon Hills; .B72 Bridgewater Canal; .B723 Bridgwater Bay; .B724 Bridlington Bay; .B725 Bristol Channel; .B73 Broads, The; .B76 Brown Clee Hill; .B8 Burnham Beeches; .B84 Burntwick Island; .C34 Cam, River; .C37 Cannock Chase; .C38 Canvey Island [Island]

Download This Article in PDF Format

E3S Web of Conferences 266, 08011 (2021), https://doi.org/10.1051/e3sconf/202126608011, TOPICAL ISSUES 2021. Ensuring environmental safety of the Baltic Sea basin. E.E. Smirnova, L.D. Tokareva, Department of Technosphere Safety, St. Petersburg State University of Architecture and Civil Engineering, St. Petersburg, Russia. Abstract: The Baltic Sea is important not only for transport; it has also long supplied people with seafood. In 1998, the Government of the Russian Federation adopted Decree N 1202 “On approval of the 1992 Convention on the Protection of the Marine Environment of the Baltic Sea Region”, according to which Russia approved the Helsinki Convention and its obligations. However, the threat of eutrophication has become urgent for the Baltic Sea basin and the Northwest region due to the increased concentration of phosphorus and nitrogen in wastewater. This article studies methods of dephosphation of wastewater using industrial waste. 1 Introduction. The article aims to show that industrial waste can be used as reagents to optimize the water treatment system of rivers flowing into the Baltic Sea and of water reservoirs of the Northwest region (including the part of the water system of the Vologda Region associated with the Baltic basin), in order to bring the quality of wastewater up to the international requirements established for the Baltic Sea. These measures will help to improve the environmental situation in the sphere of waste processing and thereby ensure an acceptable degree of trophicity of the reservoirs. Because self-purification processes in rivers and seas cannot provide complete recovery from wastewater pollution, the problem of removing phosphorus-containing compounds from wastewater and purifying wastewater is of great importance [1-3].

Combined Dendrochronological and Radiocarbon Dating of Three Russian Icons from the 15th–17th Century

G Model DENDRO-25341; No. of Pages 9. ARTICLE IN PRESS. Dendrochronologia xxx (2015) xxx–xxx. Journal homepage: www.elsevier.com/locate/dendro. Combined dendrochronological and radiocarbon dating of three Russian icons from the 15th–17th century. Vladimir Matskovsky (a,*), Andrey Dolgikh (a), Konstantin Voronin (b,c). Affiliations: (a) Institute of Geography RAS, 119017, Staromonetniy pereulok 29, Moscow, Russia; (b) Institute of Archaeology RAS, 117036, Dmitrya Ulyanova st. 19, Moscow, Russia; (c) Stolichnoe Archaeological Bureau, 119121, Iljinka st. 4, office 226, Moscow, Russia. Article history: Received 10 June 2015; Received in revised form 9 October 2015; Accepted 12 October 2015; Available online xxx. Keywords: Icons, Wiggle-matching. Abstract: Dendrochronology is usually the only method of precise dating of unsigned art objects made on or of wood. It has a long history of application in Europe, however in Russia such an approach is still at an infant stage, despite its cultural importance. Here we present the results of dendrochronological and radiocarbon accelerated mass spectrometry (AMS) dating of three medieval icons from the 15th–17th century that originate from the North of European Russia and are painted on wooden panels made from Scots pines. For each icon the wooden panels were dendrochronologically studied and five to six AMS dates were made. Two icons were successfully dendro-dated whereas one failed to be reliably cross-dated with the existing master tree-ring chronologies, but was dated by radiocarbon wiggle-matching.

The First Romanovs. (1613-1725)

Cornell University Library. The original of this book is in the Cornell University Library. There are no known copyright restrictions in the United States on the use of the text. http://www.archive.org/details/cu31924028446767 Cornell University Library DK 114.B16 First Romanovs. (1613-1725) 3 1924 028 446 767. THE FIRST ROMANOVS. (1613–1725.) A HISTORY OF MOSCOVITE CIVILISATION AND THE RISE OF MODERN RUSSIA UNDER PETER THE GREAT AND HIS FORERUNNERS. By R. NISBET BAIN. WITH ILLUSTRATIONS. LONDON: ARCHIBALD CONSTABLE & CO., Ltd., 16, JAMES STREET, HAYMARKET, 1905. BRADBURY, AGNEW, & CO. LD., PRINTERS, LONDON AND TONBRIDGE. MY MOTHER. INTRODUCTION. It will be my endeavour, in the following pages, to describe the social, ecclesiastical, and political conditions of Eastern Europe from 1613 to 1725, and trace the gradual transformation, during the seventeenth century, of the semi-monastic, semi-barbarous Tsardom of Moscovy into the modern Russian State. The emergence of the unlooked-for and unwelcome Empire of the Tsars in the Old World was an event not inferior in importance to the discovery of the unsuspected Continents of the New, and the manner of its advent was even stranger than the advent itself. Like all periods of sudden transition, the century which divides the age of Ivan the Great from the age of Peter the Great has its own peculiar interest, abounding, as it does, in singular contradictions and picturesque contrasts. Throughout this period, East and West, savagery and civilisation strive incessantly for the mastery, and sinister and colossal shapes, fit representatives of wildly contending, elemental forces, flit phantasmagorically across the dirty ways of the twilight scene.

Supplement of Geosci

Supplement of Geosci. Model Dev., 11, 1343–1375, 2018, https://doi.org/10.5194/gmd-11-1343-2018-supplement © Author(s) 2018. This work is distributed under the Creative Commons Attribution 4.0 License. Supplement of: LPJmL4 – a dynamic global vegetation model with managed land – Part 1: Model description. Sibyll Schaphoff et al. Correspondence to: Sibyll Schaphoff ([email protected]). The copyright of individual parts of the supplement might differ from the CC BY 4.0 License. S1 Supplementary information to the evaluation of the LPJmL4 model. The supplementary information provided here gives more detail on the evaluations given in Schaphoff et al. (under revision). All sources and data used are described in detail there. Here we present additional figures for evaluating the LPJmL4 model on a plot scale for water and carbon fluxes (Fig. S1–S16). Here we use the standard input as described by Schaphoff et al. (under revision, Section 2.1). Furthermore, we evaluate the model performance on eddy flux tower sites by using site-specific meteorological input data provided by http://fluxnet.fluxdata.org/data/la-thuile-dataset/ (ORNL DAAC, 2011). Here the long spin-up of 5000 years was made with the input data described in Schaphoff et al. (under revision), but an additional spin-up of 30 years was conducted with the site-specific input data, followed by the transient run given by the observation period. Comparisons are shown for some illustrative stations for net ecosystem exchange (NEE) in Fig. S17 and for evapotranspiration in Fig. S18.

Totma, a Small Russian Town

19005 Coast Highway One, Jenner, CA 95450 ■ 707.847.3437 ■ [email protected] ■ www.fortross.org. Title: Totma, A Small Russian Town. Author(s): Elya Vasilyeva. Source: Fort Ross Conservancy Library. URL: http://www.fortross.org/lib.html Unless otherwise noted in the manuscript, each author maintains copyright of his or her written material. Fort Ross Conservancy (FRC) asks that you acknowledge FRC as the distributor of the content; if you use material from FRC’s online library, we request that you link directly to the URL provided. If you use the content offline, we ask that you credit the source as follows: “Digital content courtesy of Fort Ross Conservancy, www.fortross.org; author maintains copyright of his or her written material.” Also please consider becoming a member of Fort Ross Conservancy to ensure our work of promoting and protecting Fort Ross continues: http://www.fortross.org/join.htm. This online repository, funded by Renova Fort Ross Foundation, is brought to you by Fort Ross Conservancy, a 501(c)(3) and California State Park cooperating association. FRC’s mission is to connect people to the history and beauty of Fort Ross and Salt Point State Parks. By Elya Vasilyeva. Photographs by Alexander Lyskin. Totma is the home town of Ivan Kuskov, the founder of Fort Ross, a Russian settlement in northern California. The well-preserved house in which he lived can still be seen at the Fort Ross Museum. [Sidebar fragments:] Along the banks of the Sukhona River sits the town of Totma, which has the black fox of North ... Few Russians know about the town of Totma, and even ...

VOLOGDA REGION on Behalf of My Landsmen I Am Happy to Present Vologda Region to You

VOLOGDA REGION. On behalf of my fellow countrymen I am happy to present Vologda region to you. The history of Vologda region reaches back through the centuries. Vologda land played an important part in the formation of the Russian nation and made a great contribution to the treasury of Russian and world culture. Today the region's favourable geographical position, natural resources, highly skilled specialists, programme-based and targeted approach to economic management, and favourable opportunities for business development open wide prospects for active, energetic and enterprising people. Vologda region is an industrially developed, export-oriented region of Russia's North-West. We have long-standing commercial and economic relations with more than 100 countries all over the world. We construct roads and lay gas pipelines, harvest and process timber, supply Russian and foreign markets with high-quality foodstuffs, cast steel, grow flax, make wonderful lace, produce modern trolleybuses, and receive tens of thousands of tourists every year. Vologda region means hospitality and kindliness, innumerable monuments of a glorious past, picturesque landscapes and miracles of nature. All this makes Vologda region an interesting and attractive area for tourists. I am sure that the investor's guide you are now holding will be the first step towards acquaintance and mutually beneficial cooperation with Vologda region. Oleg Kuvshinnikov, Vologda region Governor.

Geographical location: the region is situated in the north of the European part of Russia. Total area: 144.5 thousand sq km. Population: 1.2 million people. Potential sales market: 50 million. Map labels: 1700 km, Helsinki, St. Petersburg, Vologda, Cherepovets, Jaroslavl, N.