History & Binary Representation

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Microprocessors

Microprocessors. R.k.e. edizioni, Antonio Pucci. Contents: Introduction: CPU or microprocessor; The first processor; List of processors by year; List of processors by code; Net-o-graphy (web references).

Introduction: CPU or microprocessor. A microprocessor, often abbreviated µP, is a digital electronic component made of transistors enclosed in one or more integrated circuits. One or more processors are used as the CPU of a digital processing system such as a personal computer, a handheld, a mobile phone or another digital device. Building microprocessors was made possible by the advent of LSI technology: integrating a complete CPU on a single chip significantly reduced the cost of computers. From the 1980s onward, microprocessors have been practically the only implementation of the CPU. (Figure: example of a microprocessor.)

The first processor. The goal of the project was to equip the new F-14 Tomcat, which was then under development. The project was completed in 1970 and used MOS integrated circuits for the CPU core. The design was simple and innovative and won out over its electromechanical competitors. The microprocessor appeared as soon as technology allowed it, since the idea of integrating the components … … pre-programmed. On 17 September 1971 TI announced the TMS 1802 NC, a programmable model that could be used to implement a calculator. The Intel 4004, a 4-bit processor, was introduced on 15 November 1971 and was developed by Federico Faggin. However, in both 1971 and 1976 Intel and TI entered into agreements under which Intel paid TI royalties for the use of its patent. A summary of this history is contained in the documentation Intel presented in court when it was sued by Cyrix for infringement of …

Lecture Notes in Assembly Language

Lecture Notes in Assembly Language: Short introduction to low-level programming. Piotr Fulmański, Łódź, 12 June 2015. Contents: 1 Before we begin; 1.1 Simple assembler; 1.1.1 Exercise 1; 1.1.2 Exercise 2; 1.1.3 Exercise 3; 1.1.4 Exercise 4; 1.1.5 Exercise 5; 1.2 Improvements, part I: addressing; 1.2.1 Exercise 6; 1.3 Improvements, part II: indirect addressing; 1.4 Improvements, part III: labels; 1.4.1 Exercise 7: find substring in a string; 1.4.2 Exercise 8: improved polynomial; 1.5 Improvements, part IV: flag register; 1.6 Improvements, part V: the stack; 1.6.1 Exercise 12; 1.7 Improvements, part VI: function stack frame; 1.8 Final exercises; 1.8.1 Exercise 13; 1.8.2 Exercise 14; 1.8.3 Exercise 15; 1.8.4 Exercise 16; 1.8.5 Exercise 17; 2 First program; 2.1 Compiling, …

Lecture Notes in Assembly Language Piotr Fulmański

Uniwersytet Łódzki (University of Łódź), Faculty of Mathematics and Computer Science, Computer Science. Lecture Notes in Assembly Language: Short introduction to low-level programming. Piotr Fulmański, Łódź, 2013. Contents: 1 Before we begin; 1.1 Simple assembler; 1.1.1 Exercise 1; 1.1.2 Exercise 2; 1.1.3 Exercise 3; 1.1.4 Exercise 4; 1.2 Improvements, part I; 1.2.1 Exercise 5; 1.3 Improvements, part II; 1.3.1 Solution 5.2.2: bad second approach; 1.4 Improvements, part III; 1.4.1 Exercise 6; 1.5 Improvements, part IV; 1.5.1 Exercise 6: second approach; 1.5.2 Exercise 7; 1.5.3 Exercise 8; 1.6 Improvements, part V; 1.6.1 Exercise 9; 1.6.2 Exercise 10; 1.7 Other exercises; 1.7.1 Exercise 11; 1.7.2 Exercise x; 1.7.3 Exercise x; 1.7.4 Exercise x …

History-Of-Microprocessors.pdf

HISTORY OF MICROPROCESSORS. Gursharan Singh Tatla, www.eazynotes.com

Contents: Introduction; 4-bit microprocessors; 8-bit microprocessors; 16-bit microprocessors; 32-bit microprocessors; 64-bit microprocessors.

Introduction. Fairchild Semiconductor (founded in 1957) invented the first IC in 1959. In 1968, Robert Noyce, Gordon Moore and Andrew Grove resigned from Fairchild Semiconductor and founded their own company, Intel (Integrated Electronics). Intel grew from a three-man start-up in 1968 to an industrial giant by 1981, with 20,000 employees and $188 million in revenue.

4-bit microprocessors. Intel 4004: introduced in 1971; Intel's first microprocessor; a 4-bit µP; clock speed 740 kHz; 2,300 transistors; could execute around 60,000 instructions per second. Intel 4040: introduced in 1974; also a 4-bit µP.

8-bit microprocessors. Intel 8008: introduced in 1972; the first 8-bit µP; clock speed 500 kHz; could execute 50,000 instructions per second. Intel 8080: introduced in 1974; also an 8-bit µP; clock speed 2 MHz; 6,000 transistors; 10 times faster than the 8008.

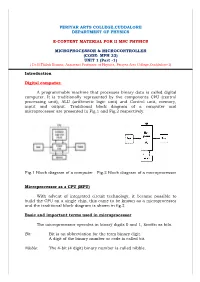

E-Content Material for II MSc Physics

PERIYAR ARTS COLLEGE, CUDDALORE, DEPARTMENT OF PHYSICS. E-content material for II MSc Physics: Microprocessor & Microcontroller (Code: MPH 33), Unit 1 (Part 1). Dr. R. Thilak Kumar, Assistant Professor of Physics, Periyar Arts College, Cuddalore-1.

Introduction. Digital computer: a programmable machine that processes binary data is called a digital computer. It is traditionally represented by five components: the ALU (arithmetic logic unit) and the control unit, which together form the CPU (central processing unit), plus memory, input and output. The traditional block diagrams of a computer and of a microprocessor are presented in Fig. 1 and Fig. 2 respectively.

(Fig. 1: Block diagram of a computer. Fig. 2: Block diagram of a microprocessor.)

Microprocessor as a CPU (MPU). With the advent of integrated-circuit technology it became possible to build the CPU on a single chip; this came to be known as a microprocessor, and its traditional block diagram is shown in Fig. 2.

Basic and important terms used in microprocessors. The microprocessor operates on the binary digits 0 and 1, known as bits. Bit: an abbreviation of "binary digit"; one digit of a binary number or code. Nibble: a 4-bit (4-digit) binary number. Byte: an 8-bit (8-digit) binary number. Word: a 16-bit (16-digit) binary number (word size depends on the processor; 16 bits is the convention used here). Double word: a 32-bit (32-digit) binary number. Hexadecimal number system: a number system with base 16, whose digits are 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F, where A to F stand for the values 10 to 15.
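As a small illustration of the units defined above, the Python sketch below computes the largest unsigned value each unit can hold and shows that every nibble corresponds to exactly one hexadecimal digit. The helper names `max_unsigned`, `nibbles` and `to_hex` are hypothetical, chosen for this example only, and the sketch assumes the 16-bit word and 32-bit double word convention of these notes.

```python
# Sizes of the units defined above. "word" and "double word" follow the
# 16-bit / 32-bit convention of these notes (word size is processor-dependent).
UNIT_BITS = {"bit": 1, "nibble": 4, "byte": 8, "word": 16, "double word": 32}
HEX_DIGITS = "0123456789ABCDEF"


def max_unsigned(unit: str) -> int:
    """Largest unsigned value representable in the given unit: 2**n - 1."""
    return (1 << UNIT_BITS[unit]) - 1


def nibbles(value: int, width: int = 16) -> list[int]:
    """Split an unsigned value into 4-bit nibbles, most significant first."""
    if width % 4 != 0:
        raise ValueError("width must be a multiple of 4")
    if not 0 <= value < (1 << width):
        raise ValueError("value does not fit in the given width")
    return [(value >> shift) & 0xF for shift in range(width - 4, -1, -4)]


def to_hex(value: int, width: int = 16) -> str:
    """Each nibble maps to exactly one hexadecimal digit 0-9, A-F."""
    return "".join(HEX_DIGITS[n] for n in nibbles(value, width))


if __name__ == "__main__":
    for unit, bits in UNIT_BITS.items():
        print(f"{unit}: {bits} bits, max unsigned value {max_unsigned(unit)}")
    word = 0b0011_1010_1111_0111
    print(nibbles(word))  # [3, 10, 15, 7]
    print(to_hex(word))   # 3AF7
```

Because one hexadecimal digit covers exactly one nibble, a byte is always written as two hex digits and a 16-bit word as four, which is why hexadecimal is the usual shorthand for binary values in microprocessor work.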

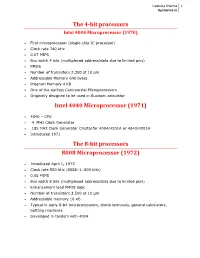

The 4-Bit Processors Intel 4040 Microprocessor

Upendra Sharma, Upsharma.in

The 4-bit processors

Intel 4004 microprocessor (1970): first microprocessor (single-chip IC processor); clock rate 740 kHz; 0.07 MIPS; bus width 4 bits (address and data multiplexed due to limited pins); PMOS; 2,300 transistors at 10 μm; addressable memory 640 bytes; program memory 4 KB. One of the earliest commercial microprocessors, originally designed for use in the Busicom calculator.

Intel 4040 microprocessor (1974): photo labels: 4040 CPU; 4 MHz clock generator; 5.185 MHz clock-generator crystal for 4004/4201A or 4040/4201A.

The 8-bit processors

8008 microprocessor (1972): introduced April 1, 1972; clock rate 500 kHz (8008-1: 800 kHz); 0.05 MIPS; bus width 8 bits (address and data multiplexed due to limited pins); enhancement-load PMOS logic; 3,500 transistors at 10 μm; addressable memory 16 KB. Typical in early 8-bit microcomputers, dumb terminals, general calculators and bottling machines. Developed in tandem with the 4004.

8080 microprocessor (1974): introduced April 1, 1974; clock rate 2 MHz (very rare 8080B: 3 MHz); 0.29 MIPS; bus width 8 bits data, 16 bits address; enhancement-load NMOS logic; 4,500 transistors at 6 μm; assembly language downward compatible with the 8008; addressable memory 64 KB; up to 10 times the performance of the 8008. Used in the Altair 8800, traffic-light controllers and cruise missiles. Required six support chips versus 20 for the 8008.

8085 microprocessor (1976): introduced March 1976; clock rate 3 MHz; 0.37 MIPS; bus width 8 bits data, 16 bits address; depletion-load NMOS logic; 6,500 transistors at 3 μm; binary compatible downward with the 8080.
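The addressable-memory figures above follow from the address width: an n-bit address can select 2^n distinct byte locations. The Python sketch below works through that arithmetic. The 16-bit address width for the 8080/8085 is taken from the text; the 14-bit address of the 8008 and the 12-bit program address of the 4004 are supplied here as assumptions, and the helper name `addressable_bytes` is hypothetical.

```python
def addressable_bytes(address_bits: int) -> int:
    """Distinct byte locations reachable with an n-bit address: 2**n."""
    if address_bits < 0:
        raise ValueError("address width must be non-negative")
    return 1 << address_bits


# Address widths: 16 bits (8080/8085) comes from the excerpt above;
# 14 bits (8008) and 12 bits (4004 program memory) are assumptions added here.
ADDRESS_BITS = {
    "4004 program memory": 12,
    "8008": 14,
    "8080/8085": 16,
}

if __name__ == "__main__":
    for name, bits in ADDRESS_BITS.items():
        size = addressable_bytes(bits)
        # 1 KB is taken as 1,024 bytes.
        print(f"{name}: {bits}-bit address -> {size} bytes = {size // 1024} KB")
```

Run as a script, this reproduces the 4 KB, 16 KB and 64 KB figures quoted above, with 1 KB taken as 1,024 bytes.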

Microcomputer Digest V03n01

MICROCOMPUTER DIGEST, Volume 3, Number 1, July 1976

THE "SUPER 8080" MICROCOMPUTER. Z-80, Zilog's first microcomputer, was introduced at the California Computer Show and includes all the logic circuits necessary for building high-performance microcomputer-based products with virtually no external logic and a minimum number of static or dynamic memories. Totally software compatible with Intel's 8080A, the 40-pin N-channel, depletion-mode MOS microprocessor has a repertoire of 158 instructions and 17 internal registers, including two real index registers. Additional features include built-in refresh for dynamic memory, a 1.6 μs machine cycle time, a single 5 V power supply and a single-phase TTL clock. (cont'd on page 2)

MICRONOVA MICROCOMPUTER FAMILY. As reported last month, Data General Corp. has introduced a 16-bit microcomputer family with the architecture, software and system performance of a NOVA minicomputer. The family ranges from chip sets to fully packaged computer systems. The microNOVA family is based on a high-performance, Data General designed and manufactured 40-pin NMOS microprocessor. This microprocessor features a 16-bit word length, NOVA-compatible architecture, 32K main memory capacity and a sophisticated I/O encoding scheme capable of controlling multiple high-performance peripherals.

AMI 6800 PROTOTYPE CARD. American Microsystems, Inc. has introduced a microprocessor prototyping board for hardware and software evaluation of 6800-based microcomputer systems in specific applications. The AMI 6800 Microprocessor Evaluation Board (EVK300) features a built-in programmer for the S6834 EPROM microcircuitry. This feature, not offered on competing prototyping systems, gives the AMI board greater capability in developing prototype microcomputer programs. (cont'd on page 2)

NATIONAL STUNS INDUSTRY W/8080 …

Microprocessors in U.S. Electrical Engineering Departments

DOCUMENT RESUME. ED 118 381, SE 020 178. AUTHOR: Sloan, M. E. TITLE: Microprocessors in U.S. Electrical Engineering Departments, 1974-1975. PUB DATE: Jun 75. NOTE: 20p.; Paper presented at the Annual Meeting of the American Society for Engineering Education (Colorado State University, Ft. Collins, Colorado, June 16-19, 1975). EDRS PRICE: MF-$0.83 HC-$1.67 Plus Postage. DESCRIPTORS: Computers; *Computer Science; *Course Descriptions; Curriculum; Engineering; *Engineering Education; *Higher Education; Surveys. IDENTIFIERS: *Microprocessors.

ABSTRACT: Drawn from a survey of engineering departments known to be teaching microprocessor courses, this paper shows that the adoption of microprocessors by Electrical Engineering Departments has been rapid compared with their adoption of minicomputers. The types of courses being taught can be categorized as: surveys of microprocessors, intensive study of one microcomputer, inclusion of microprocessors in a general course, project courses, and specific applications courses.

Documents acquired by ERIC include many informal unpublished materials not available from other sources. ERIC makes every effort to obtain the best copy available. Nevertheless, items of marginal reproducibility are often encountered, and this affects the quality of the microfiche and hardcopy reproductions ERIC makes available via the ERIC Document Reproduction Service (EDRS). EDRS is not …

309696 - Microprocessors & Microcontrollers Module, Hector Uriel Villamil Gonzalez (National Director)

Universidad Nacional Abierta y a Distancia (UNAD), School of Basic Sciences, Technology and Engineering. Course content: Microprocessors and Microcontrollers. Electronic Engineering Program. 309696 - Microprocessors & Microcontrollers Module. Hector Uriel Villamil Gonzalez (National Director); Miguel Pinto Aparicio (Accreditor). Chiquinquirá, July 2009.

Table of contents: Introduction; Unit 1; Chapter 1: The microprocessor, basic principles; Lesson 1: Invention and historical evolution of the microprocessor; Lesson 2: Number bases, bits and bytes; Lesson 3: Internal structure and operation; Lesson 4: Registers and segments; Lesson 5: Addressing modes; Chapter 2: Microprocessor families; Lesson 1: Main …

Zilog Oral History Panel on the Founding of the Company and the Development of the Z80 Microprocessor

Zilog Oral History Panel on the Founding of the Company and the Development of the Z80 Microprocessor. Moderator: Michael Slater. Panelists: Federico Faggin, Masatoshi Shima, Ralph Ungermann. Recorded: April 27, 2007, Mountain View, California. CHM Reference number: X4023.2007. © 2007 Computer History Museum. Oral History of the Zilog Z80 Microprocessor.

Michael Slater: We have with us Ralph Ungermann, Federico Faggin, and Masatoshi Shima. I think we'll start. We've had in the previous tapes [oral histories of the Intel 4004 and 8080 MPU projects recorded on April 26, 2007 at the museum] some information on the background of Federico and Shima-san, so we'll start, Ralph, with you. Could you give us a brief summary of your background, your education, and what sort of jobs you had before coming to this project?

Ralph Ungermann: Certainly. I grew up in Southern California. I'm a Berkeley graduate in Double E, and a master's degree in Computer Architecture from UC Irvine. I got out of college and spent a little time in the aerospace industry, and then moved towards the semiconductor business. I joined Collins Radio, a tremendously interesting company to work for. That was in the late '60s, [the company was] completely networked around the whole world. Way ahead of its time. I left there and started to work at Western Digital, a [semiconductor] start-up in Southern California. We did communication chips. Custom communication chips. I left there and went to Intel, because I saw the microprocessor as being the next huge wave, and I was there at the Z80 introduction.

17UIT3A3 - Microprocessor & ALP

17UIT3A3 - MICROPROCESSOR & ALP, K1 LEVEL, UNIT I

1. The CPU of a digital computer built into a single IC is called ___. a) processor b) multiprocessor c) microprocessor d) microprogramming. Ans: c) microprocessor
2. In 1985, Intel introduced a more powerful 32-bit microprocessor called ___. a) Intel 486 b) Intel 386 c) Intel 4040 d) Intel 8086. Ans: b) Intel 386
3. ___ was the first digital signal processing chip. a) Intel 2902 b) Intel 2920 c) Intel 2900 d) Intel 2930. Ans: b) Intel 2920
4. Embedded control applications have two distinct areas of control, named ___ and ___. a) digital control, chip control b) process control, design control c) event control, data control d) connection control, block control. Ans: c) event control, data control
5. AMD produces the ___ family of bit-slice microprocessor components for building a bit-slice processor. a) 2910 b) 2900 c) 2930 d) 2901. Ans: b) 2900
6. In a computer with a microprogrammed control unit, executing the instructions of another computer is known as ___. a) microprogram b) microinstruction c) microprogramming d) emulation. Ans: d) emulation
7. A large cache is employed in a ___. a) CISC processor b) scalar processor c) RISC processor d) superscalar processor. Ans: c) RISC processor
8. CISC stands for ___. a) Complex Instruction Standard Computer b) Complex Integrated Set Computer c) Complex Instruction Set Computer d) Control Instruction Set Computer. Ans: c) Complex Instruction Set Computer
9. A superscalar processor contains ___ and executes more than one instruction per clock cycle. a) vector operands b) multiple pipelines c) control memory d) host processor. Ans: b) multiple pipelines
10. …

Microcomputer Digest Sept. 1975

MICROCOMPUTER DIGEST, Volume 2, Number 3, September 1975. Copyright © 1975 by Microcomputer Associates Inc. Printed in U.S.A.

16-BIT PANAFACOM MICROPROCESSOR. The latest entrant in the microprocessor arena, Panafacom Ltd. of Tokyo, Japan, has unveiled a 16-bit microprocessor chip set fully supported by a series of microcomputer cards and a resident hardware development system. The PFL-16A is a three-chip LSI microcomputer designed to offer users functions matching those of a minicomputer in the form of a system component. (cont'd on page 2)

8080 IN CIRCUIT EMULATOR. Ramtek Corp. has announced an In Circuit Emulator for the 8080 microprocessor. The MM 80 (nicknamed the ICEBOX) directly replaces the 8080 microprocessor in the user's system and allows the designer to examine, alter and control the 8080 system.

THE JOLT FROM MAI. Microcomputer Associates Inc. has announced the world's lowest-cost microcomputer system yet available. The system is offered in kit form as well as assembled. The heart of the JOLT system is MOS Technology's 6502 8-bit microprocessor. The outstanding feature of the system is a ROM mask-programmed DEbug-MONitor (DEMON), which provides instant software to the user after completion of the kit. DEMON includes a unique feature found in no other microcomputer system: a self-adapting interface to any terminal speed from 10 to 30 cps. A TTY 20 mA current loop as well as an EIA interface is standard with the kit. Other DEMON features include display-alter CPU registers, display-alter memory, read/…