Representation Learning: What Is It and How Do You Teach It?


Recommended publications

-

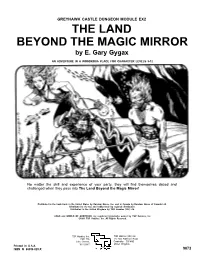

The Land Beyond the Magic Mirror by E. Gary Gygax

GREYHAWK CASTLE DUNGEON MODULE EX2: THE LAND BEYOND THE MAGIC MIRROR by E. Gary Gygax. AN ADVENTURE IN A WONDROUS PLACE FOR CHARACTER LEVELS 9-12. No matter the skill and experience of your party, they will find themselves dazed and challenged when they pass into The Land Beyond the Magic Mirror! Distributed to the book trade in the United States by Random House, Inc. and in Canada by Random House of Canada Ltd. Distributed to the toy and hobby trade by regional distributors. Distributed in the United Kingdom by TSR Hobbies (UK) Ltd. AD&D and WORLD OF GREYHAWK are registered trademarks owned by TSR Hobbies, Inc. ©1983 TSR Hobbies, Inc. All Rights Reserved. Printed in U.S.A. TSR Hobbies, Inc., POB 756, Lake Geneva, WI 53147. TSR Hobbies (UK) Ltd., The Mill, Rathmore Road, Cambridge CB14AD, United Kingdom. ISBN 0-88038-025-X. 9073.

TABLE OF CONTENTS: Dungeon Masters Preface; The Land Beyond the Magic Mirror; The Magic Mirror House First Floor; The Cellar; Second Floor.

This module is the companion to Dungeonland and was originally part of the Greyhawk Castle dungeon complex. It is designed so that it can be added to Dungeonland, used alone, or made part of virtually any campaign. It has an “EX” designation to indicate that it is an extension of a regular dungeon level: in the case of this module, a far-removed extension where all adventuring takes place on another plane of existence that is quite unusual, even for a typical AD&D™ universe. This particular scenario has been a consistent

The Baldwin Times, May 12, 2017

The Baldwin Times. Covering all of Baldwin County, AL every Friday. MAY 12, 2017 | GulfCoastNewsToday.com | 75¢. State track meet titles, PAGE 15.

County, Fairhope at odds yet again on county road repairs. By CLIFF MCCOLLUM. Tensions have once again flared between the Baldwin County Commission and the city of Fairhope’s utilities department following continued discussions and problems involving needed upgrades to county roads on the city’s borders. Late Tuesday afternoon, the county moved the highway department’s message boards to County Road 32 near the intersection with Highway 181, displaying a message to motorists and the city itself: “Delays caused by Fairhope Utility. Call 928-2136.” The county said several roads, including County Road 32, have been held up for far too long. County officials said the holdup has been utility line relocations near or around the various uncompleted projects. The list includes the following: • CR48 just West of Bohemian Hall Rd. According to notes from the Baldwin County Highway Department: “This site is currently open to the public. We have received a quote from Fairhope Water & Sewer to relocate this conflict. The City of Fairhope has the utility agreement and SEE ROADS, PAGE 25

PHOTO BY CLIFF MCCOLLUM: County officials moved message boards along County Road 32 that urged residents upset by construction delays to contact the City of Fairhope’s utilities department.

Baldwin schools moving forward: Academic plan has 4 major components. By CLIFF MCCOLLUM. During a recent special called Baldwin County Board of Education work session, Baldwin County Schools Academic Dean Joyce Woodburn laid out an academic plan for the board members comprised of four major components.

ARTICLE: Jan Susina: Playing Around in Lewis Carroll's Alice Books

Mathematician Charles Dodgson’s love of play and his need for rules came together in his use of popular games as part of the structure of the two famous children’s books, Alice in Wonderland and Through the Looking-Glass, he wrote under the pseudonym Lewis Carroll. The author of this article looks at the interplay between the playing of such games as croquet and cards and the characters and events of the novels and argues that, when reading Carroll (who took a playful approach even in his academic texts), it is helpful to understand games and game play. Charles Dodgson, more widely known by his pseudonym Lewis Carroll, is perhaps one of the more playful authors of children’s literature. In his career, as a children’s author and as an academic logician and mathematician, and in his personal life, Carroll was obsessed with games and with various forms of play. While some readers are surprised by the seemingly split personality of Charles Dodgson, the serious mathematician, and Lewis Carroll, the imaginative author of children’s books, it was his love of play and games and his need to establish rules and guidelines that effectively govern play that unite these two seemingly disparate facets of Carroll’s personality. Carroll’s two best-known children’s books, Alice’s Adventures in Wonderland (1865) and Through the Looking-Glass and What Alice Found There (1871), use popular games as part of their structure. In Victoria through the Looking-Glass, Florence Becker Lennon has gone so far as to suggest about Carroll that “his life was a game, even his logic, his mathematics, and his singular ordering of his household and other affairs.

Annihilating Nihilistic Nonsense: Tim Burton Guts Lewis Carroll’s Jabberwocky

Alice in Wonderland seems to beg for a morbid interpretation. Whether it's Marilyn Manson's "Eat Me, Drink Me," the video game "American McGee's Alice," or Svankmajer's "Alice" and "Jabberwocky," artists love bringing out the darker elements of Alice’s adventures as she wanders among creepy creatures. The 2010 Tim Burton film is the latest twisted adaptation, featuring an older Alice who slays the Jabberwocky. However, unlike the other adaptations, Burton’s adaptation draws most of its grim outlook by gutting Alice in Wonderland of its fundamental core - its nonsense. Alice in Wonderland uses nonsense to liberate, offering frightening amounts of freedom through its playful use of nonsense. However, Burton turns this whimsy into menacing machinations - he pretends to use nonsense for its original liberating purpose but actually uses it for adult plots and preset paths. Burton takes the destructive power of Alice’s insistence on order and amplifies it dramatically, completely removing its original subversive release from societal constraints. Under the façade of paying tribute to Carroll’s whimsical nonsense verse, Burton directly removes nonsense’s anarchic freedom and replaces it with a destructive commitment to sense. This brutal change to both plot and structure turns Alice into a mindless juggernaut, slaying not only the Jabberwock, but also the realm of nonsense, non-linear narrative and real world empires. At first, nonsense in Lewis Carroll’s books seems to be just a light-hearted play with language. Even before we come into Wonderland, the idea of nonsense as just a simple child’s diversion is given by the epigraph.

The Musical Misadventures of a Girl Named Alice Book by JAMES DEVITA Music and Lyrics by BILL FRANCOEUR Based on the Novel Through the Looking Glass by LEWIS CARROLL

The Musical Misadventures of a Girl Named Alice, based on the novel Through the Looking Glass by Lewis Carroll. Book by JAMES DEVITA. Music and Lyrics by BILL FRANCOEUR. © Copyright 2002, JAMES DeVITA.

PERFORMANCE LICENSE: The amateur acting rights to this play are controlled exclusively by PIONEER DRAMA SERVICE, INC., P.O. Box 4267, Englewood, Colorado 80155, without whose permission no performance, reading or presentation of any kind may be given. On all programs and advertising this notice must appear: “Produced by special arrangement with Pioneer Drama Service, Inc., Englewood, Colorado.” COPYING OR REPRODUCING ALL OR ANY PART OF THIS BOOK IN ANY MANNER IS STRICTLY FORBIDDEN BY LAW. All other rights in this play, including those of professional production, radio broadcasting and motion picture rights, are controlled by Pioneer Drama Service, Inc., to whom all inquiries should be addressed.

WONDERLAND! The Musical Misadventures of a Girl Named Alice

CAST OF CHARACTERS
ALICE: the same one that chased the rabbit down the hole
TROUBADOUR*: quite the singer
MOTHER’S VOICE: offstage
RED KING: soporific monarch
WHITE KING: defender of the crown
RED QUEEN: vicious, nasty temper
WHITE QUEEN

Through the Looking-Glass: Translating Nonsense

In 1871, Lewis Carroll published Through the Looking-Glass, and What Alice Found There, a sequel to his hugely popular Alice’s Adventures in Wonderland. In this sequel, Alice sees a world through her looking-glass which looks almost the same as her own world, but not quite.

I'll tell you all my ideas about Looking-glass House. First, there's the room you can see through the glass – that's just the same as our drawing-room, only the things go the other way. [...] the books are something like our books, only the words go the wrong way [...]

Alice goes through the mirror into the alternative world, which, not unlike Wonderland, is full of weird and wonderful characters. She finds a book there, which is “all in some language I don't know”. Below are the first few lines of the book – can you read it?

Just the same, only things go the other way... ‘Some language I don’t know’, ‘the words go the wrong way’. Alice might almost be talking about the practice of translation, which makes a text accessible to a reader unfamiliar with the original language it was written in. And translation, too, can often feel like it is almost the same as the original, and yet somehow also different. We might say that translation is like Alice’s looking-glass: it reflects the original but in distorted and imaginative ways. Can you think of any other similes for translation?

Translation is like .......... because

Lewis Carroll 'The Jabberwocky'

Lewis Carroll, ‘The Jabberwocky’

'Twas brillig, and the slithy toves
Did gyre and gimble in the wabe;
All mimsy were the borogoves,
And the mome raths outgrabe.

"Beware the Jabberwock, my son
The jaws that bite, the claws that catch!
Beware the Jubjub bird, and shun
The frumious Bandersnatch!"

He took his vorpal sword in hand;
Long time the manxome foe he sought—
So rested he by the Tumtum tree,
And stood awhile in thought.

And, as in uffish thought he stood,
The Jabberwock, with eyes of flame,
Came whiffling through the tulgey wood,
And burbled as it came!

One, two! One, two! And through and through
The vorpal blade went snicker-snack!
He left it dead, and with its head
He went galumphing back.

"And hast thou slain the Jabberwock?
Come to my arms, my beamish boy!
O frabjous day! Callooh! Callay!"
He chortled in his joy.

'Twas brillig, and the slithy toves
Did gyre and gimble in the wabe;
All mimsy were the borogoves,
And the mome raths outgrabe.

Rudyard Kipling, ‘The Way Through The Woods’

They shut the road through the woods
Seventy years ago.
Weather and rain have undone it again,
And now you would never know
There was once a road through the woods
Before they planted the trees.
It is underneath the coppice and heath
And the thin anemones.
Only the keeper sees
That, where the ring-dove broods,
And the badgers roll at ease,
There was once a road through the woods.

Yet, if you enter the woods
Of a summer evening late,
When the night-air cools on the trout-ringed pools
Where the otter whistles his mate,
(They fear not men in the woods,
Because they see so few)
You will hear the beat of a horse’s feet,
And the swish of a skirt in the dew,
Steadily cantering through
The misty solitudes,
As though they perfectly knew
The old lost road through the woods…
But there is no road through the woods.

Ambivalent Texts, the Borderline, and the Sense of Nonsense in Lewis Carroll’s “Jabberwocky”

International Journal of English Studies (IJES), University of Murcia, http://revistas.um.es/ijes. Ambivalent texts, the borderline, and the sense of nonsense in Lewis Carroll’s “Jabberwocky”. MICHAEL TEMPLETON, Princess Nourah bint Abdulrahman University (Saudi Arabia). Received: 13/11/2017. Accepted: 18/08/2018.

ABSTRACT. Taking Carroll’s “Jabberwocky” as emblematic of a text historically enjoyed by both children and adults, this article seeks to place the text in what Kristeva defines as the borderline between language and subjectivity to theorize a realm in which ambivalent texts emerge as such. Children’s literature remains largely trapped in the literary–didactic split, in which these texts are understood either as learning materials and primers for literacy, or as examples of poetic or historical modernist discourse. This article situates Carroll’s text in the theories of language, subjectivity, and clinical discourse toward a more complex reading of a children’s poem, one that finds a point of intersection between the adult and the child reader.

KEYWORDS: Ambivalent text, The borderline, Children’s literature, Nonsense poems.

1. INTRODUCTION. Maria Nikolajeva demonstrates that children’s literature occupies a middle place in the world of literary studies. Children’s literature is either studied exclusively as a didactic tool (a literary form that exists entirely to provide stepping stones toward literacy for young readers) or else understood as a projection of the author’s imagination, a nostalgic portrait of some feature of the author’s own youth. This is commonly referred to as the “literary–didactic split” (Nikolajeva, 2005: xi).

The Westfield Leader: The Leading and Most Widely Circulated Weekly Newspaper in Union County

THE WESTFIELD LEADER. The Leading And Most Widely Circulated Weekly Newspaper In Union County. No. 32. WESTFIELD, NEW JERSEY, THURSDAY, APRIL 18, 1957. 36 Pages, 10 Cents.

Headlines: Light Vote Tallied Here In Quiet Primary Election; No Contests For Either Party On Local Scene; Irene Griffin Wins County GOP Nod For Assembly; No Opposition In Mountainside; Westfield Club Displays Flowers At Public Show, First Event Set At Local Homes May 9; Park To Be Scene Of Sunrise Service; Eastertide Church Services.

Primary Vote In Westfield, REPUBLICAN (votes by ward 1W, 2W, 3W, 4W, then total)
GOVERNOR
Forbes: 813, 647, 458, 381; total 2,299
Dumont: 317, 216, 187, 137; total 857
ASSEMBLY
Griffin: 680, 511, 367, 304; total 1,862
Rand: 190, 133, 148, 109; total 580
Murray: 369, 310, 222, 156; total 1,057
Thomas: 819, 637, 464, 381; total 2,301
Vanderbilt: 813, 627, 436, 367; total 2,243
Crane: 825, 652, 459, 364; total 2,300
Stamler: 427, 319, 217, 197; total 1,160
Velbinger: 120, 80, 109, 69; total 378
FREEHOLDERS
Bailey: 1,022, 805, 569, 454; total 2,850

An Easter Sunday sunrise service will be held at Mindowaskin Park this week under the sponsorship of the youth committee of the Westfield Council of

Mrs. Torg Tonnessen, president of the Rake and Hoe Garden Club of Westfield, has announced the club will hold its first public open home flower show May 9.

MOUNTAINSIDE: With voting described as "extremely light," Mayor Joseph A. C. Komich and Councilmen Ronald L. Farrell

Lewis Carroll (Charles L. Dodgson)

Peter Harrington London, catalogue 119. LEWIS CARROLL (CHARLES L. DODGSON): A collection of mainly signed and inscribed first and early editions. A selection from The Library of an English Bibliophile. All items from this catalogue are on display at Dover Street.

Mayfair: Peter Harrington, 43 Dover Street, London W1S 4FF. UK 020 3763 3220; EU 00 44 20 3763 3220; USA 011 44 20 3763 3220.
Chelsea: Peter Harrington, 100 Fulham Road, London SW3 6HS. UK 020 7591 0220; EU 00 44 20 7591 0220; USA 011 44 20 7591 0220.
Dover St opening hours: 10am–7pm Monday–Friday; 10am–6pm Saturday. www.peterharrington.co.uk

VAT no. GB 701 5578 50. Peter Harrington Limited. Registered office: WSM Services Limited, Connect House, 133–137 Alexandra Road, Wimbledon, London SW19 7JY. Registered in England and Wales No: 3609982. Design: Nigel Bents; Photography: Ruth Segarra.

FOREWORD. In 1862 Charles Dodgson, a shy Oxford mathematician with a stammer, created a story about a little girl tumbling down a rabbit hole. With Alice’s Adventures in Wonderland (1865), children’s literature escaped from the grimly moral tone of evangelical tracts to delight in magical worlds populated by talking rabbits and stubborn lobsters. A key work in modern fantasy literature, it is the prototype of the portal quest, in which readers are invited to follow the protagonist into an alternate world of the fantastic. The Alice books are one of the best-known works in world literature. They have been translated into over one hundred languages, and are referenced and cited in academic works and popular culture to this day.

Carrollian Language Arts & Rhetoric

CARROLLIAN LANGUAGE ARTS & RHETORIC: DODGSON’S QUEST FOR ORDER & MEANING, WITH A PORPOISE. By Madonna Fajardo Kemp. A Thesis Submitted to the Faculty of the University of Tennessee at Chattanooga in Partial Fulfillment of the Requirements for the Degree of Master of Arts in English: Rhetoric and Composition. The University of Tennessee at Chattanooga, Chattanooga, TN, December 2011. Copyright © 2011 By Madonna Fajardo Kemp. All Rights Reserved.

Approved: Rebecca Ellen Jones, UC Foundation Associate Professor of Rhetoric and Composition, Director of Thesis; Susan North, Director of Composition, Committee Member; Charles L. Sligh, Assistant Professor of Literature, Committee Member; Herbert Burhenn, Dean of the College of Arts and Sciences; A. Jerald Ainsworth, Dean of the Graduate School.

ABSTRACT. Lewis Carroll (Rev. Charles Dodgson) is a language specialist who has verifiably altered our lexicon and created fictional worlds that serve as commentary on our ability to effectively create meaning within our existing communicative systems. This ability to create language and illustrations of everyday language issues can be traced back to his personal quest for order and meaning; the logician and teacher has uncovered the accepted language and language practices that can result in verbal confusion and ineffective speech, as well as the accepted practices that can help us to avoid verbal confusion and social conflict, all of which reveals a theorist in his own right, one who aids our understanding of signification and pragmatic social skills. Dodgson’s fictive representations of our ordinary language concerns serve as concrete examples of contextual language interactions; therefore, they serve as appropriate material for the teaching of rhetorical theory and, most especially, language arts.

Alice Through the Looking-Glass

Alice Through the Looking-Glass – Resource Pack. Welcome to the Britten Sinfonia interactive family concert 2018! We are very much looking forward to performing for you at the festival.

The Concert: This concert has been devised by musician and presenter Jessie Maryon Davies. Each concert will last approximately 50 minutes. Jessie will explore the story and main characters with the audience, assisted by a chamber quintet from the Britten Sinfonia. The narrative will be interwoven and brought to life with fantastic pieces of classical music, songs and actions.

Participation: The audience will be encouraged to participate fully throughout the concerts. We will sing songs, create actions together and make a soundscape. The better you know the story, the more fun we can have exploring the main themes in the concert.

The story: We don’t expect you to read the whole book before the show, but it would be very helpful to have an overview of the story and to read the chapters on the specific characters we have selected to feature in our show. Through the Looking-Glass, and What Alice Found There is the sequel to Alice’s Adventures in Wonderland and was written by Lewis Carroll in 1871. The sequel is set six months later than the original, so rather than a balmy summer’s day in the garden, we find Alice in the depths of a wintry November afternoon. She is playing with her two kittens and falls into a deep sleep, dreaming of a world beyond her mirror. This book explores her journey across Looking-Glass Land, as she tries to discover the mystery of The Jabberwock, and plays a giant game of chess to become the new Queen.