Parallel Computing

Recommended publications

Technical Report TR-2020-10

Simulation-Based Engineering Lab, University of Wisconsin-Madison. Technical Report TR-2020-10.

CryptoEmu: An Instruction Set Emulator for Computation Over Ciphers

Xiaoyang Gong and Dan Negrut. December 28, 2020.

Abstract: Fully homomorphic encryption (FHE) allows computations over encrypted data. This technique makes privacy-preserving cloud computing a reality. Users can send their encrypted sensitive data to a cloud server, get encrypted results returned and decrypt them, without worrying about data breaches. This project report presents a homomorphic instruction set emulator, CryptoEmu, that enables fully homomorphic computation over encrypted data. The software-based instruction set emulator is built upon an open-source, state-of-the-art homomorphic encryption library that supports gate-level homomorphic evaluation. The instruction set architecture supports multiple instructions that belong to a subset of the ARMv8 instruction set architecture. The instruction set emulator utilizes parallel computing techniques to emulate every functional unit for minimum latency. This project report includes details on design considerations, instruction set emulator architecture, and datapath and control unit implementation. We evaluated and demonstrated the instruction set emulator's performance and scalability on a 48-core workstation. CryptoEmu showed a significant speedup in homomorphic computation performance when compared with HELib, a state-of-the-art homomorphic encryption library.

Keywords: Fully Homomorphic Encryption, Parallel Computing, Homomorphic Instruction Set, Homomorphic Processor, Computer Architecture

Contents: 1 Introduction; 2 Background; 3 TFHE Library; 4 CryptoEmu Architecture Overview (4.1 Data Processing; 4.2 Branch and Control Flow); 5 Data Processing Units (5.1 Load/Store Unit; 5.2 Adder; ...)

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE: DESIGNING FOR PERFORMANCE, NINTH EDITION

William Stallings

Boston, Columbus, Indianapolis, New York, San Francisco, Upper Saddle River, Amsterdam, Cape Town, Dubai, London, Madrid, Milan, Munich, Paris, Montréal, Toronto, Delhi, Mexico City, São Paulo, Sydney, Hong Kong, Seoul, Singapore, Taipei, Tokyo

Editorial Director: Marcia Horton. Executive Editor: Tracy Dunkelberger. Associate Editor: Carole Snyder. Director of Marketing: Patrice Jones. Marketing Manager: Yez Alayan. Marketing Coordinator: Kathryn Ferranti. Marketing Assistant: Emma Snider. Director of Production: Vince O’Brien. Managing Editor: Jeff Holcomb. Production Project Manager: Kayla Smith-Tarbox. Production Editor: Pat Brown. Manufacturing Buyer: Pat Brown. Creative Director: Jayne Conte. Designer: Bruce Kenselaar. Manager, Visual Research: Karen Sanatar. Manager, Rights and Permissions: Mike Joyce. Text Permission Coordinator: Jen Roach. Cover Art: Charles Bowman/Robert Harding. Lead Media Project Manager: Daniel Sandin. Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd. Composition: Integra Software Services Pvt. Ltd. Printer/Binder: Edward Brothers. Cover Printer: Lehigh-Phoenix Color/Hagerstown. Text Font: Times Ten-Roman.

Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey. Figure 17.11: Reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text.

Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America.

X86 Assembly Language Syllabus for Subject: Assembly (Machine) Language

VŠB - Technical University of Ostrava, Department of Computer Science, FEECS

x86 Assembly Language. Syllabus for Subject: Assembly (Machine) Language

Ing. Petr Olivka, Ph.D. 2021. e-mail: [email protected] http://poli.cs.vsb.cz

Contents

1 Processor Intel i486 and Higher – 32-bit Mode: 1.1 Registers of i486; 1.2 Addressing; 1.3 Assembly Language, Machine Code; 1.4 Data Types

2 Linking Assembly and C Language Programs: 2.1 Linking C and C Module; 2.2 Linking C and ASM Module; 2.3 Variables in Assembly Language

3 Instruction Set: 3.1 Moving Instruction; 3.2 Logical and Bitwise Instruction; 3.3 Arithmetical Instruction; 3.4 Jump Instructions; 3.5 String Instructions; 3.6 Control and Auxiliary Instructions; 3.7 Multiplication and Division Instructions

4 32-bit Interfacing to C Language: 4.1 Return Values from Functions; 4.2 Rules of Registers Usage; 4.3 Calling Function with Arguments (4.3.1 Order of Passed Arguments; 4.3.2 Calling the Function and Set Register EBP; 4.3.3 Access to Arguments and Local Variables; 4.3.4 Return from Function, the Stack Cleanup; 4.3.5 Function Example); 4.4 Typical Examples of Arguments Passed to Functions; 4.5 The Example of Using String Instructions

5 AMD and Intel x86 Processors – 64-bit Mode: 5.1 Registers; 5.2 Addressing in 64-bit Mode

6 64-bit Interfacing to C Language: 6.1 Return Values; ...

Id Question Microprocessor Is the Example of ___Architecture. A

Question: A microprocessor is an example of _______ architecture.
A. Princeton  B. Von Neumann  C. Rockwell  D. Harvard
Answer: A. Marks: 1. Unit: 1.

Question: The _______ bus is unidirectional.
A. Data  B. Address  C. Control  D. None of these
Answer: B. Marks: 1. Unit: 1.

Question: Use of _______ isolates the CPU from frequent accesses to main memory.
A. Local I/O controller  B. Expansion bus interface  C. Cache structure  D. System bus
Answer: C. Marks: 1. Unit: 1.

Question: _______ buffers data transfer between the system bus and I/O controllers on the expansion bus.
A. Local I/O controller  B. Expansion bus interface  C. Cache structure  D. None of these
Answer: B. Marks: 1. Unit: 1.

Question: _______ timing involves a clock line.
A. Synchronous  B. Asynchronous  C. Asymmetric  D. None of these
Answer: A. Marks: 1. Unit: 1.

Question: _______ timing takes advantage of a mixture of slow and fast devices sharing the same bus.
A. Synchronous  B. Asynchronous  C. Asymmetric  D. None of these
Answer: B. Marks: 1. Unit: 1.

Question: In the 1st generation, _______ language was used to prepare programs.
A. Machine  B. Assembly  C. High level programming  D. Pseudo code
Answer: B. Marks: 1. Unit: 1.

Question: The transistor was invented at _______ laboratories.
A. AT&T  B. AT&Y  C. AM&T  D. AT&M
Answer: A. Marks: 1. Unit: 1.

Question: The _______ is used to control various modules in the system.
A. Control Bus  B. Data Bus  C. Address Bus  D. All of these
Answer: A. Marks: 1. Unit: 1.

Question: The disadvantage of a single bus structure over a multi bus structure is _______.
A. Propagation delay  B. Bottleneck because of bus capacity  C. Both A and B  D. Neither A nor B
Answer: C. Marks: 1. Unit: 1.

Question: The _______ provides a path for moving data among system modules.
A. Control Bus  B. Data Bus  C. Address Bus  D. All of these
Answer: B. Marks: 1. Unit: 1.

Question: A microcontroller is an example of _______ architecture. ...

Bitwise Operators in C Examples

Bitwise Operators in C Examples

The left shift operator shifts each bit in its left operand to the left, and the right shift operator shifts the bits to the right. These operators move each bit either left or right a specified number of times: the first operand is the value to be shifted, and the second specifies the number of bit positions by which the first operand is to be shifted. Bitwise operators are not valid for string or complex operands. The bitwise OR operator is used to turn bits ON. Bitwise operators can also be used for counting the number of trailing zeros in the binary representation of an integer.
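The shift, OR, and trailing-zero operations mentioned in this excerpt can be illustrated with a minimal sketch. The excerpt concerns C, but this sketch uses Python, where the same operators behave the same way on non-negative integers. The function name `count_trailing_zeros` and the sample values are assumptions chosen for illustration, not taken from the original article.

```python
def count_trailing_zeros(x: int) -> int:
    """Return the number of trailing zero bits of a positive integer (hypothetical helper)."""
    if x <= 0:
        raise ValueError("x must be a positive integer")
    # x & -x keeps only the lowest set bit; its bit_length minus one is that bit's index.
    return (x & -x).bit_length() - 1


value = 0b0001_0110                  # 22 in decimal
print(value << 2)                    # left shift by two positions: 88
print(value >> 1)                    # right shift by one position: 11
print(value | 0b1000)                # OR turns bit 3 on: 30
print(count_trailing_zeros(40))      # 40 is 0b101000, so 3
```

Isolating the lowest set bit with `x & -x` avoids a loop over the bits; a loop that shifts right until the low bit is 1 would be an equally valid alternative.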

Parallel Computing

Lecture 1: Computer Organization

Outline
• Overview of parallel computing
• Overview of computer organization
– Intel 8086 architecture
• Implicit parallelism
• von Neumann bottleneck
• Cache memory
– Writing cache-friendly code

Why parallel computing
• Solving an $n \times n$ linear system $Ax = b$ by using Gaussian elimination takes $\approx \frac{1}{3} n^3$ flops.
• On a Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops:
– $n = 1000$: $3.3 \times 10^{8}$ flops, 0.006 seconds
– $n = 1000000$: $3.3 \times 10^{17}$ flops, 57.9 days

What is parallel computing?
• Serial computing
• Parallel computing
https://computing.llnl.gov/tutorials/parallel_comp

Milestones in Computer Architecture
• Analytical Engine (mechanical device), 1833
– Forerunner of the modern digital computer, Charles Babbage (1792-1871) at the University of Cambridge
• Electronic Numerical Integrator and Computer (ENIAC), 1946
– Presper Eckert and John Mauchly at the University of Pennsylvania
– The first completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200 kW.
– Read in 120 cards per minute. Addition took 200 µs, division took 6 ms.
• IAS machine, 1952
– John von Neumann at Princeton’s Institute for Advanced Study (IAS)
– Programs could be represented in digital form in the computer memory, along with data. Arithmetic could be implemented using binary numbers
– Most current machines use this design
• The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley.
• PDP-1, 1960, DEC
– First minicomputer (transistorized computer)
• PDP-8, 1965, DEC
– A single bus ...
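The running-time estimate on the "Why parallel computing" slide can be reproduced with a short calculation. The following is a minimal sketch, assuming the flop count $\frac{1}{3} n^3$ implied by the slide's figures and a sustained rate of 65 Gigaflops, which is an assumed midpoint of the quoted 60-70 Gigaflops range. The function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def gaussian_elimination_flops(n: int) -> float:
    """Approximate flop count for solving an n x n system, using n^3 / 3."""
    return n**3 / 3


def estimated_seconds(n: int, flops_per_second: float = 65e9) -> float:
    """Estimated wall time at an assumed sustained rate (65 Gigaflops by default)."""
    return gaussian_elimination_flops(n) / flops_per_second


for n in (1_000, 1_000_000):
    seconds = estimated_seconds(n)
    print(
        f"n = {n}: {gaussian_elimination_flops(n):.1e} flops, "
        f"{seconds:.3g} seconds ({seconds / SECONDS_PER_DAY:.3g} days)"
    )
```

Because the cost grows with the cube of n, increasing n by a factor of 1000 increases the work by a factor of 10^9, which is why the second case moves from milliseconds to roughly two months on a single processor.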

Fractal Surfaces from Simple Arithmetic Operations

Fractal surfaces from simple arithmetic operations

Vladimir García-Morales, Departament de Termodinàmica, Universitat de València, E-46100 Burjassot, Spain. [email protected]

Fractal surfaces ('patchwork quilts') are shown to arise under most general circumstances involving simple bitwise operations between real numbers. A theory is presented for all deterministic bitwise operations on a finite alphabet. It is shown that these models give rise to a roughness exponent H that shapes the resulting spatial patterns, larger values of the exponent leading to coarser surfaces.

Keywords: fractals; self-affinity; free energy landscapes; coarse graining

arXiv:1507.01444v3 [cs.OH] 10 Jan 2016

I. INTRODUCTION

Fractal surfaces [1, 2] are ubiquitously found in biological and physical systems at all scales, ranging from atoms to galaxies [3–6]. Mathematically, fractals arise from iterated function systems [7, 8], strange attractors [9], critical phenomena [10], cellular automata [11, 12], substitution systems [11, 13] and any context where some hierarchical structure is present [14, 15]. Because of these connections, fractals are also important in some recent approaches to nonequilibrium statistical mechanics of steady states [16–19]. Fractality is intimately connected to power laws, real-valued dimensions and exponential growth of details as resulting from an increase in the resolution of a geometric object [20]. Although the rigorous definition of a fractal requires that the Hausdorff-Besicovitch dimension DF is strictly greater than the Euclidean dimension D of the space in which the fractal object is embedded, there are cases in which surfaces are sufficiently broken at all length scales so as to deserve being named 'fractals' [1, 20, 21].

Problem Solving with Programming Course Material

Problem Solving with Programming Course Material

PROBLEM SOLVING WITH PROGRAMMING

Year: I. Semester: II.
Hours/Week (C, L, T, P/D): 2, -, -, 2.
Marks (CIE, SEE, Total): 30, 70, 100.
Pre-requisite: Nil.

COURSE OUTCOMES

At the end of the course, the students will develop ability to:
1. Analyze and implement software development tools like algorithm, pseudo codes and programming structure.
2. Modularize the problems into small modules and then convert them into modular programs.
3. Apply the pointers, memory allocation techniques and use of files for dealing with variety of problems.
4. Apply C programming to solve problems related to scientific computing.
5. Develop efficient programs for real world applications.

UNIT I: Pointers
Basics of pointers, pointer to array, array of pointers, void pointer, pointer to pointer (example programs), pointer to string. Project: Simple C project by using pointers.

UNIT II: Structures
Basics of structure in C, structure members, accessing structure members, nested structures, array of structures, pointers to structures (example programs), unions, accessing union members (example programs). Project: Simple C project by using structures/unions.

UNIT III: Functions
User-defined functions, categories of functions, parameter passing in functions: call by value, call by reference, recursive functions, passing arrays to functions, passing strings to functions, passing a structure to a function. Project: Simple C project by using functions.

UNIT IV: File Management
Data files, opening and closing a data file, creating a data file, processing a data file, unformatted data files. Project: Simple C project by using files.

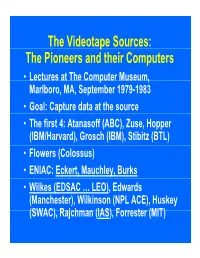

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers
• Lectures at The Computer Museum, Marlboro, MA, September 1979-1983
• Goal: Capture data at the source
• The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL)
• Flowers (Colossus)
• ENIAC: Eckert, Mauchly, Burks
• Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT)

What did it feel like then?
• What were the computers?
• Why did their inventors build them?
• What materials (technology) did they build from?
• What were their speed and memory size specs?
• How did they work?
• How were they used or programmed?
• What were they used for?
• What did each contribute to future computing?
• What were the by-products? and alumni/ae?

The “classic” five boxes of a stored program digital computer
[Figure: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA)]

How was programming done before programming languages and O/Ss?
• ENIAC was programmed by routing control pulse cables forming the “program counter”
• Clippinger and von Neumann made “function codes” for the tables of ENIAC
• Kilburn at Manchester ran the first 17 word program
• Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series
• Parallel versus Serial
• Pre-programming languages and operating systems
• Big idea: compatibility for program investment
– EDSAC was transferred to Leo
– The IAS Computers built at Universities

Time Line of First Computers
[Figure: timeline, 1935 to 1955, covering BTL, Zuse, Atanasoff, IBM ASCC and SSEC (leading to CPC), ENIAC, EDVAC, UNIVAC I, IAS, Colossus, Manchester (leading to Ferranti), EDSAC (leading to Leo), ACE (leading to DEUCE), Whirlwind, SEAC & SWAC]

ENIAC Project Time Line & Descendants
IBM 701, Philco S2000, ERA..

X86 Intrinsics Cheat Sheet Jan Finis [email protected]

x86 Intrinsics Cheat Sheet

Jan Finis [email protected]

Bit Operations: Boolean Logic
• Bool XOR (SSE2 xor; si128, ps[SSE], pd): mi xor_si128(mi a, mi b)
• Bool AND (SSE2 and; si128, ps[SSE], pd): mi and_si128(mi a, mi b)
• Bool NOT AND (SSE2 andnot; si128, ps[SSE], pd): mi andnot_si128(mi a, mi b), computes !a & b
• Bool OR (SSE2 or; si128, ps[SSE], pd): mi or_si128(mi a, mi b)

Bit Operations: Bit Shifting & Rotation
• Arithmetic Shift Right (SSE2 sra[i]; epi16-64). NOTE: Shifts elements right while shifting in sign bits.
• Logic Shift Left/Right (SSE2 sl/rl[i]; epi16-64). NOTE: Shifts elements left/right while shifting in zeros.
• Rotate Left/Right (x86 _[l]rot[w]l/r; u16-64). NOTE: Rotates bits in a left/right by a number of bits ...

Conversions: Packed Conversions (convert all elements in a packed SSE register)
• Convert Float 16bit ↔ 32bit (CVT16 cvtX_Y; ph ↔ ps). NOTE: Converts between 4x 16 bit floats and 4x 32 bit ...
• Pack With Saturation (SSE2 pack[u]s; epi16, epi32). NOTE: Packs ints from two ...
• Sign Extend (SSE4.1 cvtX_Y; epi8-32). NOTE: Sign extends each element from X to Y. Y must ...
• Zero Extend (SSE4.1 cvtX_Y; epu8-32 → epi8-32). NOTE: Zero extends each element from X to Y. Y must ...
• S/D/I32 Conversion (SSE2 cvt[t]X_Y; epi32, ps/d). NOTE: Converts packed ...

Conversions: Reinterpret Casts
• 128bit Cast (SSE2 castX_Y; si128, ps/d). NOTE: Reinterpret casts from X to Y. No operation is generated.

Conversions: Rounding (see also: conversion to int performs rounding implicitly)
• Round up (ceiling) (SSE4.1 ceil) ...

Bit Manipulation

K Bit Manipulation

K.1 Introduction

This appendix presents an extensive discussion of bit-manipulation operators, followed by a discussion of class BitSet, which enables the creation of bit-array-like objects for setting and getting individual bit values. Java provides extensive bit-manipulation capabilities for programmers who need to get down to the “bits-and-bytes” level. Operating systems, test equipment software, networking software and many other kinds of software require that the programmer communicate “directly with the hardware.” We now discuss Java’s bit-manipulation capabilities and bitwise operators.

K.2 Bit Manipulation and the Bitwise Operators

Computers represent all data internally as sequences of bits. Each bit can assume the value 0 or the value 1. On most systems, a sequence of eight bits forms a byte, the standard storage unit for a variable of type byte. Other types are stored in larger numbers of bytes. The bitwise operators can manipulate the bits of integral operands (i.e., operands of type byte, char, short, int and long), but not floating-point operands. The discussions of bitwise operators in this section show the binary representations of the integer operands.

The bitwise operators are bitwise AND (&), bitwise inclusive OR (|), bitwise exclusive OR (^), left shift (<<), signed right shift (>>), unsigned right shift (>>>) and bitwise complement (~). The bitwise AND, bitwise inclusive OR and bitwise exclusive OR operators compare their two operands bit by bit. The bitwise AND operator sets each bit in the result to 1 if and only if the corresponding bit in both operands is 1.
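The operators listed in this excerpt can be tried out in a few lines. The sketch below uses Python rather than Java, so two things are assumptions: Python integers are unbounded, so a 32-bit width is imposed explicitly to mimic Java's int, and Python has no `>>>` operator, so the hypothetical helper `unsigned_right_shift32` emulates it. The helper names and sample values are not from the original appendix.

```python
MASK32 = 0xFFFFFFFF  # assumption: model a 32-bit Java int


def to_signed32(x: int) -> int:
    """Interpret the low 32 bits of x as a two's-complement signed value."""
    x &= MASK32
    return x - (1 << 32) if x & 0x80000000 else x


def unsigned_right_shift32(x: int, n: int) -> int:
    """Emulate Java's x >>> n for int operands (hypothetical helper)."""
    # Java uses only the low five bits of the shift distance for int operands.
    return to_signed32((x & MASK32) >> (n & 31))


a, b = 0b1100, 0b1010
print(bin(a & b))                      # AND: 0b1000
print(bin(a | b))                      # inclusive OR: 0b1110
print(bin(a ^ b))                      # exclusive OR: 0b110
print(~a)                              # complement: -13
print(-8 >> 1)                         # signed right shift keeps the sign: -4
print(unsigned_right_shift32(-8, 1))   # unsigned right shift fills with zeros: 2147483644
```

The contrast between the last two lines is the point of having two right-shift operators: the signed shift copies the sign bit into the vacated positions, while the unsigned shift fills them with zeros.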

Exclusive Or from Wikipedia, the Free Encyclopedia

Exclusive or

From Wikipedia, the free encyclopedia

"XOR" redirects here. For other uses, see XOR (disambiguation), XOR gate.
"Either or" redirects here. For Kierkegaard's philosophical work, see Either/Or.

The logical operation exclusive disjunction, also called exclusive or (symbolized XOR, EOR, EXOR, ⊻ or ⊕, pronounced either /ɛks ɔr/ or /zɔr/), is a type of logical disjunction on two operands that results in a value of true if exactly one of the operands has a value of true.[1] A simple way to state this is "one or the other but not both."

Put differently, exclusive disjunction is a logical operation on two logical values, typically the values of two propositions, that produces a value of true only in cases where the truth values of the operands differ.

Contents
1 Truth table
2 Equivalencies, elimination, and introduction
3 Relation to modern algebra
4 Exclusive “or” in natural language
5 Alternative symbols
6 Properties
6.1 Associativity and commutativity
6.2 Other properties
7 Computer science
7.1 Bitwise operation
8 See also
9 Notes
10 External links

Truth table

The truth table of p XOR q (also written as p ⊕ q or p ≠ q) is as follows:
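The definition above ("true if exactly one of the operands has a value of true") is enough to generate the truth table and to check the associativity and commutativity properties named in the contents list. The following is a minimal Python sketch; the function name `xor` and the variable names are illustrative choices, not part of the article.

```python
from itertools import product


def xor(p: bool, q: bool) -> bool:
    """Exclusive or: true exactly when the truth values of the operands differ."""
    return p != q


BOOLS = (False, True)

# Truth table: one row per combination of operand values.
truth_table = [(p, q, xor(p, q)) for p, q in product(BOOLS, repeat=2)]
for p, q, result in truth_table:
    print(f"{p!s:5} {q!s:5} {result!s:5}")

# Exhaustive checks of the properties over all operand combinations.
commutative = all(xor(p, q) == xor(q, p) for p, q in product(BOOLS, repeat=2))
associative = all(
    xor(xor(p, q), r) == xor(p, xor(q, r)) for p, q, r in product(BOOLS, repeat=3)
)
# The logical definition agrees with the bitwise ^ operator on single bits.
matches_bitwise = all(
    xor(p, q) == bool(int(p) ^ int(q)) for p, q in product(BOOLS, repeat=2)
)
print(commutative, associative, matches_bitwise)
```

Because there are only two truth values, checking every combination is a complete proof of these properties for this definition.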