Building Computers in 1953: JOHNNIAC

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE: DESIGNING FOR PERFORMANCE, NINTH EDITION. William Stallings. Boston Columbus Indianapolis New York San Francisco Upper Saddle River Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montréal Toronto Delhi Mexico City São Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo. Editorial Director: Marcia Horton; Executive Editor: Tracy Dunkelberger; Associate Editor: Carole Snyder; Director of Marketing: Patrice Jones; Marketing Manager: Yez Alayan; Marketing Coordinator: Kathryn Ferranti; Marketing Assistant: Emma Snider; Director of Production: Vince O’Brien; Managing Editor: Jeff Holcomb; Production Project Manager: Kayla Smith-Tarbox; Production Editor: Pat Brown; Manufacturing Buyer: Pat Brown; Creative Director: Jayne Conte; Designer: Bruce Kenselaar; Manager, Visual Research: Karen Sanatar; Manager, Rights and Permissions: Mike Joyce; Text Permission Coordinator: Jen Roach; Cover Art: Charles Bowman/Robert Harding; Lead Media Project Manager: Daniel Sandin; Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd.; Composition: Integra Software Services Pvt. Ltd.; Printer/Binder: Edward Brothers; Cover Printer: Lehigh-Phoenix Color/Hagerstown; Text Font: Times Ten-Roman. Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey. Figure 17.11: Reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text. Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America. -

Id Question Microprocessor Is the Example of ___Architecture. A

Question: Microprocessor is the example of _______ architecture. A) Princeton B) Von Neumann C) Rockwell D) Harvard. Answer: A. Marks: 1. Unit: 1.
Question: _______ bus is unidirectional. A) Data B) Address C) Control D) None of these. Answer: B. Marks: 1. Unit: 1.
Question: Use of _______ isolates the CPU from frequent accesses to main memory. A) Local I/O controller B) Expansion bus interface C) Cache structure D) System bus. Answer: C. Marks: 1. Unit: 1.
Question: _______ buffers data transfer between the system bus and I/O controllers on the expansion bus. A) Local I/O controller B) Expansion bus interface C) Cache structure D) None of these. Answer: B. Marks: 1. Unit: 1.
Question: _______ timing involves a clock line. A) Synchronous B) Asynchronous C) Asymmetric D) None of these. Answer: A. Marks: 1. Unit: 1.
Question: _______ timing takes advantage of a mixture of slow and fast devices sharing the same bus. A) Synchronous B) Asynchronous C) Asymmetric D) None of these. Answer: B. Marks: 1. Unit: 1.
Question: In the 1st generation, _______ language was used to prepare programs. A) Machine B) Assembly C) High level programming D) Pseudo code. Answer: B. Marks: 1. Unit: 1.
Question: The transistor was invented at _______ laboratories. A) AT&T B) AT&Y C) AM&T D) AT&M. Answer: A. Marks: 1. Unit: 1.
Question: _______ is used to control various modules in the system. A) Control Bus B) Data Bus C) Address Bus D) All of these. Answer: A. Marks: 1. Unit: 1.
Question: The disadvantage of single bus structure over multi bus structure is _______. A) Propagation delay B) Bottleneck because of bus capacity C) Both A and B D) Neither A nor B. Answer: C. Marks: 1. Unit: 1.
Question: _______ provide path for moving data among system modules. A) Control Bus B) Data Bus C) Address Bus D) All of these. Answer: B. Marks: 1. Unit: 1.
Question: Microcontroller is the example of _______ architecture. -

Parallel Computing

Lecture 1: Computer Organization
Outline: • Overview of parallel computing • Overview of computer organization – Intel 8086 architecture • Implicit parallelism • von Neumann bottleneck • Cache memory – Writing cache-friendly code
Why parallel computing: • Solving an $n \times n$ linear system Ax=b by using Gaussian elimination takes $\approx \tfrac{1}{3} n^3$ flops. • On a Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops: for n = 1000, $3.3 \times 10^{8}$ flops take 0.006 seconds; for n = 1000000, $3.3 \times 10^{17}$ flops take 57.9 days.
What is parallel computing? • Serial computing • Parallel computing https://computing.llnl.gov/tutorials/parallel_comp
Milestones in Computer Architecture: • Analytical Engine (mechanical device), 1833 – Forerunner of the modern digital computer, Charles Babbage (1791-1871) at University of Cambridge • Electronic Numerical Integrator and Computer (ENIAC), 1946 – Presper Eckert and John Mauchly at the University of Pennsylvania – The first completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200 kW. – Read in 120 cards per minute; addition took 200 µs, division took 6 ms. • IAS machine, 1952 – John von Neumann at the Institute for Advanced Study (IAS) in Princeton – Program could be represented in digit form in the computer memory, along with data. Arithmetic could be implemented using binary numbers – Most current machines use this design • The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley. • PDP-1, 1960, DEC – First minicomputer (transistorized computer) • PDP-8, 1965, DEC – A single bus -

Computer Oral History Collection, 1969-1973, 1977

Computer Oral History Collection, 1969-1973, 1977 Interviewee: Morris Rubinoff Interviewer: Richard R. Mertz Date: May 17, 1971 Repository: Archives Center, National Museum of American History MERTZ: Professor Rubinoff, would you care to describe your early training and background and influences. RUBINOFF: The early training is at the University of Toronto in mathematics and physics as an undergraduate, and then in physics as a graduate. The physics was tested in research projects during World War II, which was related to the proximity fuse. In fact, a strong interest in computational techniques, numerical methods was developed then, and also in switching devices because right after the War the proximity fuse techniques were used to make measurements of the angular motions of projectiles in flight. To do this it was necessary to calculate trajectories. Calculating trajectories is an interesting problem since it relates to what made the ENIAC so interesting at Aberdeen. They were using it for calculating trajectories, unknown to me at the time. We were calculating trajectories by hand at the University of Toronto using a method which is often referred to as the Richardson method. So the whole technique of numerical analysis and numerical computation got to be very intriguing to me. MERTZ: Was this done on a Friden [or] Marchant type calculator? RUBINOFF: MERTZ: This was a War project at the University of Toronto? RUBINOFF: This was a post-War project. It was an outgrowth of a war project on proximity fuse. It was supported by the Canadian Army who were very interested in finding out what made liquid filled shell tumble rather than fly properly when they went through space. -

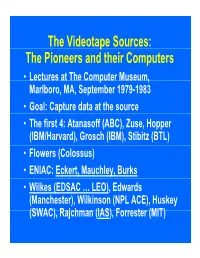

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers • Lectures at The Computer Museum, Marlboro, MA, September 1979-1983 • Goal: Capture data at the source • The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL) • Flowers (Colossus) • ENIAC: Eckert, Mauchly, Burks • Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT)
What did it feel like then? • What were the computers? • Why did their inventors build them? • What materials (technology) did they build from? • What were their speed and memory size specs? • How did they work? • How were they used or programmed? • What were they used for? • What did each contribute to future computing? • What were the by-products? and alumni/ae?
The “classic” five boxes of a stored program digital computer: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA)
How was programming done before programming languages and O/Ss? • ENIAC was programmed by routing control pulse cables forming the “program counter” • Clippinger and von Neumann made “function codes” for the tables of ENIAC • Kilburn at Manchester ran the first 17 word program • Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series • Parallel versus Serial • Pre-programming languages and operating systems • Big idea: compatibility for program investment – EDSAC was transferred to Leo – The IAS Computers built at Universities
[Figure: Time Line of First Computers, 1935 to 1955. Rows: BTL; Zuse; Atanasoff; IBM ASCC, SSEC (> CPC); ENIAC; EDVAC; UNIVAC I; IAS; Colossus; Manchester (> Ferranti); EDSAC (> Leo); ACE (> DEUCE); Whirlwind; SEAC & SWAC]
ENIAC Project Time Line & Descendants: IBM 701, Philco S2000, ERA.. -

EULOGY for WILLIS WARE Michael Rich March 28, 2014

The RAND Corporation is a nonprofit institution that helps improve policy and decisionmaking through research and analysis. This electronic document was made available from www.rand.org as a public service of the RAND Corporation. Limited Electronic Distribution Rights: This document and trademark(s) contained herein are protected by law as indicated in a notice appearing later in this work. This electronic representation of RAND intellectual property is provided for non-commercial use only. Unauthorized posting of RAND electronic documents to a non-RAND website is prohibited. RAND electronic documents are protected under copyright law. Permission is required from RAND to reproduce, or reuse in another form, any of our research documents for commercial use. For information on reprint and linking permissions, please see RAND Permissions. This product is part of the RAND Corporation corporate publication series. Corporate publications describe or promote RAND divisions and programs, summarize research results, or announce upcoming events. EULOGY FOR WILLIS WARE Michael Rich March 28, 2014 I want to begin by thanking the entire Ware Family for ensuring that all of us who knew and admired Willis Ware have this chance to reflect on his extraordinary life: • Willis’s daughters, Ali and Deb • his sons-in-law, Tom and Ed • his granddaughters, Arielle and Victoria • his great-grandson, Aidan • his brother, Stanley; sister-in-law, Sigrid; and niece, Joanne. -

Oral History Interview with Willis Ware

An Interview with Willis Ware OH 356 Conducted by Jeffrey R. Yost On 11 August 2003 RAND Corporation Santa Monica, California Charles Babbage Institute Center for the History of Information Processing University of Minnesota, Minneapolis Copyright 2003, Charles Babbage Institute Willis Ware Interview 11 August 2003 Oral History 356 Abstract: Distinguished computer scientist, longtime head of the Computer Science Department at the RAND Corporation, and pioneer and leader on issues of computer security and privacy discusses the history of Computer Science at RAND. This includes the transition of RAND to digital computing with the Johnniac project, programming and software work, and a number of other topics. Dr. Ware relates his work with, and leadership roles in, organizations such as ACM and AFIPS, as well as on the issues of computer security and privacy with the Ware Report on computer security for the Defense Science Board committee, HEW’s Advisory Committee on Automated Personal Data, and the Privacy Protection Study Commission. Finally, Dr. Ware outlines broad changes and developments within the RAND Corporation, particularly as they relate to research in computing and software at the organization. Descriptors: RAND Corporation; Institute for Advanced Study; Privacy Protection Study Commission; Association for Computing Machinery; Advisory Committee on Automated Personal Data; Computer Security; Computer Privacy; Johnniac TAPE 1 (Side A) Yost: I’m here today with Willis Ware at the RAND Corporation. This interview is part of the NSF sponsored project, “Building a Future for Software History.” Willis, could you begin by giving some biographical information about where you grew up and your evolving educational interests both before and in college and graduate school? Ware: I was born in Atlantic City, New Jersey on August the 31, 1920, so you can figure out how old I am. -

Parallel Computing

Lecture 1: Single processor performance
Why parallel computing: • Solving an $n \times n$ linear system Ax=b by using Gaussian elimination takes $\approx \tfrac{1}{3} n^3$ flops. • On a Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops: for n = 1000, $3.3 \times 10^{8}$ flops take 0.006 seconds; for n = 1000000, $3.3 \times 10^{17}$ flops take 57.9 days.
Milestones in Computer Architecture: • Analytical Engine (mechanical device), 1833 – Forerunner of the modern digital computer, Charles Babbage (1791-1871) at University of Cambridge • Electronic Numerical Integrator and Computer (ENIAC), 1946 – Presper Eckert and John Mauchly at the University of Pennsylvania – The first completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200 kW. – Read in 120 cards per minute; addition took 200 µs, division took 6 ms. • IAS machine, 1952 – John von Neumann at the Institute for Advanced Study (IAS) in Princeton – Program could be represented in digit form in the computer memory, along with data. Arithmetic could be implemented using binary numbers – Most current machines use this design • The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley. • PDP-1, 1960, DEC – First minicomputer (transistorized computer) • PDP-8, 1965, DEC – A single bus (omnibus) connecting CPU, Memory, Terminal, Paper tape I/O and Other I/O. • 7094, 1962, IBM – Scientific computing machine in early 1960s. • 8080, 1974, Intel – First general-purpose 8-bit computer on a chip • IBM PC, 1981 – Started modern personal computer era
Remark: see also http://www.computerhistory.org/timeline/?year=1946 -
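The flop-count arithmetic in the "Why parallel computing" slide can be reproduced with a short Python sketch. The function names here are hypothetical, and the sustained rate is an assumption: the slide only gives a range of about 60-70 Gigaflops, so the sketch evaluates both ends of that range rather than a single figure.

```python
# Rough reproduction of the slide's estimate: Gaussian elimination on an
# n x n system costs about n^3 / 3 floating-point operations.

SECONDS_PER_DAY = 86_400


def gaussian_elimination_flops(n: int) -> float:
    """Approximate flop count (leading term only) for an n x n system."""
    return n ** 3 / 3


def runtime_seconds(flops: float, gigaflops: float) -> float:
    """Time to execute `flops` operations at a sustained rate in Gflop/s."""
    return flops / (gigaflops * 1e9)


if __name__ == "__main__":
    # 60 and 70 Gflop/s are the assumed lower and upper sustained rates.
    for n in (1_000, 1_000_000):
        flops = gaussian_elimination_flops(n)
        slow = runtime_seconds(flops, 60.0)
        fast = runtime_seconds(flops, 70.0)
        print(f"n={n}: {flops:.2e} flops, "
              f"{fast:.3g} to {slow:.3g} s "
              f"({fast / SECONDS_PER_DAY:.3g} to {slow / SECONDS_PER_DAY:.3g} days)")
```

Because the count grows as the cube of n, a thousandfold increase in n multiplies the work by a factor of a billion, which is why the larger case moves from milliseconds to weeks on the same processor.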

RAND and the Information Evolution a History in Essays and Vignettes WILLIS H

This PDF document was made available from www.rand.org as a public service of the RAND Corporation. The RAND Corporation is a nonprofit research organization providing objective analysis and effective solutions that address the challenges facing the public and private sectors around the world. Limited Electronic Distribution Rights: This document and trademark(s) contained herein are protected by law as indicated in a notice appearing later in this work. This electronic representation of RAND intellectual property is provided for non-commercial use only. Unauthorized posting of RAND PDFs to a non-RAND Web site is prohibited. RAND PDFs are protected under copyright law. Permission is required from RAND to reproduce, or reuse in another form, any of our research documents for commercial use. For information on reprint and linking permissions, please see RAND Permissions. This product is part of the RAND Corporation corporate publication series. Corporate publications describe or promote RAND divisions and programs, summarize research results, or announce upcoming events. RAND and the Information Evolution: A History in Essays and Vignettes. WILLIS H. WARE. Funding for the publication of this document was provided through a generous gift from Paul Baran, an alumnus of RAND, and support from RAND via its philanthropic donors and income from operations. -

The Baleful Effect of Computer Languages and Benchmarks Upon Applied Mathematics, Physics and Chemistry

SIAMjvnl July 16, 1997 The John von Neumann Lecture on The Baleful Effect of Computer Languages and Benchmarks upon Applied Mathematics, Physics and Chemistry presented by Prof. W. Kahan Mathematics Dept., and Elect. Eng. & Computer Sci. Dept. University of California Berkeley CA 94720-1776 (510) 642-5638 at the 45th Annual Meeting of S.I.A.M. Stanford University 15 July 1997 PostScript file: http://http.cs.berkeley.edu/~wkahan/SIAMjvnl.ps For more details see also …/Triangle.ps and …/Cantilever.ps Abstract: An unhealthy preoccupation with floating–point arithmetic’s Speed, as if it were the same as Throughput, has distracted the computing industry and its marketplace from other important qualities that computers’ arithmetic hardware and software should possess too, qualities like Accuracy, Reliability, Ease of Use, Adaptability, ··· These qualities, especially the ones that arise out of Intellectual Economy, tend to be given little weight partly because they are so hard to quantify and to compare meaningfully. Worse, technical and political limitations built into current benchmarking practices discourage innovations and accommodations of features necessary as well as desirable for robust and reliable technical computation. Most exasperating are computer languages like Java that lack locutions to access advantageous features in hardware that we consequently cannot use though we have paid for them. That lack prevents benchmarks from demonstrating the features' benefits, thus denying language implementors any incentive to accommodate those features in their compilers. It is a vicious circle that the communities concerned with scientific and engineering computation must help the computing industry break. -

Computer History II

Key Events in the History of Computing II: Post World War II. 1945: Grace Murray Hopper, working in a temporary World War I building at Harvard University on the Mark II computer, found the first computer bug beaten to death in the jaws of a relay. She glued it into the logbook of the computer, and thereafter, whenever the machine stopped (which was frequent), they told Howard Aiken that they were "debugging" the computer. The very first bug still exists in the National Museum of American History of the Smithsonian Institution. The word bug and the concept of debugging had been used previously, perhaps by Edison, but this was probably the first verification that the concept applied to computers. 14 February 1946: ENIAC was unveiled in Philadelphia. ENIAC was still only a stepping stone towards the true computer: unlike Babbage, Eckert and Mauchly completed construction even though they knew that the machine was not the ultimate in state-of-the-art technology. ENIAC was programmed by rewiring the interconnections between its various components and included the capability of parallel computation. ENIAC was later modified into a stored-program machine, but not before other machines had laid claim to being the first true computer. 1946 was also the year in which the first computer meeting took place, with the University of Pennsylvania organizing the first of a series of "summer meetings" where scientists from around the world learned about ENIAC and the plans for EDVAC. Among the attendees was Maurice Wilkes from the University of Cambridge, who would return to England to build the EDSAC. -

Computer Architecture ELE 475 / COS 475 Slide Deck 1

Computer Architecture ELE 475 / COS 475 Slide Deck 1: Introduction and Instruction Set Architectures. David Wentzlaff, Department of Electrical Engineering, Princeton University.
What is Computer Architecture? Application and Physics are separated by a gap too large to bridge in one step. In its broadest definition, computer architecture is the design of the abstraction/implementation layers that allow us to execute information processing applications efficiently using manufacturing technologies.
Abstractions in Modern Computing Systems: Application, Algorithm, Programming Language, Operating System/Virtual Machines, Instruction Set Architecture, Microarchitecture, Register-Transfer Level, Gates, Circuits, Devices, Physics, with the middle layers labeled Computer Architecture (ELE 475).
Computer Architecture is Constantly Changing. Application Requirements: • Suggest how to improve