Von Neumann Architecture

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE: DESIGNING FOR PERFORMANCE, NINTH EDITION. William Stallings. Boston Columbus Indianapolis New York San Francisco Upper Saddle River Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montréal Toronto Delhi Mexico City São Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo. Editorial Director: Marcia Horton. Designer: Bruce Kenselaar. Executive Editor: Tracy Dunkelberger. Manager, Visual Research: Karen Sanatar. Associate Editor: Carole Snyder. Manager, Rights and Permissions: Mike Joyce. Director of Marketing: Patrice Jones. Text Permission Coordinator: Jen Roach. Marketing Manager: Yez Alayan. Cover Art: Charles Bowman/Robert Harding. Marketing Coordinator: Kathryn Ferranti. Lead Media Project Manager: Daniel Sandin. Marketing Assistant: Emma Snider. Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd. Director of Production: Vince O’Brien. Managing Editor: Jeff Holcomb. Composition: Integra Software Services Pvt. Ltd. Production Project Manager: Kayla Smith-Tarbox. Printer/Binder: Edward Brothers. Production Editor: Pat Brown. Cover Printer: Lehigh-Phoenix Color/Hagerstown. Manufacturing Buyer: Pat Brown. Text Font: Times Ten-Roman. Creative Director: Jayne Conte. Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey. Figure 17.11: Reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text. Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America.

System for Large-Area Display of Two-Color Information

United States Patent Office 3,069,681. Patented Dec. 18, 1962. SYSTEM FOR LARGE-AREA DISPLAY OF TWO-COLOR INFORMATION. William R. Sloan, Fort Wayne, Ind., assignor to International Telephone and Telegraph Corporation. Filed Mar. 14, 1960, Ser. No. 14,743. 9 Claims. (Cl. 346-74). 3 sheets of drawings (figures not reproduced; legible labels: horizontal sweep generator, 256 kc pulse generator, bistable stages, display surface).

This invention relates to a system for presenting in two colors alpha-numeric information, i.e., words and numbers, and graphical information, such as maps, graphs, etc., visually on a large-area display for group ...

... when the electrodes are respectively pulsed. Each of the pluralities of electrodes is in spaced-apart alignment along one dimension of the sheet and the two pluralities of electrodes are respectively spaced-apart along the other dimension of the sheet. Means are provided for moving the sheet with respect to the electrodes along the other dimension. First switching means is provided sequentially coupling the first electrodes to the converting means for sampling one of the color-responsive signals at discrete intervals and for pulsing the first electrodes responsive to the presence of the one color signal at the respective intervals.

GDS II Technical Specification: Contents

INTERACTIVE GRAPHIC SYSTEMS. GDS II Technical Specification. CONTENTS

1 Introducing GDS II
2 General Description
2.1 The Problems of VLSI
2.2 The Solution - GDS II
2.3 Exploiting the Latest Technology
3 System Operation
3.1 Database Extensibility
3.2 Database Elements
3.3 Database Construction
3.4 Menu Operations
3.5 Graphic Display
4 Specifications
4.1 Database Features
4.2 Input/Editing
4.3 Display Control
4.4 Background Programs
4.5 Application Programming Tools
4.6 Hardware
5 Software
5.1 Multiground RDOS
5.2 Database Management System
5.3 GPL™

Id Question Microprocessor Is the Example of ___Architecture. A

Question: Microprocessor is the example of _______ architecture. A) Princeton B) Von Neumann C) Rockwell D) Harvard. Answer: A. Marks: 1. Unit: 1.
Question: _______ bus is unidirectional. A) Data B) Address C) Control D) None of these. Answer: B. Marks: 1. Unit: 1.
Question: Use of _______ isolates the CPU from frequent accesses to main memory. A) Local I/O controller B) Expansion bus interface C) Cache structure D) System bus. Answer: C. Marks: 1. Unit: 1.
Question: _______ buffers data transfer between system bus and I/O controllers on expansion bus. A) Local I/O controller B) Expansion bus interface C) Cache structure D) None of these. Answer: B. Marks: 1. Unit: 1.
Question: _______ timing involves a clock line. A) Synchronous B) Asynchronous C) Asymmetric D) None of these. Answer: A. Marks: 1. Unit: 1.
Question: _______ timing takes advantage of a mixture of slow and fast devices sharing the same bus. A) Synchronous B) Asynchronous C) Asymmetric D) None of these. Answer: B. Marks: 1. Unit: 1.
Question: In the 1st generation, _______ language was used to prepare programs. A) Machine B) Assembly C) High level programming D) Pseudo code. Answer: B. Marks: 1. Unit: 1.
Question: The transistor was invented at _______ laboratories. A) AT&T B) AT&Y C) AM&T D) AT&M. Answer: A. Marks: 1. Unit: 1.
Question: _______ is used to control various modules in the system. A) Control Bus B) Data Bus C) Address Bus D) All of these. Answer: A. Marks: 1. Unit: 1.
Question: The disadvantage of single bus structure over multi bus structure is _______. A) Propagation delay B) Bottleneck because of bus capacity C) Both A and B D) Neither A nor B. Answer: C. Marks: 1. Unit: 1.
Question: _______ provide path for moving data among system modules. A) Control Bus B) Data Bus C) Address Bus D) All of these. Answer: B. Marks: 1. Unit: 1.
Question: Microcontroller is the example of _______ architecture. ...

An Inexpensive VTCT Adapter for All Tektronix SCTs, Version 1.04.1

Page 1 of 38. An Inexpensive VTCT Adapter for All Tektronix SCTs, Version 1.04.1. Figure 1: Front cover. Tektronix 577 Semiconductor Curve Tracer displaying the characteristic curves of a TRIODE VACUUM TUBE.

AN INEXPENSIVE VACUUM TUBE CURVE TRACER ADAPTER FOR ALL TEKTRONIX SEMICONDUCTOR CURVE TRACERS
© Dennis Tillman W7pF, [email protected], Version 1.04.1, Mar. 10, 2020

CONTENTS
INTRODUCTION
TEKTRONIX CURVE TRACER FEATURE COMPARISON
TEKTRONIX CURVE TRACERS
VACUUM TUBE TESTER FEATURE COMPARISON
THEORY OF OPERATION
EICO 667 MODIFICATIONS
WHAT CAN I EXPECT WITH THE CURVE TRACER I MAY ALREADY OWN?
WHAT YOU WILL NEED TO MAKE THIS VTCT
TWO CHARACTERISTIC CURVES ...

Parallel Computing

Lecture 1: Computer Organization

Outline
• Overview of parallel computing
• Overview of computer organization
– Intel 8086 architecture
• Implicit parallelism
• von Neumann bottleneck
• Cache memory
– Writing cache-friendly code

Why parallel computing
• Solving an n × n linear system Ax = b by using Gaussian elimination takes ≈ (1/3)n^3 flops.
• On a Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops:
n = 1000: 3.3×10^8 flops, 0.006 seconds
n = 1000000: 3.3×10^17 flops, 57.9 days

What is parallel computing?
• Serial computing
• Parallel computing
https://computing.llnl.gov/tutorials/parallel_comp

Milestones in Computer Architecture
• Analytical Engine (mechanical device), 1833
– Forerunner of the modern digital computer, Charles Babbage (1792-1871) at University of Cambridge
• Electronic Numerical Integrator and Computer (ENIAC), 1946
– Presper Eckert and John Mauchly at the University of Pennsylvania
– The first completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200 kW.
– Read in 120 cards per minute. Addition took 200 µs, division took 6 ms.
• IAS machine, 1952
– John von Neumann at Princeton’s Institute for Advanced Study (IAS)
– Program could be represented in digital form in the computer memory, along with data. Arithmetic could be implemented using binary numbers
– Most current machines use this design
• The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley.
• PDP-1, 1960, DEC
– First minicomputer (transistorized computer)
• PDP-8, 1965, DEC
– A single bus ...
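The timing figures quoted in the parallel computing excerpt above (3.3×10^8 flops for n = 1000, 3.3×10^17 flops for n = 1000000) can be reproduced with a few lines of Python. This is only a rough sketch: it assumes a cost of n^3/3 flops, which is what the quoted counts imply, and a sustained rate of 65 Gflop/s, an arbitrary midpoint of the quoted 60-70 Gigaflops range. The function names are illustrative and do not come from the lecture.

```python
def gaussian_elimination_flops(n: int) -> float:
    """Approximate flop count for an n x n solve, using the n^3 / 3
    estimate implied by the figures quoted in the excerpt."""
    return n ** 3 / 3


def solve_time_seconds(n: int, flops_per_second: float = 65e9) -> float:
    """Estimated wall time at an assumed sustained rate.

    The default of 65 Gflop/s is an assumption: the midpoint of the
    60-70 Gigaflops range quoted for the Core i7 975.
    """
    return gaussian_elimination_flops(n) / flops_per_second


if __name__ == "__main__":
    for n in (1_000, 1_000_000):
        flops = gaussian_elimination_flops(n)
        seconds = solve_time_seconds(n)
        days = seconds / 86_400  # seconds per day
        print(f"n={n:>9,}  flops={flops:.2e}  time={seconds:.3g} s  ({days:.3g} days)")
```

Under these assumptions the n = 1000 case finishes in a few milliseconds, while the n = 1000000 case takes on the order of two months, which is in line with the 57.9 days quoted and is the motivation the slide gives for parallel computing.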

Frequency Spectrum Generated by Thyristor Control

Electrocomponent Science and Technology, 1974, Vol. 1, pp. 43-49. © Gordon and Breach Science Publishers Ltd. Printed in Great Britain.

FREQUENCY SPECTRUM GENERATED BY THYRISTOR CONTROL
J. K. HALL and N. C. JONES, Loughborough University of Technology, Loughborough, Leics., U.K.
(Received October 30, 1973; in final form December 19, 1973)

This paper describes the measured harmonics in the load currents of thyristor circuits and shows that with firing angle control the harmonic amplitudes decrease sharply with increasing harmonic frequency, but that they extend to very high harmonic orders of around 6000. The amplitudes of the harmonics are a maximum for a firing delay angle of around 90°. Integral cycle control produces only low order harmonics and sub-harmonics. It is also shown that with firing angle control apparently random inter-harmonic noise is present and that the harmonics fall below this noise level at frequencies of approximately 250 kHz for a switched 50 Hz waveform and for the resistive load used. The noise amplitude decreases with increasing frequency and is a maximum with 90° firing delay.

INTRODUCTION

Thyristors are now widely used for control of power in both d.c. and a.c. circuits. The advantages of relatively small size, low losses and fast switching speeds have contributed to the vast growth in application since their introduction, when they were basically a solid-state replacement for mercury-arc ...

... inductance. [6,7] Literature on the subject tends to assume that noise is due to the high frequency harmonics generated by thyristor switch-on and the design of suppression components is based on this. This investigation has been performed in order to establish the validity of this assumption, since it is believed that there may be a number of perhaps separate sources of noise in thyristor circuits.

Tabulation of Published Data on Electron Devices of the U.S.S.R. Through December 1976

NBSIR 78-1564

TABULATION OF PUBLISHED DATA ON ELECTRON DEVICES OF THE U.S.S.R. THROUGH DECEMBER 1976

Charles P. Marsden, Electron Devices Division, Center for Electronics and Electrical Engineering, National Bureau of Standards, Washington, DC 20234. December 1978. Final.

U.S. DEPARTMENT OF COMMERCE, Juanita M. Kreps, Secretary; Dr. Sidney Harman, Under Secretary; Jordan J. Baruch, Assistant Secretary for Science and Technology. NATIONAL BUREAU OF STANDARDS, Ernest Ambler, Director.

TABLE OF CONTENTS
Preface
1. Introduction
2. Description of the Tabulation
3. Organization of the Tabulation
4. Terminology Used in the Tabulation
5. Groups:
I. Numerical
II. Receiving Tubes
III. Power Tubes
IV. Rectifier Tubes
IV-A. Mechanotrons, Two-Anode Diode
V. Voltage Regulator Tubes
VI. Current Regulator Tubes
VII. Thyratrons
VIII. Cathode Ray Tubes
VIII-A. Vidicons
IX. Microwave Tubes
X. Transistors
X-A-1. Integrated Circuits
X-A-2. Integrated Circuits (Computer)
X-A-3. Integrated Circuits (Driver)
X-A-4. Integrated Circuits (Linear)
X-B. ...

Hereby the Screen Stands in For, and Thereby Occludes, the Deeper Workings of the Computer Itself

John Warnock and an IDI graphical display unit, University of Utah, 1968. Courtesy Salt Lake City Deseret News. doi:10.1162/GREY_a_00233

The Random-Access Image: Memory and the History of the Computer Screen
JACOB GABOURY

A memory is a means for displacing in time various events which depend upon the same information. (J. Presper Eckert Jr.) [1]

When we speak of graphics, we think of images. Be it the windowed interface of a personal computer, the tactile swipe of icons across a mobile device, or the surreal effects of computer-enhanced film and video games: all are graphics. Understandably, then, computer graphics are most often understood as the images displayed on a computer screen. This pairing of the image and the screen is so natural that we rarely theorize the screen as a medium itself, one with a heterogeneous history that develops in parallel with other visual and computational forms. [2] What then, of the screen? To be sure, the computer screen follows in the tradition of the visual frame that delimits, contains, and produces the image. [3] It is also the skin of the interface that allows us to engage with, augment, and relate to technical things. [4] But the computer screen was also a cathode ray tube (CRT) phosphorescing in response to an electron beam, modified by a grid of randomly accessible memory that stores, maps, and transforms thousands of bits in real time. The screen is not simply an enduring technique or evocative metaphor; it is a hardware object whose transformations have shaped the material conditions of our visual culture.

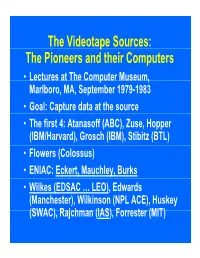

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers
• Lectures at The Computer Museum, Marlboro, MA, September 1979-1983
• Goal: Capture data at the source
• The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL)
• Flowers (Colossus)
• ENIAC: Eckert, Mauchly, Burks
• Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT)

What did it feel like then?
• What were the computers?
• Why did their inventors build them?
• What materials (technology) did they build from?
• What were their speed and memory size specs?
• How did they work?
• How were they used or programmed?
• What were they used for?
• What did each contribute to future computing?
• What were the by-products? And alumni/ae?

The “classic” five boxes of a stored program digital computer: Memory (M), Central Control (CC), Central Arithmetic (CA), Input (I), Output (O)

How was programming done before programming languages and O/Ss?
• ENIAC was programmed by routing control pulse cables forming the “program counter”
• Clippinger and von Neumann made “function codes” for the tables of ENIAC
• Kilburn at Manchester ran the first 17 word program
• Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series
• Parallel versus Serial
• Pre-programming languages and operating systems
• Big idea: compatibility for program investment
– EDSAC was transferred to Leo
– The IAS Computers built at Universities

[Figure: Time Line of First Computers, 1935-1955. Rows: BTL; Zuse; Atanasoff; IBM ASCC, SSEC (leading to CPC); ENIAC; EDVAC; UNIVAC I; IAS; Colossus; Manchester (leading to Ferranti); EDSAC (leading to Leo); ACE (leading to DEUCE); Whirlwind; SEAC & SWAC]

ENIAC Project Time Line & Descendants: IBM 701, Philco S2000, ERA..

Electronics Components

CURRENT INDUSTRIAL REPORTS SERIES 2007. MA334Q: SEMICONDUCTORS, ELECTRONIC COMPONENTS, AND SEMICONDUCTOR MANUFACTURING EQUIPMENT. DEFINITIONS AND SPECIAL INSTRUCTIONS

1. Scope of survey

This survey covers the manufacture of semiconductors, printed circuit boards, connectors, capacitors, and other electronic components (except relays) and semiconductor machinery in the United States. (See Reference List for complete list of products collected on this survey.)

Your figure should include: Electronic components parts (integrated microcircuits, transistors, diodes, rectifiers, capacitors, resistors, coils, transformers, connectors, filters, etc.)

but exclude:

Electric wiring devices should be reported on survey MA335K, "Wiring Devices and Supplies."

Home entertainment electronic equipment, such as home and automobile radio receivers, television receivers, phonographs, hi-fidelity components, consumer audio and video tape recorders, loudspeakers, microphones, ear phones, phonograph cartridges, and public address systems should be reported on survey MA334M, “Consumer Electronics.”

Analytical and optical instruments should be reported in MA334A, “Electromedical Equipment and Analytical Instructions.”

2. Figures to be reported

Companies with more than one establishment manufacturing the products covered by this survey are requested to complete a separate report form for each location. If you have not received a separate form for each of your establishments, please call the contact shown on the report form or write to the U.S. Census Bureau for additional forms.

a. Value of Shipments

The figures on value of shipments should include the physical shipments of all products sold, transferred to other establishments within your company, or shipped on consignment, whether for domestic or export sale. The value represents the net sales price, f.o.b. plant, to the customer or branch to which the products are shipped, net of discounts, allowances, freight charges and returns.

Master Product Fisting

Richardson "' Electronics, Ltd. Master Product fisting Part #and Description Guide • Electron Tubes • Semiconductors r Electron Tubes Part Description Part # Description Part # Description LCMG-B X-Ray Tube 2AS15A Receiving Rectifier QB3.5/750GA Power Tetrode QEL1/150 Power Tetrode 2AV2 Receiving Tube 6163.5/750/6156 Power Tetrode CCS-1/Y799 CC Power Tetrode 2822 Planar Diode QBL3.5/2000 Power Triode PE1/100/6083 Power Pentode 2B35/EA50 UHF Diode W3/2GC TWi C1A Thyratron 2694 Twin Tetrode W3/2GR TWi GV1A-1650 Corona Voltage Reg 2BU2/2AS2A/2AH2 Receiving Tube 61QV03/20A UHF Twin Tetrode CE1A/B Phototube 2C36 UHF Triode QQE03/20/6252 UHF Twin Tetrode 1A3/DA90 Mini HF Diode 2C39A Planar Triode QQE03/12/6360A Twin Tetrode 1AD2A/1BY2 Receiving Tube 2C39BA Planar Triode OE3/85A1 Voltage Regulator C1 B/3C31 /5664 Thyratron 2C39WA Planar Triode OG3/85A2 MIN Volt Regulator 1B3GT/1 G3GT Receiving Tube 2C40A Planar Triode QB3/200 Power Tetrode 1B35A ATR Tube 2C43 Planar Triode QB3/300 Power Tetrode 1658A TR Tube 2C51/396A MIN Twin Triode QB3/300GA Power Tetrode 1859/R1130B Glow Modulator 2C53 High Voltage Triode T83/750 Power Triode 1B63B TR Tube 2CW4 Receiving Tube OA3NR75 Voltage Regulator 1885 Geiger-Mueller Tube 2D21 Thyratron OB3NR90 Voltage Regulator 1BQ2IDY802 Receiving Rectifier 2D21W/5727 MIN Thyratron OC3NR105 Voltage Regulator 1C21 Cold Cathode 2D54 Receiving Tube OD3NR150 Voltage Regulator 1D21 /SN4 Cold Cathode Dischg 2E22 Pentode OB3A Receiving Tube 1G3GT/ 1B3GT Receiving Tube 2E24 Beam Amplifier OC3A Voltage Regulator 1G35P Hydrogen Thyratron 2E26 Beam Amplifier OD3A Voltage Regulator 1HSGT Recv.