LDL-2014 3rd Workshop on Linked Data in Linguistics

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Hittite Etymologies and Notes*

Studia Linguistica Universitatis Iagellonicae Cracoviensis 129 (2012) DOI 10.4467/20834624SL.12.015.0604 ROBERT WOODHOUSE The University of Queensland, Brisbane HITTITE ETYMOLOGIES AND NOTES* Keywords: Hittite, etymology, Proto-Indo-European, historical phonology, semantics Abstract Discussed are the etymologies of twelve Hittite words and word groups (alpa- ‘cloud’, aku- ‘seashell’, ariye/a-zi ‘determine by or consult an oracle’, heu- / he(y)aw- ‘rain’, hāli- ‘pen, corral’, kalmara- ‘ray’ etc., māhla- ‘grapevine branch’, sūu, sūwaw- ‘full’, tarra-tta(ri) ‘be able’ and tarhu-zi ‘id.; conquer’, idālu- ‘evil’, tara-i / tari- ‘become weary’, henkan ‘death, doom’) and some points of Hittite historical phonology, such as the fate of medial *-h2n- (sub §7) and final *-i (§13), all of which appear to receive somewhat inadequate treatment in Kloekhorst’s 2008 Hittite etymological dictionary. Several old etymologies are defended and some new ones suggested. The following notes were compiled while writing a response (in press b) to that part of the (2006) paper, recently kindly brought to my attention by its author, Professor Witold Mańczak, that purports to unseat the laryngeal theory on the basis of allegedly incompatible Hittite material collected over three decades ago by Tischler (1980). The massive debate on the laryngeal theory that essentially followed Tischler’s paper was no doubt in part a response to it and produced solutions to most if not all of the problems raised by Tischler, a position I attempt to summarize in my own paper noted above with reference to the superb Hittite etymological dictionary recently published by Kloekhorst (2008, hereinafter referred to as K:) with its several innovations in the areas of Hittite and Anatolian historical phonology and morphology.

What the Neurocognitive Study of Inner Language Reveals About Our Inner Space Hélène Loevenbruck

What the neurocognitive study of inner language reveals about our inner space Hélène Loevenbruck To cite this version: Hélène Loevenbruck. What the neurocognitive study of inner language reveals about our inner space. Epistémocritique, épistémocritique : littérature et savoirs, 2018, Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, 18. hal-02039667 HAL Id: hal-02039667 https://hal.archives-ouvertes.fr/hal-02039667 Submitted on 20 Sep 2019 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Preliminary version produced by the author. In Lœvenbruck H. (2008). Épistémocritique, n° 18 : Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, Stéphanie Smadja, Pierre-Louis Patoine (eds.) [http://epistemocritique.org/what-the-neurocognitive-study-of-inner-language-reveals-about-our-inner-space/] - hal-02039667 What the neurocognitive study of inner language reveals about our inner space Hélène Lœvenbruck Université Grenoble Alpes, CNRS, Laboratoire de Psychologie et NeuroCognition (LPNC), UMR 5105, 38000, Grenoble France Abstract Our inner space is furnished, and sometimes even stuffed, with verbal material. The nature of inner language has long been under the careful scrutiny of scholars, philosophers and writers, through the practice of introspection. The use of recent experimental methods in the field of cognitive neuroscience provides a new window of insight into the format, properties, qualities and mechanisms of inner language.

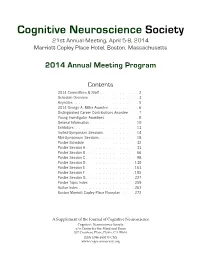

CNS 2014 Program

Cognitive Neuroscience Society 21st Annual Meeting, April 5-8, 2014 Marriott Copley Place Hotel, Boston, Massachusetts 2014 Annual Meeting Program Contents: 2014 Committees & Staff; Schedule Overview; Keynotes; 2014 George A. Miller Awardee; Distinguished Career Contributions Awardee; Young Investigator Awardees; General Information; Exhibitors; Invited-Symposium Sessions; Mini-Symposium Sessions; Poster Schedule; Poster Session A; Poster Session B; Poster Session C; Poster Session D; Poster Session E; Poster Session F; Poster Session G; Poster Topic Index; Author Index; Boston Marriott Copley Place Floorplan. A Supplement of the Journal of Cognitive Neuroscience Cognitive Neuroscience Society c/o Center for the Mind and Brain 267 Cousteau Place, Davis, CA 95616 ISSN 1096-8857 © CNS www.cogneurosociety.org 2014 Committees & Staff Mini-Symposium Committee: David Badre, Ph.D., Brown University (Chair); Adam Aron, Ph.D., University of California, San Diego; Lila Davachi, Ph.D., New York University; Elizabeth Kensinger, Ph.D., Boston College; Gina Kuperberg, Ph.D., Harvard University; Thad Polk, Ph.D., University of Michigan. Governing Board: Roberto Cabeza, Ph.D., Duke University; Marta Kutas, Ph.D., University of California, San Diego; Helen Neville, Ph.D., University of Oregon; Daniel Schacter, Ph.D., Harvard University; Michael S. Gazzaniga, Ph.D., University of California, Santa Barbara (ex officio); George R. Mangun, Ph.D., University of California,

An Amerind Etymological Dictionary

An Amerind Etymological Dictionary © 2007 by Merritt Ruhlen Printed in the United States of America Library of Congress Cataloging-in-Publication Data Greenberg, Joseph H. Ruhlen, Merritt An Amerind Etymological Dictionary Bibliography: p. Includes indexes. 1. Amerind Languages--Etymology--Classification. I. Title. P000.G0 2007 000!.012 00-00000 ISBN 0-0000-0000-0 (alk. paper) This book is dedicated to the Amerind people, the first Americans Preface The present volume is a revision, extension, and refinement of the evidence for the Amerind linguistic family that was initially offered in Greenberg (1987). This revision entails (1) the correction of a number of forms, and the elimination of others, on the basis of criticism by specialists in various Amerind languages; (2) the consolidation of certain Amerind subgroup etymologies (given in Greenberg 1987) into Amerind etymologies; (3) the addition of many reconstructions from different levels of Amerind, based on a comprehensive database of all known reconstructions for Amerind subfamilies; and, finally, (4) the addition of a number of new Amerind etymologies presented here for the first time. I believe the present work represents an advance over the original, but it is at the same time simply one step forward on a project that will never be finished. M. R. September 2007 Contents: Introduction; Dictionary; Maps; Classification of Amerind Languages; References; Semantic Index. Introduction This volume presents the lexical and grammatical evidence that defines the Amerind linguistic family. The evidence is presented in terms of 913 etymologies, arranged alphabetically according to the English gloss.

Talking to Computers in Natural Language

Talking to Computers in Natural Language Natural language understanding is as old as computing itself, but recent advances in machine learning and the rising demand of natural-language interfaces make it a promising time to once again tackle the long-standing challenge. By Percy Liang DOI: 10.1145/2659831 As you read this sentence, the words on the page are somehow absorbed into your brain and transformed into concepts, which then enter into a rich network of previously-acquired concepts. This process of language understanding has so far been the sole privilege of humans. But the universality of computation, as formalized by Alan Turing in the early 1930s, which states that any computation could be done on a Turing machine, offers a tantalizing possibility that a computer could understand language as well. Later, Turing went on in his seminal 1950 article, “Computing Machinery and Intelligence,” to propose the now-famous Turing test, a bold and speculative method to evaluate whether a computer actually understands language (or more broadly, is “intelligent”). While this test has led to the development of amusing chatbots that attempt to fool human judges by engaging in light-hearted banter, the grand challenge of developing serious programs that can truly understand language in useful ways remains wide […]
[…] cial intelligence at the time. Daniel Bobrow built a system for his Ph.D. thesis at MIT to solve algebra word problems found in high-school algebra books, for example: “If the number of customers Tom gets is twice the square of 20% of the number of advertisements he runs, and the number of advertisements is 45, then what is the number of customers Tom gets?” [1]. […] Figure 1). SHRDLU could both answer questions and execute actions, for example: “Find a block that is taller than the one you are holding and put it into the box.” In this case, SHRDLU would first have to understand that the blue block is the referent and then perform the action by moving the small green block out of the way and then lifting the […]
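The algebra word problem quoted in this excerpt can be worked through by hand: 20% of 45 advertisements is 9, the square of 9 is 81, and twice that is 162 customers. The short Python sketch below only checks that arithmetic; the function name `customers` is a hypothetical label for illustration and has nothing to do with how Bobrow's system actually parsed or solved such problems.

```python
from fractions import Fraction


def customers(advertisements: int) -> Fraction:
    """Twice the square of 20% of the number of advertisements."""
    # Use exact rational arithmetic so that 20% of 45 is exactly 9.
    twenty_percent = Fraction(20, 100) * advertisements
    return 2 * twenty_percent ** 2


if __name__ == "__main__":
    print(customers(45))  # 162
```

Exact fractions are used instead of floats purely to keep the check free of rounding concerns.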

Revealing the Language of Thought Brent Silby

Revealing the Language of Thought An e-book by BRENT SILBY This paper was produced at the Department of Philosophy, University of Canterbury, New Zealand Copyright © Brent Silby 2000 Contents Abstract Chapter 1: Introduction Chapter 2: Thinking Sentences 1. Preliminary Thoughts 2. The Language of Thought Hypothesis 3. The Map Alternative 4. Problems with Mentalese Chapter 3: Installing New Technology: Natural Language and the Mind 1. Introduction 2. Language... what's it for? 3. Natural Language as the Language of Thought 4. What can we make of the evidence? Chapter 4: The Last Stand... Don't Replace The Old Code Yet 1. The Fight for Mentalese 2. Pinker's Resistance 3. Pinker's Continued Resistance 4. A Concluding Thought about Thought Chapter 5: A Direction for Future Thought 1. The Review 2. The Conclusion 3. Expanding the mind beyond the confines of the biological brain References / Acknowledgments Abstract Language of thought theories fall primarily into two views. The first view sees the language of thought as an innate language known as mentalese, which is hypothesized to operate at a level below conscious awareness while at the same time operating at a higher level than the neural events in the brain. The second view supposes that the language of thought is not innate. Rather, the language of thought is natural language. So, as an English speaker, my language of thought would be English. My goal is to defend the second view.

3 Corpus Tools for Lexicographers

3 Corpus tools for lexicographers ADAM KILGARRIFF AND IZTOK KOSEM 3.1 Introduction To analyse corpus data, lexicographers need software that allows them to search, manipulate and save data, a ‘corpus tool’. A good corpus tool is the key to a comprehensive lexicographic analysis; a corpus without a good tool to access it is of little use. Both corpus compilation and corpus tools have been swept along by general technological advances over the last three decades. Compiling and storing corpora has become far faster and easier, so corpora tend to be much larger than previous ones. Most of the first COBUILD dictionary was produced from a corpus of eight million words. Several of the leading English dictionaries of the 1990s were produced using the British National Corpus (BNC), of 100 million words. Current lexicographic projects we are involved in use corpora of around a billion words, though this is still less than one hundredth of one percent of the English language text available on the Web (see Rundell, this volume). The amount of data to analyse has thus increased significantly, and corpus tools have had to be improved to assist lexicographers in adapting to this change. Corpus tools have become faster, more multifunctional, and customizable. In the COBUILD project, getting concordance output took a long time and then the concordances were printed on paper and handed out to lexicographers (Clear 1987).
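To make the idea of concordance output concrete, here is a minimal keyword-in-context (KWIC) sketch in Python. It is an illustration only, not the software described in the chapter: the function name `concordance`, the `width` parameter, and the bracketed output layout are all assumptions made for this example.

```python
import re


def concordance(text: str, keyword: str, width: int = 30) -> list[str]:
    """Return one keyword-in-context line per whole-word match of `keyword`."""
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    lines = []
    for match in pattern.finditer(text):
        # Take up to `width` characters of context on each side of the hit.
        left = text[max(0, match.start() - width):match.start()]
        right = text[match.end():match.end() + width]
        # Right-align the left context so the keywords line up in a column.
        lines.append(f"{left:>{width}} [{match.group(0)}] {right}")
    return lines


if __name__ == "__main__":
    sample = ("A good corpus tool is the key. "
              "A corpus without a good tool is of little use.")
    for line in concordance(sample, "corpus", width=20):
        print(line)
```

Real corpus tools add indexing, sorting, and lemma or part-of-speech queries on top of this basic display; a plain regular-expression scan like the one above would likely be too slow for corpora of the sizes mentioned in the excerpt.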

Natural Language Processing (NLP) for Requirements Engineering: a Systematic Mapping Study

Natural Language Processing (NLP) for Requirements Engineering: A Systematic Mapping Study Liping Zhao† Department of Computer Science, The University of Manchester, Manchester, United Kingdom Waad Alhoshan Department of Computer Science, The University of Manchester, Manchester, United Kingdom Alessio Ferrari Consiglio Nazionale delle Ricerche, Istituto di Scienza e Tecnologie dell'Informazione "A. Faedo" (CNR-ISTI), Pisa, Italy Keletso J. Letsholo Faculty of Computer Information Science, Higher Colleges of Technology, Abu Dhabi, United Arab Emirates Muideen A. Ajagbe Department of Computer Science, The University of Manchester, Manchester, United Kingdom Erol-Valeriu Chioasca Exgence Ltd., CORE, Manchester, United Kingdom Riza T. Batista-Navarro Department of Computer Science, The University of Manchester, Manchester, United Kingdom † Corresponding author. Context: NLP4RE – Natural language processing (NLP) supported requirements engineering (RE) – is an area of research and development that seeks to apply NLP techniques, tools and resources to a variety of requirements documents or artifacts to support a range of linguistic analysis tasks performed at various RE phases. Such tasks include detecting language issues, identifying key domain concepts and establishing traceability links between requirements. Objective: This article surveys the landscape of NLP4RE research to understand the state of the art and identify open problems. Method: The systematic mapping study approach is used to conduct this survey, which identified 404 relevant primary studies and reviewed them according to five research questions, cutting across five aspects of NLP4RE research, concerning the state of the literature, the state of empirical research, the research focus, the state of the practice, and the NLP technologies used.

Detecting Politeness in Natural Language by Michael Yeomans, Alejandro Kantor, Dustin Tingley

CONTRIBUTED RESEARCH ARTICLES The politeness Package: Detecting Politeness in Natural Language by Michael Yeomans, Alejandro Kantor, Dustin Tingley Abstract This package provides tools to extract politeness markers in English natural language. It also allows researchers to easily visualize and quantify politeness between groups of documents. This package combines and extends prior research on the linguistic markers of politeness (Brown and Levinson, 1987; Danescu-Niculescu-Mizil et al., 2013; Voigt et al., 2017). We demonstrate two applications for detecting politeness in natural language during consequential social interactions: distributive negotiations and speed dating. Introduction Politeness is a universal dimension of human communication (Goffman, 1967; Lakoff, 1973; Brown and Levinson, 1987). In practically all settings, a speaker can choose to be more or less polite to their audience, and this can have consequences for the speaker’s social goals. Politeness is encoded in a discrete and rich set of linguistic markers that modify the information content of an utterance. Sometimes politeness is an act of commission (for example, saying “please” and “thank you”) and sometimes it is an act of omission (for example, declining to be contradictory). Furthermore, the mapping of politeness markers often varies by culture, by context (work vs. family vs. friends), by a speaker’s characteristics (male vs. female), or the goals (buyer vs. seller). Many branches of social science might be interested in how (and when) people express politeness to one another, as one mechanism by which social co-ordination is achieved. The politeness package is designed to make it easier to detect politeness in English natural language, by quantifying relevant characteristics of polite speech, and comparing them to other data about the speaker.

The Art of Lexicography - Niladri Sekhar Dash

LINGUISTICS - The Art of Lexicography - Niladri Sekhar Dash THE ART OF LEXICOGRAPHY Niladri Sekhar Dash Linguistic Research Unit, Indian Statistical Institute, Kolkata, India Keywords: Lexicology, linguistics, grammar, encyclopedia, normative, reference, history, etymology, learner’s dictionary, electronic dictionary, planning, data collection, lexical extraction, lexical item, lexical selection, typology, headword, spelling, pronunciation, etymology, morphology, meaning, illustration, example, citation Contents 1. Introduction 2. Definition 3. The History of Lexicography 4. Lexicography and Allied Fields 4.1. Lexicology and Lexicography 4.2. Linguistics and Lexicography 4.3. Grammar and Lexicography 4.4. Encyclopedia and lexicography 5. Typological Classification of Dictionary 5.1. General Dictionary 5.2. Normative Dictionary 5.3. Referential or Descriptive Dictionary 5.4. Historical Dictionary 5.5. Etymological Dictionary 5.6. Dictionary of Loanwords 5.7. Encyclopedic Dictionary 5.8. Learner's Dictionary 5.9. Monolingual Dictionary 5.10. Special Dictionaries 6. Electronic Dictionary 7. Tasks for Dictionary Making 7.1. Planning 7.2. Data Collection 7.3. Extraction of lexical items 7.4. Selection of Lexical Items 7.5. Mode of Lexical Selection 8. Dictionary Making: General Dictionary 8.1. Headwords 8.2. Spelling 8.3. Pronunciation 8.4. Etymology 8.5. Morphology and Grammar 8.6. Meaning 8.7. Illustrative Examples and Citations 9. Conclusion Acknowledgements Glossary Bibliography Biographical Sketch © Encyclopedia of Life Support Systems (EOLSS) Summary The art of dictionary making is as old as the field of linguistics. People started to cultivate this field from the very early age of our civilization, probably seven to eight hundred years before the Christian era.

The Importance of Morphology, Etymology, and Phonology

3/16/19 Scientific Word Investigations: The importance of Morphology, Etymology, and Phonology Jennifer Petrich, PhD OUTLINE Introduction • Goals • Spelling exercise • Clarify some definitions • Intro to/review of the brain and learning • What is Dyslexia? • Reading Development and Literacy Instruction • Important facts about spelling GOALS Answer the following: • What is OG? What is SWI? • What is the difference between phonics and phonology? • What does linguistics tell us about written language? • What is reading and how are we teaching it? • Why should we use the scientific method to investigate written language? OUTLINE • Language History and Evolution • Scientific Investigation of the writing system • Important terms • What SWI is and is not • Scientific inquiry and its tools • Goal is understanding the writing system Defining Our Terms • Linguistics → lingu + ist + ic + s • the study of languages • Phonology → phone/ + o + log(e) + y (phoneme) • the study of the psychology of spoken language • Phonetics → phone/ + et(e) + ic + s (phone) • the study of the physiology of spoken language • Morphology → morph + o + log(e) + y (morpheme) • the study of the form/structure of words Defining Our Terms • Phonics → phone/ + ic + s • literacy instruction based on small part of speech research and psychological research • Phonemic Awareness • awareness of phonemes?? • Orthography → orth + o + graph + y • correct spelling • Orthographic phonology • the study of the connection between graphemes and phonemes Defining Our Terms The Beautiful Brain • Phoneme →

Natural Language Processing

Natural Language Processing Liz Liddy (lead), Eduard Hovy, Jimmy Lin, John Prager, Dragomir Radev, Lucy Vanderwende, Ralph Weischedel This report is one of five reports that were based on the MINDS workshops, led by Donna Harman (NIST) and sponsored by Heather McCallum-Bayliss of the Disruptive Technology Office of the Office of the Director of National Intelligence's Office of Science and Technology (ODNI/ADDNI/S&T/DTO). To find the rest of the reports, and an executive overview, please see http://www.itl.nist.gov/iaui/894.02/minds.html. Historic Paradigm Shifts or Shift-Enablers A series of discoveries and developments over the past years has resulted in major shifts in the discipline of Natural Language Processing. Some have been more influential than others, but each is recognizable today as having had major impact, although this might not have been seen at the time. Some have been shift-enablers, rather than actual shifts in methods or approaches, but these have caused as major a change in how the discipline accomplishes its work. Very early on in the emergence of the field of NLP, there were demonstrations that NLP could develop operational systems with real levels of linguistic processing, in truly end-to-end (although toy) systems. These included SHRDLU by Winograd (1971) and LUNAR by Woods (1970). Each could accomplish a specific task — manipulating blocks in the blocks world or answering questions about samples from the moon. They were able to accomplish their limited goals because they included all the levels of language processing in their interactions with humans, including morphological, lexical, syntactic, semantic, discourse and pragmatic.