Natural Language Processing and Language Learning


Recommended publications

MSU Working Papers in Second Language Studies Volume 7

MSU Working Papers in Second Language Studies, Volume 7, Number 1, 2015. MSU Working Papers in SLS 2016, Vol. 7. Isbell, Rawal, & Tigchelaar: Editors’ Message.

Editors’ Message: Seventh Volume of the MSU Working Papers in Second Language Studies

The Editorial Team is pleased to introduce the 7th volume of the MSU Working Papers in Second Language Studies. The Working Papers is an open-access, peer-reviewed outlet for disseminating knowledge in the field of second language (L2) research. The Working Papers additionally has a two-layered formative aim. First, we welcome research that is “rough around the edges” and provide constructive feedback in the peer review process to aid researchers in clearly and appropriately reporting their research efforts. Similarly, for scholars working through ideas in literature reviews or research proposals, the peer review process facilitates critical yet constructive exchanges leading to more refined and focused presentation of ideas. Second, we extend an opportunity to in-training or early-career scholars to lend their expertise and serve as reviewers, thereby gaining practical experience on the “other side” of academic publishing and rendering service to the field. Of course, we also value the interviews with prominent L2 researchers and book/textbook reviews we receive, which provide a useful resource for L2 scholars and teachers. We must acknowledge (and in fact are quite glad to) that without the hard work of authors and reviewers, the Working Papers would not be possible. This volume of the Working Papers features two empirical research articles, a research proposal, a literature review, and two reviews. Before introducing these articles in detail, however, we wish to reflect on the history of the Working Papers by answering a simple question: What happens once an article is published in the Working Papers?

Life after Publication

As an open-access journal, the Working Papers lives on the internet, freely accessible by just about anyone.

Sentential Switching on Teaching Grammar

Cumhuriyet University Faculty of Science, Science Journal (CSJ), Vol. 36, No: 3 Special Issue (2015), ISSN: 1300-1949

The Effect of Intra-sentential, Inter-sentential and Tag-sentential Switching on Teaching Grammar

Atefeh ABDOLLAHI1,*, Ramin RAHMANY2, & Ataollah MALEKI3

2 Ph.D. of TEFL, Department of Humanities, Faculty of Teaching English and Translation, Takestan Branch, Islamic Azad University, Takestan, Iran.

Received: 01.02.2015; Accepted: 05.05.2015

Abstract. The present study examined the comparative effect of different types of code-switching, i.e., intra-sentential, inter-sentential, and tag-sentential switching, on EFL learners’ grammar learning and teaching. To this end, a sample of 60 Iranian female and male students in two different institutions in Qazvin was selected. They were assigned to four groups. Each group was randomly assigned to one of the aforementioned treatment conditions. After the experimental period, a post-test was administered to the students to examine the effect of different types of code-switching on students’ learning of grammar. The results showed that the differences among the effects of the above-mentioned techniques were statistically significant. These findings can have implications for EFL learners, EFL teachers, and materials developers.

Keywords: Code, Code switching, Inter-sentential switching, Tag-switching, Inter-sentential switching

1. BACKGROUND AND PURPOSE

Code switching, i.e., the use of more than one language in speech, has been said to follow regular grammatical and stylistic patterns (Woolford, 1983). Patterns of code switching have been described in terms of grammatical constraints, and various theoretical models have been suggested to account for such constraints.

Enhanced Input in LCTL Pedagogy

Enhanced Input in LCTL Pedagogy. Marilyn S. Manley, Rowan University

Abstract

Language materials for the more-commonly-taught languages (MCTLs) often include visual input enhancement (Sharwood Smith 1991, 1993) which makes use of typographical cues like bolding and underlining to enhance the saliency of targeted forms. For a variety of reasons, this paper argues that the use of enhanced input, both visual and oral, is especially important as a tool for the less-commonly-taught languages (LCTLs). As there continues to be a scarcity of teaching resources for the LCTLs, individual teachers must take it upon themselves to incorporate enhanced input into their own self-made materials. Specific examples of how to incorporate both visual and oral enhanced input into language teaching are drawn from the author’s own experiences teaching Cuzco Quechua. Additionally, survey results are presented from the author’s Fall 2010 semester Cuzco Quechua language students, supporting the use of both visual and oral enhanced input.

Introduction

Sharwood Smith’s input enhancement hypothesis (1991, 1993) responds to why it is that L2 learners often seem to ignore target language norms present in the linguistic input they have received, resulting in non-target-like output. According to Sharwood Smith (1991, 1993), these learners may not be noticing, and therefore not consequently learning, particular target language forms due to the fact that they lack perceptual salience in the linguistic input. Therefore, in order to stimulate the intake of form as well as meaning, Sharwood Smith (1991, 1993) proposes improving the quality of language input through input enhancement, involving increasing the saliency of linguistic features for both visual input (ex.

What the Neurocognitive Study of Inner Language Reveals About Our Inner Space Hélène Loevenbruck

What the neurocognitive study of inner language reveals about our inner space. Hélène Loevenbruck

To cite this version: Hélène Loevenbruck. What the neurocognitive study of inner language reveals about our inner space. Epistémocritique, épistémocritique : littérature et savoirs, 2018, Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, 18. hal-02039667

HAL Id: hal-02039667 https://hal.archives-ouvertes.fr/hal-02039667 Submitted on 20 Sep 2019

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Preliminary version produced by the author. In Lœvenbruck H. (2008). Épistémocritique, n° 18 : Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, Stéphanie Smadja, Pierre-Louis Patoine (eds.) [http://epistemocritique.org/what-the-neurocognitive-study-of-inner-language-reveals-about-our-inner-space/] - hal-02039667

Hélène Lœvenbruck, Université Grenoble Alpes, CNRS, Laboratoire de Psychologie et NeuroCognition (LPNC), UMR 5105, 38000, Grenoble France

Abstract

Our inner space is furnished, and sometimes even stuffed, with verbal material. The nature of inner language has long been under the careful scrutiny of scholars, philosophers and writers, through the practice of introspection. The use of recent experimental methods in the field of cognitive neuroscience provides a new window of insight into the format, properties, qualities and mechanisms of inner language.

CNS 2014 Program

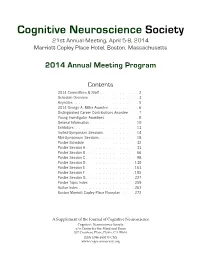

Cognitive Neuroscience Society 21st Annual Meeting, April 5-8, 2014, Marriott Copley Place Hotel, Boston, Massachusetts. 2014 Annual Meeting Program

Contents: 2014 Committees & Staff; Schedule Overview; Keynotes; 2014 George A. Miller Awardee; Distinguished Career Contributions Awardee; Young Investigator Awardees; General Information; Exhibitors; Invited-Symposium Sessions; Mini-Symposium Sessions; Poster Schedule; Poster Session A; Poster Session B; Poster Session C; Poster Session D; Poster Session E; Poster Session F; Poster Session G; Poster Topic Index; Author Index; Boston Marriott Copley Place Floorplan.

A Supplement of the Journal of Cognitive Neuroscience. Cognitive Neuroscience Society, c/o Center for the Mind and Brain, 267 Cousteau Place, Davis, CA 95616. ISSN 1096-8857 © CNS www.cogneurosociety.org

2014 Committees & Staff

Mini-Symposium Committee: David Badre, Ph.D., Brown University (Chair); Adam Aron, Ph.D., University of California, San Diego; Lila Davachi, Ph.D., New York University; Elizabeth Kensinger, Ph.D., Boston College; Gina Kuperberg, Ph.D., Harvard University; Thad Polk, Ph.D., University of Michigan

Governing Board: Roberto Cabeza, Ph.D., Duke University; Marta Kutas, Ph.D., University of California, San Diego; Helen Neville, Ph.D., University of Oregon; Daniel Schacter, Ph.D., Harvard University; Michael S. Gazzaniga, Ph.D., University of California, Santa Barbara (ex officio); George R. Mangun, Ph.D., University of California,

The Relative Effects of Enhanced and Non-Enhanced Structured Input on L2 Acquisition of Spanish Past Tense

THE RELATIVE EFFECTS OF ENHANCED AND NON-ENHANCED STRUCTURED INPUT ON L2 ACQUISITION OF SPANISH PAST TENSE by Silvia M. Peart, B.A., M.A., M.A.

A Dissertation in SPANISH Submitted to the Graduate Faculty of Texas Tech University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Spanish. Approved: Andrew P. Farley, Idoia Elola, Greta Gorsuch, Janet Perez, Susan Stein. Fred Hartmeister, Dean of the Graduate School. May, 2008. Copyright 2008, Silvia M. Peart

ACKNOWLEDGMENTS

I would like to thank first my mentor, advisor and friend, Dr. Andrew Farley. His guidance helped me learn not only the main topics of second language acquisition, but also led me to develop as a professional. It has been a pleasure to work with him these last three years. I thank him for his support this last year, his mentoring and patience. I also want to express my gratitude to Dr. Idoia Elola, whose support has also been a key to my success as a doctoral student. Her encouragement and assistance during this last year have been the fuel that kept me in motion. I would like to thank Dr. Susan Stein and Dr. Beard for their friendship and their encouraging support in all my projects as a student. Finally, I would like to thank my committee for their invaluable feedback. I am also grateful to the friends I made during my career at Texas Tech University. It was also their support and encouragement that kept me on track all these years.

Talking to Computers in Natural Language

Talking to Computers in Natural Language

Natural language understanding is as old as computing itself, but recent advances in machine learning and the rising demand of natural-language interfaces make it a promising time to once again tackle the long-standing challenge. By Percy Liang. DOI: 10.1145/2659831

As you read this sentence, the words on the page are somehow absorbed into your brain and transformed into concepts, which then enter into a rich network of previously-acquired concepts. This process of language understanding has so far been the sole privilege of humans. But the universality of computation, as formalized by Alan Turing in the early 1930s (which states that any computation could be done on a Turing machine), offers a tantalizing possibility that a computer could understand language as well. Later, Turing went on in his seminal 1950 article, “Computing Machinery and Intelligence,” to propose the now-famous Turing test, a bold and speculative method to evaluate whether a computer actually understands language (or more broadly, is “intelligent”). While this test has led to the development of amusing chatbots that attempt to fool human judges by engaging in light-hearted banter, the grand challenge of developing serious programs that can truly understand language in useful ways remains wide [...]

[...] artificial intelligence at the time. Daniel Bobrow built a system for his Ph.D. thesis at MIT to solve algebra word problems found in high-school algebra books, for example: “If the number of customers Tom gets is twice the square of 20% of the number of advertisements he runs, and the number of advertisements is 45, then what is the number of customers Tom gets?” [1].

[...] Figure 1). SHRDLU could both answer questions and execute actions, for example: “Find a block that is taller than the one you are holding and put it into the box.” In this case, SHRDLU would first have to understand that the blue block is the referent and then perform the action by moving the small green block out of the way and then lifting the [...]
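To make the target of such a system concrete, the algebra word problem quoted in this excerpt can be worked by hand and checked with a few lines of Python. This is only a sketch of the arithmetic a correct reading of the sentence leads to; it is not Bobrow's program, and the function name `customers_from_ads` is a hypothetical one chosen for illustration.

```python
from fractions import Fraction


def customers_from_ads(advertisements: int) -> Fraction:
    """Hand translation of the word problem (hypothetical helper, not Bobrow's system).

    "The number of customers is twice the square of 20% of the
    number of advertisements."
    """
    # 20% of the number of advertisements, kept exact with Fraction
    share = Fraction(20, 100) * advertisements
    # twice the square of that share
    return 2 * share ** 2


# With 45 advertisements: 20% of 45 is 9, 9 squared is 81, twice 81 is 162.
print(customers_from_ads(45))  # prints 162
```

The hard part for a language-understanding system is producing this equation from the English sentence; once the equation exists, evaluating it is trivial.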

Revealing the Language of Thought Brent Silby 1

Revealing the Language of Thought. An e-book by BRENT SILBY. This paper was produced at the Department of Philosophy, University of Canterbury, New Zealand. Copyright © Brent Silby 2000

Contents

Abstract
Chapter 1: Introduction
Chapter 2: Thinking Sentences. 1. Preliminary Thoughts 2. The Language of Thought Hypothesis 3. The Map Alternative 4. Problems with Mentalese
Chapter 3: Installing New Technology: Natural Language and the Mind. 1. Introduction 2. Language... what's it for? 3. Natural Language as the Language of Thought 4. What can we make of the evidence?
Chapter 4: The Last Stand... Don't Replace The Old Code Yet. 1. The Fight for Mentalese 2. Pinker's Resistance 3. Pinker's Continued Resistance 4. A Concluding Thought about Thought
Chapter 5: A Direction for Future Thought. 1. The Review 2. The Conclusion 3. Expanding the mind beyond the confines of the biological brain
References / Acknowledgments

Abstract

Language of thought theories fall primarily into two views. The first view sees the language of thought as an innate language known as mentalese, which is hypothesized to operate at a level below conscious awareness while at the same time operating at a higher level than the neural events in the brain. The second view supposes that the language of thought is not innate. Rather, the language of thought is natural language. So, as an English speaker, my language of thought would be English. My goal is to defend the second view.

Identifying Linguistic Cues That Distinguish Text Types: a Comparison of First and Second Language Speakers

Identifying Linguistic Cues that Distinguish Text Types: A Comparison of First and Second Language Speakers. Scott A. Crossley, Max M. Louwerse and Danielle S. McNamara (Mississippi State University and University of Memphis)

Crossley, Scott A, Max M. Louwerse and Danielle S. McNamara. (2008). Identifying Linguistic Cues that Distinguish Text Types: A Comparison of First and Second Language Speakers. Language Research 44.2, 361-381.

The authors examine the degree to which first (L1) and second language (L2) speakers of English are able to distinguish between simplified or authentic reading texts from L2 instructional books and whether L1 and L2 speakers differ in their ability to process linguistic cues related to this distinction. These human judgments are also compared to computational judgments which are based on indices inspired by cognitive theories of reading processing. Results demonstrate that both L1 and L2 speakers of English are able to identify linguistic cues within both text types, but only L1 speakers are able to successfully distinguish between simplified and authentic texts. In addition, the performance of a computational tool was comparable to that of human performance. These findings have important implications for second language text processing and readability as well as implications for material development for second language instruction.

Keywords: second language reading, text readability, materials development, text processing, psycholinguistics, corpus linguistics, computational linguistics

1. Introduction

Recent research has demonstrated that text readability for second language (L2) learners is better measured using cognitively inspired indices based on theories of text comprehension as compared to readability formulas that depend on surface level linguistic features (Crossley, Greenfield & McNamara 2008).

Natural Language Processing (NLP) for Requirements Engineering: a Systematic Mapping Study

Natural Language Processing (NLP) for Requirements Engineering: A Systematic Mapping Study

Liping Zhao†, Department of Computer Science, The University of Manchester, Manchester, United Kingdom; Waad Alhoshan, Department of Computer Science, The University of Manchester, Manchester, United Kingdom; Alessio Ferrari, Consiglio Nazionale delle Ricerche, Istituto di Scienza e Tecnologie dell'Informazione "A. Faedo" (CNR-ISTI), Pisa, Italy; Keletso J. Letsholo, Faculty of Computer Information Science, Higher Colleges of Technology, Abu Dhabi, United Arab Emirates; Muideen A. Ajagbe, Department of Computer Science, The University of Manchester, Manchester, United Kingdom; Erol-Valeriu Chioasca, Exgence Ltd., CORE, Manchester, United Kingdom; Riza T. Batista-Navarro, Department of Computer Science, The University of Manchester, Manchester, United Kingdom. † Corresponding author.

Context: NLP4RE, natural language processing (NLP) supported requirements engineering (RE), is an area of research and development that seeks to apply NLP techniques, tools and resources to a variety of requirements documents or artifacts to support a range of linguistic analysis tasks performed at various RE phases. Such tasks include detecting language issues, identifying key domain concepts and establishing traceability links between requirements.

Objective: This article surveys the landscape of NLP4RE research to understand the state of the art and identify open problems.

Method: The systematic mapping study approach is used to conduct this survey, which identified 404 relevant primary studies and reviewed them according to five research questions, cutting across five aspects of NLP4RE research, concerning the state of the literature, the state of empirical research, the research focus, the state of the practice, and the NLP technologies used.

The Effect of Input Enhancement, Individual Output, and Collaborative Output on Foreign Language Learning: the Case of English Inversion Structures

RESLA 26 (2013), 267-288

THE EFFECT OF INPUT ENHANCEMENT, INDIVIDUAL OUTPUT, AND COLLABORATIVE OUTPUT ON FOREIGN LANGUAGE LEARNING: THE CASE OF ENGLISH INVERSION STRUCTURES

SHADAB JABBARPOOR*, Islamic Azad University, Garmrar Branch (Iran); ZIA TAJEDDIN, Allameh Tabataba’i University (Iran)

ABSTRACT. The present study compares the short-term and long-term effects of three focus-on-form tasks on the acquisition of English inversion structures by EFL learners. It also quantitatively investigates learners’ trend of development during the process of acquisition. Ninety freshmen from a B.A. program in TEFL were randomly divided into the three task groups. The tasks included textual enhancement in Group 1 and dictogloss in Groups 2 and 3, where texts were reconstructed individually and collaboratively, respectively. Alongside a pretest and a posttest, production tests were administered to assess the trend of development in each group. Results revealed that the impacts of input and collaborative output tasks were greater than that of the individual output task. Moreover, the findings documented that the trend of development in the output group was not a linear additive process, but rather a U-shaped one with backsliding. This study supports previous studies that have combined enhancement with instructional assistance.

KEY WORDS. Collaborative output, individual output, input enhancement, dictogloss, inversion structures.

RESUMEN (translated from Spanish). This study compares the short-term and long-term effects of three focus-on-form tasks on the acquisition of inversion structures by learners of English as a foreign language. It also presents a quantitative investigation of the patterns of development during the acquisition process.

Detecting Politeness in Natural Language by Michael Yeomans, Alejandro Kantor, Dustin Tingley

The politeness Package: Detecting Politeness in Natural Language by Michael Yeomans, Alejandro Kantor, Dustin Tingley

Abstract

This package provides tools to extract politeness markers in English natural language. It also allows researchers to easily visualize and quantify politeness between groups of documents. This package combines and extends prior research on the linguistic markers of politeness (Brown and Levinson, 1987; Danescu-Niculescu-Mizil et al., 2013; Voigt et al., 2017). We demonstrate two applications for detecting politeness in natural language during consequential social interactions: distributive negotiations and speed dating.

Introduction

Politeness is a universal dimension of human communication (Goffman, 1967; Lakoff, 1973; Brown and Levinson, 1987). In practically all settings, a speaker can choose to be more or less polite to their audience, and this can have consequences for the speaker’s social goals. Politeness is encoded in a discrete and rich set of linguistic markers that modify the information content of an utterance. Sometimes politeness is an act of commission (for example, saying “please” and “thank you”) and sometimes it is an act of omission (for example, declining to be contradictory). Furthermore, the mapping of politeness markers often varies by culture, by context (work vs. family vs. friends), by a speaker’s characteristics (male vs. female), or by the speaker’s goals (buyer vs. seller). Many branches of social science might be interested in how (and when) people express politeness to one another, as one mechanism by which social co-ordination is achieved. The politeness package is designed to make it easier to detect politeness in English natural language, by quantifying relevant characteristics of polite speech, and comparing them to other data about the speaker.