NAOMS Reference Report

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Airline Competition Plan Final Report

Final Report, Airline Competition Plan, Philadelphia International Airport. Prepared for Federal Aviation Administration in compliance with requirements of AIR21. Prepared by City of Philadelphia Division of Aviation, Philadelphia, Pennsylvania, August 31, 2000. Summary: Airline Competition Plan, Philadelphia International Airport. The City of Philadelphia, owner and operator of Philadelphia International Airport, is required to submit annually to the Federal Aviation Administration an airline competition plan. The City’s plan for 2000, as documented in the accompanying report, provides information regarding the availability of passenger terminal facilities, the use of passenger facility charge (PFC) revenues to fund terminal facilities, airline leasing arrangements, patterns of airline service, and average airfares for passengers originating their journeys at the Airport. The plan also sets forth the City’s current and planned initiatives to encourage competitive airline service at the Airport, construct terminal facilities needed to accommodate additional airline service, and ensure that access is provided to airlines wishing to serve the Airport on fair, reasonable, and nondiscriminatory terms. These initiatives are summarized in the following paragraphs. Encourage New Airline Service. Airlines that have recently started scheduled domestic service at Philadelphia International Airport include AirTran Airways, America West Airlines, American Trans Air, Midway Airlines, Midwest Express Airlines, and National Airlines. Airlines that have recently started scheduled international service at the Airport include Air France and Lufthansa. The City intends to continue its programs to encourage airlines to begin or increase service at the Airport.

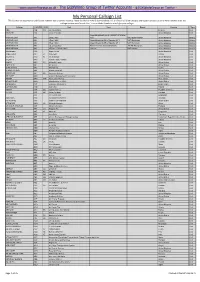

My Personal Callsign List This List Was Not Designed for Publication However Due to Several Requests I Have Decided to Make It Downloadable

www.egxwinfogroup.co.uk - The EGXWinfo Group of Twitter Accounts - @EGXWinfoGroup on Twitter - My Personal Callsign List. This list was not designed for publication; however, due to several requests, I have decided to make it downloadable. It is a mixture of listed callsigns and logged callsigns, so some have numbers after the callsign as they were heard. Use Ctrl+F in Adobe Reader to search for your callsign. Callsign ICAO/PRI IATA Unit Type Based Country Type ABG AAB W9 Abelag Aviation Belgium Civil ARMYAIR AAC Army Air Corps United Kingdom Civil AgustaWestland Lynx AH.9A/AW159 Wildcat ARMYAIR 200# AAC 2Regt | AAC AH.1 AAC Middle Wallop United Kingdom Military ARMYAIR 300# AAC 3Regt | AAC AgustaWestland AH-64 Apache AH.1 RAF Wattisham United Kingdom Military ARMYAIR 400# AAC 4Regt | AAC AgustaWestland AH-64 Apache AH.1 RAF Wattisham United Kingdom Military ARMYAIR 500# AAC 5Regt AAC/RAF Britten-Norman Islander/Defender JHCFS Aldergrove United Kingdom Military ARMYAIR 600# AAC 657Sqn | JSFAW | AAC Various RAF Odiham United Kingdom Military Ambassador AAD Mann Air Ltd United Kingdom Civil AIGLE AZUR AAF ZI Aigle Azur France Civil ATLANTIC AAG KI Air Atlantique United Kingdom Civil ATLANTIC AAG Atlantic Flight Training United Kingdom Civil ALOHA AAH KH Aloha Air Cargo United States Civil BOREALIS AAI Air Aurora United States Civil ALFA SUDAN AAJ Alfa Airlines Sudan Civil ALASKA ISLAND AAK Alaska Island Air United States Civil AMERICAN AAL AA American Airlines United States Civil AM CORP AAM Aviation Management Corporation United States Civil

Airline Schedules

Airline Schedules. This finding aid was produced using ArchivesSpace on January 08, 2019. English (eng). Describing Archives: A Content Standard. Special Collections and Archives Division, History of Aviation Archives. 3020 Waterview Pkwy SP2 Suite 11.206 Richardson, Texas 75080 [email protected]. URL: https://www.utdallas.edu/library/special-collections-and-archives/ Airline Schedules Table of Contents: Summary Information; Scope and Content; Series Description; Administrative Information; Related Materials; Controlled Access Headings; Collection Inventory. Airline Schedules Summary Information Repository:

Air Passenger Origin and Destination, Canada-United States Report

Catalogue no. 51-205-XIE Air Passenger Origin and Destination, Canada-United States Report 2005 How to obtain more information Specific inquiries about this product and related statistics or services should be directed to: Aviation Statistics Centre, Transportation Division, Statistics Canada, Ottawa, Ontario, K1A 0T6 (Telephone: 1-613-951-0068; Internet: [email protected]). For information on the wide range of data available from Statistics Canada, you can contact us by calling one of our toll-free numbers. You can also contact us by e-mail or by visiting our website at www.statcan.ca. National inquiries line 1-800-263-1136 National telecommunications device for the hearing impaired 1-800-363-7629 Depository Services Program inquiries 1-800-700-1033 Fax line for Depository Services Program 1-800-889-9734 E-mail inquiries [email protected] Website www.statcan.ca Information to access the product This product, catalogue no. 51-205-XIE, is available for free in electronic format. To obtain a single issue, visit our website at www.statcan.ca and select Publications. Standards of service to the public Statistics Canada is committed to serving its clients in a prompt, reliable and courteous manner. To this end, the Agency has developed standards of service which its employees observe in serving its clients. To obtain a copy of these service standards, please contact Statistics Canada toll free at 1-800-263-1136. The service standards are also published on www.statcan.ca under About us > Providing services to Canadians. Statistics Canada Transportation Division Aviation Statistics Centre Air Passenger Origin and Destination, Canada-United States Report 2005 Published by authority of the Minister responsible for Statistics Canada © Minister of Industry, 2007 All rights reserved.

Overview and Trends

ENTRY AND COMPETITION IN THE U.S. AIRLINE INDUSTRY. 9310-01 Chapter 1 10/12/99 14:48 Page 15. 1 Overview and Trends. The Transportation Research Board (TRB) study committee that produced Winds of Change held its final meeting in the spring of 1991. The committee had reviewed the general experience of the U.S. airline industry during the more than a dozen years since legislation ended government economic regulation of entry, pricing, and ticket distribution in the domestic market.[1] The committee examined issues ranging from passenger fares and service in small communities to aviation safety and the federal government’s performance in accommodating the escalating demands on air traffic control. At the time, it was still being debated whether airline deregulation was favorable to consumers. Once viewed as contrary to the public interest,[2] the vigorous airline competition spurred by deregulation now is commonly credited with generating large and lasting public benefits. Footnotes: [1] The Airline Deregulation Act of 1978 was preceded by market-oriented administrative reforms adopted by the Civil Aeronautics Board (CAB) beginning in 1975. [2] Congress adopted the public utility form of regulation for the airline industry when it created CAB, partly out of concern that the small scale of the industry and number of willing entrants would lead to excessive competition and capacity, ultimately having negative effects on service and perhaps leading to monopolies and having adverse effects on consumers in the end (Levine 1965; Meyer et al. 1959).

Accident Reports (Incl

MS-012 Richard G. Snyder Papers. NTSB reports, safety recommendations, studies, etc.: accident reports (incl. international); other reports: Univ. of Michigan GA Investigations Studies. Drawer 57-60, Drawer 65-68.

| Drawer | File | Title | Dates |
| --- | --- | --- | --- |
| 57 (1 of 2) | 1 | NTSB 81-10 Northwest Airlines, DC-10-40, N143US, Leesburg, VA, 1/31/81 | 1981 |
| 57 (1 of 2) | 2 | NTSB 81-12, N468AC, Air California Boeing 737-293, Santa Ana, Cal, 2-17-81 | 1981 |
| 57 (1 of 2) | 3 | NTSB 81-16, DC-9-80, N1002G, Yuma, Arizona, 6-19-80 | 1981 |
| 57 (1 of 2) | 4 | NTSB-AAR-82-4 1982 Sky Train, Lear 24 Felt, OK, Oct. 1, 1981 | 1982 |
| 57 (1 of 2) | 5 | NTSB-AAR-82-6 Bell 206B/ Piper PA-34 Midair NJ, 9/23/81 | 1982 |
| 57 (1 of 2) | 6 | NTSB-AAR-82-7 1982 Pilgrim, DH6 Providence RI, Feb. 21, 82 | 1982 |
| 57 (1 of 2) | 7 | NTSB-AAR-82-8 1982, Jan 13, Air Flor.737, Wash, DC | 1982 |
| 57 (1 of 2) | 8 | NTSB-AAR-82-9 3/27/82, Lufkin Beech BE-200 Parker, CO | 1982 |
| 57 (1 of 2) | 9 | NTSB-AAR-82-10 1982 Midair col, F-111D Cessna 206 Clovis, NM, 2/6/80 | 1982 |
| 57 (1 of 2) | 10 | NTSB-AAR-82-11 1982, Jan.5, Empire Piper-31, Ithaca, NY | 1982 |
| 57 (1 of 2) | 11 | NTSB-84-02 Western Helicopters, Bell UH-1B, N87701, Valencia, Cal, 7/23/83 | 1984 |
| 57 (1 of 2) | 12 | NTSB-AAR-82-12 1/3/82 Ashland Cessna 414A Ashland VA | 1982 |
| 57 (1 of 2) | 13 | NTSB-82-14 Reeve Aleutian Airways, Nihon YS-11A N169RV, King Salmon, Alaska, 2/16/82 | 1982 |
| 57 (1 of 2) | 14 | NTSB-82-15 World Airways, DC-10-30CF, N113WA, Boston, MA, 1/23/82 | 1982 |
| 57 (1 of 2) | 15 | NTSB-82-16 Gifford Aviation, DHC-6, N103AQ, Hooper Bay, Alaska, 5/16/82 | 1982 |
| 57 (1 of 2) | 16 | NTSB-83-01 Ibex Corp, Learjet 23, N100TA, Atlantic Ocean, 5/6/82 | 1983 |
| 57 (1 of 2) | 17 | NTSB-83-02 Pan Am, Boeing, 727-235, N4737, Kenner, Louisiana, 7/9/82 | 1983 |
| 57 (1 of 2) | 18 | NTSB AAR-83/04 1983, Cessna Citation II N2CA, Mt. View, MO, 11/18/82 | 1983 |
| 57 (1 of 2) | 19 | NTSB-83-05 A.G. | |

Bankruptcy Tilts Playing Field Frank Boroch, CFA 212 272-6335 [email protected]

Equity Research. Airlines / Rated: Market Underweight. September 15, 2005. Research Analyst(s): David Strine 212 272-7869 [email protected]; Frank Boroch, CFA 212 272-6335 [email protected]. Bankruptcy tilts playing field. Key Points *** TWIN BANKRUPTCY FILINGS TILT PLAYING FIELD. NWAC and DAL filed for Chapter 11 protection yesterday, becoming the 20th and 21st airlines to do so since 2000. Now with 47% of industry capacity in bankruptcy, the playing field looks set to become even more lopsided, pressuring non-bankrupt legacies to lower costs further and low cost carriers to reassess their shrinking CASM advantage. *** CAPACITY PULLBACK. Over the past 20 years, bankrupt carriers decreased capacity by 5-10% on average in the year following their filing. If we assume DAL and NWAC shrink by 7.5% (the midpoint) in '06, our domestic industry ASM forecast goes from +2% y/y to flat, which could potentially be favorable for airline pricing (yields). *** NWAC AND DAL INTIMATE CAPACITY RESTRAINT. After their filing yesterday, NWAC's CEO indicated 4Q:05 capacity could decline 5-6% y/y, while Delta announced plans to accelerate its fleet simplification plan, removing four aircraft types by the end of 2006. *** BIGGEST BENEFICIARIES LIKELY TO BE LOW COST CARRIERS. NWAC and DAL account for roughly 26% of domestic capacity, which, if trimmed by 7.5%, equates to a 2 percentage point reduction in industry capacity. We believe LCC-heavy routes are likely to see a disproportionate benefit from potential reductions at DAL and NWAC, with AAI, AWA, and JBLU in particular having an easier path for growth.
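
The capacity arithmetic in the excerpt above can be checked with a short sketch. It uses only the figures quoted in the note (a roughly 26% combined share, a 7.5% assumed cut, and a +2% baseline forecast); the variable names are illustrative and are not taken from the report.

```python
# Figures quoted in the research note; variable names are illustrative only.
combined_share = 0.26   # NWAC + DAL share of domestic capacity ("roughly 26%")
assumed_cut = 0.075     # midpoint of the 5-10% post-filing capacity reduction

# Industry-wide capacity reduction, in percentage points.
industry_reduction_pts = combined_share * assumed_cut * 100

# The note's prior domestic ASM forecast was +2% year over year.
baseline_growth_pts = 2.0
revised_growth_pts = baseline_growth_pts - industry_reduction_pts

print(f"industry reduction: {industry_reduction_pts:.2f} pts")
print(f"revised ASM growth: {revised_growth_pts:.2f} pts")
```

The product comes to about 1.95 points, which the note rounds to 2, and subtracting it from the +2% baseline leaves growth close to zero, consistent with the "flat" forecast.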

363 Part 238—Contracts With

Immigration and Naturalization Service, Justice § 238.3. (2) The country where the alien was born; (3) The country where the alien has a residence; or (4) Any country willing to accept the alien. (c) Contiguous territory and adjacent islands. Any alien ordered excluded who boarded an aircraft or vessel in foreign contiguous territory or in any adjacent island shall be deported to such foreign contiguous territory or adjacent island if the alien is a native, citizen, subject, or national of such foreign contiguous territory or adjacent island, or if the alien has a residence in such foreign contiguous territory or adjacent island. Otherwise, the alien shall be deported, in the first instance, to the country in which is located the port at which the alien embarked for such foreign contiguous territory or adjacent island.

mented on Form I-420. The contracts with transportation lines referred to in section 238(c) of the Act shall be made by the Commissioner on behalf of the government and shall be documented on Form I-426. The contracts with transportation lines desiring their passengers to be preinspected at places outside the United States shall be made by the Commissioner on behalf of the government and shall be documented on Form I-425; except that contracts for irregularly operated charter flights may be entered into by the Executive Associate Commissioner for Operations or an Immigration Officer designated by the Executive Associate Commissioner for Operations and having jurisdiction over the location where the inspection will take place. [57 FR 59907, Dec. 17, 1992]

Uncontrolled Descent and Collision with Terrain, United Airlines 585

PB2001-910401 NTSB/AAR-01/01 DCA91MA023 NATIONAL TRANSPORTATION SAFETY BOARD WASHINGTON, D.C. 20594 AIRCRAFT ACCIDENT REPORT Uncontrolled Descent and Collision With Terrain, United Airlines Flight 585, Boeing 737-200, N999UA, 4 Miles South of Colorado Springs Municipal Airport, Colorado Springs, Colorado, March 3, 1991. Notation 5498C. National Transportation Safety Board, 490 L’Enfant Plaza, S.W., Washington, D.C. 20594. Adopted March 27, 2001. National Transportation Safety Board. 2001. Uncontrolled Descent and Collision With Terrain, United Airlines Flight 585, Boeing 737-200, N999UA, 4 Miles South of Colorado Springs Municipal Airport, Colorado Springs, Colorado, March 3, 1991. Aircraft Accident Report NTSB/AAR-01/01. Washington, DC. Abstract: This amended report explains the accident involving United Airlines flight 585, a Boeing 737-200, which entered an uncontrolled descent and impacted terrain 4 miles south of Colorado Springs Municipal Airport, Colorado Springs, Colorado, on March 3, 1991. Safety issues discussed in the report are the potential meteorological hazards to airplanes in the area of Colorado Springs; 737 rudder malfunctions, including rudder reversals; and the design of the main rudder power control unit servo valve. The National Transportation Safety Board is an independent Federal agency dedicated to promoting aviation, railroad, highway, marine, pipeline, and hazardous materials safety.

Community Relations Plan

Miami International Airport Community Relations Plan. Contents: Preface; Overview of the CRP; NCP Background (National Contingency Plan; Government Oversight); Site Description and History (Site Description; Site History); Goals of the CRP; Community Relations Activities; Appendix A – Site Map; Appendix B – Contact List (Federal Officials

Change 3, FAA Order 7340.2A Contractions

U.S. DEPARTMENT OF TRANSPORTATION, FEDERAL AVIATION ADMINISTRATION. CHANGE 7340.2A CHG 3. SUBJ: CONTRACTIONS. 1. PURPOSE. This change transmits revised pages to Order JO 7340.2A, Contractions. 2. DISTRIBUTION. This change is distributed to select offices in Washington and regional headquarters, the William J. Hughes Technical Center, and the Mike Monroney Aeronautical Center; to all air traffic field offices and field facilities; to all airway facilities field offices; to all international aviation field offices, airport district offices, and flight standards district offices; and to the interested aviation public. 3. EFFECTIVE DATE. July 29, 2010. 4. EXPLANATION OF CHANGES. Changes, additions, and modifications (CAM) are listed in the CAM section of this change. Changes within sections are indicated by a vertical bar. 5. DISPOSITION OF TRANSMITTAL. Retain this transmittal until superseded by a new basic order. 6. PAGE CONTROL CHART. See the page control chart attachment. Nancy B. Kalinowski, Vice President, System Operations Services, Air Traffic Organization. Date: (illegible). Distribution: ZAT-734, ZAT-464. Initiated by: AJR-0 Vice President, System Operations Services. 7/29/10 JO 7340.2A CHG 3 PAGE CONTROL CHART

| REMOVE PAGES | DATED | INSERT PAGES | DATED |
| --- | --- | --- | --- |
| CAM−1−1 through CAM−1−2 | 4/8/10 | CAM−1−1 through CAM−1−2 | 7/29/10 |
| 1−1−1 | 8/27/09 | 1−1−1 | 7/29/10 |
| 2−1−23 through 2−1−27 | 4/8/10 | 2−1−23 through 2−1−27 | 7/29/10 |
| 2−2−28 | 4/8/10 | 2−2−28 | 4/8/10 |
| 2−2−23 | | | |

Fields Listed in Part I. Group (8)

Chile Group (1) All fields listed in part I. Group (2) 2B. Recognized Medical Specializations (including, but not limited to: Anesthesiology, Audiology, Cardiography, Cardiology, Dermatology, Embryology, Epidemiology, Forensic Medicine, Gastroenterology, Hematology, Immunology, Internal Medicine, Neurological Surgery, Obstetrics and Gynecology, Oncology, Ophthalmology, Orthopedic Surgery, Otolaryngology, Pathology, Pediatrics, Pharmacology and Pharmaceutics, Physical Medicine and Rehabilitation, Physiology, Plastic Surgery, Preventive Medicine, Proctology, Psychiatry and Neurology, Radiology, Speech Pathology, Sports Medicine, Surgery, Thoracic Surgery, Toxicology, Urology and Virology) 2C. Veterinary Medicine 2D. Emergency Medicine 2E. Nuclear Medicine 2F. Geriatrics 2G. Nursing (including, but not limited to registered nurses, practical nurses, physician's receptionists and medical records clerks) 2I. Dentistry 2M. Medical Cybernetics 2N. All Therapies, Prosthetics and Healing (except Medicine, Osteopathy or Osteopathic Medicine, Nursing, Dentistry, Chiropractic and Optometry) 2O. Medical Statistics and Documentation 2P. Cancer Research 2Q. Medical Photography 2R. Environmental Health Group (3) All fields listed in part I. Group (4) All fields listed in part I. Group (5) All fields listed in part I. Group (6) 6A. Sociology (except Economics and including Criminology) 6B. Psychology (including, but not limited to Child Psychology, Psychometrics and Psychobiology) 6C. History (including Art History) 6D. Philosophy (including Humanities)