An Iterated Learning Framework for Unsupervised Part-Of-Speech Induction

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Bibliography of Berber Language Materials Kyra Jucovy and John

A Bibliography of Berber Language Materials Kyra Jucovy and John Alderete Swarthmore College, June 2001 (updated August 2006) This bibliography is intended as a resource for research on Berber languages. The references below are primarily devoted to linguistic research on Berber languages, but the bibliography may be of use to those interested in Berber literature, poetry, and music. This bibliography was supported in part by the National Science Foundation (BCS-0104604). Abdel Massih, Ernest T. 1969. Tamazight Verb Structure: A Generative Approach. Dissertation Abstracts International: Pt. A, 0419-4209; Pt.B, 0419-4217; Pt. C, 0307-6075. PubLg: English. Cat: Berber language, tamazight, morphology. Abel, Hans. 1913. Ein Erzahlung im Dialekt von Ermenne. Abh. Kais. Sach. Akad. Wissensch., vol. 29. Leipzig. PubLg: German. Cat: texts, Nubian, historical linguistics, comparative linguistics. Abercromby, John A. 1917. The language of the Canary Islanders. Harvard African Studies 1: 95-129. PubLg: English. Cat: Berber language, canary islands. Abes, M. 1916. Premiere annee de berbere. Rabat. PubLg: French. Cat: Textbook, Morocco, grammar, sample texts, glossary. Abes, M. 1917, 1919. Les Ait Ndhir. Les Archives berberes, vol. 2, vol. 3. PubLg: French. Cat: ethnography, Ait Ndhir, Morocco, Tamazight. Abes, M. 1919. Chansons d’amour chez les Berberes. France-Maroc. PubLg: French. Cat: songs. Ahmad ibn Khauwas. 1881a. Dialogues francais-kabyles. Algiers. PubLg: French. Cat: sample texts, Kabyle. Ahmad ibn Khauwas. 1881b. Notions succinctes de grammaire kabyle. Algiers. PubLg: French. Cat: grammar, Kabyle. Aikhenvald, Aleksandra Yu. 1986. On the Reconstruction of Syntactic System in Berber Lybic. Zeitschrift fur Phonetik, Sprachwissenschaft und Kommunikationsforschung 39:5: 527-539. PubLg: English. -

Corel Ventura

Anthropology / Middle East / World Music. Goodman, Berber Culture on the World Stage: From Village to Video. “Sure to interest a number of different audiences, from language and music scholars to specialists on North Africa. [A] superb book, clearly written, analytically incisive, about very important issues that have not been described elsewhere.” (John Bowen, Washington University) In this nuanced study of the performance of cultural identity, Jane E. Goodman travels from contemporary Kabyle Berber communities in Algeria and France to the colonial archives, identifying the products, performances, and media through which Berber identity has developed. In the 1990s, with a major Islamist insurgency underway in Algeria, Berber cultural associations created performance forms that challenged Islamist premises while critiquing their own village practices. Goodman describes the phenomenon of new Kabyle song, a form of world music that transformed village songs for global audiences. She follows new songs as they move from their producers to the copyright agency to the Parisian stage, highlighting the networks of circulation and exchange through which Berbers have achieved global visibility. JANE E. GOODMAN is Associate Professor of Communication and Culture at Indiana University. While training to become a cultural anthropologist, she performed with the women’s world music group Libana. Cover photographs: Yamina Djouadou, Algeria, 1993, by Jane E. Goodman. Textile photograph by Michael Cavanagh. The textile is from a Berber women’s fuda, or outer-skirt. Indiana University Press, Bloomington and Indianapolis. This book is a publication of Indiana University Press, 601 North Morton Street, Bloomington, IN 47404-3797 USA. http://iupress.indiana.edu Telephone orders 800-842-6796 Fax orders 812-855-7931 Orders by e-mail [email protected] © 2005 by Jane E. -

29. Semitic Influence in Celtic? Yes and No *1

29. Semitic influence in Celtic? Yes and No *1 Abstract Filppula (1999) explains the sparing use of Yes and No made in Irish English as a result of language contact: Irish lacks words for 'Yes' and 'No' and substitutes what Filppula calls the Modal-only type of answer instead. This usage is carried by Irish learners of English from their native language into the target language. In my paper on Yes and No in the history of English (Vennemann 2009a), I explain the relatively sparing use the English make of Yes and No and the use instead of the Modal-only type of short answer [(Yes,) I will, (No,) I can't, etc.] likewise as a contact feature: Brittonic speakers learning Anglo-Saxon carried their usage, essentially the same as in Irish, from their native language into the target language. In the present paper I deal with the question which is prompted by this explanation: How did Insular Celtic itself develop this method of answering Yes/No-questions with Modal-only sentences? The best answer to this question would be one which uses the same model, namely reference to substrata (in this case, pre-Celtic substrata) of the Isles. Fortunately there exists a theory of such substrata: the Hamito-Semitic theory of John Morris Jones (1900) and Julius Pokorny (1927-30). Accounting for the Insular Celtic response system in terms of these substrata would yield another instance of what I have called the transitivity of language contact in Vennemann 2002d and illustrated with the loss of external possessors in Vennemann 2002c. -

ORS PRICE MF-$0.83 HC-$3.50 Plus Postage

DOCUMENT RESUME ED 132 834 FL 008 226 AUTHOR Johnson, Dora E.; And Others TITLE Languages of the Middle East and North Africa. A Survey of Materials for the Study of the Uncommonly Taught Languages. INSTITUTION Center for Applied Linguistics, Arlington, Va. SPONS AGENCY Office of Education (DHEW), Washington, D.C. PUB DATE 76 CONTRACT 300-75-0201 NOTE 54p. AVAILABLE FROM Center for Applied Linguistics, 1611 North Kent Street, Arlington, Virginia 22209 ($3.95 each fascicle; complete set of 8, $26.50) EDRS PRICE MF-$0.83 HC-$3.50 Plus Postage. DESCRIPTORS Adult Education; African Languages; Afro Asiatic Languages; *Annotated Bibliographies; Arabic; Baluchi; *Berber Languages; Chad Languages; Dialects; Dictionaries; Hebrew; Indo European Languages; Instructional Materials; Kabyle; Kurdish; Language Instruction; Language Variation; Pashto; Persian; Reading Materials; *Semitic Languages; Tajik; *Turkic Languages; Turkish; *Uncommonly Taught Languages; Uralic Altaic Languages IDENTIFIERS Afghan Persian; Algerian; Djebel Nafusi; Egyptian; *Iranian; Iraqi; Libyan; Maltese; Mauritanian; Moroccan; Rif; Senhaya; Shavia; Shilha; Sivi; Sudanese; Syrian; Tamashek; Tamazight; Tuareg; Tunisian; Zenaga ABSTRACT This is an annotated bibliography of basic tools of access for the study of the uncommonly taught languages of the Middle East and North Africa. It is one of eight fascicles which constitute a revision of "A Provisional Survey of Materials for the Study of the Neglected Languages" (CAL 1969). The emphasis is on materials for the adult learner whose native language is English. Languages are grouped according to the following classifications: Turkic; Iranian; Semitic; Berber. Under each language heading, the items are arranged as follows: (1) teaching materials; (2) readers; (3) grammars; and (4) dictionaries. -

GOO-80-02119 392P

DOCUMENT RESUME ED 228 863 FL 013 634 AUTHOR Hatfield, Deborah H.; And Others TITLE A Survey of Materials for the Study of the Uncommonly Taught Languages: Supplement, 1976-1981. INSTITUTION Center for Applied Linguistics, Washington, D.C. SPONS AGENCY Department of Education, Washington, D.C. Div. of International Education. PUB DATE Jul 82 CONTRACT GOO-79-03415; GOO-80-02119 NOTE 392p.; For related documents, see ED 130 537-538, ED 132 833-835, ED 132 860, and ED 166 949-950. PUB TYPE Reference Materials Bibliographies (131) EDRS PRICE MF01/PC16 Plus Postage. DESCRIPTORS Annotated Bibliographies; Dictionaries; *Instructional Materials; Postsecondary Education; *Second Language Instruction; Textbooks; *Uncommonly Taught Languages ABSTRACT This annotated bibliography is a supplement to the previous survey published in 1976. It covers languages and language groups in the following divisions: (1) Western Europe/Pidgins and Creoles (European-based); (2) Eastern Europe and the Soviet Union; (3) the Middle East and North Africa; (4) South Asia; (5) Eastern Asia; (6) Sub-Saharan Africa; (7) Southeast Asia and the Pacific; and (8) North, Central, and South America. The primary emphasis of the bibliography is on materials for the use of the adult learner whose native language is English. Under each language heading, the items are arranged as follows: teaching materials, readers, grammars, and dictionaries. The annotations are descriptive. Whenever possible, each entry contains standard bibliographical information, including notations about reprints and accompanying tapes/records -

1090 Book Reviews That Those Who Are More Experienced Will Not Find Anything of Use

1090 Book reviews that those who are more experienced will not find anything of use. For example, most bilingual researchers would benefit from following more closely Deuchar and Quay’s example of focusing on the subject’s linguistic environment as a whole, thereby including all sources of input to a child rather than only those from the primary caregivers, as it reflects the true state of things for the developing child more accurately. In addition, both the arguments in support of the existence of a rudimentary syntax even at the two-word stage and those outlining the difficulties in determining the language(s) of any given utterance are quite convincing, giving many researchers reasonable cause to reevaluate data. Although their goal of discerning whether one system or two was at work in the areas of phonology, lexical development, and syntax was not conclusively met, it is strangely encouraging that despite such careful methodology and extensive data as theirs, this issue still remains unresolved. It is quite possibly a question that will not be answered definitively for quite some time (if ever). Indeed, it may be of better use to step outside of the framework that the “one system versus two” issue has imposed on the field, as it may be greatly oversimplifying things. The proposition that “the alternative to two initial systems is not necessarily one initial system; it may be no initial system” (p. 111) is quite tantalizing indeed, and with a book such as Bilingual Acquisition in hand, the stage is set to continue along some of the paths for which Deuchar and Quay have laid foundations. -

Introduction to Kabyle Linguistics*

Introduction to Kabyle Linguistics* Nicolas Baier, Jessica Coon, and Morgan Sonderegger McGill University SUMMARY This short article provides an introduction to the volume, including background on the language and its speakers, information on the field methods classes and Kabyle projects at McGill University out of which these papers developed, and a short introduction to the articles contained in the volume. RÉSUMÉ Ce court article sert d’introduction au volume, incluant de l’information pertinente concernant le kabyle et ses locuteurs, des renseignements sur le cours de méthodes de terrain et sur les projets entourant le kabyle à l’université McGill parmi lesquels se sont développés les articles de ce volume, ainsi qu’une courte introduction à ces articles. 1. THE KABYLE LANGUAGE Kabyle, also known as Taqbaylit, is an Amazigh (“Berber”) language of the Afroasiatic family. Kabyle is spoken primarily in the Kabylia region of northern Algeria, where it is the dominant language in both personal and official use (Chaker 2011). The language is spoken by around 5.5 million people, with 3–3.5 million of those speakers in Kabylia itself (Chaker 2011); this makes Kabyle the second most populous language in Algeria, after Algerian Arabic, the country’s official language (Belkadi 2010:25). An additional 2–2.5 million people speak the language in diaspora, the majority of whom live in France (around 1 million, Chaker 2011). There is also a sizable population of Kabyle speakers in Montreal, where the data for the papers in this volume were *Special thanks to Karima Ouazar and Sadia Nahi, without whom this volume would not have been possible. -

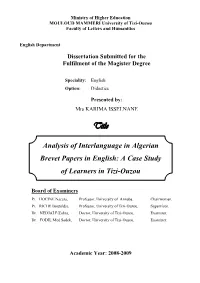

Analysis of Interlanguage in Algerian Brevet Papers in English: a Case

Ministry of Higher Education MOULOUD MAMMERI University of Tizi-Ouzou Faculty of Letters and Humanities English Department Dissertation Submitted for the Fulfilment of the Magister Degree Speciality: English Option: Didactics Presented by: Mrs KARIMA ISSELNANE Title Analysis of Interlanguage in Algerian Brevet Papers in English: A Case Study of Learners in Tizi-Ouzou Board of Examiners Pr. HOCINE Nacera, Professor, University of Annaba. Chairwoman. Pr. RICHE Bouteldja, Professor, University of Tizi-Ouzou. Supervisor. Dr. NEDJAÏ F/Zohra, Doctor, University of Tizi-Ouzou. Examiner. Dr. FODIL Med Sadek, Doctor, University of Tizi-Ouzou. Examiner. Academic Year: 2008-2009 CONTENTS: List of Symbols; Dedication; Acknowledgement; Abstract; General Introduction; Theoretical Background; Purpose of the Study; The Importance and Scope of the Study; Research Questions; Methodology; Subjects; Research Tools; Data Analysis; Definition of Terms; References; CHAPTER ONE THEORETICAL -

Documenting Language Learning Difficulty

MODERN LANGUAGES COMPARED Documenting Language Learning Difficulty ISBN 0-915935-19-8 Library of Congress Catalog Card Number Copyright 1994 Wesley Edward Arnold However I now make it publicly available to the world. Published in Warren Michigan Wesley Arnold Warren Mi 48091 CONTENTS INTRODUCTION THE CURRENT LANGUAGE SITUATION HOW COMPARISONS ARE DETERMINED SUITABILITY FOR INTERNATIONAL USE HOW SUITABILITY DETERMINED HOW LANGUAGES COMPARE THE EASIEST LANGUAGE LANGUAGES WITH OVER 1 MILLION LANGUAGES UNDER 1 MILLION SPEAKERS BIBLIOGRAPHY INDEX INTRODUCTION The purpose of this book is to provide the reader with a general information book about the languages found currently on Planet Earth. The information presented is factual and comes from the most reliable sources, which are documented in the bibliography. This book came about because in doing university research on languages I did not find a single book that contained the information that this book has. Compiling language information is very time consuming and very difficult to verify even if one finds a native speaker. If I asked how many idioms, tenses, voices, moods, inflections, and exceptions were in your language, most native speakers, even teachers and even many university professors, do not know. A second purpose of this book is to help solve a terrible problem that has cost thousands of lives and continues to bring suffering to thousands. This problem is language misunderstanding-non-understanding, and the mistrust, hate and violence caused by it. If you think it doesn't happen here remember the people that are killed all over this country because they failed to stop when told to freeze or to put their hands up instead of in their pockets. -

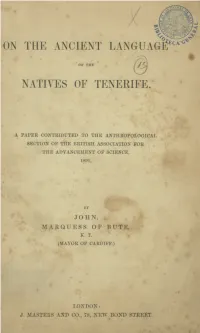

On the Ancient Language of the Natives of Tenerife

© The authors, for the document. Digitization by ULPGC, University Library, 2010. LONDON: PRINTED BY J. MASTERS AND CO., ALBION BUILDINGS, 3. BARTHOLOMEW CLOSE. ON THE ANCIENT LANGUAGE OF THE NATIVES OF TENERIFE. To read a paper before the present audience is an act of such temerity upon my part, that I feel that I ought to begin by explaining the circumstances which lead me to hope that it may not be altogether without interest. In the spring of this year the state of my health made it desirable that I should go abroad for some weeks, and I selected Tenerife, not only for the sake of the singularly perfect climate, and of the shortness and ease of the journey, but also to gratify my curiosity by the sight of a region until then entirely unknown to me. Those who know Tenerife at all, know that, especially in the case of an invalid, it is necessary, in order to have any occupation, to take up some line of study; and it occurred to me to turn my attention to the language spoken by the inhabitants at the time of the Spanish conquest. I was the more encouraged in this because, as far as my native informants could tell me, the subject had hitherto been treated in only a very slight and superficial way, and, in especial, no attempt had been made to discover the grammatical inflections, by the examination, not only of the words, but also of the few sentences which have been handed down to us. -

Benali-Mohamed Rachid.Pdf

Democratic and Popular Republic of Algeria Ministry of Higher Education and Scientific Research University of Oran Faculty of Letters, Languages and Arts Department of Anglo-Saxon Languages Section of English A SOCIOLINGUISTIC INVESTIGATION OF TAMAZIGHT IN ALGERIA WITH SPECIAL REFERENCE TO THE KABYLE VARIETY Doctorat d’État Thesis in Sociolinguistics Presented by: Benali-Mohamed Rachid Under the supervision of: Pr Bouamrane Ali Members of the Jury: President: Professor Farouk Bouhadiba (Oran University) Supervisor: Professor Ali Bouamrane (Oran University) Examiner: Professor Mohamed Dekkak (Oran University) Examiner: Doctor Abbes Bahous (Mostaganem University) Examiner: Doctor Zoubir Dendane (Tlemcen University) 2007 This is to certify that i. The thesis comprises only my original work towards the Doctorat d’Etat; ii. Due acknowledgement has been made in the text to all other material used Signed Rachid Benali-Mohamed To the memory of my father To my mother To Bouchra To Syphax and Anir To my sisters and brothers To all those who through their efforts and sacrifice made the presentation of such a work in an Algerian university possible ACKNOWLEDGEMENTS I am deeply indebted to Professor A. Bouamrane whose moral help and scientific orientations have been the key for the achievement of this work. His patience and critical mind have been factors without which this work would not have been achieved. My special thanks also go to Pr F. Bouhadiba and Pr M. Dekkak for their constant encouragement and scientific inspiration. I am also grateful to Pr D. Caubet and Pr J. Lentin from the INALCO, Paris for their scientific inspiration either through their seminars or through discussions on the subject of this work. -

The Substratum in Insular Celtic

Ranko Matasović University of Zagreb The substratum in Insular Celtic The discussion focuses on the problem of pre-Celtic substratum languages in the British Islands. The article by R. Matasović begins by dealing with the syntactic features of Insular Celtic languages (Brittonic and Goidelic): the author analyses numerous innovations in Insular Celtic and finds certain parallels in languages of the Afro-Asiatic macrofamily. The second part of his paper contains the analysis of that particular part of the Celtic lexicon which cannot be attributed to the PIE layer. A number of words for which only a substratum origin can be assumed is attested only in Brittonic and Goidelic. The author proposes to reconstruct Proto-Insular Celtic forms for this section of the vocabulary. This idea encounters objections from T. Mikhailova, who prefers to qualify common non-Celtic lexicon of Goidelic and Brittonic as parallel loanwords from the same substratum language. The genetic value of this language, however, remains enigmatic for both authors. Keywords: Pre-Celtic substratum, Goidelic, Brittonic, Insular Celtic, classification of Celtic languages, etymology, reconstruction, loanwords, wandering words. 1. Introduction We will never know which language or languages were spoken in the British Isles before the coming of the Celts. The Pictish language, very few documents of which have been preserved in the Ogam script, may actually have been Celtic (Forsyth 1997). If there ever was a pre-Celtic Pictish language, virtually nothing is known about its structure, to say nothing about its genetic affiliation. Moreover, the nature of Insular Celtic is a very debated issue.