Sentiment Analysis for Sinhala Language Using Deep Learning Techniques

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Introduction

Mahagama Sekera (1929–76) was a Sinhalese lyricist and poet from Sri Lanka. In 1966 Sekera gave a lecture in which he argued that a test of a good song was to take away the music and see whether the lyric could stand on its own as a piece of literature. Here I have translated the Sinhala-language song Sekera presented as one that aced the test. The subject of this composition, like the themes of many songs broadcast on Sri Lanka’s radio since the late 1930s, was related to Buddhism, the religion of the country’s majority.

The Niranjana River
Flowed slowly along the sandy plains
The day the Buddha reached enlightenment.
The Chief of the Three Worlds attained samadhi in meditation.
He was liberated at that moment.
In the cool shade of the snowy mountain ranges
The flowers’ fragrant pollen
Wafted through the sandalwood trees
Mixed with the soft wind
And floated on.
When the leaves and sprouts
Of the great Bodhi tree shook slightly
The seven musical notes rang out.
A beautiful song came alive
Moving to the tāla.
The day the Venerable Sanghamitta
Brought the branch of the Bodhi tree to Mahamevuna Park
The leaves of the Bodhi tree danced
As if there was such a thing as a “Mahabō Vannama.”

The writer of this song is Chandrarathna Manawasinghe (1913–64). In the Sinhala language he is credited as the gīta racakayā (lyricist). Manawasinghe alludes in the text to two Buddhist legends and a Sinhalese style of dance.

Modern Contours: Sinhala Poetry in Sri Lanka, 1913-56

South Asia: Journal of South Asian Studies. ISSN: 0085-6401 (Print), 1479-0270 (Online). Journal homepage: http://www.tandfonline.com/loi/csas20

Modern Contours: Sinhala Poetry in Sri Lanka, 1913–56
Garrett M. Field, Ohio University, Athens, OH, USA

To cite this article: Garrett M. Field (2016): Modern Contours: Sinhala Poetry in Sri Lanka, 1913–56, South Asia: Journal of South Asian Studies, DOI: 10.1080/00856401.2016.1152436. Published online: 12 Apr 2016.

ABSTRACT
A consensus is growing among scholars of modern Indian literature that the thematic development of Hindi, Urdu and Bangla poetry was consistent to a considerable extent. I use the term ‘consistent’ to refer to the transitions between 1900 and 1960 from didacticism to romanticism to modernist realism. The purpose of this article is to build upon this consensus by revealing that as far south as Sri Lanka, Sinhala-language poetry developed along the same trajectory. To bear out this argument, I explore the works of four Sri Lankan poets, analysing the didacticism of Ananda Rajakaruna, the romanticism of P.B.

KEYWORDS
Modernist realism; Rabindranath Tagore; romanticism; Sinhala poetry; Siri Gunasinghe; South Asian literary history; Sri Lanka; superposition

Shihan DE SILVA JAYASURIYA King’s College, London

www.reseau-asie.com
Scholars, Professors and Experts on Asia and Pacific

Communication: Portuguese imprint on Sri Lanka: language, music and dance
Shihan DE SILVA JAYASURIYA, King’s College, London

3rd Congress of Réseau Asie - IMASIE, 26-27-28 Sept. 2007, Paris, France
Maison de la Chimie, Ecole des Hautes Etudes en Sciences Sociales, Fondation Maison des Sciences de l’Homme

Theme 4: History and identity processes
Workshop 24: Portugal Índico after the golden age of the Estado da Índia (17th and 18th centuries). Survival strategies in a changed world

© 2007 – Shihan DE SILVA JAYASURIYA. All rights reserved.

Users of the website http://www.reseau-asie.com undertake to respect the intellectual property rules covering the content offered on the site (French law no. 92.597 of 1 July 1992, JO of 3 July). In particular, all texts, sounds, maps and images of the 1st Congress are subject to copyright law. Users are allowed to download and copy the textual and multimedia materials (sound, image, text, etc.) on the website, in particular documents of the 1st Congress, for their own personal, non-commercial use or for classroom use, on condition that any use is accompanied by an acknowledgement of the source, citing the uniform resource locator (URL) of the page and the name and first name of the authors (Title of the material, © author, URL).

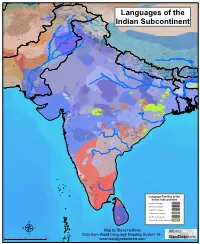

Map by Steve Huffman; Data from World Language Mapping System

[Map: country and language-name labels running from Svalbard, Greenland and Iceland across Europe to Kazakhstan and Mongolia (for example Norwegian, Swedish, Scottish Gaelic, Irish Gaelic, Standard German, Polish, Ukrainian, Romanian); the label text is not reproduced here.]


Downloaded by [University of Defence] at 21:30 19 May 2016 South Asian Religions

The religious landscape of South Asia is complex and fascinating. While existing literature tends to focus on the majority religions of Hinduism and Buddhism, much less attention is given to Jainism, Sikhism, Islam or Christianity. While not neglecting the majority traditions, this valuable resource also explores the important role which the minority traditions play in the religious life of the subcontinent, covering popular as well as elite expressions of religious faith. By examining the realities of religious life, and the ways in which the traditions are practiced on the ground, this book provides an illuminating introduction to Asian religions.

Karen Pechilis is NEH Distinguished Professor of Humanities and Chair and Professor of Religion at Drew University, USA. Her books for Routledge include Interpreting Devotion: The Poetry and Legacy of a Female Bhakti Saint of India (2011).

Selva J. Raj (1952–2008) was Chair and Stanley S. Kresge Professor of Religious Studies at Albion College, USA. He served as chair of the Conference on the Study of Religions of India and co-edited several books on South Asia.

South Asian Religions: Tradition and today
Edited by Karen Pechilis and Selva J. Raj

First published 2013 by Routledge, 2 Park Square, Milton Park, Abingdon, Oxon OX14 4RN. Simultaneously published in the USA and Canada by Routledge, 711 Third Avenue, New York, NY 10017. Routledge is an imprint of the Taylor & Francis Group, an informa business. © 2013 Karen Pechilis and the estate of Selva J.

Language Politics and State Policy in Nepal: a Newar Perspective

Language Politics and State Policy in Nepal: A Newar Perspective

A Dissertation Submitted to the University of Tsukuba In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in International Public Policy

Suwarn VAJRACHARYA
2014

To my mother, who taught me the value in a mother tongue and my father, who shared the virtue of empathy.

Map-1: Original Nepal (Constituted of 12 districts) and Present Nepal
Map-2: Nepal Mandala (Original Nepal demarcated by Mandalas)
Map-3: Gorkha Nepal Expansion (1795-1816)
Map-4: Present Nepal by Ecological Zones (Mountain, Hill and Tarai zones)
Map-5: Nepal by Language Families

TABLE OF CONTENTS

Table of Contents
List of Maps and Tables
Acknowledgements
Acronyms and Abbreviations

INTRODUCTION
Research Objectives
Research Background
Research Questions
Research Methodology
Significance of the Study
Organization of Study

PART I NATIONALISM AND LANGUAGE POLITICS: VICTIMS OF HISTORY

CHAPTER ONE NEPAL: A REFLECTION OF UNITY IN DIVERSITY
1.1. Topography: A Unique Variety
1.2. Cultural Pluralism
1.3. Religiousness of People and the State
1.4. Linguistic Reality, ‘Official’ and ‘National’ Languages

CHAPTER TWO THE NEWAR: AN ACCOUNT OF AUTHORS & VICTIMS OF THEIR HISTORY
2.1. The Newar as Authors of their history
2.1.1. Definition of Nepal and Newar
2.1.2. Nepal Mandala and Nepal
Territory of Nepal Mandala
2.1.3. The Newar as a Nation: Conglomeration of Diverse People
2.1.4.

Map by Steve Huffman Data from World Language Mapping System 16

[Map: Languages of the Indian Subcontinent. Language-name labels (for example Tajiki, Wakhi, Khowar, Shina, Kashmiri, Ladakhi, Western Panjabi, Nepali, Urdu, Marwari); the label text is not reproduced here.]

Copyrighted Material

Index

Note: Page numbers in italics refer to figures and tables.

16R dune site, 36, 43, 440
Abhaipur, 498
Achaemenid Empire, 278, 279
Acharyya, S.K., 81
Acheulean industry see also Paleolithic era
  in Bangladesh, 406, 408
  dating of, 33, 35, 38, 63
  earliest discovery of, 72
  handaxes, 63, 72, 414, 441
  in the Hunsgi and Baichbal valleys, 441–443
  lack of evidence in northeastern India for, 45
  major sites of, 42, 62–63
  in Nepal, 414
  in Pakistan, 415
  related hominin finds, 73, 81, 82
  scholarship on, 43, 441
Adam, 302, 334, 498
Adamgarh, 90, 101
Addanki, 498
Adi Badri, 498
Adichchanallur, 317, 498
Adilabad, 455
Adittanallur, 484
Adivasi peoples see tribal peoples
Adiyaman dynasty, 317
Afghanistan
  in “Aryan invasion” hypothesis, 205
  in history of agriculture, 128, 346
  in human dispersals, 64
  in isotope analysis of Harappan migrants, 196
  skeletal remains found near, 483
  as source of raw materials, 132, 134
Africa
  cultigens from, 179, 347, 362–363, 370
  hominoid migrations to and from, 23, 24
  Horn of, 65
  human migrations from, 51–52
  museums in, 471
  Paleolithic tools in, 40, 43
  research on stature in, 103
  subsistence economies in, 348, 353
Agara Orathur, 498
Agartala, 407
Agni Purana, 320
Agra, 337
agriculture see also millet; rice; sedentism; water management
Ammapur, 414
Amreli district, 247, 325
Amri,

A Companion to South Asia in the Past, First Edition. Edited by Gwen Robbins Schug and Subhash R. Walimbe. © 2016 John Wiley & Sons, Inc. Published 2016 by John Wiley & Sons, Inc.

An Imperative for Sri Lanka!

MEEZAN: AN ACADEMIC AND PROFESSIONAL JOURNAL COMPRISING SCHOLARLY AND STUDENT ARTICLES
53RD EDITION

EDITED BY: Shabna Rafeek Shimlah Usuph Shafna Abul Hudha

All rights reserved, The Law Students’ Muslim Majlis, Sri Lanka Law College
Cover Design: Arqam Muneer

Copyrights
All material in this publication is protected by copyright, subject to statutory exceptions. Any unauthorized of the Law Students’ Muslim Majlis, may invoke inter alia liability for infringement of copyright.

Disclaimer
All views expressed in this production are those of the respective author and do not represent the opinion of the Law Students’ Muslim Majlis or Sri Lanka Law College. Unless expressly stated, the views expressed are the author’s own and are not to be attributed to any instruction he or she may present.

Submission of material for future publications
The Law Students’ Muslim Majlis welcomes previously unpublished original articles or manuscripts for publication in future editions of the “Meezan”. However, publication of such material is at the discretion of the Law Students’ Muslim Majlis, in whose opinion such material must be worthy of publication. All such submissions should be in duplicate, accompanied by a compact disk and the contact details of the contributor. All communication may be sent to the address given below.

Citation of Material contained herein
This publication may be cited, with due regard to its protection by copyright, in the following fashion: 2019, issue 53rd Meezan, LSMM

Review, Responses and Criticisms
The Law Students’ Muslim Majlis welcomes any reviews, responses and criticisms of the content published in this issue.

The President, The Law Students’ Muslim Majlis, Sri Lanka Law College, 244, Hulftsdorp Street, Colombo 12
Tel - 011 2323759 (Local) - 0094112323749 (International)
Email - [email protected]

EVENT DETAILS
The Launch of the 53rd Edition of the Annual Law Journal -MEEZAN-
Chief Guest Hon.

Acta Linguistica Asiatica

ACTA LINGUISTICA ASIATICA
Volume 5, Issue 1, 2015

Editors: Andrej Bekeš, Nina Golob, Mateja Petrovčič

Editorial Board: Bi Yanli (China), Cao Hongquan (China), Luka Culiberg (Slovenia), Tamara Ditrich (Slovenia), Kristina Hmeljak Sangawa (Slovenia), Ichimiya Yufuko (Japan), Terry Andrew Joyce (Japan), Jens Karlsson (Sweden), Lee Yong (Korea), Lin Ming-chang (Taiwan), Arun Prakash Mishra (India), Nagisa Moritoki Škof (Slovenia), Nishina Kikuko (Japan), Sawada Hiroko (Japan), Chikako Shigemori Bučar (Slovenia), Irena Srdanović (Japan).

© University of Ljubljana, Faculty of Arts, 2015. All rights reserved.

Published by: Znanstvena založba Filozofske fakultete Univerze v Ljubljani (Ljubljana University Press, Faculty of Arts)
Issued by: Department of Asian and African Studies
For the publisher: Dr. Branka Kalenić Ramšak, Dean of the Faculty of Arts

The journal is licensed under a Creative Commons Attribution 3.0 Unported (CC BY 3.0).
Journal’s web page: http://revije.ff.uni-lj.si/ala/
The journal is published in the scope of Open Journal Systems.
ISSN: 2232-3317
Abstracting and Indexing Services: COBISS, dLib, Directory of Open Access Journals, MLA International Bibliography, Open J-Gate, Google Scholar and ERIH PLUS.
Publication is free of charge.

Address: University of Ljubljana, Faculty of Arts, Department of Asian and African Studies, Aškerčeva 2, SI-1000 Ljubljana, Slovenia
E-mail: [email protected]

TABLE OF CONTENTS
Foreword

Research Report on Phonetics and Phonology of Sinhala

Research Report on Phonetics and Phonology of Sinhala
Asanka Wasala and Kumudu Gamage
Language Technology Research Laboratory, University of Colombo School of Computing, Sri Lanka.
[email protected],[email protected]

Abstract
This report examines the major characteristics of the Sinhala language related to Phonetics and Phonology. The main topics under study are Segmental and Supra-segmental sounds in Spoken Sinhala. The first part presents the Sinhala Phonemic Inventory, which describes phonemes with their associated features and the phonotactics of Sinhala. Supra-segmental features like Syllabification, Stress, Pitch and Intonation, followed by the procedure for letter-to-sound conversion, are described in the latter part. The research on Syllabification leads to the identification of a set of rules for syllabifying a given word. The syllabification algorithm achieved an accuracy of 99.95%. Due to the phonetic nature of the Sinhala alphabet, the letter-to-phoneme mapping was easily done; however, to produce the correct phonetized version of a word, some modifications were needed.

… phonetics and phonology for improving the naturalness and intelligibility for our Sinhala TTS system. Much research has been carried out in the linguistic domain regarding Sinhala phonetics and phonology. This research covers both segmental and suprasegmental features of spoken Sinhala. Some of the themes elaborated in this research are Sinhala sound change-rules, Sinhala Prosody, Duration and Stress, and Syllabification.

In the domain of Sinhala Speech Processing, very little research has been reported, and most of it was carried out by undergraduates for their final year projects. Some restricted-domain Sinhala TTS systems developed by undergraduates are already working in a number of domains. The study of such projects laid the foundation for our project. However, extensive research was done in all aspects of Sinhala Phonetics and Phonology in order to identify the areas to be deeply concerned in developing

Sinhala Song and the Arya and Hela Schools of Cultural Nationalism in Colonial Sri Lanka

The Journal of Asian Studies
http://journals.cambridge.org/JAS

Music for Inner Domains: Sinhala Song and the Arya and Hela Schools of Cultural Nationalism in Colonial Sri Lanka
Garrett M. Field

The Journal of Asian Studies / Volume 73 / Issue 04 / November 2014, pp 1043 - 1058. DOI: 10.1017/S0021911814001028. Published online: 20 November 2014.
Link to this article: http://journals.cambridge.org/abstract_S0021911814001028

How to cite this article: Garrett M. Field (2014). Music for Inner Domains: Sinhala Song and the Arya and Hela Schools of Cultural Nationalism in Colonial Sri Lanka. The Journal of Asian Studies, 73, pp 1043-1058 doi:10.1017/S0021911814001028

© The Association for Asian Studies, Inc., 2014

In this article, I juxtapose the ways the “father of modern Sinhala drama,” John De Silva, and the Sinhala language reformer, Munidasa Cumaratunga, utilized music for different nationalist projects. First, I explore how De Silva created musicals that articulated Arya-Sinhala nationalism to support the Buddhist Revival. Second, I investigate how Cumaratunga, who spearheaded the Hela-Sinhala movement, asserted that genuine Sinhala song should be rid of North Indian influence but full of lyrics composed in “pure” Sinhala.