Using Character Overlap to Improve Language Transformation

Recommended publications

-

Language Contact at the Romance-Germanic Language Border

Language Contact at the Romance–Germanic Language Border Other Books of Interest from Multilingual Matters Beyond Bilingualism: Multilingualism and Multilingual Education Jasone Cenoz and Fred Genesee (eds) Beyond Boundaries: Language and Identity in Contemporary Europe Paul Gubbins and Mike Holt (eds) Bilingualism: Beyond Basic Principles Jean-Marc Dewaele, Alex Housen and Li wei (eds) Can Threatened Languages be Saved? Joshua Fishman (ed.) Chtimi: The Urban Vernaculars of Northern France Timothy Pooley Community and Communication Sue Wright A Dynamic Model of Multilingualism Philip Herdina and Ulrike Jessner Encyclopedia of Bilingual Education and Bilingualism Colin Baker and Sylvia Prys Jones Identity, Insecurity and Image: France and Language Dennis Ager Language, Culture and Communication in Contemporary Europe Charlotte Hoffman (ed.) Language and Society in a Changing Italy Arturo Tosi Language Planning in Malawi, Mozambique and the Philippines Robert B. Kaplan and Richard B. Baldauf, Jr. (eds) Language Planning in Nepal, Taiwan and Sweden Richard B. Baldauf, Jr. and Robert B. Kaplan (eds) Language Planning: From Practice to Theory Robert B. Kaplan and Richard B. Baldauf, Jr. (eds) Language Reclamation Hubisi Nwenmely Linguistic Minorities in Central and Eastern Europe Christina Bratt Paulston and Donald Peckham (eds) Motivation in Language Planning and Language Policy Dennis Ager Multilingualism in Spain M. Teresa Turell (ed.) The Other Languages of Europe Guus Extra and Durk Gorter (eds) A Reader in French Sociolinguistics Malcolm Offord (ed.) 
Please contact us for the latest book information: Multilingual Matters, Frankfurt Lodge, Clevedon Hall, Victoria Road, Clevedon, BS21 7HH, England http://www.multilingual-matters.com Language Contact at the Romance–Germanic Language Border Edited by Jeanine Treffers-Daller and Roland Willemyns MULTILINGUAL MATTERS LTD Clevedon • Buffalo • Toronto • Sydney Library of Congress Cataloging in Publication Data Language Contact at Romance-Germanic Language Border/Edited by Jeanine Treffers-Daller and Roland Willemyns. -

Old Frisian, an Introduction To

An Introduction to Old Frisian: History, Grammar, Reader, Glossary Rolf H. Bremmer, Jr. University of Leiden John Benjamins Publishing Company Amsterdam / Philadelphia The paper used in this publication meets the minimum requirements of American National Standard for Information Sciences - Permanence of Paper for Printed Library Materials, ANSI Z39.48-1984. Library of Congress Cataloging-in-Publication Data Bremmer, Rolf H. (Rolf Hendrik), 1950- An introduction to Old Frisian : history, grammar, reader, glossary / Rolf H. Bremmer, Jr. p. cm. Includes bibliographical references and index. 1. Frisian language--To 1500--Grammar. 2. Frisian language--To 1500--History. 3. Frisian language--To 1550--Texts. I. Title. PF1421.B74 2009 439’.2--dc22 2008045390 ISBN 978 90 272 3255 7 (Hb; alk. paper) ISBN 978 90 272 3256 4 (Pb; alk. paper) © 2009 – John Benjamins B.V. No part of this book may be reproduced in any form, by print, photoprint, microfilm, or any other means, without written permission from the publisher. John Benjamins Publishing Co. · P.O. Box 36224 · 1020 ME Amsterdam · The Netherlands John Benjamins North America · P.O. Box 27519 · Philadelphia PA 19118-0519 · USA Table of contents Preface ix chapter i History: The when, where and what of Old Frisian 1 The Frisians. A short history (§§1–8); Texts and manuscripts (§§9–14); Language (§§15–18); The scope of Old Frisian studies (§§19–21) chapter ii Phonology: The sounds of Old Frisian 21 A. Introductory remarks (§§22–27): Spelling and pronunciation (§§22–23); Axioms and method (§§24–25); West Germanic vowel inventory (§26); A common West Germanic sound-change: gemination (§27) B. -

Modeling a Historical Variety of a Low-Resource Language: Language Contact Effects in the Verbal Cluster of Early-Modern Frisian

Modeling a historical variety of a low-resource language: Language contact effects in the verbal cluster of Early-Modern Frisian Jelke Bloem (ILLC, University of Amsterdam), Arjen Versloot (ACLC, University of Amsterdam), Fred Weerman (ACLC, University of Amsterdam) Abstract Certain phenomena of interest to linguists mainly occur in low-resource languages, such as contact-induced language change. We show that it is possible to study contact-induced language change computationally in a historical variety of a low-resource language, Early-Modern Frisian, by creating a model using features that were established to be relevant in a closely related language, modern Dutch. This allows us to test two hypotheses on two types of language contact that may have taken place between Frisian and Dutch during this time. Our model shows that Frisian verb cluster word orders are associated with different context features than Dutch verb orders, supporting the ‘learned borrowing’ hypothesis. ... which are by definition used less, and are less likely to have computational resources available. For example, in cases of language contact where there is a majority language and a lesser used language, contact-induced language change is more likely to occur in the lesser used language (Weinreich, 1979). Furthermore, certain phenomena are better studied in historical varieties of languages. Taking the example of language change, it is more interesting to study a specific language change once it has already been completed, such that one can study the change itself in historical texts as well as the subsequent outcome of the change. For these reasons, contact-induced language change is difficult to study computationally, and -

PDF Hosted at the Radboud Repository of the Radboud University Nijmegen

PDF hosted at the Radboud Repository of the Radboud University Nijmegen The following full text is a publisher's version. For additional information about this publication click this link. http://hdl.handle.net/2066/147512 Please be advised that this information was generated on 2021-10-09 and may be subject to change. 'NAAR', 'NAAST', 'LANGS' en 'IN': Een onomasiologisch-semasiologische studie over enige voorzetsels met een locaal betekeniskenmerk in de Nederlandse dialecten en in het Fries (an onomasiological-semasiological study of some prepositions with a locative semantic feature in the Dutch dialects and in Frisian) by J. L. A. Heestermans. Martinus Nijhoff, The Hague. Dissertation for the degree of Doctor of Letters at the Catholic University of Nijmegen, by authority of the Rector Magnificus Prof. Dr. P.G.A.B. Wijdeveld, pursuant to the decision of the Board of Deans, to be defended in public on Friday 16 March 1979 at 14.00 precisely by Johannes Levinus Adrianus Heestermans, born in Oud-Vossemeer (Zld.). Martinus Nijhoff / The Hague / 1979 Supervisor: Prof. Dr. A.A. Weijnen ISBN 90 247 2165 2 © 1979 Uitgeverij Martinus Nijhoff B.V., The Hague. No part of this publication may be reproduced and/or made public by means of print, photocopy, microfilm or in any other way whatsoever, without prior written permission from the publisher. Printed in the Netherlands. Foreword Without the help of a number of friends and colleagues, the completion of this study would have taken place considerably later. It is fitting to thank them here. -

A Study of the Variation and Change in the Vowels of the Achterhoeks Dialect

A STUDY OF THE VARIATION AND CHANGE IN THE VOWELS OF THE ACHTERHOEKS DIALECT MELODY REBECCA PATTISON PhD UNIVERSITY OF YORK LANGUAGE AND LINGUISTIC SCIENCE JANUARY 2018 Abstract The Achterhoeks dialect, spoken in the eastern Dutch province of Gelderland near the German border, is a Low Saxon dialect that differs noticeably from Standard Dutch in all linguistic areas. Previous research has comprehensively covered the differences in lexicon (see, for example, Schaars, 1984; Van Prooije, 2011), but less has been done on the phonology in this area (the most notable exception being Kloeke, 1927). There has been research conducted on the changes observed in other Dutch dialects, such as Brabants (Hagen, 1987; Swanenberg, 2009) and Limburgs (Hinskens, 1992), but not so much in Achterhoeks, and whether the trends observed in other dialects are also occurring in the Achterhoek area. It is claimed that the regional Dutch dialects are slowly converging towards the standard variety (Wieling, Nerbonne & Baayen, 2011), and this study aims to not only fill some of the gaps in Achterhoeks dialectology, but also to test to what extent the vowels are converging on the standard. This research examines changes in six lexical sets from 1979 to 2015 in speakers’ conscious representation of dialect. This conscious representation was an important aspect of the study, as what it means to speak in dialect may differ from person to person, and so the salience of vowels can be measured based on the number of their occurrences in self-described dialectal speech. Through a perception task, this research also presents a view of the typical Achterhoeks speaker as seen by other Dutch speakers, in order to provide a sociolinguistic explanation for the initial descriptive account of any vowel change observed in dialectal speech. -

Vocalisations: Evidence from Germanic Gary Taylor-Raebel A

Vocalisations: Evidence from Germanic Gary Taylor-Raebel A thesis submitted for the degree of doctor of philosophy Department of Language and Linguistics University of Essex October 2016 Abstract A vocalisation may be described as a historical linguistic change where a sound which is formerly consonantal within a language becomes pronounced as a vowel. Although vocalisations have occurred sporadically in many languages they are particularly prevalent in the history of Germanic languages and have affected sounds from all places of articulation. This study will address two main questions. The first is why vocalisations happen so regularly in Germanic languages in comparison with other language families. The second is what exactly happens in the vocalisation process. For the first question there will be a discussion of the concept of ‘drift’ where related languages undergo similar changes independently and this will therefore describe the features of the earliest Germanic languages which have been the basis for later changes. The second question will include a comprehensive presentation of vocalisations which have occurred in Germanic languages with a description of underlying features in each of the sounds which have vocalised. When considering phonological changes a degree of phonetic information must necessarily be included which may be irrelevant synchronically, but forms the basis of the change diachronically. A phonological representation of vocalisations must therefore address how best to display the phonological information whilst allowing for the inclusion of relevant diachronic phonetic information. Vocalisations involve a small articulatory change, but using a model which describes vowels and consonants with separate terminology would conceal the subtleness of change in a vocalisation. -

Dutch. A Linguistic History of Holland and Belgium

Dutch. A linguistic history of Holland and Belgium Bruce Donaldson Source: Bruce Donaldson, Dutch. A linguistic history of Holland and Belgium. Uitgeverij Martinus Nijhoff, Leiden 1983 For source details, see: http://www.dbnl.org/tekst/dona001dutc02_01/colofon.php © 2013 dbnl / Bruce Donaldson To my mother Preface There has long been a need for a book in English about the Dutch language that presents important, interesting information in a form accessible even to those who know no Dutch and have no immediate intention of learning it. The need for such a book became all the more obvious to me when, once employed in a position that entailed the dissemination of Dutch language and culture in an Anglo-Saxon society, I was continually amazed by the ignorance that prevails with regard to the Dutch language, even among colleagues involved in the teaching of other European languages. How often does one hear that Dutch is a dialect of German, or that Flemish and Dutch are closely related (but presumably separate) languages? To my knowledge there has never been a book in English that sets out to clarify such matters and to present other relevant issues to the general and studying public. Holland's contributions to European and world history, to art, to shipbuilding, hydraulic engineering, bulb growing and cheese manufacture for example, are all aspects of Dutch culture which have attracted the interest of other nations, and consequently there are numerous books in English and other languages on these subjects. But the language of the people that achieved so much in all those fields has been almost completely neglected by other nations, and to a degree even by the Dutch themselves who have long been admired for their polyglot talents but whose lack of interest in their own language seems never to have disturbed them. -

University of Groningen Mechanisms of Language Change. Vowel

University of Groningen Mechanisms of Language Change. Vowel Reduction in 15th Century West Frisian Versloot, Arianus Pieter IMPORTANT NOTE: You are advised to consult the publisher's version (publisher's PDF) if you wish to cite from it. Please check the document version below. Document Version Publisher's PDF, also known as Version of record Publication date: 2008 Link to publication in University of Groningen/UMCG research database Citation for published version (APA): Versloot, A. P. (2008). Mechanisms of Language Change. Vowel Reduction in 15th Century West Frisian. Utrecht: LOT. Copyright Other than for strictly personal use, it is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), unless the work is under an open content license (like Creative Commons). Take-down policy If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. Downloaded from the University of Groningen/UMCG research database (Pure): http://www.rug.nl/research/portal. For technical reasons the number of authors shown on this cover page is limited to 10 maximum. Download date: 12-11-2019 A.P. Versloot: Mechanisms of Language Change 2. Description of processes Chapter two describes the reduction of unstressed Old Frisian vowels and other processes connected with this development. Detailed reconstructions in chapter two are needed: • To provide evidence for the hypothesis in chapter four that Middle Frisian had phonologically contrasting tone contours, similar to the modern North Germanic languages Norwegian and Swedish; • To formulate, calibrate and test the models of language change in chapter five. -

09 Middle Dutch.Key

Middle Dutch: Heterogeneity In A Small Space

Quick Review: Old Low Franconian (Hebban olla voglas …); No Manifestations of the Second Consonant Shift; Ingvæonisms

Middle Dutch: A collective name for dialects spoken and written in what is now the Netherlands and Flanders (N. Belgium) between 1150 and 1500. Middle Dutch is therefore a collection of dialects: Vlaams (Flemish), Brabants, Hollands, Limburgs, and East Dutch.

A Middle Dutch author by the name of Jacob van Maerlant described the situation like this:

Men moet om de rime te soucken / misselike tonghe in bouken: / Duuts, Dietsch, Brabants, Vlaamsch, Zeeus, / Walsch, Latijn, Grix ende Hebreeus.

Gloss: One must in order the rhymes to seek (out) / various tongues in books: / people’s language (x2), Brabants, Flemish, Zeeuws / French, Latin, Greek and Hebrew

General Features: Middle Dutch retains the grammatical features of Germanic, but to a far lesser degree than Middle High or Middle Low German. Morphology begins to break down quickly. Word order becomes more important. Prepositions become much more important. Considerable foreign influence & variation.

Noun & Adjective Inflection: Masculine Nouns ⇒ Neuter Nouns ⇒ Feminine Nouns ⇒

Personal Pronouns, First & Second Person

| | 1 sg. | 1 pl. | 2 sg. | 2 pl.* |
|---|---|---|---|---|
| Nom. | ic | wi | du | ghi |
| Gen. | mijns | onser | dijns | uwer, uw |
| Dat. | mi | ons | di | u |
| Acc. | mi | ons | di | u |

*2nd Person Plural forms used for polite address in the 2nd Person Singular

Personal Pronouns, Third Person

| | 3 sg. M | 3 sg. N | 3 sg. F | 3 pl. |
|---|---|---|---|---|
| Nom. | hi | het | si | si |
| Gen. | sijns | hets | haer | haer |
| Dat. | hem | hem | haer | hem |
| Acc. | hem | het | haer | hem |

Verb Classes Inf. -

The Development of English and Dutch Pronouns an Analysis of Changes and Similarities in the Paradigms

The Development of English and Dutch Pronouns: an analysis of changes and similarities in the paradigms BA Thesis English Language and Culture Utrecht University Name: Marieke Graef Student number: 3506983 Supervisor: dr. W.C.H. Philip Second Reader: dr. M.C.J. Keijzer Completed on: 17 August 2012 Table of Contents: Introduction; Theories on Language Relationship and Language Change; Language Relationship and Proto-Indo-European; Theories on Language Change; The Language Mavens; Old English (450-1150) and Old Dutch (500-1200); Middle English (1150-1500) and Middle Dutch (1200-1500); Early Modern English and Early Modern Dutch (1500-1700); Modern English and Modern Dutch (1700-the present); Conclusion; Bibliography. Introduction If you compare an English text from the fifteenth century with today’s newspaper, it becomes obvious that the language has changed considerably. Though everybody agrees that languages change, opinions widely differ on what motivates these changes. There are, of course, many different reasons why languages change and the theories themselves have also changed over the last two centuries. While Jakob Grimm once suggested that the “superior gentleness and moderation” of northern Germanic tribes prevented their language from undergoing the Second Sound Shift, today, the most important theories on language change involve language acquisition and language contact (Crowley and Bowern, 12). Most historical linguists believe that language change is gradual. Lightfoot, however, argues that while languages may change gradually, in grammar, abrupt changes occur (83). In addition, he claims that whether language change seems gradual depends on which lens is used: “If we think macroscopically, […], using a wide-angle lens, then change always seems to be gradual” (ibid., 83). -

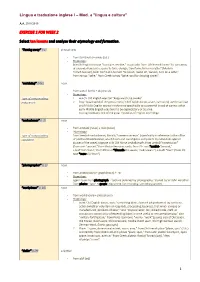

Exercise 1 for Week 2

Lingua e traduzione inglese I – Mod. a "lingua e cultura" A.A. 2018/2019 EXERCISE 1 FOR WEEK 2 Select ten lexemes and analyse their etymology and formation. "Turning away" (l. 1) phrasal verb - from turn (verb) + away (adv.) - Etymology: late Old English turnian "to rotate, revolve," in part also from Old French torner "to turn away or around; draw aside, cause to turn; change, transform; turn on a lathe" (Modern French tourner), both from Latin tornare "to polish, round off, fashion, turn on a lathe," from tornus "lathe," from Greek tornos "lathe, tool for drawing circles". "watchdog" (title) noun - from watch (verb) + dog (noun) - Type of compounding: endocentric - Etymology: 1. watch: Old English wæccan "keep watch, be awake". 2. dog: "quadruped of the genus Canis," Old English docga, a late, rare word, used in at least one Middle English source in reference specifically to a powerful breed of canine; other early Middle English uses tend to be depreciatory or abusive. Its origin remains one of the great mysteries of English etymology. "ombudsman" (l. 3) noun - from ombuds (noun) + man (noun) - Type of compounding: copulative - Etymology: from Swedish ombudsman, literally "commission man" (specifically in reference to the office of justitieombudsmannen, which hears and investigates complaints by individuals against abuses of the state); cognate with Old Norse umboðsmaðr, from umboð "commission" (from um- "around," from Proto-Germanic umbi, from PIE root *ambhi- "around," + boð "command," from PIE root *bheudh- "be aware, make aware") + maðr "man" (from PIE root *man- (1) "man"). "photographer" (l. 5) noun - from photo (noun) + graph (noun) + -er - Etymology: agent noun from photograph: "picture obtained by photography," coined by Sir John Herschel from photo- "light" + -graph "instrument for recording; something written. -

Apperception and Linguistic Contact Between German and Afrikaans By

Apperception and Linguistic Contact between German and Afrikaans By Jeremy Bergerson A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in German in the Graduate Division of the University of California, Berkeley Committee in charge: Professor Irmengard Rauch, Co-Chair Professor Thomas Shannon, Co-Chair Professor John Lindow Assistant Professor Jeroen Dewulf Spring 2011 Abstract Speakers of German and Afrikaans have been interacting with one another in Southern Africa for over three hundred and fifty years. In this study, the linguistic results of this intra-Germanic contact are addressed and divided into two sections: 1) the influence of German (both Low and High German) on Cape Dutch/Afrikaans in the years 1652–1810; and 2) the influence of Afrikaans on Namibian German in the years 1840–present. The focus here has been on the lexicon, since lexemes are the first items to be borrowed in contact situations, though other grammatical borrowings come under scrutiny as well. The guiding principle of this line of inquiry is how the cognitive phenomenon of Herbartian apperception, or Peircean abduction, has driven the bulk of the borrowings between the languages. Apperception is, simply put, the act of identifying a new perception as analogous to a previously existing one. The following example, central to this dissertation, will serve to illustrate this. When Dutch, Low German, and Malay speakers were all in contact in Cape Town in the 1600s and 1700s, there were three mostly homophonous and synonymous words they were using.