Constructed Action in American Sign Language: a Look at Second Language Learners in a Second Modality

Recommended publications

Sign Language Typology Series

The Sign Language Typology Series is dedicated to the comparative study of sign languages around the world. Individual or collective works that systematically explore typological variation across sign languages are the focus of this series, with particular emphasis on undocumented, underdescribed and endangered sign languages. The scope of the series primarily includes cross-linguistic studies of grammatical domains across a larger or smaller sample of sign languages, but also encompasses the study of individual sign languages from a typological perspective and comparison between signed and spoken languages in terms of language modality, as well as theoretical and methodological contributions to sign language typology. Interrogative and Negative Constructions in Sign Languages, edited by Ulrike Zeshan. Sign Language Typology Series No. 1. Nijmegen: Ishara Press, 2006. ISBN-10: 90-8656-001-6; ISBN-13: 978-90-8656-001-1. © Ishara Press, Stichting DEF, Wundtlaan 1, 6525XD Nijmegen, The Netherlands. Fax: +31-24-3521213. http://ishara.def-intl.org. Cover design: Sibaji Panda. Printed in the Netherlands. First published 2006. Catalogue copy of this book available at Depot van Nederlandse Publicaties, Koninklijke Bibliotheek, Den Haag (www.kb.nl/depot). To the deaf pioneers in developing countries who have inspired all my work. -

On the Status of the Icelandic Language and Icelandic Sign Language

No. 61/2011 7 June 2011 Act on the status of the Icelandic language and Icelandic sign language. THE EXECUTANTS OF THE POWERS OF THE PRESIDENT OF ICELAND under Article 8 of the Constitution, the Prime Minister, the Speaker of the Althingi and the President of the Supreme Court, make known: the Althingi has passed this Act, and we confirm it by our signatures: Article 1 National language – official language. Icelandic is the national language of the Icelandic people and the official language in Iceland. Article 2 The Icelandic language. The national language is the common language of the people of Iceland. The government authorities shall ensure that it is possible to use it in all areas of the life of the nation. Everyone who is resident in Iceland shall have the opportunity of using Icelandic for general participation in the life of the Icelandic nation as provided for in further detail in separate legislation. Article 3 Icelandic sign language. Icelandic sign language is the first language of those who have to rely on it for expression and communication, and of their children. The government authorities shall nurture and support it. All those who need to use sign language shall have the opportunity to learn and use Icelandic sign language as soon as their language acquisition process begins, or from the time when deafness, hearing impairment or deaf-blindness is diagnosed. Their immediate family members shall have the same right. Article 4 Icelandic Braille. Icelandic Braille is the first written language of those who have to rely on it for expression and communication. -

Passenger Information Using a Sign Language Avatar Individual Travel Assistance for Passengers with Special Needs in Public Transport

Passenger information using a sign language avatar: individual travel assistance for passengers with special needs in public transport. Keywords: public transport, passenger information, travel assistance, information and communication technology, reduced mobility, accessibility, inclusion. Public transport operators are legally obliged to ensure equal access to transportation services. This includes equal access to information and communication related to those services. Deaf passengers mostly prefer to communicate in sign language. For this reason, the specific needs of deaf and hard-of-hearing passengers still are not adequately addressed, despite the tremendous efforts public transport operators have put into providing accessible communication services to their passengers. This article describes a novel approach to passenger information in sign language based on the automatic translation of natural (written) language text into sign language. This includes the use of a sign language avatar to display the information to deaf and hard-of-hearing passengers. Authors: Lars Schnieder, Georg Tschare. Public transport operators provide real-time passenger information via electronic information systems in order to keep passengers up to date about the current status of the public [...] nature and causes of disruptions [2]. The passenger information system may be used both physically within a transportation hub as well as remotely via mobile devices used by the passengers. [...]tion on the Rights of Persons with Disabilities oblige all ratifying countries to ensure that persons with disabilities have the opportunity to live independently and par[...] -

Is the Icelandic Sign Language an Endangered Language?

Is the Icelandic Sign Language an endangered language? RANNVEIG SVERRISDÓTTIR This article appears in: Endangered languages and the land: Mapping landscapes of multilingualism Proceedings of the 22nd Annual Conference of the Foundation for Endangered Languages (FEL XXII / 2018) Vigdís World Language Centre, Reykjavík, 23–25. August 2018 Editors: Sebastian Drude, Nicholas Ostler, Marielle Moser ISBN: 978-1-9160726-0-2 Cite this article: Sverrisdóttir, Rannveig. 2018. Is the Icelandic Sign Language an endangered language? In S. Drude, N. Ostler & M. Moser (eds.), Endangered languages and the land: Mapping landscapes of multilingualism, Proceedings of FEL XXII/2018 in Reykjavík, 113–114. London: FEL & EL Publishing. Note: this article has not been peer reviewed First published: December 2018 Link to this article: http://www.elpublishing.org/PID/4017 This article is published under a Creative Commons License CC-BY-NC (Attribution-NonCommercial). The licence permits users to use, reproduce, disseminate or display the article provided that the author is attributed as the original creator and that the reuse is restricted to non-commercial purposes i.e. research or educational use. See http://creativecommons.org/licenses/by-nc/4.0/ Foundation for Endangered Languages: http://www.ogmios.org EL Publishing: http://www.elpublishing.org Is the Icelandic Sign Language an endangered language? Rannveig Sverrisdóttir Centre for Sign Language Research, Faculty of Icelandic and Comparative Cultural Studies The University of Iceland • Sæmundargata 2 • 101 Reykjavík • Iceland [[email protected]] Abstract Icelandic Sign Language (ÍTM) is a minority language, only spoken by the Deaf community in Iceland. Origins of the language can be traced back to the 19th century but for more than 100 years the language was banned as other signed languages in the world. -

Sign Language Acronyms

Sign language acronyms. Throughout the Manual, the following abbreviations for sign languages are used (some of which are acronyms based on the name of the sign language used in the respective countries):

- ABSL: Al Sayyid Bedouin Sign Language
- AdaSL: Adamorobe Sign Language (Ghana)
- ASL: American Sign Language
- Auslan: Australian Sign Language
- BSL: British Sign Language
- CSL: Chinese Sign Language
- DGS: German Sign Language (Deutsche Gebärdensprache)
- DSGS: Swiss-German Sign Language (Deutsch-Schweizerische Gebärdensprache)
- DTS: Danish Sign Language (Dansk Tegnsprog)
- FinSL: Finnish Sign Language
- GSL: Greek Sign Language
- HKSL: Hong Kong Sign Language
- HZJ: Croatian Sign Language (Hrvatski Znakovni Jezik)
- IPSL: Indopakistani Sign Language
- Inuit SL: Inuit Sign Language (Canada)
- Irish SL: Irish Sign Language
- Israeli SL: Israeli Sign Language
- ÍTM: Icelandic Sign Language (Íslenskt táknmál)
- KK: Sign Language of Desa Kolok, Bali (Kata Kolok)
- KSL: Korean Sign Language
- LIS: Italian Sign Language (Lingua dei Segni Italiana)
- LIU: Jordanian Sign Language (Lughat il-Ishaara il-Urdunia)
- LSA: Argentine Sign Language (Lengua de Señas Argentina)
- Libras: Brazilian Sign Language (Língua de Sinais Brasileira)
- LSC: Catalan Sign Language (Llengua de Signes Catalana)
- LSCol: Colombian Sign Language (Lengua de Señas Colombiana)
- LSE: Spanish Sign Language (Lengua de Signos Española)
- LSF: French Sign Language (Langue des Signes Française)
- LSQ: Quebec Sign Language (Langue des Signes Québécoise)
- NGT: Sign Language of the Netherlands (Nederlandse Gebarentaal)
- NicSL: Nicaraguan Sign Language
- NS: Japanese Sign Language (Nihon Syuwa)
- NSL: Norwegian Sign Language
- NZSL: New Zealand Sign Language

DOI 10.1515/9781501511806-003, © 2017 Josep Quer, Carlo Cecchetto, Caterina Donati, Carlo Geraci, Meltem Kelepir, Roland Pfau, and Markus Steinbach, published by De Gruyter. -

Sign Language Legislation in the European Union

Sign Language Legislation in the European Union. Mark Wheatley & Annika Pabsch. European Union of the Deaf, Brussels, Belgium. All rights reserved. No part of this book may be reproduced or transmitted by any person or entity, including internet search engines or retailers, in any form or by any means, electronic or mechanical, including photocopying, recording, scanning or by any information storage and retrieval system without the prior written permission of the authors. ISBN 978-90-816-3390-1. © European Union of the Deaf, September 2012. Printed at Brussels, Belgium. Design: Churchill’s I/S, www.churchills.dk. This publication was sponsored by Significan’t. Significan’t is a (Deaf and Sign Language led) social business that was established in 2003 and its Managing Director, Jeff McWhinney, was the CEO of the British Deaf Association when it secured a verbal recognition of BSL as one of the UK's official languages by a Minister of the UK Government. SignVideo is committed to delivering the best service and support to its customers. Today SignVideo provides immediate access to high quality video relay service and video interpreters for health, public and voluntary services, transforming access and career prospects for Deaf people in employment and empowering Deaf entrepreneurs in their own businesses. www.signvideo.co.uk. Contents: Welcome message by EUD President Berglind Stefánsdóttir; Foreword by Dr Ádám Kósa, MEP -

Jutta Ransmayr Monolingual Country? Multilingual Society

Jutta Ransmayr. Monolingual country? Multilingual society. Aspects of language use in public administration in Austria: official languages; languages spoken in Austria; numbers of speakers.

Federal Constitutional Law, Art. 8: (1) German is the official language of the Republic without prejudice to the rights provided by Federal law for linguistic minorities. (2) The Republic of Austria (the Federation, Länder and municipalities) is committed to its linguistic and cultural diversity which has evolved in the course of time and finds its expression in the autochthonous ethnic groups. The language and culture, continued existence and protection of these ethnic groups shall be respected, safeguarded and promoted. (3) The Austrian Sign Language (Österreichische Gebärdensprache, ÖGS) is a language in its own right, recognized in law. For details, see the relevant legal provisions.

Official language(s) of the Republic of Austria: 1. German; 2. Languages of the Austrian autochthonous ethnic groups; 3. Austrian sign language.

Resident population – everyday language – nationality (2001)

| Everyday language | Resident population (total: 8.032.926) | Austrian citizens (total: 7.322.000) |
| --- | --- | --- |
| German | 7.115.780 (88,58%) | 6.991.388 (95,48%) |
| Languages of the Austrian autochthonous ethnic groups | 119.667 (1,49%) | 82.522 (1,13%) |
| Austrian sign language | approx. 10.000 | |
| Languages of the former Yugoslav states | 348.629 (4,34%) | 41.944 (0,57%) |
| Turkish, Kurdish | 185.578 (2,31%) | 61.167 (0,84%) |
| World languages | 79.514 (0,99%) | 43.469 (0,59%) |

Languages of resident population 2001 in % German languages of autochthonous -

Mental Space Theory and Icelandic Sign Language

The ITB Journal Volume 9 Issue 1 Article 2 2008 Mental Space Theory and Icelandic Sign Language Gudny Bjork Thorvaldsdottir Follow this and additional works at: https://arrow.tudublin.ie/itbj Part of the Linguistics Commons Recommended Citation Thorvaldsdottir, Gudny Bjork (2008) "Mental Space Theory and Icelandic Sign Language," The ITB Journal: Vol. 9: Iss. 1, Article 2. doi:10.21427/D7NT71 Available at: https://arrow.tudublin.ie/itbj/vol9/iss1/2 This Article is brought to you for free and open access by the Ceased publication at ARROW@TU Dublin. It has been accepted for inclusion in The ITB Journal by an authorized administrator of ARROW@TU Dublin. For more information, please contact [email protected], [email protected]. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License ITB Journal Mental Space Theory and Icelandic Sign Language Gudny Bjork Thorvaldsdottir Centre for Deaf Studies, School of Linguistic, Speech and Communication Sciences, Trinity College Dublin 1. Introduction Signed languages are articulated in space and this is why the use of space is one of their most important features. They make use of 3-dimensional space and the way the space is organised during discourse is grammatically and semantically significant (Liddell 1990, Engberg-Pedersen 1993). The Theory of Mental Spaces (Fauconnier 1985) has been applied to signed languages and proves to be an especially good method when it comes to conceptual understanding of various aspects of signed languages. This paper is based on an M. Phil (Linguistics) dissertation submitted to University of Dublin, Trinity College, in 2007 and discusses how Mental Space Theory may be applied to Icelandic Sign Language (ÍTM). -

Icelandic Sign Language [icl] (A Language of Iceland)

“Icelandic Sign Language [icl] (A language of Iceland) • Alternate Names: Íslenskt Táknmál, ÍTM • Population: 300 (2010 The Ministry of Education, Science and Culture). 250 (2014 EUD). 1,400 (2014 IMB). • Location: Mainly in the Reykjavik area. • Language Status: 6b (Threatened). Recognized language (2011, Act. No. 61). • Dialects: None known. Significant mutual intelligibility between Icelandic Sign Language and Danish Sign Language [dsl] (Brynjólfsdóttir et al. 2012). Based on Danish Sign Language [dsl]. Until 1910, deaf children were sent to school in Denmark; but the languages have diverged since then (Aldersson and McEntee-Atalianis 2007). Lexical similarity: 66% with Danish Sign Language [dsl] (Aldersson and McEntee-Atalianis 2007). Fingerspelling system similar to French Sign Language [fsl]. • Typology: SVO; One-handed fingerspelling. • Language Use: Used natively by hearing children of Deaf parents and as L2 by many other hearing people (2014 R. Sverrisdóttir). The language is taught as a subject within one school in the language area. The language of introduction is Icelandic [isl] mixed with ÍTM. Home, school (mixed language use), media, community. Mostly adults. Neutral attitudes. Most are bilingual in Icelandic [isl], mostly written. • Language Development: New media. Theater. TV. Dictionary. • Other Comments: 27 sign language interpreters (2014 EUD). Committee on national sign language. Research institute. Signed interpretation provided for college students. Instruction for parents of deaf children. The Communication Centre for the Deaf and Hard of Hearing provides interpreter services, ÍTM courses and consultation for families of deaf children. Interpreter training, ÍTM grammar and Deaf studies are taught at the University of Iceland. Christian (Protestant).” Lewis, M. Paul, Gary F. Simons, and Charles D. -

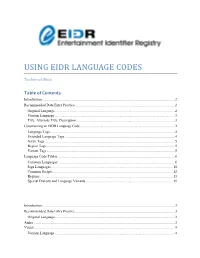

Using EIDR Language Codes

USING EIDR LANGUAGE CODES. Technical Note. Table of Contents: Introduction; Recommended Data Entry Practice; Original Language; Version Language; Title, Alternate Title, Description; Constructing an EIDR Language Code; Language Tags; Extended Language Tags; Script Tags; Region Tags -

MA Thesis Bayt Al-Hikmah

MA thesis, Religious Studies. Bayt al-Hikmah – House of Wisdom: The future of Islam and Muslims in Iceland. Identity Construction and Solidarity through Interculturalism, Communicative Dialogue and Mutual Understanding. Halldór Nikulás Lárusson. Kt.: 070854-2369. Supervisor: Magnús Þorkell Bernharðsson. June 2021. University of Iceland, School of Humanities, Religious Studies. Abstract: The ethnic isolation of Muslims is a growing problem in Europe, where the tendency is to define all Muslims together as one homogeneous group. This study shows that the Jihadist interpretation of Islam, with the serious consequences that follow from it, is the main reason for this social demarcation of Muslims. Therein lies the problem: not in Islam as a religion, but in a particular interpretation of it and in the reactions to that interpretation. Everything indicates that extremist groups' interpretation of Islam is far from an accurate representation of the faith. The problems in relations between continental Europe and Muslims do not apply to Iceland, but this shift toward segregation should be a lesson for the country. The research data confirm that this need not be a problem. The difficult integration of Muslims in Europe stems primarily from a) the social demarcation of Muslims, which is based on the formation of a particular stereotype, and b) the lack of understanding and the inability of multiculturalism to deal with the spiritual culture of Muslims and their desire for a religious life in a secular society. Europe is therefore now looking to interculturalism (rendered in Icelandic by the author as sammenningarstefna) for help. -

Applying Lexicostatistical Methods to Sign Languages: How Not to Delineate Sign Language Varieties

Palfreyman, Nick (2014) Applying lexicostatistical methods to sign languages: How not to delineate sign language varieties. Unpublished paper available from clok.uclan.ac.uk/37838. Nick Palfreyman, International Institute for Sign Languages and Deaf Studies, University of Central Lancashire. The reliability and general efficacy of lexicostatistical methods have been called into question by many spoken language linguists, some of whom are vociferous in expressing their concerns. Dixon (1997) and Campbell (2004) go as far as to reject the validity of lexicostatistics entirely, and Dixon cites several publications in support of his argument that lexicostatistics has been ‘decisively discredited’, including Hoijer (1956), Arndt (1959), Bergsland and Vogt (1962), Teeter (1963), Campbell (1977) and Embleton (1992). In the field of sign language research, however, some linguists continue to produce lexicostatistical studies that delineate sign language varieties along the language-dialect continuum. Papers referring to lexicostatistical methods continue to be submitted to conferences on sign language linguistics, suggesting that these methods are – in some corners of the field, at least – as popular as ever. Given the ongoing popularity of lexicostatistical methods with some sign language linguists, it is necessary to be as clear as possible about how these methods may generate misleading results when applied to sign language varieties, which is the central aim of this paper. Furthermore, in several cases, lexicostatistical methods seem to have been deployed for a quite different purpose – that of establishing mutual intelligibility – but the suitability of lexicostatistical methods for such a purpose has not been openly addressed, and this is dealt with in Section 4.