Architecture Basics

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Benchmarking the Intel FPGA SDK for OpenCL Memory Interface

The Memory Controller Wall: Benchmarking the Intel FPGA SDK for OpenCL Memory Interface

Hamid Reza Zohouri*†1, Satoshi Matsuoka*‡
*Tokyo Institute of Technology, †Edgecortix Inc. Japan, ‡RIKEN Center for Computational Science (R-CCS)
{zohour.h.aa@m, matsu@is}.titech.ac.jp

Abstract—Supported by their high power efficiency and recent advancements in High Level Synthesis (HLS), FPGAs are quickly finding their way into HPC and cloud systems. Large amounts of work have been done so far on loop and area optimizations for different applications on FPGAs using HLS. However, a comprehensive analysis of the behavior and efficiency of the memory controller of FPGAs is missing in literature, which becomes even more crucial when the limited memory bandwidth of modern FPGAs compared to their GPU counterparts is taken into account. In this work, we will analyze the memory interface generated by Intel FPGA SDK for OpenCL with different configurations for input/output arrays, vector size, interleaving, kernel programming model, on-chip channels, operating frequency, padding, and multiple types of overlapped blocking. Our results point to multiple shortcomings in the memory controller of Intel FPGAs, especially with respect to memory access alignment, that can hinder the programmer's [...]

[...] efficiency on Intel FPGAs with different configurations for input/output arrays, vector size, interleaving, kernel programming model, on-chip channels, operating frequency, padding, and multiple types of blocking.

• We outline one performance bug in Intel's compiler, and multiple deficiencies in the memory controller, leading to significant loss of memory performance for typical applications. In some of these cases, we provide work-arounds to improve the memory performance.

II. METHODOLOGY

A. Memory Benchmark Suite

For our evaluation, we develop an open-source benchmark suite called FPGAMemBench, available at https://github.com/zohourih/FPGAMemBench.

Understanding Performance Numbers in Integrated Circuit Design Oprecomp Summer School 2019, Perugia Italy 5 September 2019

Understanding performance numbers in Integrated Circuit Design
Oprecomp summer school 2019, Perugia Italy, 5 September 2019
Frank K. Gürkaynak, [email protected]
Integrated Systems Laboratory

Outline: Introduction, Cost, Design Flow, Area, Speed, Area/Speed Trade-offs, Power, Conclusions

Who Am I?
• Born in Istanbul, Turkey
• Studied and worked at:
  – Istanbul Technical University, Istanbul, Turkey
  – EPFL, Lausanne, Switzerland
  – Worcester Polytechnic Institute, Worcester MA, USA
• Since 2008: Integrated Systems Laboratory, ETH Zurich
  – Director, Microelectronics Design Center
  – Senior Scientist, group of Prof. Luca Benini
• Interests:
  – Digital Integrated Circuits
  – Cryptographic Hardware Design
  – Design Flows for Digital Design
  – Processor Design
  – Open Source Hardware

What Will We Discuss Today?
• Introduction
  – Cost Structure of Integrated Circuits (ICs)
• Measuring performance of ICs
  – Why is it difficult? EDA tools should give us a number
• Area
  – How do people report area? Is that fair?
• Speed
  – How fast does my circuit actually work?
• Power
  – These days much more important, but also much harder to get right

System Design Requirements
• Functionality determines what the system will do
• The performance establishes the solution space
• Finally the cost sets a limit to what is possible

A Modern Primer on Processing in Memory

Onur Mutlu (a,b), Saugata Ghose (b,c), Juan Gómez-Luna (a), Rachata Ausavarungnirun (d)
SAFARI Research Group
(a) ETH Zürich, (b) Carnegie Mellon University, (c) University of Illinois at Urbana-Champaign, (d) King Mongkut's University of Technology North Bangkok

Abstract

Modern computing systems are overwhelmingly designed to move data to computation. This design choice goes directly against at least three key trends in computing that cause performance, scalability and energy bottlenecks: (1) data access is a key bottleneck as many important applications are increasingly data-intensive, and memory bandwidth and energy do not scale well, (2) energy consumption is a key limiter in almost all computing platforms, especially server and mobile systems, (3) data movement, especially off-chip to on-chip, is very expensive in terms of bandwidth, energy and latency, much more so than computation. These trends are especially severely felt in the data-intensive server and energy-constrained mobile systems of today. At the same time, conventional memory technology is facing many technology scaling challenges in terms of reliability, energy, and performance. As a result, memory system architects are open to organizing memory in different ways and making it more intelligent, at the expense of higher cost. The emergence of 3D-stacked memory plus logic, the adoption of error correcting codes inside the latest DRAM chips, the proliferation of different main memory standards and chips specialized for different purposes (e.g., graphics, low-power, high bandwidth, low latency), and the necessity of designing new solutions to serious reliability and security issues, such as the RowHammer phenomenon, are evidence of this trend.

EE 434 Lecture 2

EE 330 Lecture 6
• PU and PD Networks
• Complex Logic Gates
• Pass Transistor Logic
• Improved Switch-Level Model
• Propagation Delay

Review from Last Time: MOS Transistor, Qualitative Discussion of n-channel Operation
[Figure: n-channel MOSFET (source, gate, drain, bulk) and its equivalent switch circuit for G = 0 and G = 1]
• Source assumed connected to (or close to) ground
• VGS = 0 denoted as Boolean gate voltage G = 0
• VGS = VDD denoted as Boolean gate voltage G = 1
• Boolean G is relative to ground potential
This is the first model we have for the n-channel MOSFET! Ideal switch-level model

Review from Last Time: MOS Transistor, Qualitative Discussion of p-channel Operation
[Figure: p-channel MOSFET (source, gate, drain, bulk) and its equivalent switch circuit for G = 0 and G = 1]
• Source assumed connected to (or close to) positive VDD
• VGS = 0 denoted as Boolean gate voltage G = 1
• VGS = -VDD denoted as Boolean gate voltage G = 0
• Boolean G is relative to ground potential
This is the first model we have for the p-channel MOSFET!

Review from Last Time: Logic Circuits

Inverter truth table
A B
0 1
1 0

NOR gate truth table
A B C
0 0 1
0 1 0
1 0 0
1 1 0

NAND gate truth table
A B C
0 0 1
0 1 1
1 0 1
1 1 0

Logic Circuits
Approach can be extended to arbitrary number of inputs
[Figure: n-input NOR gate and n-input NAND gate with inputs A1, A2, ..., An and output F]

Complete Logic Family
Family of n-input NOR gates forms
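The ideal switch-level model reviewed in the excerpt above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the pull-up/pull-down arrangement (p-channel devices in parallel and n-channel devices in series for NAND, the reverse for NOR) is the standard static CMOS topology, assumed here because the slide figures did not survive extraction.

```python
from itertools import product


def nmos_on(g: int) -> bool:
    """Ideal n-channel switch: conducts when the Boolean gate value is 1."""
    return g == 1


def pmos_on(g: int) -> bool:
    """Ideal p-channel switch: conducts when the Boolean gate value is 0."""
    return g == 0


def resolve(pull_up: bool, pull_down: bool) -> int:
    """Output is 1 if only the pull-up network conducts, 0 if only the pull-down does."""
    if pull_up == pull_down:
        raise ValueError("output node is floating or shorted")
    return 1 if pull_up else 0


def inverter(a: int) -> int:
    return resolve(pmos_on(a), nmos_on(a))


def nand(*inputs: int) -> int:
    # Assumed topology: p-channel devices in parallel, n-channel devices in series.
    return resolve(any(pmos_on(x) for x in inputs),
                   all(nmos_on(x) for x in inputs))


def nor(*inputs: int) -> int:
    # Assumed topology: p-channel devices in series, n-channel devices in parallel.
    return resolve(all(pmos_on(x) for x in inputs),
                   any(nmos_on(x) for x in inputs))


def truth_table(gate, n: int):
    """Enumerate all n-bit input combinations with the gate output appended."""
    return [(*bits, gate(*bits)) for bits in product((0, 1), repeat=n)]


if __name__ == "__main__":
    for name, gate, n in (("Inverter", inverter, 1), ("NOR", nor, 2), ("NAND", nand, 2)):
        print(name, truth_table(gate, n))
```

Because `nand` and `nor` accept any number of inputs, the same sketch covers the n-input gates mentioned in the slides, for example `nor(0, 0, 0)` or `nand(1, 1, 1, 1)`.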

CSCE 5730 Digital CMOS VLSI Design

Lecture 2: Overview
CSCE 5730 Digital CMOS VLSI Design
Instructor: Saraju P. Mohanty, Ph.D.

NOTE: The figures, text, etc. included in the slides are borrowed from various books, websites, authors' pages, and other sources for academic purposes only. The instructor does not claim any originality.

Lecture Outline
• Historical development of computers
• Introduction to a basic digital computer
• Five classic components of a computer
• Microprocessor
• IC design abstraction level
• Intel processor family
• Developmental trends of ICs
• Moore's Law

Introduction to Digital Circuits

What is a digital computer?
A fast electronic machine that accepts digitized input information, processes it according to a list of internally stored instructions, and produces the resulting output information.
List of instructions → Computer program
Internal storage → Memory

Different Types and Forms of Computer
• Personal Computers (Desktop PCs)
• Notebook computers (Laptop computers)
• Handheld PCs
• Pocket PCs
• Workstations (SGI, HP, IBM, SUN)
• ATM (Embedded systems)
• Supercomputers

Five classic components of a computer
[Figure: computer block diagram showing processor (control, datapath), memory, and devices (input, output)]
(1) Input, (2) Output, (3) Datapath, (4) Controller, and (5) Memory

What is a microprocessor?
• A microprocessor is an integrated circuit (IC) built on a tiny piece of silicon. It contains thousands, or even millions, of transistors, which are interconnected via superfine traces of aluminum. The transistors work together to store and manipulate data so that the microprocessor can perform a wide variety of useful functions.

Chap01: Computer Abstractions and Technology

CHAPTER 1 Computer Abstractions and Technology

1.1 Introduction
1.2 Eight Great Ideas in Computer Architecture
1.3 Below Your Program
1.4 Under the Covers
1.5 Technologies for Building Processors and Memory
1.6 Performance
1.7 The Power Wall
1.8 The Sea Change: The Switch from Uniprocessors to Multiprocessors
1.9 Real Stuff: Benchmarking the Intel Core i7
1.10 Fallacies and Pitfalls
1.11 Concluding Remarks
1.12 Historical Perspective and Further Reading
1.13 Exercises

CMPS290 Class Notes (Chap01) by Kuo-pao Yang

1.1 Introduction

Modern computer technology requires professionals of every computing specialty to understand both hardware and software.

Classes of Computing Applications and Their Characteristics

• Personal computers
  o A computer designed for use by an individual, usually incorporating a graphics display, a keyboard, and a mouse.
  o Personal computers emphasize delivery of good performance to single users at low cost and usually execute third-party software.
  o This class of computing, which is only about 35 years old, drove the evolution of many computing technologies.
• Server computers
  o A computer used for running larger programs for multiple users, often simultaneously, and typically accessed only via a network.
  o Servers are built from the same basic technology as desktop computers, but provide for greater computing, storage, and input/output capacity.
• Supercomputers
  o A class of computers with the highest performance and cost.
  o Supercomputers consist of tens of thousands of processors and many terabytes of memory, and cost tens to hundreds of millions of dollars.

Advanced X86

Advanced x86: BIOS and System Management Mode Internals
Input/Output
Xeno Kovah && Corey Kallenberg, LegbaCore, LLC

All materials are licensed under a Creative Commons “Share Alike” license. http://creativecommons.org/licenses/by-sa/3.0/
Attribution condition: You must indicate that derivative work "Is derived from John Butterworth & Xeno Kovah’s ’Advanced Intel x86: BIOS and SMM’ class posted at http://opensecuritytraining.info/IntroBIOS.html”

Input/Output (I/O)
I/O, I/O, it’s off to work we go…

2 Types of I/O
1. Memory-Mapped I/O (MMIO)
2. Port I/O (PIO)
  – Also called Isolated I/O or port-mapped IO (PMIO)
• X86 systems employ both types of I/O
• Both methods map peripheral devices
• Address space of each is accessed using instructions
  – typically requires Ring 0 privileges
  – Real-Addressing mode has no implementation of rings, so no privilege escalation needed
• I/O ports can be mapped so that they appear in the I/O address space or the physical-memory address space (memory mapped I/O) or both
  – Example: PCI configuration space in a PCIe system – both memory-mapped and accessible via port I/O. We’ll learn about that in the next section
• The I/O Controller Hub contains the registers that are located in both the I/O Address Space and the Memory-Mapped address space

Memory-Mapped I/O
• Devices can also be mapped to the physical address space instead of (or in addition to) the I/O address space
• Even though it is a hardware device on the other end of that access request, you can operate on it like it's memory:
  – Any of the processor’s instructions

Digital Logic and Design (Course Code: EE222) Lecture 6: Logic Families

Indian Institute of Technology Jodhpur, Year 2018‐2019
Digital Logic and Design (Course Code: EE222)
Lecture 6: Logic Families
Course Instructor: Shree Prakash Tiwari
Email: sptiwari@iitj.ac.in
Webpage: http://home.iitj.ac.in/~sptiwari/
Course related documents will be uploaded on http://home.iitj.ac.in/~sptiwari/DLD/
Note: The information provided in the slides is taken from Digital Electronics textbooks (including Mano & Ciletti) and various other resources from the internet, for teaching/academic use only.

Overview
• Early families (DL, RTL)
• TTL
• Evolution of TTL family
• CMOS family and its evolution

Logic families

Diode Logic (DL)
• simplest; does not scale
• NOT not possible (needs an active element)

Resistor-Transistor Logic (RTL)
• replace diode switch with a transistor switch
• can be cascaded
• large power draw

Diode-Transistor Logic (DTL)
• essentially diode logic with transistor amplification
• reduced power consumption
• faster than RTL
[Figure: DL AND gate, saturating inverter]

Logic Families
• The bipolar transistor as a logical switch

TTL: Bipolar Transistor-Transistor Logic (TTL)
• First introduced in 1964 (Texas Instruments)
• TTL has shaped digital technology in many ways
• Standard TTL family (e.g. 7400) is obsolete
• Newer TTL families used (e.g. 74ALS00)

Distinct features
• Multi‐emitter transistors

TTL: A standard TTL NAND gate

TTL: A standard TTL NAND gate with open collector output

TTL evolution: Schottky series (74LS00) TTL
• A major

Motorola MPC107 PCI Bridge/Integrated Memory Controller

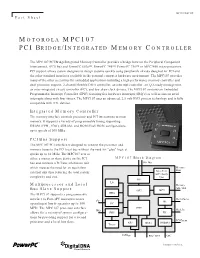

MPC107FACT/D Fact Sheet
MOTOROLA MPC107 PCI BRIDGE/INTEGRATED MEMORY CONTROLLER

The MPC107 PCI Bridge/Integrated Memory Controller provides a bridge between the Peripheral Component Interconnect (PCI) bus and PowerPC 603e™, PowerPC 740™, PowerPC 750™ or MPC7400 microprocessors. PCI support allows system designers to design systems quickly using peripherals already designed for PCI and the other standard interfaces available in the personal computer hardware environment. The MPC107 provides many of the other necessities for embedded applications including a high-performance memory controller and dual processor support, 2-channel flexible DMA controller, an interrupt controller, an I2O-ready message unit, an inter-integrated circuit controller (I2C), and low skew clock drivers. The MPC107 contains an Embedded Programmable Interrupt Controller (EPIC) featuring five hardware interrupts (IRQs) as well as sixteen serial interrupts along with four timers. The MPC107 uses an advanced, 2.5-volt HiP3 process technology and is fully compatible with TTL devices.

Integrated Memory Controller
The memory interface controls processor and PCI interactions to main memory. It supports a variety of programmable timings for DRAM (FPM, EDO), SDRAM, and ROM/Flash ROM configurations, at speeds up to 100 MHz.

PCI Bus Support
The MPC107 PCI interface is designed to connect the processor and memory buses to the PCI local bus without the need for "glue" logic at speeds up to 66 MHz. The MPC107 acts as either a master or slave device on the PCI bus and contains a PCI bus arbitration unit, which reduces the need for an equivalent external unit, thus reducing the total system complexity and cost.

[Figure: MPC107 Block Diagram (60x Bus, Memory Data, Data Path, ECC / Parity)]

Chapter6-6.Pdf

MEMS1082 Chapter 6 Digital Circuit 6-6
Department of Mechanical Engineering

TTL and CMOS ICs: TTL and CMOS output circuit, totem pole configuration

TTL output: When the upper transistor is forward biased and the bottom transistor is off, the output is high. The resistor, transistor, and diode drop the actual output voltage to a value typically about 3.4 V. When the lower transistor is forward biased and the top transistor is off, the output is low. The TTL device sources current when there is a high output and sinks current when the output is low. The TTL device dissipates power continuously regardless of whether the output is high or low.

CMOS output: When the input is high, the p-type transistor (top) is off and the n-type is on, so the output is pulled low. The device sinks current. When the input is low, the n-type transistor (bottom) is off and the p-type is on, so the output is pulled high. The device sources current.

The MOSFET and MOSFET switching states
There are presently two general types of MOSFETs: depletion and enhancement. MOS digital ICs use enhancement MOSFETs exclusively. The direction of the arrow indicates either P- or N-channel. The symbols show a broken line between the source and drain to indicate that there is normally no conducting channel between these electrodes. The symbol also shows a separation between the gate and the other terminals to indicate the very high resistance (typically around 10^12 Ω) between the gate and channel.

N-MOS Inverter

N-MOS NAND Gate

N-MOS NOR Gate

CMOS Logic
The complementary MOS (CMOS) logic family uses both P- and N-channel MOSFETs in the same circuit to realize several advantages over the P-MOS and N-MOS families.

Photovoltaic Couplers for MOSFET Drive for Relays

Photocoupler Application Notes
Basic Electrical Characteristics and Application Circuit Design of Photovoltaic Couplers for MOSFET Drive for Relays

Outline: Photovoltaic-output photocouplers (photovoltaic couplers), which incorporate a photodiode array as an output device, are commonly used in combination with a discrete MOSFET(s) to form a semiconductor relay. This application note discusses the electrical characteristics and application circuits of photovoltaic-output photocouplers.

© 2019 Toshiba Electronic Devices & Storage Corporation, Rev. 1.0, 2019-04-25

Table of Contents
1. What is a photovoltaic-output photocoupler?
1.1 Structure of a photovoltaic-output photocoupler
1.2 Principle of operation of a photovoltaic-output photocoupler
1.3 Basic usage of photovoltaic-output photocouplers
1.4 Advantages of PV+MOSFET combinations
1.5 Types of photovoltaic-output photocouplers
2. Major electrical characteristics and behavior of photovoltaic-output photocouplers
2.1 VOC-IF characteristics
2.2 VOC-Ta characteristic

COSC 6385 Computer Architecture - Multi-Processors (IV) Simultaneous Multi-Threading and Multi-Core Processors Edgar Gabriel Spring 2011

COSC 6385 Computer Architecture - Multi-Processors (IV)
Simultaneous multi-threading and multi-core processors
Edgar Gabriel, Spring 2011

Moore’s Law
• Long-term trend in the number of transistors per integrated circuit
• Number of transistors doubles every ~18 months
Source: http://en.wikipedia.org/wki/Images:Moores_law.svg

What do we do with that many transistors?
• Optimizing the execution of a single instruction stream through
  – Pipelining
    • Overlap the execution of multiple instructions
    • Example: all RISC architectures; Intel x86 underneath the hood
  – Out-of-order execution:
    • Allow instructions to overtake each other in accordance with code dependencies (RAW, WAW, WAR)
    • Example: all commercial processors (Intel, AMD, IBM, SUN)
  – Branch prediction and speculative execution:
    • Reduce the number of stall cycles due to unresolved branches
    • Example: (nearly) all commercial processors

What do we do with that many transistors? (II)
  – Multi-issue processors:
    • Allow multiple instructions to start execution per clock cycle
    • Superscalar (Intel x86, AMD, …) vs. VLIW architectures
  – VLIW/EPIC architectures:
    • Allow compilers to indicate independent instructions per issue packet
    • Example: Intel Itanium series
  – Vector units:
    • Allow for the efficient expression and execution of vector operations
    • Example: SSE, SSE2, SSE3, SSE4 instructions

Limitations of optimizing a single instruction
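The Moore's Law slide in the excerpt above quotes a doubling roughly every 18 months. As a rough illustration of what that rate implies, the sketch below projects a transistor count forward under a fixed doubling period. The function name and the starting count of one million transistors are hypothetical, chosen only for the example, and the model is a plain exponential rather than a fit to real process data.

```python
def projected_transistors(initial_count: float,
                          months: float,
                          doubling_period_months: float = 18.0) -> float:
    """Project a transistor count forward, assuming it doubles every fixed period."""
    return initial_count * 2.0 ** (months / doubling_period_months)


if __name__ == "__main__":
    start = 1_000_000  # hypothetical starting count, for illustration only
    for years in (3, 6, 10):
        count = projected_transistors(start, years * 12)
        print(f"after {years:2d} years: {count:,.0f} transistors")
```

Under this assumption the count quadruples every three years and grows by roughly a factor of 100 per decade, which is the growth the following slides ask how to spend.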