Benchmarking the Intel FPGA SDK for OpenCL Memory Interface

Recommended publications

BCL: a Cross-Platform Distributed Data Structures Library

BCL: A Cross-Platform Distributed Data Structures Library. Benjamin Brock, Aydın Buluç, Katherine Yelick. University of California, Berkeley; Lawrence Berkeley National Laboratory. {brock,abuluc,yelick}@cs.berkeley.edu

ABSTRACT: One-sided communication is a useful paradigm for irregular parallel applications, but most one-sided programming environments, including MPI’s one-sided interface and PGAS programming languages, lack application-level libraries to support these applications. We present the Berkeley Container Library, a set of generic, cross-platform, high-performance data structures for irregular applications, including queues, hash tables, Bloom filters and more. BCL is written in C++ using an internal DSL called the BCL Core that provides one-sided communication primitives such as remote get and remote put operations. The BCL Core has backends for MPI, OpenSHMEM, GASNet-EX, and UPC++, allowing BCL data structures to be used natively in programs written using any of these programming environments.

[...] high-performance computing, including several using the Partitioned Global Address Space (PGAS) model: Titanium, UPC, Coarray Fortran, X10, and Chapel [9, 11, 12, 25, 29, 30]. These languages are especially well-suited to problems that require asynchronous one-sided communication, or communication that takes place without a matching receive operation or outside of a global collective. However, PGAS languages lack the kind of high level libraries that exist in other popular programming environments. For example, high-performance scientific simulations written in MPI can leverage a broad set of numerical libraries for dense or sparse matrices, or for structured, unstructured, or adaptive meshes. PGAS languages can sometimes use those numerical libraries, but are missing the data structures that are important in some of the most irregular [...]

Advances, Applications and Performance of The

ADVANCES, APPLICATIONS AND PERFORMANCE OF THE GLOBAL ARRAYS SHARED MEMORY PROGRAMMING TOOLKIT. Jarek Nieplocha¹, Bruce Palmer¹, Vinod Tipparaju¹, Manojkumar Krishnan¹, Harold Trease¹, Edoardo Aprà²

Abstract: This paper describes capabilities, evolution, performance, and applications of the Global Arrays (GA) toolkit. GA was created to provide application programmers with an interface that allows them to distribute data while maintaining the type of global index space and programming syntax similar to that available when programming on a single [...]

1 Introduction

The two predominant classes of programming models for MIMD concurrent computing are distributed memory and shared memory. Both shared memory and distributed memory models have advantages and shortcomings. Shared memory model is much easier to use but it ignores data locality/placement. Given the hierarchical nature of the memory subsystems in modern computers this characteristic can have a negative impact on performance and scalability. Careful code restructuring to increase data reuse and replacing fine grain load/stores with block access to shared data can address the problem and yield performance for shared memory that is competitive with message-passing (Shan and Singh 2000). However, this performance comes at the cost of compromising the ease of use that the shared memory model advertises. Distributed memory models, such as message-passing or one-sided communication, offer performance and scalability but they are difficult to program.

The Global Arrays toolkit (Nieplocha, Harrison, and Littlefield 1994, 1996; Nieplocha et al. 2002a) attempts to offer the best features of both models. It implements a shared-memory programming model in which data locality is managed by the programmer.

A Modern Primer on Processing in Memory

A Modern Primer on Processing in Memory. Onur Mutlu (a, b), Saugata Ghose (b, c), Juan Gómez-Luna (a), Rachata Ausavarungnirun (d). SAFARI Research Group. (a) ETH Zürich, (b) Carnegie Mellon University, (c) University of Illinois at Urbana-Champaign, (d) King Mongkut’s University of Technology North Bangkok

Abstract: Modern computing systems are overwhelmingly designed to move data to computation. This design choice goes directly against at least three key trends in computing that cause performance, scalability and energy bottlenecks: (1) data access is a key bottleneck as many important applications are increasingly data-intensive, and memory bandwidth and energy do not scale well, (2) energy consumption is a key limiter in almost all computing platforms, especially server and mobile systems, (3) data movement, especially off-chip to on-chip, is very expensive in terms of bandwidth, energy and latency, much more so than computation. These trends are especially severely felt in the data-intensive server and energy-constrained mobile systems of today. At the same time, conventional memory technology is facing many technology scaling challenges in terms of reliability, energy, and performance. As a result, memory system architects are open to organizing memory in different ways and making it more intelligent, at the expense of higher cost. The emergence of 3D-stacked memory plus logic, the adoption of error correcting codes inside the latest DRAM chips, proliferation of different main memory standards and chips, specialized for different purposes (e.g., graphics, low-power, high bandwidth, low latency), and the necessity of designing new solutions to serious reliability and security issues, such as the RowHammer phenomenon, are evidence of this trend.

Enabling Efficient Use of UPC and OpenSHMEM PGAS Models on GPU Clusters

Enabling Efficient Use of UPC and OpenSHMEM PGAS Models on GPU Clusters. Presented at GTC ’15 by Dhabaleswar K. (DK) Panda, The Ohio State University. E-mail: [email protected] http://www.cse.ohio-state.edu/~panda

Accelerator Era
• Accelerators are becoming common in high-end system architectures (Top 100, Nov 2014: 28% use accelerators, 57% use NVIDIA GPUs)
• Increasing number of workloads are being ported to take advantage of NVIDIA GPUs
• As they scale to large GPU clusters with high compute density
– higher the synchronization and communication overheads
– higher the penalty
• Critical to minimize these overheads to achieve maximum performance

Parallel Programming Models Overview
[Figure: three abstract machine models, each with processes P1, P2, P3: Shared Memory Model (DSM); Distributed Memory Model (MPI, Message Passing Interface); Partitioned Global Address Space (PGAS), with logical shared memory (Global Arrays, UPC, Chapel, X10, CAF, …)]
• Programming models provide abstract machine models
• Models can be mapped on different types of systems, e.g. Distributed Shared Memory (DSM), MPI within a node, etc.
• Each model has strengths and drawbacks and suits different problems or applications

Outline
• Overview of PGAS models (UPC and OpenSHMEM)
• Limitations in PGAS models for GPU computing
• Proposed Designs and Alternatives
• Performance Evaluation
• Exploiting GPUDirect RDMA

Partitioned Global Address Space (PGAS) Models
• PGAS models, an attractive alternative to traditional message passing
– Simple

Advanced x86

Advanced x86: BIOS and System Management Mode Internals. Input/Output. Xeno Kovah && Corey Kallenberg, LegbaCore, LLC. All materials are licensed under a Creative Commons “Share Alike” license. http://creativecommons.org/licenses/by-sa/3.0/ Attribution condition: You must indicate that derivative work "Is derived from John Butterworth & Xeno Kovah’s ’Advanced Intel x86: BIOS and SMM’ class posted at http://opensecuritytraining.info/IntroBIOS.html”

Input/Output (I/O)
I/O, I/O, it’s off to work we go…

2 Types of I/O
1. Memory-Mapped I/O (MMIO)
2. Port I/O (PIO)
– Also called Isolated I/O or port-mapped IO (PMIO)
• X86 systems employ both types of I/O
• Both methods map peripheral devices
• Address space of each is accessed using instructions
– typically requires Ring 0 privileges
– Real-Addressing mode has no implementation of rings, so no privilege escalation needed
• I/O ports can be mapped so that they appear in the I/O address space or the physical-memory address space (memory mapped I/O) or both
– Example: PCI configuration space in a PCIe system is both memory-mapped and accessible via port I/O. We’ll learn about that in the next section
• The I/O Controller Hub contains the registers that are located in both the I/O Address Space and the Memory-Mapped address space

Memory-Mapped I/O
• Devices can also be mapped to the physical address space instead of (or in addition to) the I/O address space
• Even though it is a hardware device on the other end of that access request, you can operate on it like it's memory:
– Any of the processor’s instructions

Automatic Handling of Global Variables for Multi-Threaded MPI Programs

Automatic Handling of Global Variables for Multi-threaded MPI Programs. Gengbin Zheng, Stas Negara, Celso L. Mendes, Laxmikant V. Kalé (Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA; fgzheng,snegara2,cmendes,[email protected]); Eduardo R. Rodrigues (Institute of Informatics, Federal University of Rio Grande do Sul, Porto Alegre, Brazil; [email protected])

Abstract: Hybrid programming models, such as MPI combined with threads, are one of the most efficient ways to write parallel applications for current machines comprising multi-socket/multi-core nodes and an interconnection network. Global variables in legacy MPI applications, however, present a challenge because they may be accessed by multiple MPI threads simultaneously. Thus, transforming legacy MPI applications to be thread-safe in order to exploit multi-core architectures requires proper handling of global variables. In this paper, we present three approaches to eliminate global variables to ensure thread-safety for an MPI program. These approaches include: (a) a compiler-based refactoring technique, using a Photran-based tool as an example, which automates the source-to-source transformation for programs [...]

[...] necessary to privatize global and static variables to ensure the thread-safety of an application. In high-performance computing, one way to exploit multi-core platforms is to adopt hybrid programming models that combine MPI with threads, where different programming models are used to address issues such as multiple threads per core, decreasing amount of memory per core, load imbalance, etc. When porting legacy MPI applications to these hybrid models that involve multiple threads, thread-safety of the applications needs to be addressed again. Global variables cause no problem with traditional MPI implementations, since each process image contains a separate instance of the variable.

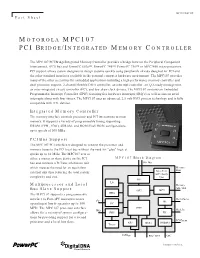

Motorola MPC107 PCI Bridge/Integrated Memory Controller

MPC107FACT/D Fact Sheet. MOTOROLA MPC107 PCI BRIDGE/INTEGRATED MEMORY CONTROLLER

The MPC107 PCI Bridge/Integrated Memory Controller provides a bridge between the Peripheral Component Interconnect (PCI) bus and PowerPC 603e™, PowerPC 740™, PowerPC 750™ or MPC7400 microprocessors. PCI support allows system designers to design systems quickly using peripherals already designed for PCI and the other standard interfaces available in the personal computer hardware environment. The MPC107 provides many of the other necessities for embedded applications including a high-performance memory controller and dual processor support, 2-channel flexible DMA controller, an interrupt controller, an I2O-ready message unit, an inter-integrated circuit controller (I2C), and low skew clock drivers. The MPC107 contains an Embedded Programmable Interrupt Controller (EPIC) featuring five hardware interrupts (IRQs) as well as sixteen serial interrupts along with four timers. The MPC107 uses an advanced, 2.5-volt HiP3 process technology and is fully compatible with TTL devices.

Integrated Memory Controller

The memory interface controls processor and PCI interactions to main memory. It supports a variety of programmable timing supporting DRAM (FPM, EDO), SDRAM, and ROM/Flash ROM configurations, up to speeds of 100 MHz.

PCI Bus Support

The MPC107 PCI interface is designed to connect the processor and memory buses to the PCI local bus without the need for "glue" logic at speeds up to 66 MHz. The MPC107 acts as either a master or slave device on the PCI bus and contains a PCI bus arbitration unit which reduces the need for an equivalent external unit, thus reducing the total system complexity and cost.

[Figure: MPC107 Block Diagram (60x Bus, Memory Data, Data Path, ECC / Parity)]

The Impulse Memory Controller

IEEE TRANSACTIONS ON COMPUTERS, VOL. 50, NO. 11, NOVEMBER 2001. The Impulse Memory Controller. John B. Carter, Member, IEEE, Zhen Fang, Student Member, IEEE, Wilson C. Hsieh, Sally A. McKee, Member, IEEE, and Lixin Zhang, Student Member, IEEE

Abstract: Impulse is a memory system architecture that adds an optional level of address indirection at the memory controller. Applications can use this level of indirection to remap their data structures in memory. As a result, they can control how their data is accessed and cached, which can improve cache and bus utilization. The Impulse design does not require any modification to processor, cache, or bus designs since all the functionality resides at the memory controller. As a result, Impulse can be adopted in conventional systems without major system changes. We describe the design of the Impulse architecture and how an Impulse memory system can be used in a variety of ways to improve the performance of memory-bound applications. Impulse can be used to dynamically create superpages cheaply, to dynamically recolor physical pages, to perform strided fetches, and to perform gathers and scatters through indirection vectors. Our performance results demonstrate the effectiveness of these optimizations in a variety of scenarios. Using Impulse can speed up a range of applications from 20 percent to over a factor of 5. Alternatively, Impulse can be used by the OS for dynamic superpage creation; the best policy for creating superpages using Impulse outperforms previously known superpage creation policies.

Index Terms: Computer architecture, memory systems.

1 INTRODUCTION

Since 1987, microprocessor performance has improved at a rate of 55 percent per year; in contrast, DRAM latencies have improved by only 7 percent per year and DRAM [...]

[...] memory. By giving applications control (mediated by the OS) over the use of shadow addresses, Impulse supports application-specific optimizations that restructure data.

Overview of the Global Arrays Parallel Software Development Toolkit: Introduction to Global Address Space Programming Models

Overview of the Global Arrays Parallel Software Development Toolkit: Introduction to Global Address Space Programming Models. P. Saddayappan², Bruce Palmer¹, Manojkumar Krishnan¹, Sriram Krishnamoorthy¹, Abhinav Vishnu¹, Daniel Chavarría¹, Patrick Nichols¹, Jeff Daily¹. ¹Pacific Northwest National Laboratory, ²Ohio State University

Outline of the Tutorial
• Parallel programming models
• Global Arrays (GA) programming model
• GA Operations
• Writing, compiling and running GA programs
• Basic, intermediate, and advanced calls
• With C and Fortran examples
• GA Hands-on session

Performance vs. Abstraction and Generality
[Figure: scalability plotted against generality for Domain Specific Systems, GA, CAF, MPI, OpenMP, and Autoparallelized C/Fortran90, with the “Holy Grail” marked]

Parallel Programming Models
• Single Threaded
– Data Parallel, e.g. HPF
• Multiple Processes
– Partitioned-Local Data Access: MPI
– Uniform-Global-Shared Data Access: OpenMP
– Partitioned-Global-Shared Data Access: Co-Array Fortran
– Uniform-Global-Shared + Partitioned Data Access: UPC, Global Arrays, X10

High Performance Fortran
• Single-threaded view of computation
• Data parallelism and parallel loops
• User-specified data distributions for arrays
• Compiler transforms HPF program to SPMD program
• Communication optimization critical to performance
• Programmer may not be conscious of communication implications of parallel program

[Code fragments from the slide: HPF$ Independent loops (DO I = 1,N), s=s+1, A(1:100) = B(0:99)+B(2:101)]

Exascale Computing Project -- Software

Exascale Computing Project -- Software. Paul Messina, ECP Director; Stephen Lee, ECP Deputy Director. ASCAC Meeting, Arlington, VA, Crystal City Marriott, April 19, 2017. www.ExascaleProject.org

ECP scope and goals
• Develop applications to tackle a broad spectrum of mission critical problems of unprecedented complexity
• Partner with vendors to develop computer architectures that support exascale applications
• Support national security
• Develop a software stack that is both exascale-capable and usable on industrial & academic scale systems, in collaboration with vendors
• Train a next-generation workforce of computational scientists, engineers, and computer scientists
• Contribute to the economic competitiveness of the nation

ECP has formulated a holistic approach that uses co-design and integration to achieve capable exascale
• Application Development: Science and mission applications
• Software Technology: Scalable and productive software stack
• Hardware Technology: Hardware technology elements
• Exascale Systems: Integrated exascale supercomputers

[Figure: software stack diagram with the layers Applications; Co-Design; Correctness, Visualization, Data Analysis; Programming models, development environment, and runtimes; Math libraries and Frameworks; Tools; System Software, resource management, threading, scheduling, monitoring, and control; Workflows; Resilience; Data management, I/O and file system; Memory and Burst buffer; Node OS, runtimes; Hardware interface]

ECP’s work encompasses applications, system software, hardware technologies and architectures, and workforce

The Global Arrays User Manual

The Global Arrays User Manual. Manojkumar Krishnan, Bruce Palmer, Abhinav Vishnu, Sriram Krishnamoorthy, Jeff Daily, Daniel Chavarria. February 8, 2012

This document is intended to be used with version 5.1 of Global Arrays (Pacific Northwest National Laboratory Technical Report Number PNNL-13130)

DISCLAIMER: This material was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor the United States Department of Energy, nor Battelle, nor any of their employees, MAKES ANY WARRANTY, EXPRESS OR IMPLIED, OR ASSUMES ANY LEGAL LIABILITY OR RESPONSIBILITY FOR THE ACCURACY, COMPLETENESS, OR USEFULNESS OF ANY INFORMATION, APPARATUS, PRODUCT, SOFTWARE, OR PROCESS DISCLOSED, OR REPRESENTS THAT ITS USE WOULD NOT INFRINGE PRIVATELY OWNED RIGHTS.

ACKNOWLEDGMENT: This software and its documentation were produced with United States Government support under Contract Number DE-AC05-76RLO-1830 awarded by the United States Department of Energy. The United States Government retains a paid-up non-exclusive, irrevocable worldwide license to reproduce, prepare derivative works, perform publicly and display publicly by or for the US Government, including the right to distribute to other US Government contractors. December, 2011

Contents
1 Introduction
1.1 Overview
1.2 Basic Functionality
1.3 Programming Model
1.4 Application Guidelines
1.4.1 When to use GA:
1.4.2 When not to use GA
2 Writing, Building and Running GA Programs
2.1 Platform and Library Dependencies
2.1.1 Supported Platforms
2.1.2 Selection of the communication network for ARMCI

Optimizing Thread Throughput for Multithreaded Workloads on Memory Constrained CMPs

Optimizing Thread Throughput for Multithreaded Workloads on Memory Constrained CMPs. Major Bhadauria and Sally A. McKee, Computer Systems Lab, Cornell University, Ithaca, NY, USA. [email protected], [email protected]

ABSTRACT

Multi-core designs have become the industry imperative, replacing our reliance on increasingly complicated micro-architectural designs and VLSI improvements to deliver increased performance at lower power budgets. Performance of these multi-core chips will be limited by the DRAM memory system: we demonstrate this by modeling a cycle-accurate DDR2 memory controller with SPLASH-2 workloads. Surprisingly, benchmarks that appear to scale well with the number of processors fail to do so when memory is accurately modeled. We frequently find that the most efficient configuration is not the one with the most threads. By choosing the most efficient number of threads for each benchmark, average energy delay efficiency improves by a factor of 3.39, and performance improves by 19.7%, on average.

1. INTRODUCTION

Power and thermal constraints have begun to limit the maximum operating frequency of high performance processors. The cubic increase in power from increases in frequency and higher voltages required to attain those frequencies has reached a plateau. By leveraging increasing die space for more processing cores (creating chip multiprocessors, or CMPs) and larger caches, designers hope that multi-threaded programs can exploit shrinking transistor sizes to deliver equal or higher throughput as single-threaded, single-core predecessors. The current software paradigm is based on the assumption that multi-threaded programs with little contention for shared data scale (nearly) linearly with the number of processors, yielding power-efficient data through- [...]