Advanced Compiler Technologies for Embedded Streaming

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Oriented Conferences Four Solutions

Delivering Solutions and Technology to the World’s Design Engineering Community
January 29 – February 1, 2001 (Exhibits: January 30–31, 2001), Santa Clara Convention Center, Santa Clara, California. International Engineering Consortium, www.iec.org. Register by January 5 and save $100 – and be entered to win a Palm Pilot!
Four Solutions-Oriented Conferences
• System-on-Chip Design Conference
• IP World Forum
• High-Performance System Design Conference
• Wireless and Optical Broadband Design Conference
Special Technology Focus Areas
• Internet and Information Appliance Design
• Embedded Design
• RF, Optical, and Analog Design
• NEW! IEC Executive Forum
Practical Design Solutions
Practical design-engineering solutions presented by practicing engineers. The DesignCon reputation of excellence has been built largely by the practical nature of its sessions. Design engineers hand selected by our team of professionals provide you with the best electronic design and silicon-solutions information available in the industry. DesignCon provides attendees with design solutions from peers and professionals.
DesignCon has an established reputation for the high quality of its papers and its expert-level speakers from Silicon Valley and around the world. Each year more than 100 industry pioneers bring to light the design-engineering solutions that are on the leading edge of technology. This elite group of design engineers presents unique case studies, technology innovations, practical techniques, design tips, and application overviews.
Who Should Attend
Any professionals who need to stay on top of current information regarding design-engineering theories, techniques, and application strategies should attend this conference. DesignCon attracts engineers and allied professionals from all levels and disciplines.
The most complete educational experience in the industry
The four conference options of DesignCon 2001 provide a -

Important Notice Dear Customer, on 7 February 2017 The

Important notice
Dear Customer,
On 7 February 2017 the former NXP Standard Product business became a new company with the tradename Nexperia. Nexperia is an industry leading supplier of Discrete, Logic and PowerMOS semiconductors with its focus on the automotive, industrial, computing, consumer and wearable application markets.
In data sheets and application notes which still contain NXP or Philips Semiconductors references, use the references to Nexperia, as shown below.
Instead of http://www.nxp.com, http://www.philips.com/ or http://www.semiconductors.philips.com/, use http://www.nexperia.com
Instead of [email protected] or [email protected], use [email protected] (email)
Replace the copyright notice at the bottom of each page or elsewhere in the document, depending on the version, as shown below:
- © NXP N.V. (year). All rights reserved or © Koninklijke Philips Electronics N.V. (year). All rights reserved
Should be replaced with:
- © Nexperia B.V. (year). All rights reserved.
If you have any questions related to the data sheet, please contact our nearest sales office via e-mail or telephone (details via [email protected]). Thank you for your cooperation and understanding,
Kind regards, Team Nexperia
PMN27XPE 20 V, single P-channel Trench MOSFET
20 September 2012 Product data sheet
1. Product profile
1.1 General description
P-channel enhancement mode Field-Effect Transistor (FET) in a small SOT457 (SC-74) Surface-Mounted Device (SMD) plastic package using Trench MOSFET technology.
1.2 Features and benefits
• Fast switching
• Trench MOSFET technology
• 2 kV ESD protection
1.3 Applications
• Relay driver
• High-speed line driver
• High-side loadswitch
• Switching circuits
1.4 Quick reference data
Table 1. -

6800/68000 Cmos

6800/68000 CMOS
Columns: Jameco Part #, Manufacturer, Manufacturer Part #, Description, Package, Product Type, Organization, Family, Application, Price 1
130366 Major Brands 00130366 420 PIECE DIODE REFILL PACKAGE $29.95
130374 Major Brands 00130374 560 Piece Transistor Kit Refill Package $54.95
130391 Major Brands 00130391 420 Piece 74LS Logic Series Refill Package $214.95
130403 Major Brands 00130403 300 PIECE CD4000 SERIES REFILL PACKAGE $74.95
130411 Major Brands 00130411 480 PIECE LINEAR SERIES REFILL PACKAGE $164.95
1542961 Vishay 1.5KE16CA TVS Diode 22.5V Clamp 66.7A Ipp Through Hole 1500W DO-201 1.5KE $0.49
2303247 Major Brands 1.5KE8.2C TVS Diode 12.1V Clamp 125.6A Ipp DO-201 DO-201 $0.59
35924 Major Brands 1N1188 Diode 1N1188 400 VOLT 35 AMP SILICON RECTIFIER DO-5 Stud Rectifier $3.95
2197610 Kest Parts 1N1190A Diode 1N1190A Power Silicon Rectifier 40 Amp 600V DO-5 DO-5 Stud Rectifier $4.95
2197628 Kest Parts 1N1190RA Diode 1N1190RA 40A 600V Power Silicon Rectifier DO-5 Stud Rectifier $4.95
2287679 Major Brands 1N1202A 200V 12A Rectifier Stud Mount DO-203 DO-203 Stud Rectifier $1.95
35941 Major Brands 1N270 Diode 1N270 Germanium 1 Volt @ 200 mA DO-7 General Purpose $1.19
2220533 Major Brands 1N34A Diode 1N34A General Purpose Germanium DO-7 General Purpose $1.95
35975 Major Brands 1N4001 Diode 1N4001 50 Volt 1 Amp General Purpose Rectifier DO-41 Standard $0.09
76961 Major Brands 1N4002 Diode 1N4002 100 Volt 1A DO-41 package DO-41 Standard $0.09
76970 Major Brands 1N4003 Diode 1N4003 Rectifier 200 Volt -

152F580552eb358625881dd680

Products Catalog Index

| PART NO. | MANUFACTURER | DESCRIPTION | URL | PRICE |
|---|---|---|---|---|
| Q65112A2615 | OSRAM OPTO SEMICONDUCTORS | Q65112A2615 | http://www.searchdatasheet.com/Q65112A2615-datasheet.html | QUOTE |
| XR33053ID-F | EXAR | Transceiver 1Mbps 3.3V/5V 14-Pin SOIC N Tube | http://www.searchdatasheet.com/XR33053ID-F-datasheet.html | QUOTE |
| Q65111A7362 | OSRAM OPTO SEMICONDUCTORS | LED Uni-Color White 2-Pin SMD T/R | http://www.searchdatasheet.com/Q65111A7362-datasheet.html | QUOTE |
| EL-17-21UYC/S530-A2/TR8 | EVERLIGHT ELECTRONICS | EL-17-21UYC/S530-A2/TR8 | http://www.searchdatasheet.com/EL-17-21UYC%2FS530-A2%2FTR8-datasheet.html | QUOTE |
| Q65112A2616 | OSRAM OPTO SEMICONDUCTORS | Q65112A2616 | http://www.searchdatasheet.com/Q65112A2616-datasheet.html | QUOTE |
| Q65111A9950 | OSRAM OPTO SEMICONDUCTORS | Q65111A9950 | http://www.searchdatasheet.com/Q65111A9950-datasheet.html | QUOTE |
| Q65112A2629 | OSRAM OPTO SEMICONDUCTORS | Q65112A2629 | http://www.searchdatasheet.com/Q65112A2629-datasheet.html | QUOTE |
| Q65112A0819 | OSRAM OPTO SEMICONDUCTORS | LED Uni-Color White 2-Pin CSMD EP | http://www.searchdatasheet.com/Q65112A0819-datasheet.html | QUOTE |
| Q65111A9208 | OSRAM OPTO SEMICONDUCTORS | Q65111A9208 | http://www.searchdatasheet.com/Q65111A9208-datasheet.html | QUOTE |
| Q65111A8966 | OSRAM OPTO SEMICONDUCTORS | Q65111A8966 | http://www.searchdatasheet.com/Q65111A8966-datasheet.html | QUOTE |
| RLR20C1201GMRE6 | VISHAY | Res Metal Film 1.2K Ohm 2% 0.5W(1/2W) ±100ppm/C 1% Epoxy AXL T/R | http://www.searchdatasheet.com/RLR20C1201GMRE6-datasheet.html | QUOTE |
| EVMB | Connect One Semiconductors | Evaluation Master Kit with | http://www.searchdatasheet.com/EVMB-datasheet.html | QUOTE |

Case M.8125 - JAC / NEXPERIA

EUROPEAN COMMISSION DG Competition
Case M.8125 - JAC / NEXPERIA
Only the English text is available and authentic.
REGULATION (EC) No 139/2004 MERGER PROCEDURE
Article 6(1)(b) NON-OPPOSITION
Date: 12/10/2016
In electronic form on the EUR-Lex website under document number 32016M8125
EUROPEAN COMMISSION
Brussels, 12.10.2016 C(2016) 6677 final
PUBLIC VERSION
SIMPLIFIED MERGER PROCEDURE
To the notifying parties
Dear Sirs,
Subject: Case M.8125 - JAC / NEXPERIA Commission decision pursuant to Article 6(1)(b) of Council Regulation (EC) No 139/2004 and Article 57 of the Agreement on the European Economic Area
1. On 13 September 2016, the European Commission received notification of a proposed concentration pursuant to Article 4 of the Merger Regulation by which the undertaking Beijing Jianguang Asset Management Co., Ltd. ("JAC", People's Republic of China), controlled by the China Investment Corporation (“CIC”, People's Republic of China), acquires within the meaning of Article 3(1)(b) of the Merger Regulation control of the standard products business unit (“Nexperia”) of NXP Semiconductors NV (“NXP”, the Netherlands) by way of purchase of shares.
2. The business activities of the undertakings concerned are:
− JAC is an investment management company which focuses its investments on mergers and acquisitions in the semiconductor industry. It is active in developing, manufacturing, and selling RF power transistors and bipolar based (power) diodes, thyristors and transistors. Its parent company, CIC, is a sovereign wealth fund of the People’s Republic of China, specialized in foreign exchange holdings.
− Nexperia is active in the manufacturing and sale of semiconductors, in particular several types of logic integrated circuits ("ICs"), small signal transistors and diodes. -

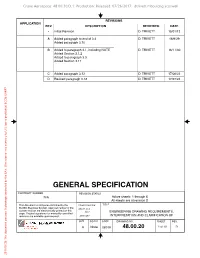

General Specification

Crane Aerospace: 48.00.20,D,1: Production: Released: 07/26/2017: dtrivett mboulang scaswell
REVISIONS
REV | DESCRIPTION | REVIEWED | DATE
- | Initial Revision | D. TRIVETT | 15/01/12
A | Added paragraph to end of 3.4; added paragraph 3.10 | D. TRIVETT | 16/9/29
B | Added to paragraph 3.1, including NOTE; added Section 3.1.2; added to paragraph 3.3; added Section 3.11 | D. TRIVETT | 16/11/30
C | Added paragraph 3.12 | D. TRIVETT | 17/06/22
D | Revised paragraph 3.12 | D. TRIVETT | 17/07/24
GENERAL SPECIFICATION
CONTRACT NUMBER: N/A
REVISION STATUS: Active sheets: 1 through 6. All sheets are at revision D.
This document is computer-controlled by the ELDEC Business System. Approval names for the current revision are electronically printed on this page. Original signatures for manually-controlled revisions are available upon request.
TOLERANCES ARE: LINEAR .XX = , .XXX = ; ANGULAR =
TITLE: ENGINEERING DRAWING REQUIREMENTS, INTERPRETATION AND CLARIFICATION OF
SIZE: A; SCALE: None; CAGE: 08748; DRAWING NO.: 48.00.20; SHEET: 1 of 10; REV.: D; 2017/07/26
This document contains Technology controlled by the EAR. Diversion or use contrary to U.S. law is prohibited. ECCN: EAR99
1 SCOPE
This specification establishes general and specific guidelines to aid in the interpretation and application of engineering drawings defining components, materials, or assemblies, whether purchased from suppliers or fabricated at Crane Aerospace & Electronics facilities.
1.1 Applicability
This document applies to engineering drawings controlled by the Lynnwood site of Crane Aerospace & Electronics (CRANE), also referred to as ELDEC Corporation. -

Nexperia USA Inc

N74F14D,623
Part Number: N74F14D,623
Manufacturer: Nexperia USA Inc.
Description: IC HEX INVERTER SCHM TRIG 14SOIC
Lead Free Status / RoHS Status: Lead free / RoHS Compliant
Data sheet: N74F14D,623.pdf
Images are for reference only. See Product Specifications for product details. If you are interested in buying the Nexperia N74F14D,623, email us at [email protected]; our sales team will reply within 24 hours.
Specifications
Voltage - Supply: 4.5 V ~ 5.5 V
Supplier Device Package: 14-SO
Series: 74F
Packaging: Original-Reel®
Package / Case: 14-SOIC (0.154", 3.90mm Width)
Operating Temperature: 0°C ~ 70°C
Number of Inputs: 6
Number of Circuits: 6
Mounting Type: Surface Mount
Max Propagation Delay @ V, Max CL: 8.5ns @ 5V, 50pF
Logic Type: Inverter
Logic Level - Low: 0.8V
Logic Level - High: 2V
Features: Schmitt Trigger
Current - Quiescent (Max): -
Current - Output High, Low: 1mA, 20mA
Email: [email protected]
RELATED PARTS FOR N74F14D,623

| Part Number | Manufacturers | Description |
|---|---|---|
| N74F14D,602 | NXP USA Inc. | IC HEX INVERTER/SCHM TRIG 14SOIC |
| N74F138D,602 | NXP USA Inc. | IC DECODER/DEMUX 3-8LINE 16SOIC |
| N74F157AD,623 | NXP USA Inc. | IC DATA SELECTOR/MUX 2IN 16SOIC |
| N74F154D | PHILIPS | |
| N74F153D,602 | NXP USA Inc. | IC MULTIPLEXER DUAL 4IN 16SOIC |
| N74F138N,602 | NXP USA Inc. | IC DECODER/DEMUX 3-8LINE 16DIP |
| N74F153N,602 | NXP USA Inc. | IC MULTIPLEXER DUAL 4IN 16DIP |
| N74F139N | PHI | |
| N74F153D | SIGNETICS | |
| N74F14D | NXP | in stock |
| N74F157AN,602 | NXP USA Inc. | IC DATA SELECTOR/MUX 2IN 16-DIP |
| N74F139N,602 | NXP USA Inc. | |

Nexperia Pnx8500

Home Entertainment Engine Nexperia pnx8500
Designed for use in mid- to high-end advanced set-top box (ASTB) and digital television (DTV) systems, the Nexperia™ pnx8500 Home Entertainment Engine delivers comprehensive source decoder, interactive TV, Internet, telephony, and digital recording functions on a single chip. This highly flexible IC decodes all high-definition (HD) and standard-definition (SD) MPEG-2 source material. Outputting enhanced SD display, the pnx8500 is primarily targeted for STBs driving existing NTSC, PAL, and SECAM television sets as well as digital TVs and projectors.
On a single, flexible IC, the pnx8500 provides a complete, future-proof solution for building tomorrow's advanced STB products. In addition to supporting basic and premium TV services, pnx8500-powered STBs can be easily enhanced to deliver advanced TV services such as web-browsing, time-shift record/playback, interactive video, streaming video and audio media, video telephony, games, purchase transaction management, and more. New standards for DTV, communications, or multimedia can be supported through software upgrades.
FEATURES
+ Dual-CPU design includes a 200-MHz TriMedia VLIW core and a 150-MHz MIPS 3940 core
+ Demultiplexes, descrambles and PID filters multiple DVB or DSS transport streams
+ Supports input of two digital video streams (CCIR656, broadcast quality)
+ Simultaneous MPEG-2 decoding of three SD streams or one HD (MP@HL) stream
+ DVB, MULTI2 and DSS descrambling, and ICAM verification
FLEXIBLE, DUAL-CPU ARCHITECTURE
The pnx8500 dual-CPU architecture combines powerful MIPS (R3940) and TriMedia (TM32) VLIW processor cores that control a range of on-chip hardware components through three system buses. -

Standard Products Towards a Standalone Global Powerhouse Nexperia's Customers

Customer Presentation, November 7, 2016
Standard products towards a standalone global powerhouse
Nexperia's Customers: A standalone world-class company for Discretes, Logic and Mosfets
NXP's announcement about Standard Products, June 14th 2016
• NXP has announced a binding agreement to sell its Standard Products business to a consortium of financial investors consisting of Beijing Jianguang Asset Management Co. Ltd (‘JAC Capital’) and Wise Road Capital LTD (‘Wise Road Capital’), leading to a stand-alone world-class leader in Discretes, Logic and PowerMOS to be named Nexperia.
• The business will be operated by the Nexperia management team, which will be responsible for Nexperia's day-to-day business operations and production. Investors regard Nexperia as a global company and consider the best support to global customers a key factor for the success of the new company. JAC will continue to invest to support the Nexperia management team and the business, including R&D activities, customer support and growth aspirations.
• The transaction is expected to close in the first quarter of 2017, pending all required regulatory approvals and employee representative consultations.
• The transaction is a positive accomplishment for all parties involved, enabling NXP to continue to focus on its HPMS business and furthering its Secure Connections for a Smarter World strategy.
• As a stand-alone global powerhouse, Nexperia will continue today's product portfolio (Discretes, Logic, PowerMOS). Nexperia will decide efficiently on its own about growth strategies and associated investments.
Transaction highlights
• The new company, to be named Nexperia, will be headquartered in Nijmegen, the Netherlands.
• The new company will become a world-class producer/supplier of standard products. -

PSMN3R7-30YLC N-Channel 30 V 3.95 mΩ Logic Level MOSFET in LFPAK Using NextPower

PSMN3R7-30YLC LFPAK
N-channel 30 V 3.95 mΩ logic level MOSFET in LFPAK using NextPower technology
Rev. 01, 2 May 2011, Product data sheet
1. Product profile
1.1 General description
Logic level enhancement mode N-channel MOSFET in LFPAK package. This product is designed and qualified for use in a wide range of industrial, communications and domestic equipment.
1.2 Features and benefits
• High reliability Power SO8 package, qualified to 175°C
• Low parasitic inductance and resistance
• Optimised for 4.5V Gate drive utilising NextPower Superjunction technology
• Ultra low QG, QGD, and QOSS for high system efficiencies at low and high loads
1.3 Applications
• DC-to-DC converters
• Load switching
• Power OR-ing
• Server power supplies
• Sync rectifier
1.4 Quick reference data
Table 1. -

Terms of Use Nexperia.Com 2017

NEXPERIA TERMS OF USE NEXPERIA.COM 2017
Acceptance of Terms of Use: This Web Site is owned by NEXPERIA B.V. having its registered office at Jonkerbosplein 52, 6534 AB Nijmegen. The Web Site is intended for the lawful use of NEXPERIA B.V. and/or its subsidiaries (‘NEXPERIA’) customers, employees and members of the general public who are over the age of 13. By accessing and using this Web Site you agree to be bound by the following Terms of Use and all terms and conditions contained and/or referenced herein or any additional terms and conditions set forth on this Web Site and all such terms shall be deemed accepted by you. If you do NOT agree to all these Terms of Use, you should NOT access or use this Web Site. If you do not agree to any additional specific terms which apply to particular Content (as defined below) or to particular transactions concluded through this Web Site, then you should NOT access or use the part of the Web Site which contains such Content or through which such transactions may be concluded and you should not use such Content or conclude such transactions.
... inconsistent with these Terms of Use, such specific terms will supersede these Terms of Use.
NEXPERIA reserves the right to make changes or updates with respect to or in the Content of the Web Site or the format thereof at any time without notice. NEXPERIA reserves the right to terminate or restrict access to the Web Site for any reason whatsoever at its sole discretion.
Although care has been taken to ensure the accuracy of the information on this Web Site, financial and product information included, NEXPERIA assumes no responsibility therefor. -

Nexperia MOSFET Solutions for 5G

Nexperia MOSFET Solutions for 5G
Efficiency Wins
Nexperia company profile and future trends in the 5G market
Jason Yao (姚湘屏), Product Marketing Director, Greater China and South Asia
Becoming Nexperia: a lifetime of “firsts”
Closing in on a century of electronics history
Company milestones:
• 1920 Mullard Radio Valve Company founded
• 1924 RRF GmbH (Valvo) formed in Hamburg
• 1927 Philips acquires Mullard and Valvo
• 1961 Signetics founded in Silicon Valley
• 1975 Philips acquires Signetics
• 2006 Philips Semiconductors becomes NXP
• 2017 NXP Standard Products becomes Nexperia
Product milestones:
• 1953 First transistor production, the Valvo OC71
• 1969 Industry standard SOT23
• 2001 First leadless SOD882 / SOT883
• 2002 LFPAK, the toughest Power-SO8
• 2012 Smallest Logic “GX5”
• 2018 Smallest Logic “GX4”
• 2019 Launch GaN FET
Empowering every electronic design
Success follows our lifetime of commitment to our employees, customers and products
Key Facts
• Experts in cost-effective production of discrete and MOSFET components and Analog & Logic ICs
• US$ 1.43 billion revenues (2019)
• Market share of 14.2 % (2019)
• High volume production of > 90 billion units annually
• 2 own frontend, 3 own backend manufacturing sites
• Long-term foundry and subcon partners
• Over 60 years of expertise in semiconductors
• > 12,000 employees supporting customers globally
• Subsidiary of Wingtech Technology
• Headquarters in Nijmegen, The Netherlands
Discretes, MOSFETs and Analog & Logic ICs
Broad portfolio: More than 800 new products every year
Diodes & Transistors, Protection & Filtering, Analog & Logic