New Method for Robotic Systems Architecture


Recommended publications


Robot Operating System - The Complete Reference (Volume 4) Part of the Studies in Computational Intelligence Book Series (SCI, Volume 831)

Book: Robot Operating System - The Complete Reference (Volume 4), part of the Studies in Computational Intelligence book series (SCI, volume 831). Several authors. CISTER-TR-190702, 2019. CISTER Research Centre, Rua Dr. António Bernardino de Almeida, 431, 4200-072 Porto, Portugal. Tel.: +351.22.8340509, Fax: +351.22.8321159, https://www.cister-labs.pt. Abstract: This is the fourth volume of the successful series Robot Operating System: The Complete Reference, providing a comprehensive overview of the Robot Operating System (ROS), which is currently the main development framework for robotics applications, as well as the latest trends and contributed systems. The book is divided into four parts. Part 1 features two papers on navigation, discussing SLAM and path planning. Part 2 focuses on the integration of ROS into quadcopters and their control. Part 3 then discusses two emerging applications for robotics: cloud robotics and video stabilization. Part 4 presents tools developed for ROS; the first is a practical alternative to the roslaunch system, and the second is related to penetration testing. This book is a valuable resource for ROS users and for anyone wanting to learn more about ROS capabilities and features. © 2019 CISTER Research Center. Studies in Computational Intelligence 831. Anis Koubaa, Editor. Robot Operating System (ROS): The Complete Reference (Volume 4). Studies in Computational Intelligence, Volume 831. Series Editor: Janusz Kacprzyk, Polish Academy of Sciences, Warsaw, Poland. The series "Studies in Computational Intelligence" (SCI) publishes new developments and advances in the various areas of computational intelligence, quickly and with high quality.

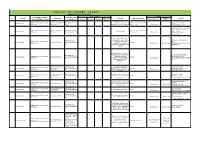

Robotics Laboratory List

Robotics List (universities with robotics-related courses), by university. Columns: University, Graduate School, Department, Professional Keywords, Application Deadline, Degree in English, Admissions Office Website, Lab links.
1 Chiba University, Graduate School of Science and Engineering, Department of Mechanical Engineering. Keywords: Robotics, Dexterous Manipulation, Visual Recognition. Application deadline: Doctoral, June and December; Master's, June. Degree in English: Doctoral only. Admissions: http://www.se.chiba-u.jp/en/. Lab: http://www.em.eng.chiba-u.jp/~namiki/index-e.html
1 Chiba University, Graduate School of Science and Engineering, Department of Medical Engineering. Keywords: Surgical Robotics. Application deadline: Doctoral, June and December; Master's, June. Degree in English: Doctoral only. Admissions: http://www.tms.chiba-u.jp/english/index.html. Lab: Laboratory Innovative Therapeutic Engineering, directed by Prof. Ryoichi Nakamura, http://www.cfme.chiba-u.jp/~nakamura/
2 Chuo University, Graduate School of Science and Engineering, Precision Mechanics. Keywords: Micro Electro Mechanical Systems, Micro Sensors, Micro Coil, Magnetic Resonance Imaging, Blood Pressure Measurement, Arterial Tonometry Method. Application deadline: October. Degree in English: Doctoral only. Admissions: http://global.chuo-u.ac.jp/english/admissions/. Lab: Micro System Laboratory (Dohi Lab.), http://www.msl.mech.chuo-u.ac.jp/ (Japanese only)
2 Chuo University, Graduate School of Science and Engineering. Keywords: Assistive Robotics, Human-Robot Communication, Human-Robot Collaboration, Ambient … Admissions: http://global.chuo-… Lab: Human-Systems Laboratory, http://www.mech.chuo-…

Design and Control of a Large Modular Robot Hexapod

Design and Control of a Large Modular Robot Hexapod. Matt Martone. CMU-RI-TR-19-79, November 22, 2019. The Robotics Institute, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA. Thesis Committee: Howie Choset (chair), Matt Travers, Aaron Johnson, Julian Whitman. Submitted in partial fulfillment of the requirements for the degree of Master of Science in Robotics. Copyright © 2019 Matt Martone. All rights reserved. To all my mentors: past and future. Abstract: Legged robotic systems have made great strides in recent years, but unlike wheeled locomotion, limbed locomotion does not scale well. Long legs demand huge torques, driving up actuator size and onboard battery mass. This relationship results in massive structures that lack the safety, portability, and controllability of their smaller limbed counterparts. Innovative transmission design paired with unconventional controller paradigms are the keys to breaking this trend. The Titan 6 project endeavors to build a set of self-sufficient modular joints unified by a novel control architecture to create a spiderlike robot with two-meter legs that is robust, field-repairable, and an order of magnitude lighter than similarly sized systems. This thesis explores how we transformed desired behaviors into a set of workable design constraints, discusses our prototypes in the context of the project and the field, describes how our controller leverages compliance to improve stability, and delves into the electromechanical designs for these modular actuators that enable Titan 6 to be both light and strong. Acknowledgments: This work was made possible by a huge group of people who taught and supported me throughout my graduate studies and my time at Carnegie Mellon as a whole.
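The abstract's point that long legs demand huge torques can be made concrete with a back-of-the-envelope scaling argument. The sketch below is not from the thesis: it assumes a static tripod stance (three legs in contact), equal load sharing, a foot placed a full leg length from its hip, and isometric scaling at constant density; the 5 kg, 0.25 m baseline robot and the function names are hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def static_hip_torque(body_mass_kg: float, leg_length_m: float,
                      legs_in_stance: int = 3) -> float:
    """Worst-case static hip torque (N*m) for one stance leg.

    Assumes the body weight is shared equally by the stance legs and that
    each foot sits a full leg length from its hip, horizontally.
    """
    load_per_leg_n = body_mass_kg * G / legs_in_stance
    return load_per_leg_n * leg_length_m


def isometric_torque_ratio(scale: float) -> float:
    """Torque growth when every length is multiplied by `scale` at constant
    density: mass grows as scale**3 and the lever arm as scale, so the
    required torque grows as scale**4."""
    return scale ** 4


if __name__ == "__main__":
    scale = 8.0  # hypothetical 0.25 m legs scaled up to 2 m legs
    small = static_hip_torque(5.0, 0.25)
    large = static_hip_torque(5.0 * scale ** 3, 0.25 * scale)
    print(f"small robot: {small:.1f} N*m")
    print(f"scaled robot: {large:.0f} N*m ({large / small:.0f}x, "
          f"predicted {isometric_torque_ratio(scale):.0f}x)")
```

Under these assumptions, stretching the legs from 0.25 m to two meters multiplies the required hip torque by 8^4 = 4096, which illustrates why a naively scaled-up design ends up heavy, the trend the abstract says Titan 6's transmission and controller design aim to break.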

Robotic Paradigms and Control Architectures

Robotic Paradigms and Control Architectures. Jan Faigl, Department of Computer Science, Faculty of Electrical Engineering, Czech Technical University in Prague. Lecture 02, B4M36UIR – Artificial Intelligence in Robotics, 2020. Overview of the Lecture, Part 1 – Robotic Paradigms and Control Architectures: Robotics Paradigms; Hierarchical Paradigm; Reactive Paradigm; Hybrid Paradigm; Example of Collision Avoidance; Robot Control. Robot: A robot perceives an environment using sensors to control its actuators (Sensor → Controller → Actuators). The main parts of the robot correspond to the primitives of robotics: Sense, Plan, and Act. The primitives form a control architecture that is called a robotic paradigm. Robotic Paradigms: Robotic paradigms define the relationship between the robotics primitives Sense, Plan, and Act. Three fundamental paradigms have been proposed:
1. The hierarchical paradigm is a purely deliberative system: SENSE → PLAN → ACT.
2. The reactive paradigm represents reactive control: SENSE → ACT.
3. …
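The first two paradigms differ in whether a planning stage sits between sensing and acting. The Python sketch below contrasts them; the `Percept` and `Command` types, the `avoid_or_seek` behaviour, and all numeric thresholds are hypothetical illustrations, not taken from the lecture.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Percept:
    obstacle_distance: float  # metres to the nearest obstacle ahead (hypothetical sensor)
    goal_bearing: float       # radians; positive means the goal lies to the left


@dataclass(frozen=True)
class Command:
    linear: float   # forward speed, m/s
    angular: float  # turn rate, rad/s


def hierarchical_step(sense: Callable[[], Percept],
                      plan: Callable[[Percept], List[Command]],
                      act: Callable[[Command], None]) -> None:
    """Hierarchical paradigm: SENSE -> PLAN -> ACT.

    One percept is turned into a complete plan, and the plan is then
    executed without sensing again until the next cycle.
    """
    percept = sense()
    for command in plan(percept):
        act(command)


def reactive_step(sense: Callable[[], Percept],
                  behaviour: Callable[[Percept], Command],
                  act: Callable[[Command], None]) -> None:
    """Reactive paradigm: SENSE -> ACT, with no planning stage in between."""
    act(behaviour(sense()))


def avoid_or_seek(percept: Percept, safe_distance: float = 0.5) -> Command:
    """Hypothetical collision-avoidance behaviour: turn in place when an
    obstacle is too close, otherwise drive forward while steering to the goal."""
    if percept.obstacle_distance < safe_distance:
        return Command(linear=0.0, angular=1.0)
    return Command(linear=0.3, angular=0.5 * percept.goal_bearing)


if __name__ == "__main__":
    executed: List[Command] = []
    reactive_step(lambda: Percept(obstacle_distance=0.2, goal_bearing=0.0),
                  avoid_or_seek, executed.append)
    print(executed)
```

In the hierarchical version the robot commits to a plan computed from a single snapshot, so it cannot respond to an obstacle that appears mid-plan; the reactive version recomputes a command from every new percept but never looks ahead.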

Embodied Evolution in Collective Robotics: a Review Nicolas Bredeche, Evert Haasdijk, Abraham Prieto

Embodied Evolution in Collective Robotics: A Review. Nicolas Bredeche, Evert Haasdijk, Abraham Prieto. To cite this version: Nicolas Bredeche, Evert Haasdijk, Abraham Prieto. Embodied Evolution in Collective Robotics: A Review. Frontiers in Robotics and AI, Frontiers Media S.A., 2018, 5, pp. 12. 10.3389/frobt.2018.00012. hal-03313845. HAL Id: hal-03313845, https://hal.sorbonne-universite.fr/hal-03313845, submitted on 4 Aug 2021. REVIEW, published: 22 February 2018, doi: 10.3389/frobt.2018.00012. Nicolas Bredeche¹*, Evert Haasdijk² and Abraham Prieto³ (¹ Sorbonne Université, CNRS, Institute of Intelligent Systems and Robotics, ISIR, Paris, France; ² Computational Intelligence Group, Department of Computer Science, Vrije Universiteit, Amsterdam, Netherlands; ³ Integrated Group for Engineering Research, Universidade da Coruña, Ferrol, Spain). This article provides an overview of evolutionary robotics techniques applied to online distributed evolution for robot collectives, namely, embodied evolution. It provides a definition of embodied evolution as well as a thorough description of the underlying concepts and mechanisms. This article also presents a comprehensive summary of research published in the field since its inception around the year 2000, providing various perspectives to identify the major trends.

Botball Kit for Teaching Engineering Computing

Botball Kit for Teaching Engineering Computing. David P. Miller (School of AME, University of Oklahoma, Norman, OK 73019) and Charles Winton (Department of CIS, University of N. Florida, Jacksonville, FL 32224). Abstract: Many engineering classes can benefit from hands-on use of robots. The KISS Institute Botball kit has found use in many classes at a number of universities. This paper outlines how the kit is used in a few of these classes at a couple of different universities. It also introduces the Collegiate Botball Challenge and how it can be used as a class project. 1 Introduction: Introductory engineering courses are used to teach general principles while introducing students to all of the engineering disciplines. Robotics, as a multi-disciplinary application, can be an ideal subject for projects that stress the different engineering fields. A major consideration in establishing a robotics course emphasizing mobile robots is the type of hands-on laboratory experience that will be incorporated into the course of instruction. Most electrical engineering schools lack the machine shops and expertise needed to create the mechanical aspects of a robot system. Most mechanical engineering schools lack the electronics labs and expertise needed for the actuation, sensing, and computational aspects required to support robotics work. The situation is even more dire for most computer science schools: computer science departments typically do not have the support culture for the kind of laboratories more typically associated with engineering programs. On the other hand, it is recognized that computer science students need courses that provide closer-to-real-world experiences via representative hands-on exercises.

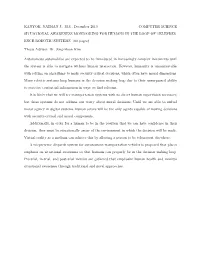

KANYOK, NATHAN J., MS, December 2019 COMPUTER SCIENCE

KANYOK, NATHAN J., M.S., December 2019, COMPUTER SCIENCE. SITUATIONAL AWARENESS MONITORING FOR HUMANS-IN-THE-LOOP OF TELEPRESENCE ROBOTIC SYSTEMS (80 pages). Thesis Advisor: Dr. Jong-Hoon Kim. Autonomous automobiles are expected to be introduced in increasingly complex increments until the system is able to navigate without human interaction. However, humanity is uncomfortable with relying on algorithms to make security-critical decisions, which often have moral dimensions. Many robotic systems keep humans in the decision-making loop because of humans' unsurpassed ability to perceive contextual information in ways we find relevant. It is likely that we will see transportation systems that need no direct human supervision, but these systems do not address our worry about moral decisions. Until we are able to embed moral agency in digital systems, human actors will be the only agents capable of making decisions with security-critical and moral components. Additionally, for us to have confidence in a human's decision, that person must be situationally aware of the environment in which the decision will be made. Virtual reality as a medium can achieve this by allowing a person to be telepresent elsewhere. A telepresence dispatch system for autonomous transportation vehicles is proposed that places emphasis on situational awareness so that humans can properly be in the decision-making loop. Pre-trial, in-trial, and post-trial metrics are gathered that emphasize human health and monitor situational awareness through traditional and novel approaches. A thesis submitted to Kent State University in partial fulfillment of the requirements for the degree of Master of Science by Nathan J. …

Insect-Computer Hybrid System for Autonomous Search and Rescue Mission

Insect-Computer Hybrid System for Autonomous Search and Rescue Mission. P. Thanh Tran-Ngoc¹, D. Long Le¹, Bing Sheng Chong¹, H. Duoc Nguyen¹, V. Than Dung¹, Feng Cao¹, Yao Li², Kazuki Kai¹, Jia Hui Gan¹, T. Thang Vo-Doan³, T. Luan Nguyen⁴, and Hirotaka Sato¹* (¹ School of Mechanical & Aerospace Engineering, Nanyang Technological University, 50 Nanyang Avenue, 639798, Singapore; ² School of Mechanical Engineering and Automation, Harbin Institute of Technology, Shenzhen, University Town, Shenzhen, 518055, China; ³ University of Freiburg, Hauptstrasse 1, Freiburg, 79104, Germany; ⁴ University of Leeds, Woodhouse, Leeds LS2 9JT, United Kingdom; * corresponding author). Physical Sciences, Article. Keywords: Disaster-hit Areas, Power Consumption, Locomotion Computation Load, Obstacle-avoidance System, Navigation Control Algorithm, Human Presence Detection. Posted date: June 12th, 2021. DOI: https://doi.org/10.21203/rs.3.rs-598481/v1. License: This work is licensed under a Creative Commons Attribution 4.0 International License. Abstract: There is still a long way to go before artificial mini robots are really used for search and rescue missions in disaster-hit areas, due to hindrances in power consumption, the computational load of locomotion, and obstacle avoidance.

Vision Based Localization of Mobile Robots

Rochester Institute of Technology, RIT Scholar Works, Theses, 2007. Recommended Citation: Mooberry, Jason, "Vision based localization of mobile robots" (2007). Thesis. Rochester Institute of Technology. Vision Based Localization of Mobile Robots, Jason Mooberry, August 2007. Zach Butler, Roxanne Canosa, Richard Zanibbi. Golisano College of Computing & Information Sciences, Rochester Institute of Technology, Rochester, NY 14623.
Table of Contents
Abstract
1 Introduction
  1.1 Localization
    1.1.1 Localization with Kalman Filters
    1.1.2 Markov Localization
    1.1.3 Monte Carlo Localization
    1.1.4 Vision Based Localization
  1.2 Architecture
    1.2.1 Deliberative
    1.2.2 Reactive
    1.2.3 Behavioral
    1.2.4 Hybrid
2 Monte Carlo Localization
  2.1 Motion Model
  2.2 Sensor Model
3 Vision Based MCL
  3.1 Research Platform
  3.2 Motion Estimation
    3.2.1 Environment
    3.2.2 Rotation Error Modeling
    3.2.3 Translation Error Modeling
    3.2.4 Application of Motion Model
  3.3 Sensor Model
    3.3.1 Naive Correlation with Color Histograms
  3.4 Resampling
  3.5 Localization Architecture
  3.6 Trials
    3.6.1 Success Indicators
    3.6.2 Position …
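The three Monte Carlo Localization components named in the outline (motion model, sensor model, resampling) fit together as one filter cycle. The sketch below is a generic 1-D illustration under stated assumptions, not the thesis's implementation: it replaces the thesis's vision-based sensor model (color-histogram correlation) with a hypothetical range sensor measuring the distance to a single wall, and the noise levels, `mcl_step` name, and corridor geometry are made up for illustration.

```python
import math
import random
from typing import List, Optional


def mcl_step(particles: List[float], odometry: float, measured_range: float,
             wall_x: float, motion_noise: float = 0.1, sensor_noise: float = 0.2,
             rng: Optional[random.Random] = None) -> List[float]:
    """One predict / weight / resample cycle of 1-D Monte Carlo Localization.

    Hypothetical setup: the robot drives along a line toward a wall at
    `wall_x`, and a range sensor reports the distance to that wall.
    """
    if rng is None:
        rng = random.Random()

    # Motion model: shift every particle by the odometry reading plus Gaussian noise.
    moved = [p + odometry + rng.gauss(0.0, motion_noise) for p in particles]

    # Sensor model: Gaussian likelihood of the measured range given each particle.
    weights = [math.exp(-0.5 * ((measured_range - (wall_x - p)) / sensor_noise) ** 2)
               for p in moved]
    if sum(weights) == 0.0:
        return moved  # no particle explains the reading; skip this update

    # Resampling: draw a new set with probability proportional to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


if __name__ == "__main__":
    rng = random.Random(42)
    wall_x, true_x = 10.0, 2.0
    particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # global uncertainty
    for _ in range(8):
        true_x += 0.5
        particles = mcl_step(particles, 0.5, wall_x - true_x, wall_x, rng=rng)
    estimate = sum(particles) / len(particles)
    print(f"true position {true_x:.2f}, estimate {estimate:.2f}")
```

Starting from particles spread uniformly over the corridor (global localization), the cloud collapses around the true position after a few cycles; a vision-based variant would change only how the weights are computed.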

arXiv:1606.05830v4

This paper has been accepted for publication in IEEE Transactions on Robotics. DOI: 10.1109/TRO.2016.2624754. IEEE Xplore: http://ieeexplore.ieee.org/document/7747236/. Please cite the paper as: C. Cadena, L. Carlone, H. Carrillo, Y. Latif, D. Scaramuzza, J. Neira, I. Reid, and J.J. Leonard, "Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age", in IEEE Transactions on Robotics 32 (6), pp. 1309-1332, 2016.
bibtex:
@article{Cadena16tro-SLAMfuture,
  title = {Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age},
  author = {C. Cadena and L. Carlone and H. Carrillo and Y. Latif and D. Scaramuzza and J. Neira and I. Reid and J.J. Leonard},
  journal = {{IEEE Transactions on Robotics}},
  year = {2016},
  number = {6},
  pages = {1309--1332},
  volume = {32}
}
arXiv:1606.05830v4 [cs.RO] 30 Jan 2017.
Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age. Cesar Cadena, Luca Carlone, Henry Carrillo, Yasir Latif, Davide Scaramuzza, José Neira, Ian Reid, John J. Leonard.
Abstract: Simultaneous Localization And Mapping (SLAM) consists in the concurrent construction of a model of the environment (the map), and the estimation of the state of the robot moving within it. The SLAM community has made astonishing progress over the last 30 years, enabling large-scale real-world applications, and witnessing a steady transition of this technology to industry.
I. Introduction: SLAM comprises the simultaneous estimation of the state of a robot equipped with on-board sensors, and the construction of a model (the map) of the environment that the …

Robotics in Germany and Japan DRESDEN PHILOSOPHY of TECHNOLOGY STUDIES DRESDNER STUDIEN ZUR PHILOSOPHIE DER TECHNOLOGIE

Robotics in Germany and Japan. DRESDEN PHILOSOPHY OF TECHNOLOGY STUDIES / DRESDNER STUDIEN ZUR PHILOSOPHIE DER TECHNOLOGIE, edited by Bernhard Irrgang, Vol. 5. Michael Funk / Bernhard Irrgang (eds.), Robotics in Germany and Japan: Philosophical and Technical Perspectives. Bibliographic information published by the Deutsche Nationalbibliothek: The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data is available on the Internet at http://dnb.d-nb.de. Library of Congress Cataloging-in-Publication Data: Robotics in Germany and Japan : philosophical and technical perspectives / Michael Funk, Bernhard Irrgang (eds.). pages cm. (Dresden philosophy of technology perspectives, ISSN 1861-423X ; v. 5) ISBN 978-3-631-62071-7 -- ISBN 978-3-653-03976-4 (ebook). 1. Robotics--Germany--Popular works. 2. Robotics--Japan--Popular works. 3. Robotics--Philosophy. I. Funk, Michael, 1985- editor of compilation. II. Irrgang, Bernhard, editor of compilation. TJ211.15.R626 2014 629.8'920943--dc23 2013045885. Cover illustration: Humanoid Robot "ARMAR" (KIT, Germany), photograph: Michael Funk. An electronic version of this book is freely available, thanks to the support of libraries working with Knowledge Unlatched. KU is a collaborative initiative designed to make high-quality books Open Access for the public good. More information about the initiative and links to the Open Access version can be found at www.knowledgeunlatched.org. ISSN 1861-423X • ISBN 978-3-631-62071-7 (Print) • E-ISBN 978-3-653-03976-4 (E-PDF) • E-ISBN 978-3-653-99964-8 (EPUB) • E-ISBN 978-3-653-99963-1 (MOBI) • DOI 10.3726/978-3-653-03976-4. Open Access: This work is licensed under a Creative Commons Attribution NonCommercial NoDerivatives 4.0 unported license.

Intelligent Automation Entering the Business World

Intelligent automation entering the business world. Patrick Laurent (Partner), Thibault Chollet (Director), and Elsa Herzberg (Analyst), Technology & Enterprise Application, Deloitte.
Automation using artificial intelligence might be the next game changer in terms of process efficiency in the financial industry.
Robotic process automation, or intelligent automation (the combination of artificial intelligence and automation), is starting to change the way business is done in nearly every sector of the economy. Intelligent automation systems detect and produce vast amounts of information and can automate entire processes or workflows, learning and adapting as they go. Applications range from the routine to the revolutionary: from collecting, analysing, and making decisions about textual information to guiding autonomous vehicles and advanced robots. It is already helping companies transcend conventional performance trade-offs to achieve unprecedented levels of efficiency and quality.
Until recently, robotics had found most of its applications in the primary sector, automating and removing the human element from the production chain. Replacing menial tasks was its first foray, and many organisations introduced robotics into their assembly line, warehouse, and cargo bay operations. Now, tertiary sector businesses have already started to apply new technologies and the robotic paradigm to automate their processes and replace humans in low value-added activities. This is also the case in the financial services industry.
What is intelligent automation, and to which processes in the banking, insurance, or fund servicing industries is it applicable? Robotic process automation combines artificial intelligence (including natural language processing, machine learning, autonomics, and machine vision) with automation.
Artificial intelligence and automation are hardly new, but the technologies have progressed substantially in recent years.