Insect-Computer Hybrid System for Autonomous Search and Rescue Mission

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Autonomous Mobile Systems for Long-Term Operations in Spatio-Temporal Environments Cedric Pradalier

Autonomous Mobile Systems for Long-Term Operations in Spatio-Temporal Environments. Cedric Pradalier. To cite this version: Cedric Pradalier. Autonomous Mobile Systems for Long-Term Operations in Spatio-Temporal Environments. Robotics [cs.RO]. INP DE TOULOUSE, 2015. tel-01435879. HAL Id: tel-01435879, https://hal.archives-ouvertes.fr/tel-01435879. Submitted on 20 Jan 2017. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License. Autonomous Mobile Systems for Long-Term Operations in Spatio-Temporal Environments. Summary of research work carried out with a view to obtaining the Habilitation à Diriger des Recherches of the Institut National Polytechnique de Toulouse. Cédric PRADALIER, GeorgiaTech Lorraine – UMI 2958 GT-CNRS, 2, rue Marconi, 57070 METZ, France, [email protected]. Defended on Friday, 6 June 2015 at LAAS/CNRS. Jury members: Francois Chaumette, INRIA, Reviewer; Tim Barfoot, University of Toronto, Reviewer; Henrik Christensen, Georgia Institute of Technology, Reviewer; Olivier Simonin, INSA Lyon, Examiner; Roland Lenain, IRSTEA, Examiner; Florent Lamiraux, LAAS/CNRS, Examiner; Simon Lacroix, LAAS/CNRS, Examiner. Contents: 1 Introduction; 2 Autonomous mobile systems for natural and unmodified environments; 2.1 Localization, navigation and control for mobile robotic systems

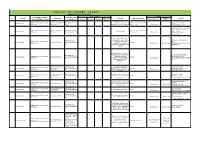

Robotics Laboratory List

Robotics List (universities with robotics-related courses), listed by university. Column headings in the source table: No.; University; Undergraduate / Graduate Schools / Institutes; Department; Professional Keywords; Degree sought (Bachelor, Master's, Doctoral); Degree in English; Application Deadline; Admissions Office Website; Lab links or others.
1. Chiba University; Graduate School of Science and Engineering; Department of Mechanical Engineering; keywords: Robotics, Dexterous Manipulation, Visual Recognition; degree availability marks: four ○, one annotated "(Doctoral only)"; application deadline: Doctoral: June and December, Master's: June; admissions: http://www.se.chiba-u.jp/en/; lab: http://www.em.eng.chiba-u.jp/~namiki/index-e.html
1. Chiba University; Graduate School of Science and Engineering; Department of Medical Engineering; keywords: Surgical Robotics; degree availability marks: four ○, one annotated "(Doctoral only)"; application deadline: Doctoral: June and December, Master's: June; admissions: http://www.tms.chiba-u.jp/english/index.html; lab: Laboratory Innovative Therapeutic Engineering directed by Prof. Ryoichi Nakamura, http://www.cfme.chiba-u.jp/~nakamura/
2. Chuo University; Graduate School of Science and Engineering; Precision Mechanics; keywords: Micro Electro Mechanical Systems, Micro Sensors, Micro Coil, Magnetic Resonance Imaging, Blood Pressure Measurement, Arterial Tonometry Method; degree availability marks: four ○, two annotated "(Doctoral only)"; application deadline: October; admissions: http://global.chuo-u.ac.jp/english/admissions/; lab: Micro System Laboratory (Dohi Lab.), http://www.msl.mech.chuo-u.ac.jp/ (Japanese only)
2. Chuo University; Graduate School of Science and Engineering; keywords: Assistive Robotics, Human-Robot Communication, Human-Robot Collaboration, Ambient; lab: Human-Systems Laboratory, http://www.mech.chuo-

Design and Control of a Large Modular Robot Hexapod

Design and Control of a Large Modular Robot Hexapod. Matt Martone. CMU-RI-TR-19-79. November 22, 2019. The Robotics Institute, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA. Thesis Committee: Howie Choset (chair), Matt Travers, Aaron Johnson, Julian Whitman. Submitted in partial fulfillment of the requirements for the degree of Master of Science in Robotics. Copyright © 2019 Matt Martone. All rights reserved. To all my mentors: past and future. Abstract: Legged robotic systems have made great strides in recent years, but unlike wheeled robots, limbed locomotion does not scale well. Long legs demand huge torques, driving up actuator size and onboard battery mass. This relationship results in massive structures that lack the safety, portability, and controllability of their smaller limbed counterparts. Innovative transmission design paired with unconventional controller paradigms are the keys to breaking this trend. The Titan 6 project endeavors to build a set of self-sufficient modular joints unified by a novel control architecture to create a spiderlike robot with two-meter legs that is robust, field-repairable, and an order of magnitude lighter than similarly sized systems. This thesis explores how we transformed desired behaviors into a set of workable design constraints, discusses our prototypes in the context of the project and the field, describes how our controller leverages compliance to improve stability, and delves into the electromechanical designs for these modular actuators that enable Titan 6 to be both light and strong. Acknowledgments: This work was made possible by a huge group of people who taught and supported me throughout my graduate studies and my time at Carnegie Mellon as a whole.

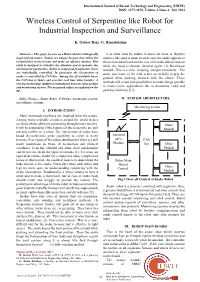

Wireless Control of Serpentine Like Robot for Industrial Inspection and Surveillance

International Journal of Recent Technology and Engineering (IJRTE), ISSN: 2277-3878, Volume-2 Issue-3, July 2013. Wireless Control of Serpentine like Robot for Industrial Inspection and Surveillance. K. Gafoor Raja, G. Ramakrishna. Abstract: This paper focuses on a robot that is biologically inspired by nature. Snakes are unique because they utilize the irregularities in the terrain to move effectively. This robot is designed to visualize the situation and to measure environment parameters. The snake is composed of segments that are individually controlled. In particular, the locomotion of the snake is controlled over a CAN-bus. Among all the available buses, the CAN-bus is faster and provides real-time data transfer. A wireless technology (ZigBee) is introduced between the robot section and the monitoring section. The measured values are updated on the PC. Index Terms: Snake Robot, CAN-bus, locomotion control, surveillance, sensing. I. INTRODUCTION. Many manmade machines are inspired by nature. Among the many creatures around the world, snakes stand out for their ability to penetrate many terrains. [...] It is often used by snakes to move on loose or slippery surfaces like sand or mud; in such cases the snake appears to throw its head forward and the rest of its body follows the motion while the head is thrown forward again. (4) Rectilinear method: This is a slow, creeping, straight movement. The snake uses some of the wide scales on its belly to grip the ground while pushing forward with the others. These methods will create new possibilities in many rescue applications, such as in disastrous, risky and perilous situations [11]. II. SYSTEM ARCHITECTURE. Monitoring section

New Method for Robotic Systems Architecture

NEW METHOD FOR ROBOTIC SYSTEMS ARCHITECTURE ANALYSIS, MODELING, AND DESIGN. By LU LI. Submitted in partial fulfillment of the requirements for the degree of Master of Science. Thesis Advisor: Dr. Roger Quinn. Department of Mechanical and Aerospace Engineering, CASE WESTERN RESERVE UNIVERSITY, August 2019. CASE WESTERN RESERVE UNIVERSITY SCHOOL OF GRADUATE STUDIES. We hereby approve the thesis/dissertation of Lu Li, candidate for the degree of Master of Science. Committee Chair: Dr. Roger Quinn. Committee Member: Dr. Musa Audu. Committee Member: Dr. Richard Bachmann. Date of Defense: July 5, 2019. *We also certify that written approval has been obtained for any proprietary material contained therein. Table of Contents: Table of Contents; List of Tables; List of Figures; Copyright page; Preface; Acknowledgements

Robots 1st Edition

Robots, 1st edition. John M Jordan. ISBN 9780262529501. It relied primarily on stereo vision to navigate and determine distances. But the large drum memory made programming time-consuming and [...]. The robot opened up a beer, struck a golf ball to its target and even conducted the show's band. Commercial and industrial robots are now in widespread use performing jobs more cheaply or with greater accuracy and reliability than humans. The robot could move its hands and head and could be controlled by remote control or voice control. Graduate students, researchers, academics and professionals in the areas of human [...], robotics, social psychology, and engineering psychology. General Motors had left all its competition behind in the dust with the sheer number of cars it was producing. Users can choose from seven factory presets for tunings, six of which are editable. The ultimate attempt at automation was The Turk by Wolfgang von Kempelen, a sophisticated machine that could play chess against a human opponent and toured Europe.

Walking Machines: 0-Legged-Robots

Limbless mobile robots. Walking machines: 0-legged-robots (only snake-like robots, so far). A compilation by Christian Düntgen. Pictorial Overview on Crawling Robots: ACM III, KORYU I, KORYU II, OBLIX, Chabin's snake, GMD-Snake, GMD-Snake2, JPL-Snake, Mita-Lab Snake, NEC-'Quake'-Snake, Snakey, Shan's Snake, ATMS, KAA, Snake 'Henrietta', JHU Metamorphical Robot Model. I. Motivation. Snakes populate wide regions of our planet. They use different methods to move within varying environments (sand, water, solid surface, within trees, ...). They can even climb obstacles and pass rough, smooth and slippery surfaces. Robotic snakes might be used to inspect pipes and underground locations or to explore rough territories. As snakes have many degrees of freedom, their construction pattern is interesting for manipulators, e.g. to work with dangerous materials. The high number of degrees of freedom of snake-like architectures allows three-dimensional locomotion and many other tasks such as gripping, moving or transporting objects with the body. Advantages of snake-like motion: 1. Terrainability: Snake-like robots can traverse rough terrain: they can climb steps whose heights approach their longest linear dimension, pass soft or viscous materials, span gaps, etc. 2. Traction: Snakes can use almost their full body length to apply forces to the ground. 3. Efficiency: Low costs of body support, no cost of limb motion. But: high friction losses, lateral accelerations of the body.

Autonomous Navigation Field Results of a Planetary Analog Robot in Antarctica

AUTONOMOUS NAVIGATION FIELD RESULTS OF A PLANETARY ANALOG ROBOT IN ANTARCTICA. Stewart Moorehead, Reid Simmons, Dimitrios Apostolopoulos and William "Red" Whittaker. The Robotics Institute, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213, U.S.A. phone: 1-412-268-7086, fax: 1-412-268-5895, email: [email protected]; phone: 1-412-268-2621, fax: 1-412-268-5576, email: [email protected]; phone: 1-412-268-7224, fax: 1-412-268-1488, email: [email protected]; phone: 1-412-268-6556, fax: 1-412-682-1793, email: [email protected]. ABSTRACT: The Robotic Antarctic Meteorite Search at Carnegie Mellon is developing robotic technologies to allow for autonomous search and classification of meteorites in Antarctica. In November 1998, the robot Nomad was deployed in the Patriot Hills region of Antarctica to perform several demonstrations and experiments of these technologies in a polar environment. Nomad drove 10.3 km autonomously in Antarctica under a variety of weather and terrain conditions. This paper presents the results of this traverse, the ability of stereo vision and laser scanner to perceive polar terrain and the autonomous navigation system used. 1 INTRODUCTION. From the Lunokhods on the Moon to Sojourner on Mars [6], mobile robots have demonstrated their usefulness to planetary exploration. [...]tion in polar terrain and meteorite detection/classification [9]. Experiments were also performed on characterizing laser and stereo sensors [14], systematic patterned search [10], ice and snow mobility, landmark based navigation and millimeter wave radar [1]. Foot search by the expedition found two meteorites [5].

KANYOK, NATHAN J., MS, December 2019 COMPUTER SCIENCE

KANYOK, NATHAN J., M.S., December 2019, COMPUTER SCIENCE. SITUATIONAL AWARENESS MONITORING FOR HUMANS-IN-THE-LOOP OF TELEPRESENCE ROBOTIC SYSTEMS (80 pages). Thesis Advisor: Dr. Jong-Hoon Kim. Autonomous automobiles are expected to be introduced in increasingly complex increments until the system is able to navigate without human interaction. However, humanity is uncomfortable with relying on algorithms to make security critical decisions, which often have moral dimensions. Many robotic systems keep humans in the decision making loop due to their unsurpassed ability to perceive contextual information in ways we find relevant. It is likely that we will see transportation systems with no direct human supervision necessary, but these systems do not address our worry about moral decisions. Until we are able to embed moral agency in digital systems, human actors will be the only agents capable of making decisions with security-critical and moral components. Additionally, in order for a human to be in the position that we can have confidence in their decision, they must be situationally aware of the environment in which the decision will be made. Virtual reality as a medium can achieve this by allowing a person to be telepresent elsewhere. A telepresence dispatch system for autonomous transportation vehicles is proposed that places emphasis on situational awareness so that humans can properly be in the decision making loop. Pre-trial, in-trial, and post-trial metrics are gathered that emphasize human health and monitor situational awareness through traditional and novel approaches. SITUATIONAL AWARENESS MONITORING FOR HUMANS-IN-THE-LOOP OF TELEPRESENCE ROBOTIC SYSTEMS. A thesis submitted to Kent State University in partial fulfillment of the requirements for the degree of Master of Science by Nathan J.

arXiv:1606.05830v4

This paper has been accepted for publication in IEEE Transactions on Robotics. DOI: 10.1109/TRO.2016.2624754. IEEE Explore: http://ieeexplore.ieee.org/document/7747236/. Please cite the paper as: C. Cadena and L. Carlone and H. Carrillo and Y. Latif and D. Scaramuzza and J. Neira and I. Reid and J.J. Leonard, “Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age”, in IEEE Transactions on Robotics 32 (6) pp 1309-1332, 2016. bibtex: @article{Cadena16tro-SLAMfuture, title = {Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age}, author = {C. Cadena and L. Carlone and H. Carrillo and Y. Latif and D. Scaramuzza and J. Neira and I. Reid and J.J. Leonard}, journal = {{IEEE Transactions on Robotics}}, year = {2016}, number = {6}, pages = {1309–1332}, volume = {32}}. arXiv:1606.05830v4 [cs.RO] 30 Jan 2017. Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age. Cesar Cadena, Luca Carlone, Henry Carrillo, Yasir Latif, Davide Scaramuzza, José Neira, Ian Reid, John J. Leonard. Abstract: Simultaneous Localization And Mapping (SLAM) consists in the concurrent construction of a model of the environment (the map), and the estimation of the state of the robot moving within it. The SLAM community has made astonishing progress over the last 30 years, enabling large-scale real-world applications, and witnessing a steady transition of this technology to industry. I. INTRODUCTION. SLAM comprises the simultaneous estimation of the state of a robot equipped with on-board sensors, and the construction of a model (the map) of the environment that the

Systematic Configuration of Robotic Locomotion Dimitrios Apostolopoulos Carnegie Mellon University

Carnegie Mellon University, Research Showcase @ CMU. Robotics Institute, School of Computer Science, 1996. Systematic Configuration of Robotic Locomotion. Dimitrios Apostolopoulos, Carnegie Mellon University. Follow this and additional works at: http://repository.cmu.edu/robotics. Part of the Robotics Commons. This Technical Report is brought to you for free and open access by the School of Computer Science at Research Showcase @ CMU. It has been accepted for inclusion in Robotics Institute by an authorized administrator of Research Showcase @ CMU. For more information, please contact [email protected]. Systematic Configuration of Robotic Locomotion. Dimitrios Apostolopoulos. CMU-RI-TR-96-30. The Robotics Institute, Carnegie Mellon University, Pittsburgh, Pennsylvania 15213. July 26, 1996. © 1996 Dimitrios Apostolopoulos & Carnegie Mellon University. This research was sponsored by NASA under grant NAGW-3863. The views and conclusions contained in this document are those of the author and should not be interpreted as representing the official policies, either expressed or implied, of NASA or the United States Government. Abstract: Configuration of robotic locomotion is a process that formulates, rationalizes and validates the robot’s mobility system. The configuration design describes the type and arrangement of traction elements, chassis geometry, actuation schemes for driving and steering, articulation and suspension for three-dimensional motions on terrain. These locomotion attributes are essential to position and move the robot and to negotiate terrain. However, configuration of robotic locomotion does not just involve the electromechanical aspects of design. As such, configuration of robotic locomotion should also be responsive to the issue of robotability, which is the ability to accommodate sensing and teleoperation, and to execute autonomous planning in a reliable and efficient manner.

A Novel SLAM Quality Evaluation Method

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS. STOCKHOLM, SWEDEN 2019. A Novel SLAM Quality Evaluation Method. FELIX CAESAR. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE. A Novel SLAM Quality Evaluation Method. FELIX CAESAR. Master’s in System, Control and Robotics. Date: July 1, 2019. Supervisor: Jana Tumova. Examiner: John Folkesson. School of Electrical Engineering and Computer Science. Host company: ÅF. Swedish title: En ny metod för kvalitetsbedömning av SLAM. Abstract: Autonomous vehicles have grown into a hot topic in both research and industry. For a vehicle to be able to run autonomously, it needs several different types of systems to function properly. One of the most important of them is simultaneous localization and mapping (SLAM). It is used for estimating the pose of the vehicle and building a map of the environment around it based on sensor readings. In this thesis we have developed a novel approach to measure and evaluate the quality of a landmark-based SLAM algorithm in a static environment. The error measurement evaluation is a multi-error function and consists of the following error types: average pose error, maximum pose error, number of false negatives, number of false positives and an error relating to the distance when landmarks are added into the map. The error function can be tailored towards specific applications by setting different weights for each error. A small research concept car with several different sensors and an outside tracking system was used to create several datasets. The datasets include three different map layouts and three different power settings on the car’s engine to create a large variability in the datasets.