KANYOK, NATHAN J., MS, December 2019 COMPUTER SCIENCE

Total pages: 16

File type: PDF, size: 1020 Kb


Recommended publications


Artificial Intelligence and the Ethics of Self-Learning Robots (Shannon Vallor, Santa Clara University)

Santa Clara University Scholar Commons, Philosophy, College of Arts & Sciences, 10-3-2017.

Recommended citation: Vallor, S., & Bekey, G. A. (2017). Artificial Intelligence and the Ethics of Self-learning Robots. In P. Lin, K. Abney, & R. Jenkins (Eds.), Robot Ethics 2.0 (pp. 338–353). Oxford University Press.

This material was originally published in Robot Ethics 2.0, edited by Patrick Lin, Keith Abney, and Ryan Jenkins, and has been reproduced by permission of Oxford University Press. For permission to reuse this material, please visit http://www.oup.co.uk/academic/rights/permissions.

22. Artificial Intelligence and the Ethics of Self-Learning Robots. Shannon Vallor and George A. Bekey

The convergence of robotics technology with the science of artificial intelligence (or AI) is rapidly enabling the development of robots that emulate a wide range of intelligent human behaviors.¹ Recent advances in machine learning techniques have produced significant gains in the ability of artificial agents to perform or even excel in activities formerly thought to be the exclusive province of human intelligence, including abstract problem-solving, perceptual recognition, social interaction, and natural language use.

User Acceptance of Virtual Reality: An Extended Technology Acceptance Model (Camille Sagnier, Emilie Loup-Escande, Domitile Lourdeaux, Indira Thouvenin, Gérard Vallery)

To cite this version: Camille Sagnier, Emilie Loup-Escande, Domitile Lourdeaux, Indira Thouvenin, Gérard Vallery. User Acceptance of Virtual Reality: An Extended Technology Acceptance Model. International Journal of Human-Computer Interaction, Taylor & Francis, 2020, pp. 1-15. doi:10.1080/10447318.2019.1708612. HAL Id: hal-02446117 (https://hal.archives-ouvertes.fr/hal-02446117), submitted on 28 Jun 2021.

User acceptance of virtual reality: an extended technology acceptance model. Camille Sagnier (a, *), Emilie Loup-Escande (a, *), Domitile Lourdeaux (b), Indira Thouvenin (b) and Gérard Valléry (a).
(a) Center for Research on Psychology: Cognition, Psyche and Organizations (CRP-CPO EA 7273), University of Picardy Jules Verne, Amiens, France
(b) Sorbonne universités, Université de Technologie de Compiègne, CNRS UMR 7253 Heudiasyc, Compiègne, France
(*) Corresponding authors

Although virtual reality (VR) has many applications, only a few studies have investigated user acceptance of this type of immersive technology.

An Ethical Framework for Smart Robots (Mika Westerlund)

“Never underestimate a droid.” Leia Organa, Star Wars: The Rise of Skywalker

This article focuses on “roboethics” in the age of growing adoption of smart robots, which can now be seen as a new robotic “species”. As autonomous AI systems, they can collaborate with humans and are capable of learning from their operating environment, experiences, and human behaviour feedback in human-machine interaction. This enables smart robots to improve their performance and capabilities. This conceptual article reviews key perspectives on roboethics and establishes a framework to illustrate its main ideas and features. Building on previous literature, roboethics has four major types of implications for smart robots: 1) smart robots as amoral and passive tools, 2) smart robots as recipients of ethical behaviour in society, 3) smart robots as moral and active agents, and 4) smart robots as ethical impact-makers in society. The study contributes to current literature by suggesting that two underlying ethical and moral dimensions lie behind these perspectives, namely the “ethical agency of smart robots” and the “object of moral judgment”, and by considering what this could look like as smart robots become more widespread in society. The article concludes by suggesting how scientists and smart robot designers can benefit from the framework, discussing the limitations of the present study, and proposing avenues for future research.

Introduction

Robots are becoming increasingly prevalent in our daily, social, and professional lives, performing various work and household tasks, as well as operating driverless vehicles and public transportation systems (Leenes et al., 2017). …

… capabilities (Lichocki et al., 2011; Petersen, 2007). Hence, Lin et al. (2011) define a “robot” as an engineered machine that senses, thinks, and acts, thus being able to process information from sensors and other sources, such as an internal set of rules, either programmed or learned, that enables the machine to …
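Lin et al.'s definition of a robot as a machine that senses, thinks, and acts corresponds to the classic sense-think-act loop. The sketch below is a minimal, hypothetical illustration of that loop, not anything from Westerlund's article: the class name `SenseThinkActRobot`, the obstacle-distance reading, and the rule table are all assumptions. The "internal set of rules" is modeled as an ordered list of (condition, action) pairs, which could equally be hand-programmed or produced by a learning process.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical rule type: a condition over the current percept plus an action name.
Rule = Tuple[Callable[[Dict[str, float]], bool], str]


@dataclass
class SenseThinkActRobot:
    """Hypothetical robot structured after the sense-think-act definition."""
    rules: List[Rule]                 # "internal set of rules", programmed or learned
    default_action: str = "idle"
    log: List[str] = field(default_factory=list)

    def sense(self, raw: Dict[str, float]) -> Dict[str, float]:
        # Process information from sensors; this sketch just copies the readings.
        return dict(raw)

    def think(self, percept: Dict[str, float]) -> str:
        # Choose the action of the first rule whose condition holds.
        for condition, action in self.rules:
            if condition(percept):
                return action
        return self.default_action

    def act(self, action: str) -> None:
        # A real robot would drive actuators; here the choice is only recorded.
        self.log.append(action)

    def step(self, raw: Dict[str, float]) -> str:
        action = self.think(self.sense(raw))
        self.act(action)
        return action


# Hand-programmed rules; a learned policy could replace this list.
rules: List[Rule] = [
    (lambda p: p["obstacle_distance_m"] < 0.5, "stop"),
    (lambda p: p["obstacle_distance_m"] < 2.0, "slow_down"),
]
robot = SenseThinkActRobot(rules=rules, default_action="move_forward")
for distance in (3.0, 1.2, 0.3):
    robot.step({"obstacle_distance_m": distance})
print(robot.log)  # ['move_forward', 'slow_down', 'stop']
```

Keeping `think` separate from `sense` and `act` is what lets the same loop host either programmed or learned rules, which is the distinction the definition draws.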

Is Virtual Reality Sickness Elicited by Illusory Motion Affected by Gender and Prior Video Gaming Experience?

2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)

Katharina Margareta Theresa Pöhlmann* (University of Lincoln), Louise O’Hare (Nottingham Trent University), Julia Föcker (University of Lincoln), Adrian Parke (University of the West of Scotland), Patrick Dickinson (University of Lincoln)

ABSTRACT
Gaming using VR headsets is becoming increasingly popular; however, these displays can cause VR sickness. To investigate the effects of gender and gamer type on VR sickness, motion illusions are used as stimuli, being a novel method of inducing the perception of motion whilst minimising the “accommodation vergence conflict”. Females and those who do not play action games experienced more severe VR sickness symptoms than males and experienced action gamers. The interaction of gender and gamer type revealed that prior video gaming experience was beneficial for females; for males, however, it did not show the same positive effects.

… experience more VR sickness and sway than their male counterparts [11, 12, 14] and that motion sickness can be decreased with repeated exposure to a sickness-inducing environment [3, 5, 8]. Habituation to motion sickness has been shown when an individual is repeatedly exposed to the same sickness-inducing virtual (or real) environment. However, in our case we are interested in whether these adaptation effects also occur between different virtual environments; more precisely, whether individuals who spend a large amount of time playing action video games (not using VR headsets) build up habituation effects which translate to VR. Thus, our study investi…

A Survey on Simulation Sickness in Virtual Environments

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 7 July 2021 | doi:10.20944/preprints202107.0167.v1

A Survey on Simulation Sickness in Virtual Environments: Factors, Design and Best Practices

Ananth N. Ramaseri Chandra, Fatima El Jamiy, Hassan Reza. School of Electrical Engineering and Computer Science, University of North Dakota, Grand Forks, ND 58202

Abstract: Virtual reality (VR) is an emerging technology with a broad range of applications in training, entertainment, and business. To maximize the potential of virtual reality as a medium, the unwelcome feeling of simulation sickness needs to be minimized. Even with advancements in VR, usability concerns remain barriers to widespread acceptance. Several factors (hardware, software, and human) contribute to a pleasant VR experience. The reviewed scientific articles are mostly documents indexed in digital libraries. In this paper, we review the potential factors that cause simulation sickness and reduce the usability of virtual reality systems. Drawing on existing research, we review best practices from a developer's perspective and some of the safety measures a user must follow while using VR systems. Even when some of these guidelines and best practices are followed, VR environments do not guarantee a pleasant experience for users. The main motive for this work was the limited research on requirement specification, design, and development of VR environments for maximum usability and adaptability.

Index Terms: Virtual reality (VR), Virtual environment, Simulation sickness, Head mounted display (HMD), Usability, Design, Guidelines, User

1 INTRODUCTION
… its history, and how simulation sickness is a usability issue for virtual reality systems.

Nudging for Good: Robots and the Ethical Appropriateness of Nurturing Empathy and Charitable Behavior

Jason Borenstein* and Ron Arkin**

Predictions are being commonly voiced about how robots are going to become an increasingly prominent feature of our day-to-day lives. Beyond the military and industrial sectors, they are in the process of being created to function as butlers, nannies, housekeepers, and even as companions (Wallach and Allen 2009). The design of these robotic technologies and their use in these roles raise numerous ethical issues. Indeed, entire conferences and books are now devoted to the subject (Lin et al. 2014).¹ One particular under-examined aspect of human-robot interaction that requires systematic analysis is whether to allow robots to influence a user’s behavior for that person’s own good. However, an even more controversial practice is on the horizon and warrants attention: the ethical acceptability of allowing a robot to “nudge” a user’s behavior for the good of society. For the purposes of this paper, we will examine the feasibility of creating robots that would seek to nurture a user’s empathy towards other human beings. We specifically draw attention to whether it would be ethically appropriate for roboticists to pursue this type of design pathway. In our prior work, we examined the ethical aspects of encoding Rawls’ Theory of Social Justice into robots in order to encourage users to act in a more socially just way towards other humans (Borenstein and Arkin 2016). Here, we primarily limit the focus to the performance of charitable acts, which could shed light on a range of socially just actions that a robot could potentially elicit from a user and what the associated ethical concerns may be.

Pedagogical Aspects of VR Learning (KU Leuven)

VRinSight IO2 – Pedagogical aspects of VR learning, KU Leuven

Table of Contents
Introduction
1. VR/AR/MR
2. Virtual reality (VR)
3. Typical VR characteristics
4. Benefits of using VR in education
5. Benefits of VR for policy makers
6. Pitfalls of using VR in education
7. Barriers to VR adoption
8. Social VR
9. Using VR in education
Assignments

Robotics Laboratory List

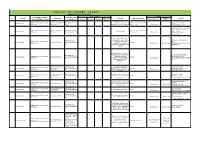

Robotics List (universities with robotics-related courses), by university

1. Chiba University
- Graduate School of Science and Engineering, Department of Mechanical Engineering (http://www.se.chiba-u.jp/en/). Keywords: Robotics, Dexterous Manipulation, Visual Recognition. Lab link: http://www.em.eng.chiba-u.jp/~namiki/index-e.html. Application deadline: Doctoral, June and December; Master's, June. English: Doctoral only.
- Graduate School of Science and Engineering, Department of Medical Engineering (http://www.tms.chiba-u.jp/english/index.html). Keywords: Surgical Robotics. Lab: Laboratory Innovative Therapeutic Engineering, directed by Prof. Ryoichi Nakamura (http://www.cfme.chiba-u.jp/~nakamura/). Application deadline: Doctoral, June and December; Master's, June. English: Doctoral only.

2. Chuo University (admissions: http://global.chuo-u.ac.jp/english/admissions/)
- Graduate School of Science and Engineering, Precision Mechanics. Keywords: Micro Electro Mechanical Systems, Micro Sensors, Micro Coil, Magnetic Resonance Imaging, Blood Pressure Measurement, Arterial Tonometry Method. Lab: Micro System Laboratory (Dohi Lab.), http://www.msl.mech.chuo-u.ac.jp/ (Japanese only). Application deadline: October. English: Doctoral only.
- Graduate School of Science and Engineering. Keywords: Assistive Robotics, Human-Robot Communication, Human-Robot Collaboration, Ambient … Lab: Human-Systems Laboratory, http://www.mech.chuo- …

Design and Control of a Large Modular Robot Hexapod

Matt Martone
CMU-RI-TR-19-79
November 22, 2019

The Robotics Institute, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA

Thesis Committee: Howie Choset (chair), Matt Travers, Aaron Johnson, Julian Whitman

Submitted in partial fulfillment of the requirements for the degree of Master of Science in Robotics.

Copyright © 2019 Matt Martone. All rights reserved.

To all my mentors: past and future

Abstract
Legged robotic systems have made great strides in recent years, but, unlike wheeled locomotion, limbed locomotion does not scale well. Long legs demand huge torques, driving up actuator size and onboard battery mass. This relationship results in massive structures that lack the safety, portability, and controllability of their smaller limbed counterparts. Innovative transmission design paired with unconventional controller paradigms are the keys to breaking this trend. The Titan 6 project endeavors to build a set of self-sufficient modular joints unified by a novel control architecture to create a spiderlike robot with two-meter legs that is robust, field-repairable, and an order of magnitude lighter than similarly sized systems. This thesis explores how we transformed desired behaviors into a set of workable design constraints, discusses our prototypes in the context of the project and the field, describes how our controller leverages compliance to improve stability, and delves into the electromechanical designs for these modular actuators that enable Titan 6 to be both light and strong.

Acknowledgments
This work was made possible by a huge group of people who taught and supported me throughout my graduate studies and my time at Carnegie Mellon as a whole.
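The abstract's claim that long legs demand huge torques follows from simple statics plus the square-cube law. The sketch below is a back-of-the-envelope model under stated assumptions, not the thesis's design method: each of three stance legs (tripod gait) carries an equal share of the weight, the lever arm is the foot's horizontal reach from the hip, and scaling a design up keeps its density constant. The function names and the example masses are hypothetical; only the two-meter leg length comes from the text.

```python
G = 9.81  # gravitational acceleration, m/s^2


def static_hip_torque(mass_kg: float, reach_m: float, stance_legs: int = 3) -> float:
    """Static hip torque (N*m) needed by one stance leg.

    Assumes the body weight is shared equally by `stance_legs` legs
    (three in a tripod gait) and that the lever arm is the horizontal
    distance from the hip joint to the foot.
    """
    load_per_leg_n = mass_kg * G / stance_legs
    return load_per_leg_n * reach_m


def torque_scale_factor(length_scale: float) -> float:
    """Growth of hip torque when every length is multiplied by length_scale.

    With constant density, mass grows with volume (length_scale**3) and the
    lever arm grows with length (length_scale), so torque grows as length_scale**4.
    """
    return length_scale ** 3 * length_scale


# Hypothetical robots: a 10 kg hexapod with 0.5 m reach, and the same design
# scaled up 4x in every dimension to reach 2 m. These are not Titan 6 data.
small = static_hip_torque(mass_kg=10.0, reach_m=0.5)
large = static_hip_torque(mass_kg=10.0 * 4 ** 3, reach_m=0.5 * 4)
print(f"small: {small:.1f} N*m, large: {large:.1f} N*m")
print(f"ratio: {large / small:.0f} (predicted {torque_scale_factor(4):.0f})")
```

Under these assumptions, growing the legs from 0.5 m to 2 m multiplies the required static torque by 4**4 = 256, while the leg only gets four times longer. That disproportion is the trend the abstract says lighter structures and unconventional control are meant to break.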
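The abstract says the Titan 6 controller leverages compliance to improve stability, but the excerpt does not describe that controller. As a generic illustration of what joint compliance means, the sketch below implements a textbook virtual spring-damper (impedance) law for a single joint and simulates it. This is a swapped-in standard technique, not the thesis's controller, and the class name, gains, and inertia are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JointImpedance:
    """Virtual spring-damper (impedance) law for a single joint.

    tau = stiffness * (q_des - q) - damping * q_dot
    A lower stiffness lets the joint yield more to external loads.
    """
    stiffness: float  # N*m/rad
    damping: float    # N*m*s/rad

    def torque(self, q_des: float, q: float, q_dot: float) -> float:
        return self.stiffness * (q_des - q) - self.damping * q_dot


def simulate(ctrl: JointImpedance, inertia: float, q0: float, q_des: float,
             tau_ext: float = 0.0, dt: float = 1e-3, steps: int = 5000) -> float:
    """Integrate one rigid joint with semi-implicit Euler; return the final angle (rad)."""
    q, q_dot = q0, 0.0
    for _ in range(steps):
        q_ddot = (ctrl.torque(q_des, q, q_dot) + tau_ext) / inertia
        q_dot += q_ddot * dt  # update velocity first ...
        q += q_dot * dt       # ... then position with the new velocity
    return q


# Hypothetical gains and inertia, not values from the thesis.
ctrl = JointImpedance(stiffness=50.0, damping=10.0)
print(simulate(ctrl, inertia=1.0, q0=0.3, q_des=0.0))               # settles near 0.0
print(simulate(ctrl, inertia=1.0, q0=0.0, q_des=0.0, tau_ext=5.0))  # settles near 5/50 = 0.1
```

Under a constant external torque, the joint settles at a deflection of `tau_ext / stiffness` instead of rigidly holding its setpoint. Lowering the stiffness trades position accuracy for the ability to absorb disturbances.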

New Method for Robotic Systems Architecture

NEW METHOD FOR ROBOTIC SYSTEMS ARCHITECTURE ANALYSIS, MODELING, AND DESIGN

By LU LI

Submitted in partial fulfillment of the requirements for the degree of Master of Science

Thesis Advisor: Dr. Roger Quinn
Department of Mechanical and Aerospace Engineering
CASE WESTERN RESERVE UNIVERSITY
August 2019

CASE WESTERN RESERVE UNIVERSITY
SCHOOL OF GRADUATE STUDIES
We hereby approve the thesis/dissertation of Lu Li, candidate for the degree of Master of Science.
Committee Chair: Dr. Roger Quinn
Committee Member: Dr. Musa Audu
Committee Member: Dr. Richard Bachmann
Date of Defense: July 5, 2019
*We also certify that written approval has been obtained for any proprietary material contained therein.

Table of Contents
Table of Contents
List of Tables
List of Figures
Copyright page
Preface
Acknowledgements

Virtual Reality Sickness During Immersion: an Investigation of Potential Obstacles Towards General Accessibility of VR Technology

Degree project (Examensarbete), 30 credits, August 2016

Virtual Reality sickness during immersion: An investigation of potential obstacles towards general accessibility of VR technology. A controlled study investigating the accessibility of modern VR hardware and the usability of HTC Vive’s motion controllers.

Dongsheng Lu

Abstract
2016 has been called the year of virtual reality. As the world's leading tech giants release their own virtual reality (VR) products, VR technology is now more available to the mass market than ever. However, the fact that the technology is becoming cheaper and thereby reaching a mass market does not in itself imply that long-standing usability issues with VR have been addressed. Problems regarding motion sickness (MS) and motion control (MC) have been two of the most important obstacles for VR technology in the past. The main research question of this study is: “Are there persistent universal access issues with VR related to motion control and motion sickness?” This study used a mixed-methods approach to investigate these two aspects. A literature review in the area of VR, MS and MC was followed by a quantitative controlled study and a qualitative evaluation. Thirty-two participants were carefully selected for this study and divided into different groups; the quantitative data collected from them were processed and analyzed using statistical tests. An interview was also carried out with all of the participants in order to gather more details about the usability of the motion controllers used in this study.

Analysis of User Preferences for Robot Motions in Immersive Telepresence

Katherine J. Mimnaugh (1), Markku Suomalainen (1), Israel Becerra (2), Eliezer Lozano (2), Rafael Murrieta-Cid (2), and Steven M. LaValle (1)

Abstract: This paper considers how the motions of a telepresence robot moving autonomously affect a person immersed in the robot through a head-mounted display. In particular, we explore the preference, comfort, and naturalness of elements of piecewise linear paths compared to the same elements on a smooth path. In a user study, thirty-six subjects watched panoramic videos of three different paths through a simulated museum in virtual reality and responded to questionnaires regarding each path. Preference for a particular path was influenced the most by comfort, forward speed, and characteristics of the turns. Preference was also strongly associated with the users’ perceived naturalness, which was primarily determined by the ability to see salient objects, the distance to the walls and objects, and the turns. Participants favored the paths that had a forward speed of one meter per second and rated the path with the fewest turns as the most comfortable.

I. INTRODUCTION
Immersive robotic telepresence enables people to embody a robot in a remote location, such that they can move around and feel as if they were really there instead of the robot. Currently, the most scalable technology with the potential to …

Fig. 1. Top: a screenshot from inside the gallery of the virtual museum, seen by the participant from the point of view of the virtual robot. Bottom left: a screenshot of the hallway entrance in the virtual museum. Bottom right: a participant watching a video of a path in VR during the user study.
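The study contrasts piecewise linear paths with a smooth path and reports that forward speed and turns shaped preference. As a rough illustration, the sketch below measures the length, traversal time at 1 m/s, and number of sharp turns of a hypothetical piecewise linear route, and derives a smooth variant with Chaikin corner cutting. Chaikin's algorithm is a swapped-in smoothing technique chosen for brevity; the excerpt does not say how the study's smooth path was generated, and the corridor coordinates and the 10-degree turn threshold are assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def path_length(path: List[Point]) -> float:
    """Total length of a polyline in meters."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def count_turns(path: List[Point], min_angle_deg: float = 10.0) -> int:
    """Count vertices where the heading changes by more than min_angle_deg."""
    turns = 0
    for a, b, c in zip(path, path[1:], path[2:]):
        h1 = math.atan2(b[1] - a[1], b[0] - a[0])
        h2 = math.atan2(c[1] - b[1], c[0] - b[0])
        # Wrap the heading change into [-pi, pi) before comparing.
        delta = (h2 - h1 + math.pi) % (2 * math.pi) - math.pi
        if abs(math.degrees(delta)) > min_angle_deg:
            turns += 1
    return turns


def chaikin(path: List[Point], iterations: int = 3) -> List[Point]:
    """Smooth a polyline by Chaikin corner cutting, keeping both endpoints."""
    for _ in range(iterations):
        smoothed = [path[0]]
        for (x0, y0), (x1, y1) in zip(path, path[1:]):
            smoothed.append((0.75 * x0 + 0.25 * x1, 0.75 * y0 + 0.25 * y1))
            smoothed.append((0.25 * x0 + 0.75 * x1, 0.25 * y0 + 0.75 * y1))
        smoothed.append(path[-1])
        path = smoothed
    return path


speed = 1.0  # m/s, the forward speed participants favored
corridor = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (8.0, 3.0)]  # hypothetical route
smooth = chaikin(corridor)
for name, p in (("piecewise linear", corridor), ("smoothed", smooth)):
    length = path_length(p)
    print(f"{name}: {length:.2f} m, {length / speed:.1f} s at {speed} m/s")
print("sharp turns in piecewise linear path:", count_turns(corridor))
```

Corner cutting spreads each 90-degree turn over several gentler heading changes and slightly shortens the route. That is one way to obtain a "smooth path" made of the same elements as a piecewise linear one.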