Robot Operating System - The Complete Reference (Volume 4). Part of the Studies in Computational Intelligence book series (SCI, volume 831)

Recommended publications

Introduction to ROS – Robot Operating System –

Introduction to ROS – Robot Operating System – Daniel Serrano Department of Intelligent Systems, ASCAMM Technology Center Parc Tecnològic del Vallès, Av. Universitat Autònoma, 23 08290 Cerdanyola del Vallès SPAIN [email protected] ABSTRACT This paper gives an introduction to the open-source Robot Operating System (ROS). ROS has been widely adopted by the research robotics community as it provides a powerful software framework to develop autonomous unmanned systems. In this paper, we discuss some of the components that are available on the ROS repository. 1.0 INTRODUCTION The Robot Operating System (ROS) [1] is an open source robot operating system. It provides libraries and tools to help software developers create robot applications. It offers hardware abstraction, device drivers, libraries, visualizers, message-passing, package management, and more. ROS is similar in some respects to 'robot frameworks,' such as Player, YARP, Orocos, CARMEN, Orca, MOOS, and Microsoft Robotics Studio. The primary goal of ROS is to support code reuse in robotics research and development. It has been quickly adopted by many robotics research institutions and companies as their standard development framework. The list of robots using ROS is extensive and includes platforms from diverse domains, ranging from aerial and ground robots to humanoids and underwater vehicles. To name a few, the humanoid robots PR-2 and REEM-C, ground robots such as the ClearPath platforms, and aerial platforms such as the ones from Ascending Technologies are fully developed in ROS. Furthermore, an extensive list of popular sensors for robots is already supported in ROS. Sensors such as Inertial Measurement Units, GPS receivers, cameras and lasers can already be accessed through the drivers widely available in the ROS system.

New Method for Robotic Systems Architecture

NEW METHOD FOR ROBOTIC SYSTEMS ARCHITECTURE ANALYSIS, MODELING, AND DESIGN By LU LI Submitted in partial fulfillment of the requirements For the degree of Master of Science Thesis Advisor: Dr. Roger Quinn Department of Mechanical and Aerospace Engineering CASE WESTERN RESERVE UNIVERSITY August 2019 CASE WESTERN RESERVE UNIVERSITY SCHOOL OF GRADUATE STUDIES We hereby approve the thesis/dissertation of Lu Li, candidate for the degree of Master of Science. Committee Chair: Dr. Roger Quinn. Committee Member: Dr. Musa Audu. Committee Member: Dr. Richard Bachmann. Date of Defense: July 5, 2019. *We also certify that written approval has been obtained for any proprietary material contained therein. Table of Contents: Table of Contents; List of Tables; List of Figures; Copyright page; Preface; Acknowledgements

Ashesi University College: The MOLAM Project

ASHESI UNIVERSITY COLLEGE THE MOLAM PROJECT: THE DESIGN OF A CONTEXT SPECIFIC NAVIGATION FRAMEWORK FOR A MOBILE ROBOT DANIEL NII TETTEY BOTCHWAY Dissertation submitted to the Department of Computer Science, Ashesi University College In partial fulfillment of Science degree in Computer Science APRIL 2013 Declaration I hereby declare that this dissertation is the result of my own original work and that no part of it has been presented for another degree in this university or elsewhere. Candidate’s Signature: … Candidate’s Name: … Date: … I hereby declare that the preparation and presentation of the dissertation were supervised in accordance with the guidelines on supervision of dissertation laid down by Ashesi University College. Supervisor’s Signature: … Supervisor’s Name: … Date: … Acknowledgements This dissertation would not have been possible without the guidance and the help of several individuals who in one way or another contributed and extended their valuable assistance in the preparation and completion of this project. First and foremost, my utmost gratitude goes to my supervisor and first robotics lecturer, Dr. G. Ayorkor Korsah, whose insightful directions and assistance made this project a rewarding journey. I am truly grateful to Mr. Aelaf Dafla, Dr. Nathan Amanquah, Prof. Edwin Kay, and basically all the lecturers in the Computer Science Department for their immense contributions to shaping my mind. I would also like to thank my colleagues and friends in the Computer Science Class of 2013 for all their inspiration as we overcame all obstacles during the last four years. Last but not least, I would like to thank my family and the one above all of us, the omnipresent God.

Robotic Paradigms and Control Architectures

Robotic Paradigms and Control Architectures Jan Faigl Department of Computer Science Faculty of Electrical Engineering Czech Technical University in Prague Lecture 02 B4M36UIR – Artificial Intelligence in Robotics Jan Faigl, 2020 B4M36UIR – Lecture 02: Robotic Paradigms

Overview of the Lecture. Part 1 – Robotic Paradigms and Control Architectures: Robotics Paradigms; Hierarchical Paradigm; Reactive Paradigm; Hybrid Paradigm; Example of Collision Avoidance; Robot Control.

Robot. A robot perceives an environment using sensors to control its actuators (Sensor → Controller → Actuators). The main parts of the robot correspond to the primitives of robotics: Sense, Plan, and Act. The primitives form a control architecture that is called a robotic paradigm.

Robotic Paradigms. Robotic paradigms define the relationship between the robotics primitives: Sense, Plan, and Act. Three fundamental paradigms have been proposed. 1. The hierarchical paradigm is a purely deliberative system (SENSE → PLAN → ACT). 2. The reactive paradigm represents reactive control (SENSE → ACT). 3.
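To make the difference between the first two paradigms concrete, here is a minimal Python sketch. It is not taken from the lecture: the one-dimensional "distance to obstacle" world, the function names, and the `safe_distance` threshold are all assumptions chosen for illustration. The hierarchical step always passes through an explicit plan, while the reactive step maps the sensor reading directly to an action.

```python
from typing import List


def sense(distance_to_obstacle: float) -> float:
    """SENSE: return the range reading (hypothetical, noise-free sensor)."""
    return distance_to_obstacle


def plan(reading: float, safe_distance: float = 1.0) -> List[str]:
    """PLAN: deliberate over the reading and produce a sequence of actions."""
    if reading <= safe_distance:
        return ["stop", "turn"]
    return ["forward"]


def act(action: str) -> str:
    """ACT: the toy 'actuator' only reports the command it received."""
    return f"executing {action}"


def hierarchical_step(distance: float) -> List[str]:
    """Hierarchical paradigm: SENSE, then PLAN, then ACT, strictly in order."""
    return [act(a) for a in plan(sense(distance))]


def reactive_step(distance: float, safe_distance: float = 1.0) -> str:
    """Reactive paradigm: SENSE coupled directly to ACT, with no plan."""
    reading = sense(distance)
    return act("turn" if reading <= safe_distance else "forward")


near = hierarchical_step(0.5)   # obstacle inside the safe distance
far = reactive_step(2.0)        # obstacle outside the safe distance
```

In this toy setting both loops avoid the obstacle; the point of the sketch is only where the decision is made, in a separate planning stage or in a direct sensor-to-action rule.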

Lab Lecture 1: Introduction to ROS

16-311-Q INTRODUCTION TO ROBOTICS LAB LECTURE 1: INTRODUCTION TO ROS INSTRUCTOR: GIANNI A. DI CARO

PROBLEM(S) IN ROBOTICS DEVELOPMENT In Robotics, before ROS • Lack of standards • Little code reusability • Constantly reinventing (or rewriting) device drivers, access to robot’s interfaces, management of on-board processes, inter-process communication protocols, … • Constantly re-coding standard algorithms • New robot in the lab (or in the factory): start re-coding (mostly) from scratch

ROBOT OPERATING SYSTEM (ROS) http://www.ros.org

WHAT IS ROS? • ROS is an open-source robot operating system • A set of software libraries and tools that help you build robot applications that work across a wide variety of robotic platforms • Originally developed in 2007 at the Stanford Artificial Intelligence Laboratory; development continued at Willow Garage • Since 2013 managed by OSRF (Open Source Robotics Foundation) Note: Some of the following slides are adapted from Roi Yehoshua

ROS MAIN FEATURES ROS has two "sides" • The operating system side, which provides standard operating system services such as: – hardware abstraction – low-level device control – implementation of commonly used functionality – message-passing between processes – package management • A suite of user-contributed packages that implement common robot functionality such as SLAM, planning, perception, vision, manipulation, etc.

ROS PHILOSOPHY • Peer to Peer – ROS systems consist of many small programs (nodes) which connect to each other and continuously exchange messages • Tools-based – There are many small, generic programs that perform tasks such as visualization, logging, plotting data streams, etc.
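The idea of small programs exchanging messages can be illustrated without ROS itself. The sketch below is a pure-Python toy and deliberately does not use the ROS API: `ToyBus`, the topic name `scan`, and the message contents are hypothetical, and everything runs inside one process, so it does not model networking, node discovery, or true peer-to-peer connections. It only shows the publish/subscribe pattern in which a sender and a receiver are coupled through a named topic rather than through direct calls.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Callback = Callable[[Any], None]


class ToyBus:
    """Hypothetical in-process stand-in for topic-based message passing."""

    def __init__(self) -> None:
        # Maps a topic name to the callbacks registered for it.
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        """Register a callback that is invoked for every message on the topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        """Deliver the message to every callback subscribed to the topic."""
        for callback in self._subscribers[topic]:
            callback(message)


# Example: a "laser" publisher and a "logger" subscriber that never
# reference each other, only the shared topic name.
bus = ToyBus()
received: List[Any] = []
bus.subscribe("scan", received.append)
bus.publish("scan", {"range_m": 0.8})
bus.publish("odom", {"x": 0.0})  # no subscriber on this topic, so it is dropped
```

Because the two sides only agree on a topic name and a message shape, either one can be replaced (for example by a logging or plotting tool) without changing the other, which is the property the slides attribute to the tools-based philosophy.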

Embodied Evolution in Collective Robotics: a Review Nicolas Bredeche, Evert Haasdijk, Abraham Prieto

Embodied Evolution in Collective Robotics: A Review Nicolas Bredeche, Evert Haasdijk, Abraham Prieto To cite this version: Nicolas Bredeche, Evert Haasdijk, Abraham Prieto. Embodied Evolution in Collective Robotics: A Review. Frontiers in Robotics and AI, Frontiers Media S.A., 2018, 5, pp.12. 10.3389/frobt.2018.00012. hal-03313845 HAL Id: hal-03313845 https://hal.sorbonne-universite.fr/hal-03313845 Submitted on 4 Aug 2021 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. REVIEW published: 22 February 2018 doi: 10.3389/frobt.2018.00012 Embodied Evolution in Collective Robotics: A Review Nicolas Bredeche1*, Evert Haasdijk2 and Abraham Prieto3 1 Sorbonne Université, CNRS, Institute of Intelligent Systems and Robotics, ISIR, Paris, France, 2 Computational Intelligence Group, Department of Computer Science, Vrije Universiteit, Amsterdam, Netherlands, 3 Integrated Group for Engineering Research, Universidade da Coruna, Ferrol, Spain This article provides an overview of evolutionary robotics techniques applied to online distributed evolution for robot collectives, namely, embodied evolution. It provides a definition of embodied evolution as well as a thorough description of the underlying concepts and mechanisms. This article also presents a comprehensive summary of research published in the field since its inception around the year 2000, providing various perspectives to identify the major trends.

Architecture of a Software System for Robotics Control

Architecture of a software system for robotics control Aleksey V. Shevchenko, Oksana S. Mezentseva, Dmitriy V. Mezentsev, Konstantin Y. Ganshin Information Systems Technologies Dept., NCFU, Stavropol City Abstract This paper describes the architecture and features of RoboStudio, a software system for robotics control that allows complete and simultaneous monitoring of all electronic components of a robotic system. This increases the safety of operating expensive equipment and allows for flexible configuration of different robotics components without changes to the source code. 1 Introduction Today, there are no clear standards for robotics programming. Each manufacturer creates their own software and hardware architecture based on their own ideas for optimizing the development process. Large manufacturers often buy their software systems from third parties, while small ones create theirs independently. As a result, the software often targets a specific platform (more often, a specific modification of a given platform). Thus, even minor upgrades of an existing robotic system often require a complete software rewrite. The lack of universal software products imposes large time and resource costs on developers, and often leads to the curtailment of promising projects. Some developers of robotic systems software partially solve the problem of universal software using the free Robot Operating System (ROS), which also has limitations and is not always able to satisfy all the requirements of the manufacturers. 2 Equipment The research was conducted using two robots produced by the SPA "Android Technics": the "Mechatronics" stand and the full-size anthropomorphic robot AR-601E (figure 1).

Design and Implementation of an Autonomous Robotics Simulator

DESIGN AND IMPLEMENTATION OF AN AUTONOMOUS ROBOTICS SIMULATOR by Adam Carlton Harris A thesis submitted to the faculty of The University of North Carolina at Charlotte in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering Charlotte 2011 Approved by: Dr. James M. Conrad, Dr. Ronald R. Sass, Dr. Bharat Joshi © 2011 Adam Carlton Harris ALL RIGHTS RESERVED ABSTRACT ADAM CARLTON HARRIS. Design and implementation of an autonomous robotics simulator. (Under the direction of DR. JAMES M. CONRAD) Robotics simulators are important tools that can save both time and money for developers. Being able to accurately and easily simulate robotic vehicles is invaluable. In the past two decades, corporations, robotics labs, and software development groups have released many robotics simulators to developers. Commercial simulators have proven to be very accurate and many are easy to use; however, they are closed source and generally expensive. Open source simulators have recently had an explosion of popularity, but most are not easy to use. This thesis describes the design criteria and implementation of an easy-to-use open source robotics simulator. SEAR (Simulation Environment for Autonomous Robots) is designed to be an open source, cross-platform 3D robotics simulator written in Java using jMonkeyEngine3 and the Bullet Physics engine. Users can import custom-designed 3D models of robotic vehicles and terrains to be used in testing their own robotics control code. Several sensor types (GPS, triple-axis accelerometer, triple-axis gyroscope, and a compass) have been simulated, and early work on infrared and ultrasonic distance sensors as well as LIDAR simulators has been undertaken.

ROS History and Basics

Computer Vision Laboratory Robot Vision Systems Lecture 9: ROS History and Basics Michael Felsberg [email protected] Goals • System design and programming in –OpenCV 3.0 rc1 –ROS (robot operating system) Indigo (Jade ?) • focus part 2: robot vision systems –distributed computing with ROS –efficient use of OpenCV in ROS • access to robotic hardware is not part of the course – make use of available resources from your lab • simulation as fallback Organization • lectures –systems basics in ROS Jade –Python introduction given by Hannes • seminars –participants who only participate in the ROS part (and did not read the OpenCV course): one seminar presentation required for credits • exercises –installation of ROS –going through essential first steps • project (example application) Organization • credits: 9hp if –project work –80% presence –one seminar presentation • without the project work: 6hp • note: if you have participated in the course ‘visual computing with OpenCV’, you can only get 6hp (3hp without project) • ‘listen-only’: 0hp What is ROS? • Open Source Library for robotics middleware • Stanford AI, now supported by Willow Garage / Open Source Robotics Foundation • Free for use under the open source BSD license (most parts) • Linux (Ubuntu) –Experimental support Win, Mac, other Linux –rosjava (platform independent) Android, Matlab History of ROS • 2007: “Switchyard” by the Stanford Artificial Intelligence Laboratory • 2008-2013: development primarily at Willow Garage • Since 2013: Open Source Robotics Foundation Versions • 2010:

Botball Kit for Teaching Engineering Computing

Botball Kit for Teaching Engineering Computing David P. Miller, School of AME, University of Oklahoma, Norman, OK 73019; Charles Winton, Department of CIS, University of N. Florida, Jacksonville, FL 32224 Abstract Many engineering classes can benefit from hands-on use of robots. The KISS Institute Botball kit has found use in many classes at a number of universities. This paper outlines how the kit is used in a few of these different classes at a couple of different universities. This paper also introduces the Collegiate Botball Challenge, and how it can be used as a class project. 1 Introduction Introductory engineering courses are used to teach general principles while introducing the students to all of the engineering disciplines. Robotics, as a multi-disciplinary application, can be an ideal subject for projects that stress the different engineering fields. A major consideration in establishing a robotics course emphasizing mobile robots is the type of hands-on laboratory experience that will be incorporated into the course of instruction. Most electrical engineering schools lack the machine shops and expertise needed to create the mechanical aspects of a robot system. Most mechanical engineering schools lack the electronics labs and expertise needed for the actuation, sensing and computational aspects required to support robotics work. The situation is even more dire for most computer science schools. Computer science departments typically do not have the support culture for the kind of laboratories that are more typically associated with engineering programs. On the other hand, it is recognized that computer science students need courses which provide closer-to-real-world experiences via representative hands-on exercises.

Key Considerations When Choosing a Robot's Operating System

Key considerations when choosing a robot’s operating system August 2018 Introduction Robots have been the subject of science fiction films for decades, but with technological advances, including the rise of internet connected devices, a real robotic revolution is beginning to emerge. Converging with the growth of artificial intelligence and machine learning, robots are being adopted in every sector imaginable, including consumer, industrial, healthcare, and retail. According to IDC, worldwide robotic spending, encompassing hardware, software and related services, is set to reach US$230.7bn by 2021. Due to the sizeable market potential, demand, and evolution of technology, companies are seizing the opportunity to build robots. However, as with many other initiatives in the wider Internet of Things (IoT) industry, there are key decisions that robotic manufacturers need to make from the development stage onwards to ensure they are building a sustainable, future-proof, and secure robot. One of the most important decisions to be made is that of the robot’s operating system. Unfortunately, this importance is not always obvious until the company is too invested to change it, which can lead to a slew of delays or issues to overcome. The operating system that’s perfect for hacking things together may be impossible to maintain once the robot reaches production. Similarly, the build-your-own option means maintaining the entire operating system (backporting upstream security updates, etc.) for the lifetime of the robot. The rock-solid, stable option may end up having versions of dependencies that are too old to use. That snazzy new one created by a niche startup that seems to tick all the boxes is less appealing if they suddenly go out of business.

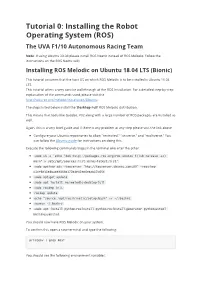

Tutorial 0: Installing the Robot Operating System (ROS) – The UVA F1/10 Autonomous Racing Team

Tutorial 0: Installing the Robot Operating System (ROS) The UVA F1/10 Autonomous Racing Team

Note: If using Ubuntu 20.04, please install ROS Noetic instead of ROS Melodic, following the instructions on the ROS Noetic wiki.

Installing ROS Melodic on Ubuntu 18.04 LTS (Bionic)

This tutorial assumes that the host OS on which ROS Melodic is to be installed is Ubuntu 18.04 LTS. It offers a very concise walkthrough of the ROS installation. For a detailed step-by-step explanation of the commands used, please visit http://wiki.ros.org/melodic/Installation/Ubuntu

The steps listed below install the ‘Desktop-Full’ ROS Melodic distribution. This means that tools like Gazebo and rViz, along with a large number of ROS packages, are installed as well. Again, this is a very brief guide; if there is a problem at any step, please visit the link above.

Configure your Ubuntu repositories to allow "restricted," "universe," and "multiverse." You can follow the Ubuntu guide for instructions on doing this.

Execute the following commands in the terminal, one after the other:

    sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/ros-latest.list'
    sudo apt-key adv --keyserver 'hkp://keyserver.ubuntu.com:80' --recv-key C1CF6E31E6BADE8868B172B4F42ED6FBAB17C654
    sudo apt-get update
    sudo apt install ros-melodic-desktop-full
    sudo rosdep init
    rosdep update
    echo "source /opt/ros/melodic/setup.bash" >> ~/.bashrc
    source ~/.bashrc
    sudo apt install python-rosinstall python-rosinstall-generator python-wstool build-essential

You should now have ROS Melodic on your system.
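After an installation like this one, a common source of confusion is a shell startup file that sources the setup script of a different ROS distribution than the one that was installed. The following Python sketch is not part of the tutorial; the function names and the sample text are hypothetical. It scans the text of a `.bashrc`-style file for `source /opt/ros/<distro>/setup.bash` lines and reports any distribution name that differs from the expected one.

```python
import re
from typing import List

# Matches lines such as "source /opt/ros/melodic/setup.bash" (or ". /opt/ros/...").
# Commented-out lines do not match because of the anchored pattern.
SOURCE_LINE = re.compile(
    r"^\s*(?:source|\.)\s+/opt/ros/([a-z]+)/setup\.(?:bash|sh|zsh)\s*$"
)


def sourced_distros(bashrc_text: str) -> List[str]:
    """Return the ROS distribution names sourced in the given file text."""
    found = []
    for line in bashrc_text.splitlines():
        match = SOURCE_LINE.match(line)
        if match:
            found.append(match.group(1))
    return found


def mismatched_distros(bashrc_text: str, installed: str) -> List[str]:
    """Return sourced distribution names that differ from the installed one."""
    return [d for d in sourced_distros(bashrc_text) if d != installed]


# Hypothetical file contents used only to exercise the functions.
sample = "export EDITOR=vim\n# source /opt/ros/indigo/setup.bash\nsource /opt/ros/kinetic/setup.bash\n"
problems = mismatched_distros(sample, installed="melodic")
```

To check a real machine, one could pass the contents of `~/.bashrc` (for example via `pathlib.Path.home() / ".bashrc"`) instead of the sample string; an empty result means every sourced setup file belongs to the expected distribution.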