Databases and Information Systems Balticdb&IS'2012

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Best Practices on Public Warning Systems for Climate-Induced

Best Practices on Public Warning Systems for Climate-Induced Natural Hazards. Abstract: This study presents an overview of the Public Warning System, focusing on approaches, technical standards and communication systems related to the generation and public sharing of early warnings. The analysis focuses on defining a set of best practices and guidelines for implementing an effective public warning system that can be deployed at multiple geographic scales, from local communities up to the national and transboundary levels. Finally, a set of recommendations is provided to support decision makers in upgrading the national Public Warning System and to help policy makers in outlining future directives. Authors: Claudio Rossi, Giacomo Falcone, Antonella Frisiello, Fabrizio Dominici. Version: 30 September 2018. Table of Contents: List of Figures; List of Tables; Acronyms; Core Definitions; 1. Introduction

Mandate M/487 to Establish Security Standards Final Report Phase 2

In assignment of: European Commission DG Enterprise and Industry, Security Research and Development. Mandate M/487 to Establish Security Standards, Final Report Phase 2: Proposed standardization work programmes and road maps. NEN Industry, P.O. Box 5059, 2600 GB Delft; Vlinderweg 6, 2623 AX Delft, The Netherlands. T +31 15 2690135, F +31 15 2690207, [email protected], www.nen.nl. Netherlands Standardization Institute. M/487 has been accepted by the European Standards Organizations (ESOs). The work has been allocated to CEN/TC 391 ‘Societal and Citizen Security’ whose secretariat is provided by the Netherlands Standardization Institute (NEN). Report version: Final report. Report date: 05-07-2013. The copyright on this document produced in the framework of M/487 response, consisting of contributions from CEN/TC 391 and other security stakeholders shall remain the exclusive property of CEN and/or CENELEC and/or ETSI in any and all countries. Although the utmost care has been taken with this publication, errors and omissions cannot be entirely excluded. The European Committee for Standardization (CEN) and/or the members of the committees therefore accept no liability, not even for direct or indirect damage, occurring due to or in relation with the application of publications issued by the European Committee for Standardization (CEN). Contents: Executive summary

Business Continuity and Disaster Recovery Plan for Information Security Vyshnavi Jorrigala St

St. Cloud State University, theRepository at St. Cloud State. Culminating Projects in Information Assurance, Department of Information Systems, 12-2017. Business Continuity and Disaster Recovery Plan for Information Security. Vyshnavi Jorrigala, St. Cloud State University, [email protected]. Follow this and additional works at: https://repository.stcloudstate.edu/msia_etds Recommended Citation: Jorrigala, Vyshnavi, "Business Continuity and Disaster Recovery Plan for Information Security" (2017). Culminating Projects in Information Assurance. 44. https://repository.stcloudstate.edu/msia_etds/44 This Starred Paper is brought to you for free and open access by the Department of Information Systems at theRepository at St. Cloud State. It has been accepted for inclusion in Culminating Projects in Information Assurance by an authorized administrator of theRepository at St. Cloud State. For more information, please contact [email protected]. Business Continuity and Disaster Recovery Plan for Information Security by Vyshnavi Devi Jorrigala. A Starred Paper Submitted to the Graduate Faculty of Saint Cloud State University in Partial Fulfillment of the Requirements for the Degree of Master of Science in Information Assurance, December, 2018. Starred Paper Committee: Susantha Herath, Chairperson; Dien D. Phan; Balasubramanian Kasi. Abstract: Business continuity planning and disaster recovery planning are the most crucial elements of a business but are often ignored. Businesses must make a well-structured plan and document for disaster recovery and business continuation, even before a catastrophe occurs. Disasters can be short or may last for a long time, but when an organization is ready for any adversity, it thrives hard and survives.
This paper will clearly distinguish between the disaster recovery plan and the business continuity plan, will describe the components of each plan and, finally, will provide an approach that organizations can follow to make a better contingency plan so that they will not go out of business when something unexpected happens.

Document Number Title Most Recent Publication Date Scope Sector 1 Sector 2 Sector 3 Sector 4 Contents

Columns: Document Number; Title; Most Recent Publication Date; Scope; Sector 1; Sector 2; Sector 3; Sector 4; Contents.

- ARINC 654. Title: ENVIRONMENTAL DESIGN GUIDELINES FOR INTEGRATED MODULAR AVIONICS PACKAGING AND INTERFACES. Most recent publication date: 9/12/1994. Scope: Refers electromagnetic compatibility, shielding, thermal management, vibration and shock of IMA systems. Emphasis is placed both on the design of IMA components and their electrical, optical and electro-mechanical interfaces. Sector: Transportation Systems. Contents: 1.0 INTRODUCTION; 1.1 Objectives; 1.2 Scope; 1.3 References; 2.0 VIBRATION AND SHOCK; 2.1 Introduction; 2.2 Vibration and Shock Isolation; 3.0 THERMAL CONSIDERATIONS; 3.1 Thermal Management; 3.1.1 Electronic System Thermal Design Objectives; 3.1.2 Design Condition Definitions; 3.1.3 Air Flow; 3.1.4 Fully Enclosed and Flow-Through Cooling; 3.1.5 Thermal Design Conditions; 3.1.6 Cooling Hole Sizes - Limit Cases; 3.2 Electronic Parts Application; 3.3 Ambient Temperatures; 3.4 Equipment Sidewall Temperature; 3.5 LRM Thermal Appraisal; 3.6 Thermal Interface Information; 3.7 Materials for Thermal Design; 4.0 DESIGN LIFE; 4.1 Operational Design Life; 4.2 Failure Modes.
- ARINC 666. Title: ITEM 7.0 Encryption and Authentication. Most recent publication date: 2002. Sector: Information Technology.
- ATIS 0300100. Title: IP NETWORK DISASTER RECOVERY FRAMEWORK. Most recent publication date: 1/12/2009. Scope: Pertains to enumerate potential proactive or automatic policy-driven network traffic management actions that should be performed prior to, during, and immediately following disaster conditions. Sectors: Information Technology; Emergency Services.
- ATIS 0300202. Title: Internetwork Operations Guidelines for Network Management of the Public Telecommunications Networks under Disaster Conditions. Most recent publication date: 1/11/2009. Scope: Describes the cooperative network management actions (that may be) required of interconnected network operators during emergency conditions associated with disasters that threaten life or property and cause congestion in the public telecommunications networks. Sectors: Information Technology; Emergency Services; communications.

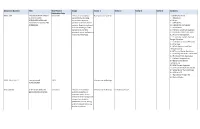


Analysis of Relevant Standards Which Was Conducted by Using the Standards Database Perinorm [2]

D6.1 EXISTING STANDARDS AND STANDARDIZATION ACTIVITIES REPORT. SMART MATURE RESILIENCE. DIN | 31/05/2016. www.smr-project.eu. Existing standards and standardization activities report. Deliverable no.: D6.1. Work package: WP6. Dissemination level: Public. Author(s): René Lindner and Bernhard Kempen (DIN). Co-author(s): Maider Sainz (TECNUN). Date: 31/05/2016. File name: D6.1.SMR_Existing_standards_report. Reviewed by (if applicable): Jose J. Gonzalez (CIEM), Torbjørg Træland Meum (CIEM), Jaziar Radianti (CIEM), Vasileios P. Latinos (ICLEI). This document has been prepared in the framework of the European project SMR – SMART MATURE RESILIENCE. This project has received funding from the European Union’s Horizon 2020 Research and Innovation programme under Grant Agreement no. 653569. The sole responsibility for the content of this publication lies with the authors. It does not necessarily represent the opinion of the European Union. Neither the REA nor the European Commission is responsible for any use that may be made of the information contained therein. Funded by the Horizon 2020 programme of the European Union. EXECUTIVE SUMMARY: The aim of this report is to disseminate knowledge about relevant existing standards and standardization activities amongst project partners and to support awareness raising about possible missing standards. Thus this document will show the current resilience and Smart City standardization landscape, specifically taking into consideration the security aspects, and will list and briefly assess the standards relevant for this project.

KRITIS Standards Matrix (KRITIS-Normen-Matrix), as of 2021-07-14

KRITIS Standards Matrix (KRITIS-Normen-Matrix), as of 2021-07-14. Columns: Standard; responsible committee/title of the standard; KRITIS sector (information technology/telecommunications; state and administration; energy; transport and traffic; health; water; food; finance and insurance; media and culture).

Committee: DIN-Normenausschuss Feuerwehrwesen (FNFW), NA 031-05-02 AA, organizational and management standards for civil protection.

- DIN CEN/TS 17091:2019-01: Crisis management - Strategic principles
- DIN EN ISO 22300:2021-06: Security and resilience - Vocabulary
- DIN EN ISO 22301:2020-06: Security and resilience - Business continuity management system - Requirements
- DIN EN ISO 22313:2020-10: Security and resilience - Business continuity management system - Guidance on the use of ISO 22301
- DIN EN ISO 22315:2018-12: Societal security - Mass evacuation - Guidelines for planning
- E DIN EN ISO 22319:2021-02: Security and resilience - Community resilience - Guidelines for planning the involvement of spontaneous volunteers
- DIN ISO 22320:2019-07: Security and resilience - Emergency management - Guidelines for organizing emergency response to incidents
- ISO 22322:2015-05: Societal security - Emergency management - System for warning the public
- ISO/DIS 22329:2020-12: Security and resilience - Emergency management - Guidelines for the use of social media in emergency management
- ISO/CD 22361: Crisis management: Guidelines for developing a strategy
- ISO/TS 22393: Security and resilience - Community resilience - Guidelines

ISO Committee on Consumer Policy (COPOLCO) 38th Meeting Geneva, Switzerland 17 June 2016

ISO Committee on consumer policy (COPOLCO), 38th meeting, Geneva, Switzerland, 17 June 2016. Working documents: Agenda item 1, Welcome and opening of the meeting; Agenda item 2, Adoption of the agenda. COPOLCO N203/2016: Draft agenda for the 38th meeting of COPOLCO, 17 June 2016, Mövenpick Hotel, Geneva, Switzerland, starting at 9:00.

| Item | Subject | Document | Action | Rapporteur |
|---|---|---|---|---|
| 1. | Welcome and opening of the meeting | - | N | R. Nadarajan, SNV representative, K. McKinley |
| 2. | Adoption of the agenda | N203 | C | R. Nadarajan |
| 3. | Key developments across ISO: Strategies and programmes; TMB – issues/updates on standards development environment and stakeholder engagement; DEVCO/Academy events; CASCO – issues and events | Oral report | C | O. Peyrat |
| 4. | Tabling of the minutes of the 37th COPOLCO meeting held in Geneva on 14 May 2015 | N184 | C | R. Nadarajan |
| 5. | Chair’s and Secretary's reports on items not otherwise covered in the agenda | N204 | C | R. Nadarajan, D. Kissinger-Matray |
| | New work items and issues – general matters | | | |
| 6. | Raising the profile of consumer interests in the ISO system (awareness and capacity building) | N205 | D | R. Devi Nadarajan, D. Kissinger-Matray |
| 7. | Strategy implementation for ISO/COPOLCO | N206 | D | R. Devi Nadarajan |
| 8. | 2016 workshop – Results and follow-up actions | N207 | D | R. Nadarajan, K. McKinley |
| 9. | Consumer priorities in standardization on services | N208 | D | A. Pindar, Liu Chengyang |
| | New work items and issues – working groups | | | |
| 10. | Revision of ISO/IEC Guide 76 | N209 | D | A. Pindar, Liu Chengyang |
| 11. | Key areas working group | N210 | D | M. Murvold, T. Nakakuki |
| 12. | Consumer protection in the global marketplace working | | | |

How Standards Contribute to Business Resilience in Crisis Situations

Physikalisch-Technische Bundesanstalt, Braunschweig und Berlin, National Metrology Institute. INTERNATIONAL COOPERATION. Current Situation: How Standards Contribute to Business Resilience in Crisis Situations. Niels Ferdinand, Richard Prem. On behalf of the Federal Government of Germany, the Physikalisch-Technische Bundesanstalt promotes the improvement of the framework conditions for economic, social and environmentally friendly action and thus supports the development of quality infrastructure. CONTENTS: 1. Background; 2. The significance of standards on increasing business resilience; 2.1. Defining resilience and business continuity management; 2.2. Fields of action for increasing business resilience; 2.3. The correlation between standardisation and business resilience; 2.4. Risk management in standardisation; 3. Specific standards for resilience and business continuity management; 3.1. Topic overview for specific standards; 3.2. Effectiveness of standards in social and economic crises; Abbreviations; References; An overview of existing standards, specifically in regard to business resilience; E-Learning resources; Notes. 1. Background: The global corona virus crisis is presenting enormous challenges for companies worldwide. Companies in developing and emerging nations are especially affected, as the crisis has severe ramifications for them, and they have less access to support. This raises the question of how we can use standardisation to improve the resilience of com- This paper summarises the current state of affairs of standardisation in the area of business resilience. It will explain the general contributions standardisation can make towards promoting resilience. On this basis, it will give an overview to specific standards relevant to business resilience.

Volume 4, No. 4, April 2013, ISSN 2226-1095

Volume 4, No. 4, April 2013, ISSN 2226-1095. YOU. #contents: #me, Me? – Why should I care?; #worldscene, International events and international standardization; #guestinterview, By you; #you, For you; #fun | Adventure tourism – More excitement, less risk; #fun | Roller-coaster thrills – Amusement for all ages without danger. ISO Focus+ is published 10 times a year (single issues: July-August, November-December). It is available in English and French. www.iso.org/isofocus+ ISO Update: www.iso.org/isoupdate The electronic edition (PDF file) of ISO Focus+ is accessible free of charge on the ISO Website www.iso.org/isofocus+ An annual subscription to the paper edition costs 38 Swiss francs. #me, Me? Why should I care? You may be wondering why you need to learn about standards. There’s no denying they are a good idea, but it’s the experts’ job to deal with them, right? Wrong. Standards concern us all. Why? Because we are the ultimate [...] about them because they are sources of international best practice and can open up doors to global markets. 3. You should get involved. You have the

Real-Time Code Generation in Virtualizing Runtime Environments

Department of Computer Science. Real-time Code Generation in Virtualizing Runtime Environments. Dissertation submitted in fulfillment of the requirements for the academic degree doctor of engineering (Dr.-Ing.) to the Department of Computer Science of the Chemnitz University of Technology from: Dipl.-Inf. (Univ.) Martin Däumler, born on: 03.01.1985, born in: Plauen. Assessors: Prof. Dr.-Ing. habil. Matthias Werner, Prof. Dr. rer. nat. habil. Wolfram Hardt. Chemnitz, March 3, 2015. Acknowledgements: The work on this research topic was a professional and personal development, rather than just writing this thesis. I would like to thank my doctoral adviser Prof. Dr. Matthias Werner for the excellent supervision and the time spent on numerous professional discussions. He taught me the art of academia and research. I would like to thank Alexej Schepeljanski for the time spent on discussions, his advice on technical problems and the very good working climate. I also thank Prof. Dr. Wolfram Hardt for the feedback and the cooperation. I am deeply grateful to my family and my girlfriend for the trust, patience and support they gave to me. Abstract: Modern general purpose programming languages like Java or C# provide a rich feature set and a higher degree of abstraction than conventional real-time programming languages like C/C++ or Ada. Applications developed with these modern languages are typically deployed via platform independent intermediate code. The intermediate code is typically executed by a virtualizing runtime environment. This allows for a high portability. Prominent examples are the Dalvik Virtual Machine of the Android operating system, the Java Virtual Machine as well as Microsoft .NET’s Common Language Runtime.


Request for Proposal for Selection of System Integrator for Implementation of [City] Smart City Solutions

Request for proposal for Selection of System Integrator for Implementation of [city] Smart City Solutions. Volume 2: Scope of Work. Contents: 1. Project Scope of Work; 1.1.1 Overview; 1.1.2 Solution requirements; 1.1.3 Scope of Work; 1.2. SOLUTION 2 – City WiFi; 1.2.1 Overview; 1.2.2 Solution requirements; 1.2.3 Scope of Work; 1.3 SOLUTION 3 – City Surveillance; 1.3.1 Overview

“Samsung Gets Great Value and Benefit from ISO Standards.”

Volume 3, No. 6, June 2012, ISSN 2226-1095. Innovation. • Samsung CEO: “Samsung gets great value and benefit from ISO standards.” • ISO 20121 for sustainable events. Contents: Comment, Dr. Boris Aleshin, ISO President 2011-2012, ISO standards – In support of innovation; World Scene, International events and international standardization; Guest Interview, Geesung Choi – Samsung Electronics; Special Report, International Standards – A benchmark for innovation. ISO Focus+ is published 10 times a year (single issues: July-August, November-December). It is available in English and French. Bonus articles: www.iso.org/isofocus+ ISO Update: www.iso.org/isoupdate The electronic edition (PDF file) of ISO Focus+ is accessible free of charge on the ISO Website www.iso.org/isofocus+ An annual subscription to [...] Comment: ISO standards – In support of innovation. Innovation emerges at every moment, at every location in the world. As Theodore Levitt, former economist and professor at Harvard Business School said: “Just as energy is the basis of life itself, and ideas the sources of innovation, so is innovation the vital spark of all hu- [...] goal, ISO is working on ways to innovate and improve the standards development process, to shorten development timetables while providing higher quality documents and improving the relevance of the work