Automatic Diacritization of Tunisian Dialect Text Using Recurrent Neural Network

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Alif and Hamza Alif) Is One of the Simplest Letters of the Alphabet

’alif and hamza. ﺍ (’alif) is one of the simplest letters of the alphabet. Its isolated form is simply a vertical stroke, written from top to bottom. In its final position it is written as the same vertical stroke, but joined at the base to the preceding letter. Because of this connecting line – and this is very important – it is written from bottom to top instead of top to bottom. Practise these to get the feel of the direction of the stroke. The letter 'alif is one of a number of non-connecting letters. This means that it is never connected to the letter that comes after it. Non-connecting letters therefore have no initial or medial forms. They can appear in only two ways: isolated or final, meaning connected to the preceding letter. Reminder about pronunciation: The letter 'alif represents the long vowel aa. Usually this vowel sounds like a lengthened version of the a in pat. In some positions, however (we will explain this later), it sounds more like the a in father. One of the most important functions of 'alif is not as an independent sound but as the carrier, or a ‘bearer’, of another letter: hamza (ﺀ). You can look back at what we said about hamza. Later we will discuss hamza in more detail. Here we will go through one of the most common uses of hamza: its combination with 'alif at the beginning of a word. One of the rules of the Arabic language is that no word can begin with a vowel. Many Arabic words may sound to the beginner as though they start with a vowel, but in fact they begin with a glottal stop: that little catch in the voice that is represented by hamza. -

Word Stress and Vowel Neutralization in Modern Standard Arabic

Word Stress and Vowel Neutralization in Modern Standard Arabic Jack Halpern (春遍雀來) The CJK Dictionary Institute (日中韓辭典研究所) 34-14, 2-chome, Tohoku, Niiza-shi, Saitama 352-0001, Japan [email protected] Abstract: Word stress in Modern Standard Arabic is of great importance to language learners, while precise stress rules can help enhance Arabic speech technology applications. Though Arabic word stress and vowel neutralization rules have been the object of various studies, the literature is sometimes inaccurate or contradictory. Most Arabic grammar books give stress rules that are inadequate or incomplete, while vowel neutralization is hardly mentioned. The aim of this paper is to present stress and neutralization rules that are both linguistically accurate and pedagogically useful based on how spoken MSA is actually pronounced. 1 Introduction: Word stress in both Modern Standard Arabic (MSA) and the dialects is non-phonemic. … rules differ somewhat from those used in liturgical Arabic. Arabic word stress and vowel neutralization rules have been the object of various studies, such as Janssens (1972), Mitchell (1990) and Ryding (2005). Though some grammar books offer stress rules that appear short and simple, upon careful examination they turn out to be incomplete, ambiguous or inaccurate. Moreover, the linguistic literature often contains inaccuracies, partially because little or no distinction is made between MSA and liturgical Arabic, or because the rules are based on Egyptian-accented MSA (Mitchell, 1990), which differs from standard MSA in important ways. Arabic stress and neutralization rules are worthy of serious investigation. Other than being of -

Mpub10110094.Pdf

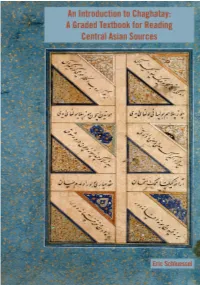

An Introduction to Chaghatay: A Graded Textbook for Reading Central Asian Sources. Eric Schluessel. Copyright © 2018 by Eric Schluessel. Some rights reserved. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, California, 94042, USA. Published in the United States of America by Michigan Publishing. Manufactured in the United States of America. DOI: 10.3998/mpub.10110094 ISBN 978-1-60785-495-1 (paper) ISBN 978-1-60785-496-8 (e-book) An imprint of Michigan Publishing, Maize Books serves the publishing needs of the University of Michigan community by making high-quality scholarship widely available in print and online. It represents a new model for authors seeking to share their work within and beyond the academy, offering streamlined selection, production, and distribution processes. Maize Books is intended as a complement to more formal modes of publication in a wide range of disciplinary areas. http://www.maizebooks.org Cover Illustration: "Islamic Calligraphy in the Nasta`liq style." (Credit: Wellcome Collection, https://wellcomecollection.org/works/chengwfg/, licensed under CC BY 4.0) Contents: Acknowledgments; Introduction; How to Read the Alphabet; 1 Basic Word Order and Copular Sentences; 2 Existence; 3 Plural, Palatal Harmony, and Case Endings; 4 People and Questions; 5 The Present-Future Tense; 6 Possessive -

Accordance Fonts(November 2010)

Accordance Fonts (November 2010) Contents: Installing the Fonts; OS X Font Display in Accordance and above; Font Display in Other OS X Applications; Converting to Unicode; Other Font Tips; Helena Greek Font; Additional Character Positions for Helena; Helena Greek Font; Yehudit Hebrew Font; Table of Hebrew Vowels and Other Characters; Yehudit Hebrew Font; Notes on Yehudit Keyboard; Table of Diacritical Characters (not used in the Hebrew Bible); Table of Accents (Cantillation Marks, Te‘amim), see notes at end; Notes on the Accent Table; Peshitta Syriac Font; Characters in non-standard positions; Peshitta Syriac Font; Syriac vowels, other diacriticals, and punctuation; Rosetta Transliteration Font; Character Positions for Rosetta; Sylvanus Uncial/Coptic Font; Sylvanus Uncial Font; MSS Font for Manuscript Citation; MSS Manuscript Font; Salaam Arabic Font; Characters in non-standard positions; Salaam Arabic Font; Arabic vowels and other diacriticals. OS X font files: Seven fonts are supplied for use with Accordance: Helena for Greek, Yehudit for Hebrew, Peshitta for Syriac, Rosetta for transliteration characters, Sylvanus for uncial manuscripts, and Salaam for Arabic. An additional MSS font is used for manuscript citations. Once installed, the fonts are also available to any other program such as a word processor. These fonts each include special accents and other characters which occur in various overstrike positions for different characters. -

Modern Standard Arabic 3

Modern Standard Arabic 3 “I have completed the entire Pimsleur Spanish series. I have always wanted to learn, but failed on numerous occasions. Shockingly, this method worked beautifully.” R. Rydzewsk (Burlington, NC) “The thing is, Pimsleur is PHENOMENALLY EFFICIENT at advancing your oral skills wherever you are, and you don’t have to make an appointment or be at your computer or deal with other students.” Ellen Jovin (NY, NY) “I looked at a number of different online and self-taught courses before settling on the Pimsleur courses. I could not have made a better choice.” M. Jaffe (Mesa, AZ) Travelers should always check with their nation's State Department for current advisories on local conditions before traveling abroad. Booklet Design: Maia Kennedy. © and ℗ Recorded Program 2015 Simon & Schuster, Inc. © Reading Booklet 2015 Simon & Schuster, Inc. Pimsleur® is an imprint of Simon & Schuster Audio, a division of Simon & Schuster, Inc. Mfg. in USA. All rights reserved. ACKNOWLEDGMENTS. VOICES: English-Speaking Instructor: Ray Brown; Arabic-Speaking Instructor: Husam Karzoun; Female Arabic Speaker: Maya Asmar; Male Arabic Speaker: Bayhas Kana. COURSE WRITERS: Dr. Mahdi Alosh, Shannon Rossi. EDITORS: Beverly D. Heinle, Mary E. Green. REVIEWERS: Ibtisam Alama, Duha Shamaileh, Bayhas Kana. PRODUCER: Sarah H. McInnis. RECORDING ENGINEER: Peter S. Turpin. Simon & Schuster Studios, Concord, MA. Table of Contents: Introduction; The Arabic Alphabet; Reading Lessons; Arabic Alphabet Chart; Diacritical Marks; Lesson One; Lesson Two; Lesson Three; Lesson Four; Lesson Five -

Middle East-I 9 Modern and Liturgical Scripts

The Unicode® Standard Version 13.0 – Core Specification To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries. The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. © 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html. The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. — Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1. -

Reading Booklet Modern Standard Arabic 1

Modern Standard Arabic 1 Reading Booklet. Travelers should always check with their nation's State Department for current advisories on local conditions before traveling abroad. Booklet Design: Maia Kennedy. © and ℗ Recorded Program 2012 Simon & Schuster, Inc. © Reading Booklet 2012 Simon & Schuster, Inc. Pimsleur® is an imprint of Simon & Schuster Audio, a division of Simon & Schuster, Inc. Mfg. in USA. All rights reserved. ACKNOWLEDGMENTS. VOICES: English-Speaking Instructor: Ray Brown; Arabic-Speaking Instructor: Alex Tamer; Female Arabic Speaker: Gheed Murtadi; Male Arabic Speaker: Mohamad Mohamad. COURSE WRITERS: Dr. Mahdi Alosh, Marie-Pierre Grandin-Gillette. EDITORS: Shannon Rossi, Mary E. Green. REVIEWER: Ibtisam Alama. EDITOR & EXECUTIVE PRODUCER: Beverly D. Heinle. PRODUCER: Sarah H. McInnis. RECORDING ENGINEERS: Peter S. Turpin, Kelly Saux. Simon & Schuster Studios, Concord, MA. Table of Contents: Introduction; The Arabic Alphabet; Reading Lessons; Arabic Alphabet Chart; Diacritical Marks; Lesson One; Lesson Two; Lesson Three; Lesson Four; Lesson Five; Lesson Six; Lesson Seven; Lesson Eight; Lesson Nine; Lesson Ten; Lesson Eleven; Lesson Twelve; Lesson Thirteen; Lesson Fourteen; Lesson Fifteen; Lesson Sixteen; Lesson Seventeen; Lesson Eighteen; Lesson Nineteen; Lesson Twenty (with diacritics); Lesson Twenty (without diacritics). Introduction: Modern Standard Arabic (MSA), also known as Literary or Standard Arabic, is the official language of an estimated 320 million people in the 22 Arab countries represented in the Arab League. -

COLABA: Arabic Dialect Annotation and Processing

COLABA: Arabic Dialect Annotation and Processing. Mona Diab, Nizar Habash, Owen Rambow, Mohamed Altantawy, Yassine Benajiba. Center for Computational Learning Systems, Columbia University, 475 Riverside Drive, Suite 850, New York, NY 10115 {mdiab,habash,rambow,mtantawy,ybenajiba}@ccls.columbia.edu Abstract: In this paper, we describe COLABA, a large effort to create resources and processing tools for Dialectal Arabic Blogs. We describe the objectives of the project, the process flow and the interaction between the different components. We briefly describe the manual annotation effort and the resources created. Finally, we sketch how these resources and tools are put together to create DIRA, a term-expansion tool for information retrieval over dialectal Arabic collections using Modern Standard Arabic queries. 1. Introduction: The Arabic language is a collection of historically related variants. Arabic dialects, collectively henceforth Dialectal Arabic (DA), are the day to day vernaculars spoken in the Arab world. They live side by side with Modern Standard Arabic (MSA). As spoken varieties of Arabic, they differ from MSA on all levels of linguistic representation, from phonology, morphology and lexicon to syntax, semantics, … these genres. In fact, applying NLP tools designed for MSA directly to DA yields significantly lower performance, making it imperative to direct the research to building resources and dedicated tools for DA processing. DA lacks large amounts of consistent data due to two factors: a lack of orthographic standards for the dialects, and a lack of overall Arabic content on the web, let alone DA content. These lead to a severe deficiency in the availability of computational annotations for DA data. -

English and Arabic Speech Translation

Normalization for Automated Metrics: English and Arabic Speech Translation. Sherri Condon*, Gregory A. Sanders†, Dan Parvaz*, Alan Rubenstein*, Christy Doran*, John Aberdeen*, and Beatrice Oshika*. *The MITRE Corporation, 7525 Colshire Drive, McLean, Virginia 22102. †National Institute of Standards and Technology, 100 Bureau Drive, Stop 8940, Gaithersburg, Maryland 20899–8940. {scondon, dparvaz, Rubenstein, cdoran, aberdeen, bea}@mitre.org / [email protected] Abstract: The Defense Advanced Research Projects Agency (DARPA) Spoken Language Communication and Translation System for Tactical Use (TRANSTAC) program has experimented with applying automated metrics to speech translation dialogues. For translations into English, BLEU, TER, and METEOR scores correlate well with human judgments, but scores for translation into Arabic correlate with human judgments less strongly. This paper provides evidence to support the hypothesis that automated measures of Arabic are lower due to variation and inflection in Arabic by demonstrating that normalization operations improve correlation … …eral different protocols and offline evaluations in which the systems process audio recordings and transcripts of interactions. Details of the TRANSTAC evaluation methods are described in Weiss et al. (2008), Sanders et al. (2008) and Condon et al. (2008). Because the inputs in the offline evaluation are the same for each system, we can analyze translations using automated metrics. Measures such as BiLingual Evaluation Understudy (BLEU) (Papineni et al., 2002), Translation Edit Rate (TER) (Snover et al., 2006), and Metric for Evaluation of Translation with Explicit word Ordering (METEOR) (Banerjee and Lavie, 2005) have been developed and widely used for translations of text and broadcast material, which have very different properties than dialog. -

Arabic Alphabet 1 Arabic Alphabet

Arabic alphabet. Arabic abjad. Type: Abjad. Languages: Arabic. Time period: 400 to the present. Parent systems: Proto-Sinaitic • Phoenician • Aramaic • Syriac • Nabataean • Arabic abjad. Child systems: N'Ko alphabet. ISO 15924: Arab, 160. Direction: Right-to-left. Unicode alias: Arabic. Unicode range: U+0600 to U+06FF, U+0750 to U+077F, U+08A0 to U+08FF, U+FB50 to U+FDFF, U+FE70 to U+FEFF, U+1EE00 to U+1EEFF. The Arabic alphabet of the Arabic script: ﻍ ﻉ ﻅ ﻁ ﺽ ﺹ ﺵ ﺱ ﺯ ﺭ ﺫ ﺩ ﺥ ﺡ ﺝ ﺙ ﺕ ﺏ ﺍ ﻱ ﻭ ﻩ ﻥ ﻡ ﻝ ﻙ ﻕ ﻑ • history • diacritics • hamza • numerals • numeration. The Arabic alphabet (Arabic: ﺃَﺑْﺠَﺪِﻳَّﺔ ﻋَﺮَﺑِﻴَّﺔ ’abjadiyyah ‘arabiyyah) or Arabic abjad is the Arabic script as it is codified for writing the Arabic language. It is written from right to left, in a cursive style, and includes 28 letters. Because letters usually stand for consonants, it is classified as an abjad. Consonants: The basic Arabic alphabet contains 28 letters. Adaptations of the Arabic script for other languages added and removed some letters; scripts such as Persian, Ottoman, Sindhi, Urdu, Malay, Pashto, and Arabi Malayalam have additional letters, shown below. There are no distinct upper and lower case letter forms. Many letters look similar but are distinguished from one another by dots (’i‘jām) above or below their central part, called rasm. These dots are an integral part of a letter, since they distinguish between letters that represent different sounds. -
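The Unicode ranges listed in the excerpt above can be used to test whether a character belongs to the Arabic script. The following is a minimal Python sketch, not part of the excerpted article; the names `ARABIC_RANGES`, `is_arabic_script`, and `arabic_ratio` are hypothetical and chosen only for illustration.

```python
# Inclusive code point ranges, copied from the "Unicode range" field of the
# excerpt. The helper names below are illustrative assumptions.
ARABIC_RANGES = [
    (0x0600, 0x06FF),
    (0x0750, 0x077F),
    (0x08A0, 0x08FF),
    (0xFB50, 0xFDFF),
    (0xFE70, 0xFEFF),
    (0x1EE00, 0x1EEFF),
]


def is_arabic_script(ch: str) -> bool:
    """Return True if the single character ch falls in one of the ranges."""
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in ARABIC_RANGES)


def arabic_ratio(text: str) -> float:
    """Fraction of non-whitespace characters that fall in the listed ranges."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 0.0
    return sum(is_arabic_script(c) for c in chars) / len(chars)
```

A ratio like this is one simple way to filter mixed-script text before further processing; a real pipeline would likely also need to handle digits, punctuation, and Latin-script transliteration separately.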

MSR-4: Annotated Repertoire Tables, Non-CJK

Maximal Starting Repertoire - MSR-4 Annotated Repertoire Tables, Non-CJK. Integration Panel. Date: 2019-01-25. How to read this file: This file shows all non-CJK characters that are included in the MSR-4 with a yellow background. The set of these code points matches the repertoire specified in the XML format of the MSR. Where present, annotations on individual code points indicate some or all of the languages a code point is used for. This file lists only those Unicode blocks containing non-CJK code points included in the MSR. Code points listed in this document, which are PVALID in IDNA2008 but excluded from the MSR for various reasons are shown with pinkish annotations indicating the primary rationale for excluding the code points, together with other information about usage background, where present. Code points shown with a white background are not PVALID in IDNA2008. Repertoire corresponding to the CJK Unified Ideographs: Main (4E00-9FFF), Extension-A (3400-4DBF), Extension B (20000-2A6DF), and Hangul Syllables (AC00-D7A3) are included in separate files. For links to these files see "Maximal Starting Repertoire - MSR-4: Overview and Rationale". How the repertoire was chosen: This file only provides a brief categorization of code points that are PVALID in IDNA2008 but excluded from the MSR. For a complete discussion of the principles and guidelines followed by the Integration Panel in creating the MSR, as well as links to the other files, please see “Maximal Starting Repertoire - MSR-4: Overview and Rationale”. Brief description of exclusion -

Arabic Diacritics 1 Arabic Diacritics

Arabic diacritics. Arabic alphabet: ﻱ ﻭ ﻩ ﻥ ﻡ ﻝ ﻙ ﻕ ﻑ ﻍ ﻉ ﻅ ﻁ ﺽ ﺹ ﺵ ﺱ ﺯ ﺭ ﺫ ﺩ ﺥ ﺡ ﺝ ﺙ ﺕ ﺏ ﺍ Arabic script • History • Transliteration • Diacritics • Hamza • Numerals • Numeration. The Arabic script has numerous diacritics, including i'jam 〈ﺇِﻋْﺠَﺎﻡ〉 (i‘jām, consonant pointing), and tashkil 〈ﺗَﺸْﻜِﻴﻞ〉 (tashkīl, supplementary diacritics). The latter include the ḥarakāt 〈ﺣَﺮَﻛَﺎﺕ〉 (vowel marks; singular: ḥarakah 〈ﺣَﺮَﻛَﺔ〉). The Arabic script is an impure abjad, where short consonants and long vowels are represented by letters but short vowels and consonant length are not generally indicated in writing. Tashkīl is optional to represent missing vowels and consonant length. Modern Arabic is nearly always written with consonant pointing, but occasionally unpointed texts are still seen. Early texts such as the Qur'an were initially written without pointing, and pointing was added later to determine the expected readings and interpretations. Tashkil (marks used as phonetic guides): The literal meaning of tashkīl is 'forming'. As the normal Arabic text does not provide enough information about the correct pronunciation, the main purpose of tashkīl (and ḥarakāt) is to provide a phonetic guide or a phonetic aid; i.e. show the correct pronunciation. It serves the same purpose as furigana (also called "ruby") in Japanese or pinyin or zhuyin in Mandarin Chinese for children who are learning to read or foreign learners. The bulk of Arabic script is written without ḥarakāt (or short vowels). However, they are commonly used in some religious texts that demand strict adherence to pronunciation rules such as Qur'an 〈ﺍﻟْﻘُﺮْﺁﻥ〉 (al-Qur’ān). It is not … (al-ḥadīth; plural: aḥādīth) as well.
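Because tashkīl marks are optional and sit on top of the base letters, they can be separated from the letters mechanically. The sketch below is an illustration only and is not taken from any of the listed publications: it assumes the marks of interest are the eight combining characters U+064B to U+0652 (tanwīn forms, fatḥa, ḍamma, kasra, shadda, sukūn), and the function names are hypothetical. Other marks, such as the superscript alef, are deliberately left out.

```python
# Assumed tashkil set: U+064B..U+0652 (fathatan through sukun). This is a
# simplification; Unicode defines further Arabic combining marks.
TASHKIL = {chr(cp) for cp in range(0x064B, 0x0653)}


def strip_tashkil(text: str) -> str:
    """Remove the assumed tashkil marks, leaving the bare letters."""
    return "".join(ch for ch in text if ch not in TASHKIL)


def letters_with_marks(text: str) -> list[tuple[str, str]]:
    """Pair each base character with the marks that directly follow it.

    A mark that appears before any base character is dropped.
    """
    pairs: list[tuple[str, str]] = []
    for ch in text:
        if ch in TASHKIL:
            if pairs:
                base, marks = pairs[-1]
                pairs[-1] = (base, marks + ch)
        else:
            pairs.append((ch, ""))
    return pairs
```

Pairs of this form (bare letter as input, attached marks as label) are one common way to frame diacritization as a per-character labeling task, since the undiacritized text can be recovered with `strip_tashkil` and the labels with `letters_with_marks`.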