English and Arabic Speech Translation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Alif and Hamza: Alif Is One of the Simplest Letters of the Alphabet

'Alif and hamza. 'Alif (ﺍ) is one of the simplest letters of the alphabet. Its isolated form is simply a vertical stroke, written from top to bottom. In its final position it is written as the same vertical stroke, but joined at the base to the preceding letter. Because of this connecting line – and this is very important – it is written from bottom to top instead of top to bottom. Practise these to get the feel of the direction of the stroke. The letter 'alif is one of a number of non-connecting letters. This means that it is never connected to the letter that comes after it. Non-connecting letters therefore have no initial or medial forms. They can appear in only two ways: isolated or final, meaning connected to the preceding letter.

Reminder about pronunciation. The letter 'alif represents the long vowel aa. Usually this vowel sounds like a lengthened version of the a in pat. In some positions, however (we will explain this later), it sounds more like the a in father. One of the most important functions of 'alif is not as an independent sound but as the carrier, or a 'bearer', of another letter: hamza (ﺀ). You can look back at what we said about hamza. Later we will discuss hamza in more detail. Here we will go through one of the most common uses of hamza: its combination with 'alif at the beginning of a word. One of the rules of the Arabic language is that no word can begin with a vowel. Many Arabic words may sound to the beginner as though they start with a vowel, but in fact they begin with a glottal stop: that little catch in the voice that is represented by hamza.

BLEU Might Be Guilty but References Are Not Innocent

BLEU might be Guilty but References are not Innocent. Markus Freitag, David Grangier, Isaac Caswell. Google Research. ffreitag,grangier,[email protected]

Abstract: The quality of automatic metrics for machine translation has been increasingly called into question, especially for high-quality systems. This paper demonstrates that, while choice of metric is important, the nature of the references is also critical. We study different methods to collect references and compare their value in automated evaluation by reporting correlation with human evaluation for a variety of systems and metrics. Motivated by the finding that typical references exhibit poor diversity, concentrating around translationese language, we develop a paraphrasing task for linguists to perform on existing reference translations, which counteracts this bias. Our method yields higher correlation with human judgment not only for the submissions of WMT 2019 English→German,

… past, especially when comparing rule-based and statistical systems (Bojar et al., 2016b; Koehn and Monz, 2006; Callison-Burch et al., 2006). Automated evaluations are however of crucial importance, especially for system development. Most decisions for architecture selection, hyperparameter search and data filtering rely on automated evaluation at a pace and scale that would not be sustainable with human evaluations. Automated evaluation (Koehn, 2010; Papineni et al., 2002) typically relies on two crucial ingredients: a metric and a reference translation. Metrics generally measure the quality of a translation by assessing the overlap between the system output and the reference translation. Different overlap metrics have been proposed, aiming to improve correlation between human and automated evaluations.
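To make "overlap between the system output and the reference translation" concrete, here is a minimal sketch of clipped n-gram precision, the building block that BLEU combines over several n-gram orders. It is not full BLEU (there is no brevity penalty and no averaging across orders), the function names are illustrative, and whitespace tokenization is an assumption. The two sentences are sample translations quoted in the evaluation slides excerpt elsewhere on this page.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(hypothesis, reference, n):
    """Share of hypothesis n-grams that also occur in the reference.

    Each hypothesis n-gram is credited at most as many times as it
    appears in the reference ("clipping").
    """
    hyp = ngrams(hypothesis.split(), n)  # assumption: whitespace tokenization
    ref = ngrams(reference.split(), n)
    total = sum(hyp.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in hyp.items())
    return matched / total


reference = "Israel is responsible for the airport's security"
hypothesis = "Israel is in charge of the security at this airport"

print(clipped_precision(hypothesis, reference, 1))  # unigram overlap
print(clipped_precision(hypothesis, reference, 2))  # bigram overlap
```

Both sentences are acceptable renderings of the same source, yet only 4 of the 10 hypothesis unigrams and 1 of its 9 bigrams appear in the reference, which is one way to see why the choice of reference matters as much as the choice of metric.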

Word Stress and Vowel Neutralization in Modern Standard Arabic

Word Stress and Vowel Neutralization in Modern Standard Arabic. Jack Halpern (春遍雀來). The CJK Dictionary Institute (日中韓辭典研究所), 34-14, 2-chome, Tohoku, Niiza-shi, Saitama 352-0001, Japan. [email protected]

Abstract: Word stress in Modern Standard Arabic is of great importance to language learners, while precise stress rules can help enhance Arabic speech technology applications. Though Arabic word stress and vowel neutralization rules have been the object of various studies, the literature is sometimes inaccurate or contradictory. Most Arabic grammar books give stress rules that are inadequate or incomplete, while vowel neutralization is hardly mentioned. The aim of this paper is to present stress and neutralization rules that are both linguistically accurate and pedagogically useful based on how spoken MSA is actually pronounced.

1 Introduction. Word stress in both Modern Standard Arabic (MSA) and the dialects is non-phonemic. … rules differ somewhat from those used in liturgical Arabic. Arabic word stress and vowel neutralization rules have been the object of various studies, such as Janssens (1972), Mitchell (1990) and Ryding (2005). Though some grammar books offer stress rules that appear short and simple, upon careful examination they turn out to be incomplete, ambiguous or inaccurate. Moreover, the linguistic literature often contains inaccuracies, partially because little or no distinction is made between MSA and liturgical Arabic, or because the rules are based on Egyptian-accented MSA (Mitchell, 1990), which differs from standard MSA in important ways. Arabic stress and neutralization rules are worthy of serious investigation. Other than being of

Evaluation of 2-Way Iraqi Arabic–English Speech Translation Systems Using Automated Metrics

Mach Translat (2012) 26:159–176. DOI 10.1007/s10590-011-9105-x. Evaluation of 2-way Iraqi Arabic–English speech translation systems using automated metrics. Sherri Condon · Mark Arehart · Dan Parvaz · Gregory Sanders · Christy Doran · John Aberdeen. Received: 12 July 2010 / Accepted: 9 August 2011 / Published online: 22 September 2011. © Springer Science+Business Media B.V. 2011.

Abstract: The Defense Advanced Research Projects Agency (DARPA) Spoken Language Communication and Translation System for Tactical Use (TRANSTAC) program (http://1.usa.gov/transtac) faced many challenges in applying automated measures of translation quality to Iraqi Arabic–English speech translation dialogues. Features of speech data in general and of Iraqi Arabic data in particular undermine basic assumptions of automated measures that depend on matching system outputs to reference translations. These features are described along with the challenges they present for evaluating machine translation quality using automated metrics. We show that scores for translation into Iraqi Arabic exhibit higher correlations with human judgments when they are computed from normalized system outputs and reference translations. Orthographic normalization, lexical normalization, and operations involving light stemming resulted in higher correlations with human judgments.

Approved for Public Release: 11-0118. Distribution Unlimited. The views expressed are those of the author and do not reflect the official policy or position of the Department of Defense or the U.S. Government. Some of the material in this article was originally presented at the Language Resources and Evaluation Conference (LREC) 2008 in Marrakesh, Morocco and at the 2009 MT Summit XII in Ottawa, Canada. S. Condon (B) · M.
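The excerpt does not say which normalization rules the authors applied, so the following is only a sketch of what orthographic normalization of Arabic text commonly looks like before scoring: short-vowel diacritics are stripped, the tatweel (elongation) character is dropped, and hamza-bearing forms of 'alif are folded into bare 'alif. The function name and the exact rule set are assumptions for illustration, not the article's procedure.

```python
import re
import unicodedata

# Assumed rule set (not taken from the article):
# U+064B..U+0652 are the tanwin, short-vowel, shadda and sukun marks;
# U+0670 is the superscript (dagger) alif.
_DIACRITICS = re.compile("[\u064B-\u0652\u0670]")
_TATWEEL = "\u0640"  # elongation character, purely typographic
_ALIF_VARIANTS = str.maketrans({
    "\u0622": "\u0627",  # alif with madda above -> bare alif
    "\u0623": "\u0627",  # alif with hamza above -> bare alif
    "\u0625": "\u0627",  # alif with hamza below -> bare alif
})


def normalize_arabic(text):
    """Apply a simple orthographic normalization to Arabic text."""
    # Compose first so that alif followed by a combining hamza becomes
    # the single precomposed code point handled by the table above.
    text = unicodedata.normalize("NFC", text)
    text = _DIACRITICS.sub("", text)
    text = text.replace(_TATWEEL, "")
    return text.translate(_ALIF_VARIANTS)


# A fully vowelled spelling and a bare spelling of the same word
# compare equal after normalization.
vowelled = "\u0623\u064E\u062D\u0652\u0645\u064E\u062F"
bare = "\u0627\u062D\u0645\u062F"
print(normalize_arabic(vowelled) == bare)
```

Applying the same function to both system output and reference before scoring removes spelling variation that an overlap metric would otherwise count as a mismatch.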

A Visualisation Tool for Inspecting and Evaluating Metric Scores of Machine Translation Output

Vis-Eval Metric Viewer: A Visualisation Tool for Inspecting and Evaluating Metric Scores of Machine Translation Output. David Steele and Lucia Specia. Department of Computer Science, University of Sheffield, Sheffield, UK. dbsteele1,[email protected]

Abstract: Machine Translation systems are usually evaluated and compared using automated evaluation metrics such as BLEU and METEOR to score the generated translations against human translations. However, the interaction with the output from the metrics is relatively limited and results are commonly a single score along with a few additional statistics. Whilst this may be enough for system comparison it does not provide much useful feedback or a means for inspecting translations and their respective scores. Vis-Eval Metric Viewer (VEMV) is a tool designed to provide visualisation of multiple evaluation scores so they can be easily interpreted by a user. VEMV takes in the source, reference, and hypothesis files as parameters, and scores the hypotheses using several popular evaluation metrics simultaneously.

…cised for providing scores that can be non-intuitive and uninformative, especially at the sentence level (Zhang et al., 2004; Song et al., 2013; Babych, 2014). Additionally, scores across different metrics can be inconsistent with each other. This inconsistency can be an indicator of linguistic properties of the translations which should be further analysed. However, multiple metrics are not always used and any discrepancies among them tend to be ignored. Vis-Eval Metric Viewer (VEMV) was developed as a tool bearing in mind the aforementioned issues. It enables rapid evaluation of MT output, currently employing up to eight popular metrics. Results can be easily inspected (using a typical web browser) especially at the segment level, with each sentence (source, reference, and hypothesis)

Why Word Error Rate Is Not a Good Metric for Speech Recognizer Training for the Speech Translation Task?

WHY WORD ERROR RATE IS NOT A GOOD METRIC FOR SPEECH RECOGNIZER TRAINING FOR THE SPEECH TRANSLATION TASK? Xiaodong He, Li Deng, and Alex Acero. Microsoft Research, One Microsoft Way, Redmond, WA 98052, USA.

ABSTRACT: Speech translation (ST) is an enabling technology for cross-lingual oral communication. A ST system consists of two major components: an automatic speech recognizer (ASR) and a machine translator (MT). Nowadays, most ASR systems are trained and tuned by minimizing word error rate (WER). However, WER counts word errors at the surface level. It does not consider the contextual and syntactic roles of a word, which are often critical for MT. In the end-to-end ST scenarios, whether WER is a good metric for the ASR component of the full ST system is an open issue and lacks systematic studies. In this paper, we report our

… ASR, where the widely used metric is word error rate (WER), the translation accuracy is usually measured by the quantities including BLEU (Bi-Lingual Evaluation Understudy), NIST-score, and Translation Edit Rate (TER) [12][14]. BLEU measures the n-gram matches between the translation hypothesis and the reference(s). In [1][2], it was reported that translation accuracy degrades 8 to 10 BLEU points when the ASR output was used to replace the verbatim ASR transcript (i.e., assuming no ASR error). On the other hand, although WER is widely accepted as the de facto metric for ASR, it only measures word errors at the surface level. It takes no consideration of the contextual and syntactic roles of a word.
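The remark that WER "counts word errors at the surface level" can be illustrated with a short sketch. The function below computes WER as the word-level edit distance (substitutions, insertions, deletions) divided by the reference length; the function name, the whitespace tokenization, and the example sentences are assumptions made for illustration and do not come from the paper.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()  # assumption: whitespace tokenization
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


reference = "do not open the door"
harmless = "do not open a door"  # one substitution, meaning preserved
harmful = "do open the door"     # one deletion, meaning reversed

print(word_error_rate(reference, harmless))
print(word_error_rate(reference, harmful))
```

Both hypotheses contain exactly one word error out of five reference words and therefore receive the same WER, although only the second would mislead a downstream translator. That is the kind of gap between surface errors and their effect on translation that the excerpt describes.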

Machine Translation Evaluation

Machine Translation Evaluation. Sara Stymne. Partly based on Philipp Koehn's slides for chapter 8.

Why Evaluation? How good is a given machine translation system? Which one is the best system for our purpose? How much did we improve our system? How can we tune our system to become better? Hard problem, since many different translations acceptable → semantic equivalence / similarity.

Ten Translations of a Chinese Sentence (a typical example from the 2001 NIST evaluation set): Israeli officials are responsible for airport security. Israel is in charge of the security at this airport. The security work for this airport is the responsibility of the Israel government. Israeli side was in charge of the security of this airport. Israel is responsible for the airport's security. Israel is responsible for safety work at this airport. Israel presides over the security of the airport. Israel took charge of the airport security. The safety of this airport is taken charge of by Israel. This airport's security is the responsibility of the Israeli security officials.

Which translation is best? Source: Färjetransporterna har minskat med 20,3 procent i år. Gloss: The-ferry-transports have decreased by 20.3 percent in year. Ref: Ferry transports are down by 20.3% in 2008. Sys1: The ferry transports has reduced by 20,3% this year. Sys2: This year, there has been a reduction of transports by ferry of 20.3 procent. Sys3: Färjetransporterna are down by 20.3% in 2003. Sys4: Ferry transports have a reduction of 20.3 percent in year. Sys5: Transports are down by 20.3% in year.

Mpub10110094.Pdf

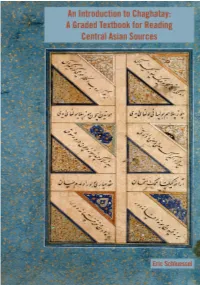

An Introduction to Chaghatay: A Graded Textbook for Reading Central Asian Sources. Eric Schluessel. Copyright © 2018 by Eric Schluessel. Some rights reserved. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, California, 94042, USA. Published in the United States of America by Michigan Publishing. Manufactured in the United States of America. DOI: 10.3998/mpub.10110094. ISBN 978-1-60785-495-1 (paper). ISBN 978-1-60785-496-8 (e-book).

An imprint of Michigan Publishing, Maize Books serves the publishing needs of the University of Michigan community by making high-quality scholarship widely available in print and online. It represents a new model for authors seeking to share their work within and beyond the academy, offering streamlined selection, production, and distribution processes. Maize Books is intended as a complement to more formal modes of publication in a wide range of disciplinary areas. http://www.maizebooks.org

Cover Illustration: "Islamic Calligraphy in the Nasta`liq style." (Credit: Wellcome Collection, https://wellcomecollection.org/works/chengwfg/, licensed under CC BY 4.0)

Contents: Acknowledgments; Introduction; How to Read the Alphabet; 1 Basic Word Order and Copular Sentences; 2 Existence; 3 Plural, Palatal Harmony, and Case Endings; 4 People and Questions; 5 The Present-Future Tense; 6 Possessive

Accordance Fonts (November 2010)

Accordance Fonts (November 2010)

Contents: Installing the Fonts; OS X Font Display in Accordance and above; Font Display in Other OS X Applications; Converting to Unicode; Other Font Tips; Helena Greek Font; Additional Character Positions for Helena; Helena Greek Font; Yehudit Hebrew Font; Table of Hebrew Vowels and Other Characters; Yehudit Hebrew Font; Notes on Yehudit Keyboard; Table of Diacritical Characters (not used in the Hebrew Bible); Table of Accents (Cantillation Marks, Te‘amim), see notes at end; Notes on the Accent Table; Peshitta Syriac Font; Characters in non-standard positions; Peshitta Syriac Font; Syriac vowels, other diacriticals, and punctuation; Rosetta Transliteration Font; Character Positions for Rosetta; Sylvanus Uncial/Coptic Font; Sylvanus Uncial Font; MSS Font for Manuscript Citation; MSS Manuscript Font; Salaam Arabic Font; Characters in non-standard positions; Salaam Arabic Font; Arabic vowels and other diacriticals.

OS X font files. Seven fonts are supplied for use with Accordance: Helena for Greek, Yehudit for Hebrew, Peshitta for Syriac, Rosetta for transliteration characters, Sylvanus for uncial manuscripts, and Salaam for Arabic. An additional MSS font is used for manuscript citations. Once installed, the fonts are also available to any other program such as a word processor. These fonts each include special accents and other characters which occur in various overstrike positions for different characters.

Download 1 File

Strokes and Hairlines: Elegant Writing and its Place in Muslim Book Culture. Adam Gacek. An Exhibition in Celebration of the 60th Anniversary of The Institute of Islamic Studies, McGill University. February 11–June 30, 2013. McLennan Library Building Lobby. Curated by Adam Gacek.

On the front cover: folio 1a from MS Rare Books and Special Collections AC156; for a description of and another illustration from the same manuscript see p.28. On the back cover: photograph of Morrice Hall from Islamic Studies Library archives. Copyright © 2013 McGill University Library. ISBN 978-1-77096-209-5.

Contents: Foreword; Introduction; Case 1a: The Qurʾan; Case 1b: Scripts and Hands; Case 2a: Calligraphers’ Diplomas; Case 2b: The Alif and Other Letterforms; Case 3a: Calligraphy and Painted Decoration; Case 3b: Writing Implements; To a Fledgling Calligrapher; A Scribe’s Lament

Arxiv:2006.14799V2 [Cs.CL] 18 May 2021 the Same User Input

Evaluation of Text Generation: A Survey. Asli Celikyilmaz, [email protected], Facebook AI Research. Elizabeth Clark, [email protected], University of Washington. Jianfeng Gao, [email protected], Microsoft Research.

Abstract: The paper surveys evaluation methods of natural language generation (NLG) systems that have been developed in the last few years. We group NLG evaluation methods into three categories: (1) human-centric evaluation metrics, (2) automatic metrics that require no training, and (3) machine-learned metrics. For each category, we discuss the progress that has been made and the challenges still being faced, with a focus on the evaluation of recently proposed NLG tasks and neural NLG models. We then present two examples for task-specific NLG evaluations for automatic text summarization and long text generation, and conclude the paper by proposing future research directions.

1. Introduction. Natural language generation (NLG), a sub-field of natural language processing (NLP), deals with building software systems that can produce coherent and readable text (Reiter & Dale, 2000a). NLG is commonly considered a general term which encompasses a wide range of tasks that take a form of input (e.g., a structured input like a dataset or a table, a natural language prompt or even an image) and output a sequence of text that is coherent and understandable by humans. Hence, the field of NLG can be applied to a broad range of NLP tasks, such as generating responses to user questions in a chatbot, translating a sentence or a document from one language into another, offering suggestions to help write a story, or generating summaries of time-intensive data analysis.

Creating Standards

Creating Standards. Unauthenticated Download Date | 6/17/19 6:48 PM. Studies in Manuscript Cultures, edited by Michael Friedrich, Harunaga Isaacson, Jörg B. Quenzer. Volume 16. Creating Standards: Interactions with Arabic Script in 12 Manuscript Cultures. Edited by Dmitry Bondarev, Alessandro Gori, Lameen Souag. ISBN 978-3-11-063498-3. e-ISBN (PDF) 978-3-11-063906-3. e-ISBN (EPUB) 978-3-11-063508-9. ISSN 2365-9696.

This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 License. For details go to http://creativecommons.org/licenses/by-nc-nd/4.0/. Library of Congress Control Number: 2019935659. Bibliographic information published by the Deutsche Nationalbibliothek: The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available on the Internet at http://dnb.dnb.de. © 2019 Dmitry Bondarev, Alessandro Gori, Lameen Souag, published by Walter de Gruyter GmbH, Berlin/Boston. Printing and binding: CPI books GmbH, Leck. www.degruyter.com

Contents: The Editors, Preface; Transliteration of Arabic and some Arabic-based Script Graphemes used in this Volume (including Persian and Malay); Dmitry Bondarev, Introduction: Orthographic Polyphony in Arabic Script; Paola Orsatti, Persian Language in Arabic Script: The Formation of the Orthographic Standard and the Different Graphic Traditions of Iran in the First Centuries of