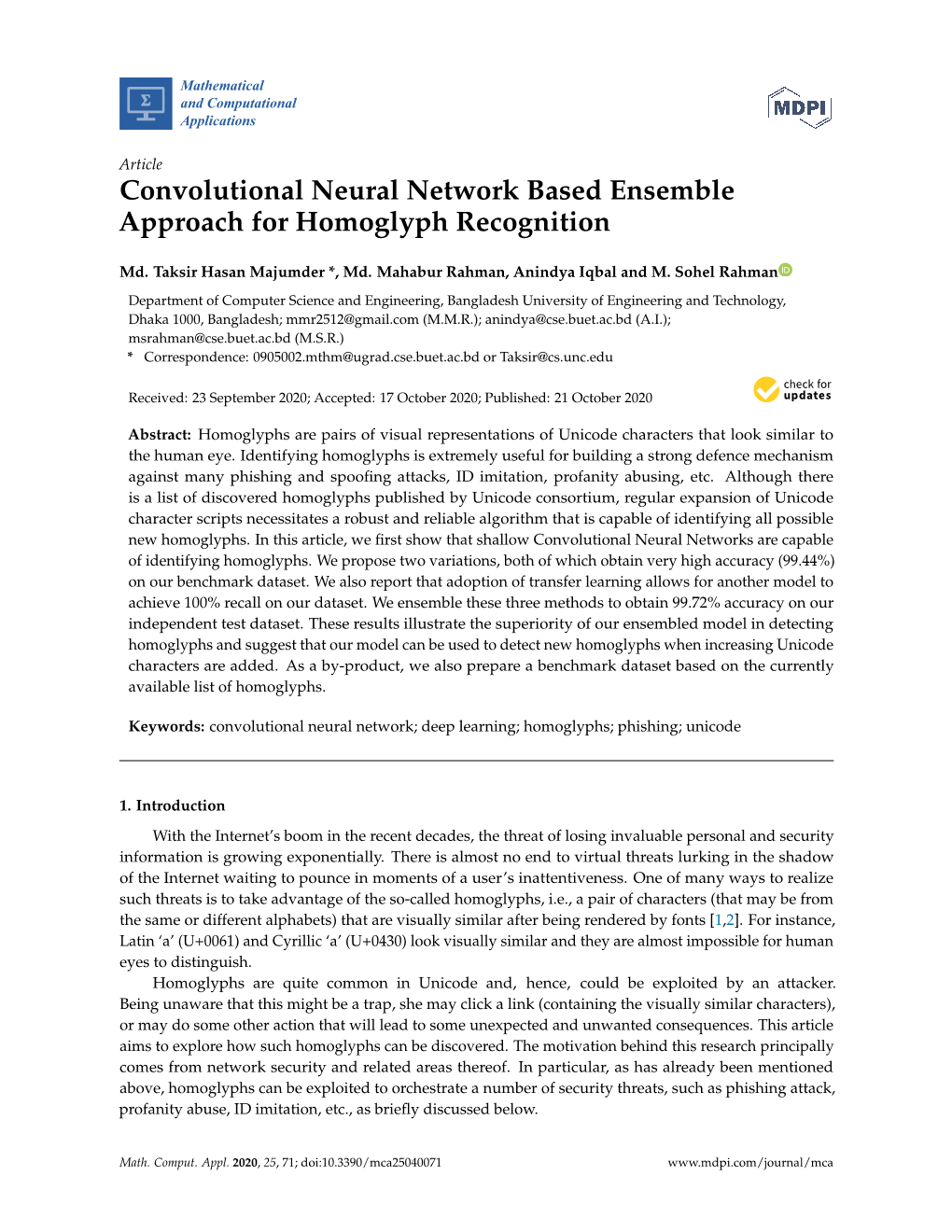

Convolutional Neural Network Based Ensemble Approach for Homoglyph Recognition

Total Pages: 16

File Type: PDF, Size: 1020Kb

Recommended publications

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive, University of Zurich, Main Library, Strickhofstrasse 39, CH-8057 Zurich, www.zora.uzh.ch Year: 2017. The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. Moran, Steven; Cysouw, Michael. DOI: https://doi.org/10.5281/zenodo.290662 Posted at the Zurich Open Repository and Archive, University of Zurich. ZORA URL: https://doi.org/10.5167/uzh-135400 Monograph. The following work is licensed under a Creative Commons Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. Preface: This text is meant as a practical guide for linguists and programmers who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies.

International Language Environments Guide for Oracle® Solaris 11.4

International Language Environments Guide for Oracle® Solaris 11.4. Part No: E61001, November 2020. Copyright © 2011, 2020, Oracle and/or its affiliates. License Restrictions Warranty/Consequential Damages Disclaimer: This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. Warranty Disclaimer: The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. Restricted Rights Notice: If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are

rtorrent-ps Documentation, Release 1.2-dev

rtorrent-ps Documentation, Release 1.2-dev. PyroScope Project, Aug 05, 2021. Getting Started. 1 Overview: 1.1 Feature Overview; 1.2 Supported Platforms; 1.3 Launching a Demo in Docker. 2 Installation Guide: 2.1 General Installation Options; 2.2 OS-Specific Installation Options; 2.3 Manual Turn-Key System Setup. 3 Setup & Configuration: 3.1 Setting up Your Terminal Emulator; 3.2 Trouble-Shooting Guide. 4 User’s Manual: 4.1 Additional Features; 4.2 Extended Canvas Explained; 4.3 Command Extensions. 5 Tips & How-Tos: 5.1 Checking Details of the Standard Configuration; 5.2 Validate Self-Signed Certs. 6 Advanced Customization: 6.1 Color Scheme Configuration; 6.2 Customizing the Display Layout. 7 Development Guide: 7.1 Running Integration Tests; 7.2 The Build Script; 7.3 Creating a Release; 7.4 Building the Debian Package

Unifoundry.com GNU Unifont Glyphs

Unifoundry.com GNU Unifont Glyphs. GNU Unifont is part of the GNU Project. This page contains the latest release of GNU Unifont, with glyphs for every printable code point in the Unicode 9.0 Basic Multilingual Plane (BMP). The BMP occupies the first 65,536 code points of the Unicode space, denoted as U+0000..U+FFFF. There is also growing coverage of the Supplemental Multilingual Plane (SMP), in the range U+010000..U+01FFFF, and of Michael Everson's ConScript Unicode Registry (CSUR). These font files are licensed under the GNU General Public License, either Version 2 or (at your option) a later version, with the exception that embedding the font in a document does not in itself constitute a violation of the GNU GPL. The full terms of the license are in LICENSE.txt. The standard font build, with and without Michael Everson's ConScript Unicode Registry (CSUR) Private Use Area (PUA) glyphs. Download in your favorite format: TrueType: The Standard Unifont TTF Download: unifont-9.0.01.ttf (12 Mbytes); Glyphs above the Unicode Basic Multilingual Plane: unifont_upper-9.0.01.ttf (1 Mbyte); Unicode Basic Multilingual Plane with CSUR PUA Glyphs: unifont_csur-9.0.01.ttf (12 Mbytes); Glyphs above the Unicode Basic Multilingual Plane with CSUR PUA Glyphs: unifont_upper_csur-9.0.01.ttf (1 Mbyte). PCF: unifont-9.0.01.pcf.gz (1 Mbyte). BDF: unifont-9.0.01.bdf.gz (1 Mbyte). Specialized versions, built by request: SBIT: Special version at the request

juhtolv-fontsamples

juhtolv-fontsamples, by Juhapekka Tolvanen. Contents: 1 Copyright and contact information; 2 Introduction; 3 How to use these PDFs; 3.1 Decompressing; 3.1.1 bzip2 and tar; 3.1.2 7zip; 3.2 Finding right PDFs; 4 How to edit these PDFs; 4.1 What each script do; 4.2 About shell environment; 4.3 How to ensure each PDF take just one page; 4.4 Programs used; 4.5 Fonts used; 4.5.1 Proportional Gothic; 4.5.2 Proportional Mincho; 4.5.3 Monospace (Gothic and Mincho); 4.5.4 Handwriting; 4.5.5 Fonts that can not be used; 5 Thanks. 1 Copyright and contact information: Author of all these files is Juhapekka Tolvanen. Author’s E-Mail address is: juhtolv (at) iki (dot) fi This publication has included material from these dictionary files in accordance with the licence provisions of the Electronic Dictionaries Research Group: • kanjd212 • kanjidic • kanjidic2.xml • kradfile • kradfile2 See these WWW-pages: • http://www.edrdg.org/ • http://www.edrdg.org/edrdg/licence.html • http://www.csse.monash.edu.au/~jwb/edict.html • http://www.csse.monash.edu.au/~jwb/kanjidic_doc.html • http://www.csse.monash.edu.au/~jwb/kanjd212_doc.html • http://www.csse.monash.edu.au/~jwb/kanjidic2/ • http://www.csse.monash.edu.au/~jwb/kradinf.html All generated PDF- and TeX-files of each kanji-character use exactly the same license as kanjidic2.xml uses; Name of that license is Creative Commons Attribution-ShareAlike Licence (V3.0).

Third Party Software Licensing Agreements RL6 6.8

Third Party Software Licensing Agreements, RL6 6.8. Updated: April 16, 2019 (www.rlsolutions.com). Contents: Open Source Licenses; Apache v2 License; Apache License; BSD License; .Net API for HL7 FHIR (fhir-net-api); ANTLR 3 C# Target; JQuery Sparkline; Microsoft Ajax Control Toolkit; Mvp.Xml; NSubstitute; Remap-istanbul

Publishing Text in Indigenous African Languages

Publishing text in indigenous African languages. A workshop paper prepared by Conrad Taylor for the BarCamp Africa UK conference in London on 7 Nov 2009. Copies downloadable from http://barcampafrica-uk.wikispaces.com/Publishing+technology, and http://www.conradiator.com 1: Background; how the problem arose. Publishing, if we take it to mean the written word made into many copies by the scribe or the printing press, has held a privileged position for hundreds of years as a vehicle for transmitting stories & ideas, information & knowledge over long distances, free from limitations of time & space. Written mass communication is so commonplace today that perhaps we forget some of its demanding preconditions: a shared language, for which there must be a shared set of written characters; plus the skill of literacy. Will text remain as privileged a mode of communication in the future? Text is a powerful medium, and the Internet has empowered it further with email, the Web and Google. But remote person-to-person communication today is more likely to be done by phone than email; radio and television [second column of the page:] – most markedly in the Americas and in Africa, where most cultures did not have a pre-existing writing system. In each European country’s imperial sphere of influence, its own language first became a lingua franca for trade; then as the great late-19th century colonial landgrab of Africa got going, colonial languages became the languages of administration, education and Christian religious proselytisation. African indigenous-language literacy: the old non-latins. How did indigenous African languages take written form? The first point to note is that literacy in Africa is very ancient. Kemetic (ancient Egyptian) scripts were probably not the first writing system in the world (Mesopotamians most likely achieved that first), but writing developed in Egypt very early, about five thousand years ago.

Rong Kit Introduction

ᰛᰀ Digital Lepcha for Windows, Mac OS X and Linux. © Sikkim Bhutia Lepcha Apex Committee - 2016. Foreword: Written language is incredibly important, as it is a fundamental way of communicating, and doing so indirectly and over time. It is a main way civilizations accrue and record their technology, educate their citizens, and keep a historical record. This “collective wisdom”, for lack of a better term, is a hallmark of many great civilizations. Writing is the physical manifestation of a spoken language. It is thought that human beings developed language c. 35,000 BCE as evidenced by cave paintings from the period of the Cro-Magnon Man (c. 50,000-30,000 BCE) which appear to express concepts concerning daily life. These images suggest a language because, in some instances, they seem to tell a story (say, of a hunting expedition in which specific events occurred) rather than being simply pictures of animals and people. Written language, however, does not emerge until its invention in Sumer, southern Mesopotamia, c. 3500-3000 BCE. This early writing was called cuneiform and consisted of making specific marks in wet clay with a reed implement. The writing system of the Egyptians was already in use before the rise of the Early Dynastic Period (c. 3150 BCE) and is thought to have developed from Mesopotamian cuneiform (though this theory is disputed) and came to be known as hieroglyphics. The phonetic writing systems of the Greeks, and later the Romans, came from Phoenicia (hence the name). The Phoenician writing system, though quite different from that of Mesopotamia, still owes its development to the Sumerians and their advances in the written word.

The Unicode Cookbook for Linguists

The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. Steven Moran, Michael Cysouw. Language Science Press. Translation and Multilingual Natural Language Processing 10. Editors: Oliver Czulo (Universität Leipzig), Silvia Hansen-Schirra (Johannes Gutenberg-Universität Mainz), Reinhard Rapp (Johannes Gutenberg-Universität Mainz). In this series: 1. Fantinuoli, Claudio & Federico Zanettin (eds.). New directions in corpus-based translation studies. 2. Hansen-Schirra, Silvia & Sambor Grucza (eds.). Eyetracking and Applied Linguistics. 3. Neumann, Stella, Oliver Čulo & Silvia Hansen-Schirra (eds.). Annotation, exploitation and evaluation of parallel corpora: TC3 I. 4. Czulo, Oliver & Silvia Hansen-Schirra (eds.). Crossroads between Contrastive Linguistics, Translation Studies and Machine Translation: TC3 II. 5. Rehm, Georg, Felix Sasaki, Daniel Stein & Andreas Witt (eds.). Language technologies for a multilingual Europe: TC3 III. 6. Menzel, Katrin, Ekaterina Lapshinova-Koltunski & Kerstin Anna Kunz (eds.). New perspectives on cohesion and coherence: Implications for translation. 7. Hansen-Schirra, Silvia, Oliver Czulo & Sascha Hofmann (eds). Empirical modelling of translation and interpreting. 8. Svoboda, Tomáš, Łucja Biel & Krzysztof Łoboda (eds.). Quality aspects in institutional translation. 9. Fox, Wendy. Can integrated titles improve the viewing experience? Investigating the impact of subtitling on the reception and enjoyment of film using eye tracking and questionnaire data. 10. Moran, Steven & Michael Cysouw. The Unicode cookbook for linguists: Managing writing systems using orthography profiles. ISSN: 2364-8899. Steven Moran & Michael Cysouw. 2018. The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles (Translation and Multilingual Natural Language Processing 10).

Getting Reasonable Chinese Characters in LaTeX Documents

Getting Reasonable Chinese Characters in LaTeX Documents. Paul E. Johnson, January 22, 2009. From the LyX wiki (http://wiki.lyx.org/LYX/XeTEX), I learned that the program xelatex from the XeTeX addon for LaTeX can replace the latex program. The xelatex program can generate pdf documents from TeX files that include Chinese and other international characters that are in the utf-8 font format. It is not just for Chinese, but that was my major purpose when I started to look into this. XeTeX is supposed to accept all unicode characters. XeTeX may accept all characters, but that does not mean they show up in the output. I was very discouraged after I experimented with the file “xetex.lyx” on the LyX wiki. While the five Chinese characters in the demonstration do work, a user quickly realizes that additional characters are almost all missing from output. I installed the SCIM input system and typed in 20 or 30 characters and about 3/4 of them were missing from the pdf output. The characters show on the screen if there is an X11 screen font for them, but the fonts selected in that example file are less comprehensive. How discouraging. And the worst part is that the missing characters show in pdf output as “blanks”, not as errors. So the author of a long document may never realize that a character is missing. That is simply unacceptable, as far as I’m concerned. Finding XeTeX discouraging, I started researching an alternative approach, the so-called CJK-LaTeX approach.

On the Design of Text Editors

On the design of text editors. Nicolas P. Rougier, Inria Bordeaux Sud-Ouest, Bordeaux, France. [email protected] arXiv:2008.06030v2 [cs.HC] 3 Sep 2020. Figure 1: GNU Emacs screenshots with hacked settings. ABSTRACT: Code editors are written by and for developers. They come with a large set of default and implicit choices in terms of layout, typography, colorization and interaction that hardly change from one editor to the other. It is not clear if these implicit choices derive from the ignorance of alternatives or if they derive from developers’ habits, reproducing what they are used to. The goal of this article is to characterize these implicit choices and to illustrate what are alternatives, without prescribing one or the other. CCS CONCEPTS: • Human-centered computing → HCI theory, concepts and models; Interaction design theory, concepts and paradigms; Text input. [second column of the page:] Atom (2014) and Sublime Text (2008), for the more recent ones. Each editor offers some specific features (e.g. modal editing, syntax colorization, code folding, plugins, etc.) that is supposed to differentiate it from the others at time of release. There exists however one common trait for virtually all of text editors: they are written by and for developers. Consequently, design is generally not the primary concern and the final product does not usually enforce best recommendations in terms of appearance, interface design or even user interaction. The most striking example is certainly the syntax colorization that seems to go against every good design principles in a majority of text editors and the motto guiding design could be summarized by “Let’s add more colors” (using regex). More generally, modern text editors comes with a large set of default

Nimsoft Server Installation and User Guide

Nimsoft Server Installation and User Guide 5.1 Contact Nimsoft For your convenience, Nimsoft provides a single site where you can access information about Nimsoft products. At http://support.nimsoft.com/, you can access the following: ■ Online and telephone contact information for technical assistance and customer services ■ Information about user communities and forums ■ Product and documentation downloads ■ Nimsoft Support policies and guidelines ■ Other helpful resources appropriate for your product Provide Feedback If you have comments or questions about Nimsoft product documentation, you can send a message to [email protected]. Legal Notices Copyright © 2011, Nimsoft Corporation Warranty The material contained in this document is provided "as is," and is subject to being changed, without notice, in future editions. Further, to the maximum extent permitted by applicable law, Nimsoft Corporation disclaims all warranties, either express or implied, with regard to this manual and any information contained herein, including but not limited to the implied warranties of merchantability and fitness for a particular purpose. Nimsoft Corporation shall not be liable for errors or for incidental or consequential damages in connection with the furnishing, use, or performance of this document or of any information contained herein. Should Nimsoft Corporation and the user have a separate written agreement with warranty terms covering the material in this document that conflict with these terms, the warranty terms in the separate agreement shall control. Technology Licenses The hardware and/or software described in this document are furnished under a license and may be used or copied only in accordance with the terms of such license. 
No part of this manual may be reproduced in any form or by any means (including electronic storage and retrieval or translation into a foreign language) without prior agreement and written consent from Nimsoft Corporation as governed by United States and international copyright laws.