Juhtolv-Fontsamples

Total pages: 16

File type: PDF, Size: 1020 Kb

Recommended publications

Musescore 2.0 Handbook

Handbook. Downloaded from musescore.org on Jun 08 2017. Released under Creative Commons Attribution-ShareAlike. GETTING STARTED; INSTALLATION; INSTALL ON WINDOWS; Install; Start MuseScore; Uninstall; Troubleshooting; External links; CREATE A NEW SCORE; Start center; Create new score; Title, composer and other information; Select template; Choose instruments or voice parts; Add staff; Add Linked Staff; Select key signature and tempo; Time signature, pickup measure (anacrusis), and number of measures; Adjustments to score after creation; Add/delete measures; Add/edit text; Change instrument set-up; Templates; User template folder; System template folder; See also; External links; INSTALL ON MACOS; Install; Uninstall; Install with Apple Remote Desktop; External links; INSTALL ON LINUX; AppImage; Step 1 - Download; Step 2 - Give execute permission; Step 3 - Run it!; Installing the AppImage (optional); Using command line options; Distribution Packages; Fedora; External links; INSTALL ON CHROMEBOOK; External links; LANGUAGE SETTINGS AND TRANSLATION UPDATES; Change language; Update translation; See also; External links; CHECKING FOR UPDATES; Automated update check; Check for update; See also; BASICS; NOTE INPUT; Basic note entry (Step-time); Step 1: Starting position; Step 2: Note input mode; Step 3: Duration of the note (or rest); Step 4: Enter pitch (or rest); Other input modes; Input devices; Mouse; Keyboard; MIDI -

Translators' Tool

The Translator’s Tool Box A Computer Primer for Translators by Jost Zetzsche Version 9, December 2010 Copyright © 2010 International Writers’ Group, LLC. All rights reserved. This document, or any part thereof, may not be reproduced or transmitted electronically or by any other means without the prior written permission of International Writers’ Group, LLC. ABBYY FineReader and PDF Transformer are copyrighted by ABBYY Software House. Acrobat, Acrobat Reader, Dreamweaver, FrameMaker, HomeSite, InDesign, Illustrator, PageMaker, Photoshop, and RoboHelp are registered trademarks of Adobe Systems Inc. Acrocheck is copyrighted by acrolinx GmbH. Acronis True Image is a trademark of Acronis, Inc. Across is a trademark of Nero AG. AllChars is copyrighted by Jeroen Laarhoven. ApSIC Xbench and Comparator are copyrighted by ApSIC S.L. Araxis Merge is copyrighted by Araxis Ltd. ASAP Utilities is copyrighted by eGate Internet Solutions. Authoring Memory Tool is copyrighted by Sajan. Belarc Advisor is a trademark of Belarc, Inc. Catalyst and Publisher are trademarks of Alchemy Software Development Ltd. ClipMate is a trademark of Thornsoft Development. ColourProof, ColourTagger, and QA Solution are copyrighted by Yamagata Europe. Complete Word Count is copyrighted by Shauna Kelly. CopyFlow is a trademark of North Atlantic Publishing Systems, Inc. CrossCheck is copyrighted by Global Databases, Ltd. Déjà Vu is a trademark of ATRIL Language Engineering, S.L. Docucom PDF Driver is copyrighted by Zeon Corporation. dtSearch is a trademark of dtSearch Corp. EasyCleaner is a trademark of ToniArts. ExamDiff Pro is a trademark of Prestosoft. EmEditor is copyrighted by Emura Software inc. Error Spy is copyrighted by D.O.G. GmbH. FileHippo is copyrighted by FileHippo.com. -

Man'yogana.Pdf (574.0Kb)

Bulletin of the School of Oriental and African Studies, Volume 64, Issue 01, February 2001, pp. 59-73. http://journals.cambridge.org/BSO DOI: 10.1017/S0041977X01000040, Published online: 18 April 2001. Link to this article: http://journals.cambridge.org/abstract_S0041977X01000040 How to cite this article: John R. BENTLEY (2001). The origin of man'yogana. Bulletin of the School of Oriental and African Studies, 64, pp. 59-73. doi:10.1017/S0041977X01000040 The origin of man'yōgana. John R. Bentley, Northern Illinois University. 1. Introduction The origin of man'yōgana, the phonetic writing system used by the Japanese who originally had no script, is shrouded in mystery and myth. There is even a tradition that prior to the importation of Chinese script, the Japanese had a native script of their own, known as jindai moji (神代文字, age of the gods script). Christopher Seeley (1991: 3) suggests that by the late thirteenth century, Shaku nihongi, a compilation of various earlier commentaries on Nihon shoki (Japan's first official historical record, 720 C.E.), circulated the idea that Yamato had written script from the age of the gods, a mythical period when the deity Susanoo was believed by the Japanese court to have composed Japan's first poem, and the Sun goddess declared her son would rule the land below. -

ACS – the Archival Cytometry Standard

http://flowcyt.sf.net/acs/latest.pdf ACS – the Archival Cytometry Standard Archival Cytometry Standard ACS International Society for Advancement of Cytometry Candidate Recommendation DRAFT Document Status The Archival Cytometry Standard (ACS) has undergone several revisions since its initial development in June 2007. The current proposal is an ISAC Candidate Recommendation Draft. It is assumed, however not guaranteed, that significant features and design aspects will remain unchanged for the final version of the Recommendation. This specification has been formally tested to comply with the W3C XML schema version 1.0 specification but no position is taken with respect to whether a particular software implementing this specification performs according to medical or other valid regulations. The work may be used under the terms of the Creative Commons Attribution-ShareAlike 3.0 Unported license. You are free to share (copy, distribute and transmit), and adapt the work under the conditions specified at http://creativecommons.org/licenses/by-sa/3.0/legalcode. Disclaimer of Liability The International Society for Advancement of Cytometry (ISAC) disclaims liability for any injury, harm, or other damage of any nature whatsoever, to persons or property, whether direct, indirect, consequential or compensatory, directly or indirectly resulting from publication, use of, or reliance on this Specification, and users of this Specification, as a condition of use, forever release ISAC from such liability and waive all claims against ISAC that may in any manner arise out of such liability. ISAC further disclaims all warranties, whether express, implied or statutory, and makes no assurances as to the accuracy or completeness of any information published in the Specification. -

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive University of Zurich Main Library Strickhofstrasse 39 CH-8057 Zurich www.zora.uzh.ch Year: 2017 The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles Moran, Steven; Cysouw, Michael DOI: https://doi.org/10.5281/zenodo.290662 Posted at the Zurich Open Repository and Archive, University of Zurich ZORA URL: https://doi.org/10.5167/uzh-135400 Monograph The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. DOI: https://doi.org/10.5281/zenodo.290662 The Unicode Cookbook for Linguists Managing writing systems using orthography profiles Steven Moran & Michael Cysouw Preface This text is meant as a practical guide for linguists and programmers who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies. -

Archive Utility Download

archive utility download mac Question: Q: finding archive utility app and uninstalling winzip? i have zip files that now default to WINZIP which i downloaded for a demo and never uninstalled. can someone remind me how to uninstall it again? ALSO, i am trying to set ZIPPED files to open with ARCHIVE UTILITY but i don't see anywhere to set this with the OPEN WITH dialogs. i checked in the UTILITY FOLDER in applications but it is not there. i forget if this is something obvious or what. Mac Pro, macOS 10.13. Posted on Jul 15, 2020 11:46 AM. Anyone who has purchased the product from the WinZip store* within 30 days can get a refund of the purchase price. If you want to arrange a refund, please contact WinZip Service or mail a request to: Mansfield, CT 06268-0540. Please include your name, order number, and postal address in your request. To qualify for a refund, please remove the software from any computers on which you've installed it. Also, please destroy the CD, if you received one. The best way to remove WinZip Mac from your computer is as follows: Click the WinZip icon on the dock Click the WinZip drop down menu and then the Uninstall menu item. Then contact WinZip Service, state that you have removed the software from any computers on which you've installed it (and have destroyed the CD if applicable), and also state that you will no longer be using the software. * Note: If you have purchased WinZip through the Apple Store and believe you are in need of a refund, you must contact the Apple Store. -

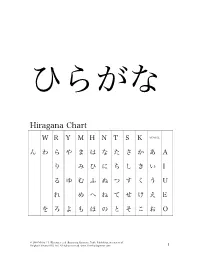

Hiragana Chart

ひらがな Hiragana Chart

| | W | R | Y | M | H | N | T | S | K | VOWEL | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ん | わ | ら | や | ま | は | な | た | さ | か | あ | A |
| | | り | | み | ひ | に | ち | し | き | い | I |
| | | る | ゆ | む | ふ | ぬ | つ | す | く | う | U |
| | | れ | | め | へ | ね | て | せ | け | え | E |
| | を | ろ | よ | も | ほ | の | と | そ | こ | お | O |

© 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com. Beginning Japanese 名前: ________________________ 1-1 Hiragana Activity Book 日付: ___月 ___日 一、 Practice: あいうえお かきくけこ がぎぐげご O E U I A お え う い あ あ お え う い あ お う あ え い あ お え う い お う い あ お え あ KO KE KU KI KA こ け く き か か こ け く き か こ け く く き か か こ き き か こ こ け か け く く き き こ け か GO GE GU GI GA ご げ ぐ ぎ が が ご げ ぐ ぎ が ご ご げ ぐ ぐ ぎ ぎ が が ご げ ぎ が ご ご げ が げ ぐ ぐ ぎ ぎ ご げ が 二、 Fill in each blank with the correct HIRAGANA. SE N SE I KI A RA NA MA E 1. -

Pack, Encrypt, Authenticate Document Revision: 2021 05 02

PEA Pack, Encrypt, Authenticate Document revision: 2021 05 02 Author: Giorgio Tani Translation: Giorgio Tani This document refers to: PEA file format specification version 1 revision 3 (1.3); PEA file format specification version 2.0; PEA 1.01 executable implementation. The present documentation is released under GNU GFDL License. PEA executable implementation is released under GNU LGPL License; please note that all units provided by the Author are released under LGPL, while Wolfgang Ehrhardt’s crypto library units used in PEA are released under zlib/libpng License. PEA file format and PCOMPRESS specifications are hereby released under PUBLIC DOMAIN: the Author neither has, nor is aware of, any patents or pending patents relevant to this technology and does not intend to apply for any patents covering it. As far as the Author knows, PEA file format in all of its parts is free and unencumbered for all uses. Pea is on PeaZip project official site: https://peazip.github.io, https://peazip.org, and https://peazip.sourceforge.io For more information about the licenses: GNU GFDL License, see http://www.gnu.org/licenses/fdl.txt GNU LGPL License, see http://www.gnu.org/licenses/lgpl.txt Content: Section 1: PEA file format; Description; PEA 1.3 file format details; Differences between 1.3 and older revisions; PEA 2.0 file format details; PEA file format’s and implementation’s limitations; PCOMPRESS compression scheme; Algorithms used in PEA format; PEA security model; Cryptanalysis of PEA format; Data recovery from -

Latex-Test 2016-09-05

latex-test 2016-09-05 Ken Moffat 1 Introduction, or my history with LaTeX A frustrating but sometimes educational experience. It is easy to forget that TeX is at heart an old-school programming language, with a lot of additional macros added over the years, and many different options. Like all programming languages, it takes a long time to achieve any level of competence. One day in February 2014, somebody noticed that our (BLFS) build of texlive did not build all of the package (and so, anybody who began by installing the binary install-tl-unx still had programs which were not built from source). I had no experience of this [ insert profanities here ] typesetting system, and my initial attempts to try to use it found many examples which perhaps worked when they were posted, but did not work for me. Eventually, I found a few routines which gave me a little confidence that some of it worked. Getting a working version of xindy to build was fun. Eventually I came back to this, got more of it working, and eventually got it all working from source (although on one of my machines the binary version of ConTeXt failed - that CPU did not support some SSE options that the contributed binary used, but such is life and anyway we prefer to build from source!). The tests are now here to check that a new version works. Along the way, I have discovered that I really dislike much of TeX itself, and LaTeX too is a bit problematic: • The Fonts are ugly. -

KANA Response Live Organization Administration Tool Guide

This is the most recent version of this document provided by KANA Software, Inc. to Genesys, for the version of the KANA software products licensed for use with the Genesys eServices (Multimedia) products. KANA Response Live Organization Administration KANA Response Live Version 10 R2 February 2008 All contents of this documentation are the property of KANA Software, Inc. (“KANA”) (and if relevant its third party licensors) and protected by United States and international copyright laws. All Rights Reserved. © 2008 KANA Software, Inc. Terms of Use: This software and documentation are provided solely pursuant to the terms of a license agreement between the user and KANA (the “Agreement”) and any use in violation of, or not pursuant to any such Agreement shall be deemed copyright infringement and a violation of KANA's rights in the software and documentation and the user consents to KANA's obtaining of injunctive relief precluding any further such use. KANA assumes no responsibility for any damage that may occur either directly or indirectly, or any consequential damages that may result from the use of this documentation or any KANA software product except as expressly provided in the Agreement, any use hereunder is on an as-is basis, without warranty of any kind, including without limitation the warranties of merchantability, fitness for a particular purpose, and non-infringement. Use, duplication, or disclosure by licensee of any materials provided by KANA is subject to restrictions as set forth in the Agreement. Information contained in this document is subject to change without notice and does not represent a commitment on the part of KANA. -

Writing As Aesthetic in Modern and Contemporary Japanese-Language Literature

At the Intersection of Script and Literature: Writing as Aesthetic in Modern and Contemporary Japanese-language Literature Christopher J Lowy A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Washington 2021 Reading Committee: Edward Mack, Chair Davinder Bhowmik Zev Handel Jeffrey Todd Knight Program Authorized to Offer Degree: Asian Languages and Literature ©Copyright 2021 Christopher J Lowy University of Washington Abstract At the Intersection of Script and Literature: Writing as Aesthetic in Modern and Contemporary Japanese-language Literature Christopher J Lowy Chair of the Supervisory Committee: Edward Mack Department of Asian Languages and Literature This dissertation examines the dynamic relationship between written language and literary fiction in modern and contemporary Japanese-language literature. I analyze how script and narration come together to function as a site of expression, and how they connect to questions of visuality, textuality, and materiality. Informed by work from the field of textual humanities, my project brings together new philological approaches to visual aspects of text in literature written in the Japanese script. Because research in English on the visual textuality of Japanese-language literature is scant, my work serves as a fundamental first-step in creating a new area of critical interest by establishing key terms and a general theoretical framework from which to approach the topic. Chapter One establishes the scope of my project and the vocabulary necessary for an analysis of script relative to narrative content; Chapter Two looks at one author’s relationship with written language; and Chapters Three and Four apply the concepts explored in Chapter One to a variety of modern and contemporary literary texts where script plays a central role. -

Comunicado 23 Técnico

Comunicado Técnico 23, ISSN 1415-2118, April 2007, Campinas, SP. Storage and transport of large files. André Luiz dos Santos Furtado, Fernando Antônio de Pádua Paim. Abstract: The growth in the volume of information carried by a single individual or by a team is a worldwide trend, and every day we are confronted with media of greater data storage capacity. The aim of this communication is to explore some options for splitting and transporting large files. It presents tutorials for Winrar, 7-zip and ALZip, programs intended for file compression. It describes the use of Hjsplit, free software that allows files to be split. In addition, it presents two sites, rapidshare and mediafire, intended for file sharing and hosting. 1. Introduction The growth in the volume of information carried by a single individual or by a team is a worldwide trend. In the early 1990s, even in developed countries, a computer with a storage capacity of 12 GB was innovative. Magnetic tapes began to be replaced in the 1950s, when IBM launched the forerunner of today's hard disks, the RAMAC Computer, with a capacity of 5 MB and weighing approximately one ton (ESTADO DE SÃO PAULO, 2006; PCWORLD, 2006) (Figs. 1 and 2). Figure 1 - Transport of the hard disk of the RAMAC Computer, created by IBM in 1956, with a capacity of 5 MB. Figure 2 - Operating room of the RAMAC Computer. Three decades later, the first personal computers had hard disks with a capacity significantly greater than the RAMAC's, around 10 MB; they consumed less energy, cost less and, obviously, had a mass of less than 100 kilograms.