Operation and Reference Manual for the NIST AC-DC Calibration Systems and Software

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Alias Manager 4

CHAPTER 4 Alias Manager. This chapter describes how your application can use the Alias Manager to establish and resolve alias records, which are data structures that describe file system objects (that is, files, directories, and volumes). You create an alias record to take a “fingerprint” of a file system object, usually a file, that you might need to locate again later. You can store the alias record, instead of a file system specification, and then let the Alias Manager find the file again when it’s needed. The Alias Manager contains algorithms for locating files that have been moved, renamed, copied, or restored from backup. Note: The Alias Manager lets you manage alias records. It does not directly manipulate Finder aliases, which the user creates and manages through the Finder. The chapter “Finder Interface” in Inside Macintosh: Macintosh Toolbox Essentials describes Finder aliases and ways to accommodate them in your application. The Alias Manager is available only in system software version 7.0 or later. Use the Gestalt function, described in the chapter “Gestalt Manager” of Inside Macintosh: Operating System Utilities, to determine whether the Alias Manager is present. Read this chapter if you want your application to create and resolve alias records. You might store an alias record, for example, to identify a customized dictionary from within a word-processing document. When the user runs a spelling checker on the document, your application can ask the Alias Manager to resolve the record to find the correct dictionary. To use this chapter, you should be familiar with the File Manager’s conventions for identifying files, directories, and volumes, as described in the chapter “Introduction to File Management” in this book. -

Television Shows

Libraries TELEVISION SHOWS The Media and Reserve Library, located on the lower level west wing, has over 9,000 videotapes, DVDs and audiobooks covering a multitude of subjects. For more information on these titles, consult the Libraries' online catalog. 1950s TV's Greatest Shows DVD-6687 Age and Attitudes VHS-4872 24 Season 1 (Discs 1-3) DVD-2780 Discs Age of AIDS DVD-1721 24 Season 1 (Discs 1-3) c.2 DVD-2780 Discs Age of Kings, Volume 1 (Discs 1-3) DVD-6678 Discs 24 Season 1 (Discs 4-6) DVD-2780 Discs Age of Kings, Volume 2 (Discs 4-5) DVD-6679 Discs 24 Season 1 (Discs 4-6) c.2 DVD-2780 Discs Alfred Hitchcock Presents Season 1 DVD-7782 24 Season 2 (Discs 1-4) DVD-2282 Discs Alias Season 1 (Discs 1-3) DVD-6165 Discs 24 Season 2 (Discs 5-7) DVD-2282 Discs Alias Season 1 (Discs 4-6) DVD-6165 Discs 30 Days Season 1 DVD-4981 Alias Season 2 (Discs 1-3) DVD-6171 Discs 30 Days Season 2 DVD-4982 Alias Season 2 (Discs 4-6) DVD-6171 Discs 30 Days Season 3 DVD-3708 Alias Season 3 (Discs 1-4) DVD-7355 Discs 30 Rock Season 1 DVD-7976 Alias Season 3 (Discs 5-6) DVD-7355 Discs 90210 Season 1 (Discs 1-3) c.1 DVD-5583 Discs Alias Season 4 (Discs 1-3) DVD-6177 Discs 90210 Season 1 (Discs 1-3) c.2 DVD-5583 Discs Alias Season 4 (Discs 4-6) DVD-6177 Discs 90210 Season 1 (Discs 4-5) c.1 DVD-5583 Discs Alias Season 5 DVD-6183 90210 Season 1 (Discs 4-6) c.2 DVD-5583 Discs All American Girl DVD-3363 Abnormal and Clinical Psychology VHS-3068 All in the Family Season One DVD-2382 Abolitionists DVD-7362 Alternative Fix DVD-0793 Abraham and Mary Lincoln: A House -

Telomerase Activity in Cell Lines of Pediatric Soft Tissue Sarcomas

0031-3998/03/5405-0718 PEDIATRIC RESEARCH Vol. 54, No. 5, 2003 Copyright © 2003 International Pediatric Research Foundation, Inc. Printed in U.S.A. Telomerase Activity in Cell Lines of Pediatric Soft Tissue Sarcomas ELKE KLEIDEITER, MATTHIAS SCHWAB, ULRIKE FRIEDRICH, EWA KOSCIELNIAK, BEAT W. SCHÄFER, AND ULRICH KLOTZ Dr. Margarete Fischer-Bosch Institute of Clinical Pharmacology, D-70376 Stuttgart, Germany [E.K., M.S., U.F., U.K.], Department of Pediatric Oncology/Hematology, Olga Hospital, D-70176 Stuttgart, Germany [E.K.], and Division of Clinical Chemistry and Biochemistry, Department of Pediatrics, University Hospital, CH-8032 Zürich, Switzerland [B.W.S.] ABSTRACT Telomeres and their maintenance by telomerase have been implicated to play an important role in carcinogenesis. As almost all malignant tumors express telomerase (in contrast to normal somatic cells), assessment of its activity has been proposed as a diagnostic and prognostic tool. To test the prognostic value of telomerase in pediatric soft tissue sarcoma (STS), we analyzed telomere length (by telomere restriction fragment analysis), telomerase activity (by modified telomerase repeat amplification protocol assay), and expression of human telomerase reverse transcriptase (hTERT) mRNA (by TaqMan technique) in cell lines of different types of STS from 12 children and adolescents. … expression of hTERT mRNA. Thus, we suggest that the significant difference (p < 0.01) in the less aggressive clinical behavior of embryonal rhabdomyosarcomas in comparison to other subtypes may be due to differences in telomerase expression. Taken together, our cell line experiments imply that telomerase activity might be a biologic marker for stratification between STS with different clinical prognosis. (Pediatr Res 54: 718–723, 2003) Abbreviations -

Alias Grace by Margaret Atwood Adapted for the Stage by Jennifer Blackmer Directed by RTE Co-Founder Karen Kessler

Contact: Cathy Taylor / Kelsey Moorhouse Cathy Taylor Public Relations, Inc. For Immediate Release [email protected] June 28, 2017 773-564-9564 Rivendell Theatre Ensemble in association with Brian Nitzkin announces cast for the World Premiere of Alias Grace By Margaret Atwood Adapted for the Stage by Jennifer Blackmer Directed by RTE Co-Founder Karen Kessler Cast features RTE members Ashley Neal and Jane Baxter Miller with Steve Haggard, Maura Kidwell, Ayssette Muñoz, David Raymond, Amro Salama and Drew Vidal September 1 – October 14, 2017 Chicago, IL—Rivendell Theatre Ensemble (RTE), Chicago’s only Equity theatre dedicated to producing new work with women at the core, in association with Brian Nitzkin, announces casting for the world premiere of Alias Grace by Margaret Atwood, adapted for the stage by Jennifer Blackmer, and directed by RTE Co-Founder Karen Kessler. Alias Grace runs September 1 – October 14, 2017, at Rivendell Theatre Ensemble, 5779 N. Ridge Avenue in Chicago. The press opening is Wednesday, September 13 at 7:00pm. This production of Alias Grace replaces the previously announced Cal in Camo, which will now be presented in January 2018. The cast includes RTE members Ashley Neal (Grace Marks) and Jane Baxter Miller (Mrs. Humphrey), with Steve Haggard (Simon Jordan), Maura Kidwell (Nancy Montgomery), Ayssette Muñoz (Mary Whitney), David Raymond (James McDermott), Amro Salama (Jerimiah /Jerome Dupont) and Drew Vidal (Thomas Kinnear). The designers include RTE member Elvia Moreno (scenic), RTE member Janice Pytel (costumes) and Michael Mahlum (lighting). A world premiere adaptation of Margaret Atwood's acclaimed novel Alias Grace takes a look at one of Canada's most notorious murderers. -

Spot the Alias

Spot the alias DIRECTIONS: STEP 1: CUT ALONG DOTTED LINE. STEP 2: FOLD IN HALF VERTICALLY. STEP 3: FOLD INTO ACCORDION. STEP 4: PLACE IN WALLET! CORN EGGS FISH MILK corn sugar, corn syrup, albumin, conalbumin, (includes crustaceans ammonium caseinate, corn syrup solids, egg substitutes, globulin, and shellfish) calcium caseinate, cornstarch, crystalline lecithin (from egg), anchovy, bass, bluefish, magnesium caseinate, fructose, crystalline livetin, lysozyme, meringue, calamari, carp, catfish, potassium caseinate, glucose, dextrose, ovalbumin, char, clam, cod, cockle, sodium caseinate, glucose, glucose syrup, ovomacroglobulin, conch, crab, crayfish, eel, casein, caseinate, * NOTE: SPOT high fructose corn syrup ovomucin, ovomucoid, escargot, halibut, herring, curds, dry milk, hydrolyzed This guide should not (HFCS), lecithin (from corn), ovotransferrin, ovovitellin, lobster, mackerel, casein, hydrolyzed milk be considered the final THE word on your allergen maltodextrin silico-albuminate, mahi-mahi, marlin, protein, lactalbumin, ® and its “aliases” – Simplesse , vitellin mussels, octopus, orange lactate, lactoferrin, ALIAS lactoglobulin, lactose, speak to your doctor roughy, pickerel, pike, about obtaining a An egg by any modified milk ingredients, pollock, prawns, rockfish, complete list. other name… salmon, sardine, shark, Opta™, can be confusing! shrimp, scallops, sea sour cream, sour milk Watch for these urchin, smelt, snails, solids, whey, possible aliases snapper, swordfish, squid, whey protein concentrate, rennet of common tilapia, -

Alias: a Lifelike Immersive Affective Stimulation System

aLIAS: A Lifelike Immersive Affective Stimulation System NEW! Virtual Reality System for Neuroscience Research Now researchers can take experimental investigations of emotion and attention to the next level with aLIAS: A Lifelike Immersive Affective Stimulation System. Developed in partnership with WorldViz, the aLIAS package is a plug-and-play system designed to work seamlessly with the latest HMD technology from Oculus* to create a sophisticated stimulation solution that is truly budget friendly. *Oculus Rift sold separately Inducing an emotional state in laboratory settings requires researchers to use various techniques approximating the immersion of the participant in a real-life situation. Such techniques could involve reading stories, looking at pictures and videos or using actors. These methodologies suffer, in various degrees, from limitations in ecological validity and/or experimental control. BIOPAC and WorldViz have developed a virtual reality based stimulation protocol that aims to deliver lifelike stimulation while maintaining very high experimental control. A collection of stimulation scenarios has been created and is constantly augmented; it is designed so that researchers can expand it easily. Physiological data can be recorded throughout the experiment and markers for events and conditions are automatically added to the physiological data record over the network. The physiological data is recorded using the wireless BioNomadix and MP150 system with AcqKnowledge and Network Data Transfer. aLIAS: Complete Researcher Control, Ready-to-Run Scenarios, Fully Customizable aLIAS allows Oculus Rift users to adjust the order of the stimulation, control the inter stimulus interval and ambient sound and other sound effects without the need for programming. This is a ready to run, plug-and-play series of scenarios that are designed to operate with the Oculus DK2* head mounted display. -

Gender Politics and Social Class in Atwood's Alias Grace Through a Lens of Pronominal Reference

European Scientific Journal December 2018 edition Vol.14, No.35 ISSN: 1857 – 7881 (Print) e - ISSN 1857- 7431 Gender Politics and Social Class in Atwood’s Alias Grace Through a Lens of Pronominal Reference Prof. Claudia Monacelli (PhD) Faculty of Interpreting and Translation, UNINT University, Rome, Italy Doi:10.19044/esj.2018.v14n35p150 URL:http://dx.doi.org/10.19044/esj.2018.v14n35p150 Abstract In 1843, a 16-year-old Canadian housemaid named Grace Marks was tried for the murder of her employer and his mistress. The jury delivered a guilty verdict and the trial made headlines throughout the world. Nevertheless, opinion remained resolutely divided about Marks in terms of considering her a scorned woman who had taken out her rage on two innocent victims, or an unwitting victim herself, implicated in a crime she was too young to understand. In 1996 Canadian author Margaret Atwood reconstructs Grace’s story in her novel Alias Grace. Our analysis probes the story of Grace Marks as it appears in the Canadian television miniseries Alias Grace, consisting of 6 episodes, directed by Mary Harron and based on Margaret Atwood’s novel, adapted by Sarah Polley. The series premiered on CBC on 25 September 2017 and also appeared on Netflix on 3 November 2017. We apply a quantitative (corpus-driven) and qualitative (discourse analytical) approach to examine pronominal reference for what it might reveal about the gender politics and social class in the language of the miniseries. Findings reveal pronouns ‘I’, ‘their’, and ‘he’ in episode 5 of the miniseries highly correlate with both the distinction of gender and social class. -

Assembly Committee on Judiciary-March 1, 2017

MINUTES OF THE MEETING OF THE ASSEMBLY COMMITTEE ON JUDICIARY Seventy-Ninth Session March 1, 2017 The Committee on Judiciary was called to order by Chairman Steve Yeager at 8:01 a.m. on Wednesday, March 1, 2017, in Room 3138 of the Legislative Building, 401 South Carson Street, Carson City, Nevada. The meeting was videoconferenced to Room 4401 of the Grant Sawyer State Office Building, 555 East Washington Avenue, Las Vegas, Nevada. Copies of the minutes, including the Agenda (Exhibit A), the Attendance Roster (Exhibit B), and other substantive exhibits, are available and on file in the Research Library of the Legislative Counsel Bureau and on the Nevada Legislature's website at www.leg.state.nv.us/App/NELIS/REL/79th2017. COMMITTEE MEMBERS PRESENT: Assemblyman Steve Yeager, Chairman Assemblyman James Ohrenschall, Vice Chairman Assemblyman Elliot T. Anderson Assemblywoman Lesley E. Cohen Assemblyman Ozzie Fumo Assemblyman Ira Hansen Assemblywoman Sandra Jauregui Assemblywoman Lisa Krasner Assemblywoman Brittney Miller Assemblyman Keith Pickard Assemblyman Tyrone Thompson Assemblywoman Jill Tolles Assemblyman Justin Watkins COMMITTEE MEMBERS ABSENT: Assemblyman Jim Wheeler (excused) GUEST LEGISLATORS PRESENT: Assemblywoman Irene Bustamante Adams, Assembly District No. 42 Minutes ID: 289 *CM289* Assembly Committee on Judiciary March 1, 2017 Page 2 STAFF MEMBERS PRESENT: Diane C. Thornton, Committee Policy Analyst Brad Wilkinson, Committee Counsel Erin McHam, Committee Secretary Melissa Loomis, Committee Assistant OTHERS PRESENT: Gloria Allred, -

Conducting Business Under an Alias Or an Assumed Name

Verification Assistance Brief Conducting Business Under an Alias or an Assumed Name Issue: This brief explains the Center for Verification and Evaluation’s (CVE) requirements concerning an applicant applying for verification with an alias or an assumed name. (For purposes of this brief, applicant refers to the business entity applying for verification; and participant refers to a business entity that has already been verified.) What This Means: • Each applicant for the Vendor Information Pages (VIP) Verification Program applies as a sole proprietor, a corporation, a partnership, a limited liability company or any other business entity recognized by state or local law. • In the case of a sole proprietorship, a person’s official name is generally found on his or her birth certificate. • When a business registers an alias or an assumed name with a state or local government unit (i.e., one of the 50 states, the District of Columbia, Puerto Rico, or any territory of the United States), it is announcing that it may be using that alias or assumed name to conduct business. • Depending on the state or local statute, an alias or an assumed name may be defined as: a doing business as d/b/a name, a fictitious name, an assumed name, or a trading as t/a name. There is no universal titling or designation for an alias or an assumed name. • An applicant is not required to list its alias or assumed name on its VIP profile or on VA Form 0877. Listing an alias or assumed name is optional. • However, CVE does not allow a company to utilize an alias or assumed name unless it has been registered with a state or local -

Essentials for Attorneys in Child Support Enforcement. INSTITUTION National Inst

DOCUMENT RESUME ED 284 135 CG 020 068 AUTHOR Henry, Michael R.; And Others TITLE Essentials for Attorneys in Child Support Enforcement. INSTITUTION National Inst. for Child Support Enforcement, Chevy Chase, MD. SPONS AGENCY Office of Child Support Enforcement (DHHS), Washington, DC. PUB DATE 86 CONTRACT 600-83-0001 NOTE 418p. PUB TYPE Guides - General (050) -- Legal/Legislative/Regulatory Materials (090) EDRS PRICE MF01/PC17 Plus Postage. DESCRIPTORS Children; *Child Welfare; *Court Litigation; Divorce; Ethics; *Family Problems; Financial Support; Law Enforcement; Laws; *Lawyers; Legal Problems; *Parent Responsibility; *Resource Materials IDENTIFIERS *Child Support; *Child Support Enforcement Program; *Paternity Establishment ABSTRACT This handbook presents a course developed to provide a national perspective for attorneys who represent state and local child support enforcement agencies operating under Title IV-D of the Social Security Act. The introduction provides an overview of the child support problem in the United States, citing causes and effects of the problem and explaining the current status of the Child Support Enforcement Program. Chapter 1 explores the federal role in the Child Support Enforcement Program and examines the functions of the federal Office of Child Support Enforcement. Chapter 2 focuses on state and local roles in the Child Support Enforcement Program. Chapter 3 looks at the role of the attorney in child support enforcement and addresses several specific ethical problems. Chapter 4 concerns the pretrial activities of interviewing witnesses, negotiation, and discovery. The establishment of support obligations is discussed in chapter 5 and enforcing those obligations is considered in chapter 6. Chapter 7 presents defenses to enforcement and chapter 8 looks at expedited processes. -

NEW HAMPSHIRE FISH &GAME DEPARTMENT APPLICATION for VOLUNTEER INSTRUCTOR HUNTER EDUCATION PROGRAM Choose Program You Would L

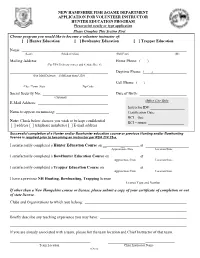

NEW HAMPSHIRE FISH &GAME DEPARTMENT APPLICATION FOR VOLUNTEER INSTRUCTOR HUNTER EDUCATION PROGRAM Please print neatly or type application Please Complete This Section First Choose program you would like to become a volunteer instructor of: [ ] Hunter Education [ ] Bowhunter Education [ ] Trapper Education Name: ____________________________________________________________________________________ (Last) (Maiden/Alias) (Full First) (M) Mailing Address: Home Phone: ( ) (For UPS Delivery (Street and #, State Rte. #) Daytime Phone: ( ) (For Mail Delivery – if different from UPS) Cell Phone: ( )_____________________ City / Town State Zip Code Social Security No: Date of Birth: ________________________ (Optional) Office Use Only E-Mail Address: Instructor ID#: ___________________ Name to appear on nametag: ___________________________ Certification Date: ________________ BCI – Out: ______________________ Note: Check below choices you wish to be kept confidential BCI – return: ____________________ [ ] address [ ] telephone number(s) [ ] E-mail address Successful completion of a Hunter and/or Bowhunter education course or previous Hunting and/or Bowhunting license is required prior to becoming an instructor per RSA 214:23-a. I satisfactorily completed a Hunter Education Course on __ __ at ______________________ Approximate Date Location/State I satisfactorily completed a BowHunter Education Course on __ _ at ______________________ Approximate Date Location/State I satisfactorily completed a Trapper Education Course on __ _ at ______________________ Approximate Date Location/State I have a previous NH Hunting, Bowhunting, Trapping license _______________________________________ License Type and Number If other than a New Hampshire course or license, please submit a copy of your certificate of completion or out of state license. 
Clubs and Organizations to which you belong: Briefly describe any teaching experience you may have: If you are already associated with a team, please list the team location and Chief Instructor of that team. -

Television Shows

Libraries TELEVISION SHOWS The Media and Reserve Library, located on the lower level west wing, has over 9,000 videotapes, DVDs and audiobooks covering a multitude of subjects. For more information on these titles, consult the Libraries' online catalog. 1950s TV's Greatest Shows DVD-6687 Alias Season 2 (Discs 4-6) DVD-6171 Discs 4 24 Season 1 (Discs 1-3) DVD-2780 Discs 1 Alias Season 3 (Discs 1-4) DVD-7355 Discs 1 24 Season 1 (Discs 1-3) c.2 DVD-2780 Discs 1 Alias Season 3 (Discs 5-6) DVD-7355 Discs 5 24 Season 1 (Discs 4-6) DVD-2780 Discs 4 Alias Season 4 (Discs 1-3) DVD-6177 Discs 1 24 Season 1 (Discs 4-6) c.2 DVD-2780 Discs 4 Alias Season 4 (Discs 4-6) DVD-6177 Discs 4 24 Season 2 (Discs 1-4) DVD-2282 Discs 1 Alias Season 5 DVD-6183 24 Season 2 (Discs 5-7) DVD-2282 Discs 5 All American Girl DVD-3363 30 Days Season 1 DVD-4981 Alternative Fix DVD-0793 30 Days Season 2 DVD-4982 Amazing Race Season 1 DVD-0925 30 Days Season 3 DVD-3708 America in Primetime DVD-5425 30 Rock Season 1 DVD-7976 American Horror Story Season 1 DVD-7048 Abolitionists DVD-7362 American Horror Story Season 2: Asylum DVD-7367 Abraham and Mary Lincoln: A House Divided DVD-0001 American Horror Story Season 3: Coven DVD-7891 Adam Bede DVD-7149 American Horror Story Season 4: Freak Show DVD-9562 Adventures of Ozzie and Harriet DVD-0831 American Horror Story Season 5: Hotel DVD-9563 Afghan Star DVD-9194 American Horror Story Season 7: Cult DVD-9564 Age of AIDS DVD-1721 Animaniacs Season 1 (Discs 1-3) c.2 DVD-1686 Discs 1 Age of Kings, Volume 1 (Discs 1-3) DVD-6678 Discs