SD-QA: Spoken Dialectal Question Answering for the Real World

Recommended publications

SOAS Sylheti

Sylheti Language Lessons 2014-2015. SOAS Sylheti Project: After an invitation from the director of the Surma Centre, Camden, during Endangered Languages Week presentations at SOAS in 2012, the SOAS Sylheti Project was created. For the past three years, SOAS MA linguistics students have participated in this extracurricular project to document Sylheti spoken by users of the Surma Centre. The SOAS Fieldmethods course has also worked with Sylheti speakers to document Sylheti grammar. Besides other sub-projects, the SOAS Sylheti Project is compiling a dictionary for the Sylheti language and launching a dictionary app. Contact the SOAS Sylheti Project via email: [email protected] Facebook group: https://www.facebook.com/groups/soassylhetiproject/ YouTube channel: https://www.youtube.com/user/soassylhetiproject SOAS Sylheti Language Society: The SOAS Sylheti Language Society organizes weekly Sylheti language lessons and meets to discuss the grammar of the Sylheti language. Sylheti-speaking members practice teaching and develop lessons. This booklet represents our first efforts to create teaching materials for the Sylheti language. We thank our teachers Kushie, Nadia and Monsur! Facebook page: www.facebook.com/soassylhetilanguagesociety Information on the SOAS Students’ Union webpage: http://soasunion.org/activities/society/7438/ June 2015, London. Pictures in this booklet are taken from pixabay.com unless otherwise specified. Contents: Lesson 00: Spelling and transcription conventions

Language Documentation and Description

Language Documentation and Description, ISSN 1740-6234. This article appears in: Language Documentation and Description, vol 18. Editors: Candide Simard, Sarah M. Dopierala & E. Marie Thaut. Irrealis? Issues concerning the inflected t-form in Sylheti. JONAS LAU. Cite this article: Lau, Jonas. 2020. Irrealis? Issues concerning the inflected t-form in Sylheti. In Candide Simard, Sarah M. Dopierala & E. Marie Thaut (eds.) Language Documentation and Description 18, 56-68. London: EL Publishing. Link to this article: http://www.elpublishing.org/PID/199 This electronic version first published: August 2020. This article is published under a Creative Commons License CC-BY-NC (Attribution-NonCommercial). The licence permits users to use, reproduce, disseminate or display the article provided that the author is attributed as the original creator and that the reuse is restricted to non-commercial purposes i.e. research or educational use. See http://creativecommons.org/licenses/by-nc/4.0/ EL Publishing. For more EL Publishing articles and services: Website: http://www.elpublishing.org Submissions: http://www.elpublishing.org/submissions Jonas Lau, SOAS, University of London. Abstract: Among the discussions about cross-linguistic comparability of grammatical categories within the field of linguistic typology (cf. Cristofaro 2009; Haspelmath 2007), one in particular seems to be especially controversial: is there really such a category as irrealis? This term has been used extensively in descriptive works and grammars to name all kinds of grammatical morphemes occurring in various modal and non-modal contexts. However, cross-linguistic evidence for a unitary category that shares invariant semantic features has not been attested (Bybee 1998:266).

Some Principles of the Use of Macro-Areas Language Dynamics &A

Online Appendix for Harald Hammarström & Mark Donohue (2014) Some Principles of the Use of Macro-Areas, Language Dynamics & Change. Harald Hammarström & Mark Donohue. The following document lists the languages of the world and their assignment to the macro-areas described in the main body of the paper as well as the WALS macro-area for languages featured in the WALS 2005 edition. 7160 languages are included, which represent all languages for which we had coordinates available [1]. Every language is given with its ISO-639-3 code (if it has one) for proper identification. The mapping between WALS languages and ISO-codes was done by using the mapping downloadable from the 2011 online WALS edition [2] (because a number of errors in the mapping were corrected for the 2011 edition). 38 WALS languages are not given an ISO-code in the 2011 mapping, 36 of these have been assigned their appropriate ISO-code based on the sources the WALS lists for the respective language. This was not possible for Tasmanian (WALS-code: tsm) because the WALS mixes data from very different Tasmanian languages and for Kualan (WALS-code: kua) because no source is given. 17 WALS-languages were assigned ISO-codes which have subsequently been retired; these have been assigned their appropriate updated ISO-code. In many cases, a WALS-language is mapped to several ISO-codes. As this has no bearing for the assignment to macro-areas, multiple mappings have been retained. [1] There are another couple of hundred languages which are attested but for which our database currently lacks coordinates.

Numbers in Bengali Language

NUMBERS IN BENGALI LANGUAGE A dissertation submitted to Assam University, Silchar in partial fulfilment of the requirement for the degree of Masters of Arts in Department of Linguistics. Roll - 011818 No - 2083100012 Registration No 03-120032252 DEPARTMENT OF LINGUISTICS SCHOOL OF LANGUAGE ASSAM UNIVERSITY SILCHAR 788011, INDIA YEAR OF SUBMISSION : 2020 CONTENTS Title Page no. Certificate 1 Declaration by the candidate 2 Acknowledgement 3 Chapter 1: INTRODUCTION 1.1.0 A rapid sketch on Assam 4 1.2.0 Etymology of “Assam” 4 Geographical Location 4-5 State symbols 5 Bengali language and scripts 5-6 Religion 6-9 Culture 9 Festival 9 Food habits 10 Dresses and Ornaments 10-12 Music and Instruments 12-14 Chapter 2: REVIEW OF LITERATURE 15-16 Chapter 3: OBJECTIVES AND METHODOLOGY Objectives 16 Methodology and Sources of Data 16 Chapter 4: NUMBERS 18-20 Chapter 5: CONCLUSION 21 BIBLIOGRAPHY 22 CERTIFICATE DEPARTMENT OF LINGUISTICS SCHOOL OF LANGUAGES ASSAM UNIVERSITY SILCHAR DATE: 15-05-2020 Certified that the dissertation/project entitled “Numbers in Bengali Language” submitted by Roll - 011818 No - 2083100012 Registration No 03-120032252 of 2018-2019 for Master degree in Linguistics in Assam University, Silchar. It is further certified that the candidate has complied with all the formalities as per the requirements of Assam University. I recommend that the dissertation may be placed before examiners for consideration of award of the degree of this university. 5.10.2020 (Asst. Professor Paramita Purkait) Name & Signature of the Supervisor Department of Linguistics Assam University, Silchar 1 DECLARATION I hereby Roll - 011818 No - 2083100012 Registration No – 03-120032252 hereby declare that the subject matter of the dissertation entitled ‘Numbers in Bengali language’ is the record of the work done by me.

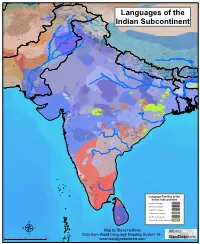

Map by Steve Huffman; Data from World Language Mapping System

[Map labels, mostly for Europe: countries and territories (e.g. Svalbard, Iceland, Norway, Sweden, United Kingdom, Germany, Poland, France) and languages (e.g. Norwegian, Swedish, Faroese, Scottish Gaelic, Irish Gaelic, Dutch, Polish, Standard German). The full label list is omitted.]

Essays on Cultural and Institutional Dynamics in Economic Development Using Spatial Analysis

Essays on Cultural and Institutional Dynamics in Economic Development Using Spatial Analysis by Timothy Birabi. Submitted in fulfillment of the requirements for the Degree of Doctor of Philosophy, Adam Smith Business School, College of Social Sciences, University of Glasgow, April 2016. © Timothy Birabi. Abstract: This thesis seeks to research patterns of economic growth and development from a number of perspectives often resonated in the growth literature. By addressing themes about history, geography, institutions and culture the thesis is able to bring to bear a wide range of inter-related literatures and methodologies within a single content. Additionally, by targeting different administrative levels in its research design and approach, this thesis is also able to provide a comprehensive treatment of the economic growth dilemma from both cross-national and sub-national perspectives. The three chapters herein discuss economic development from two broad dimensions. The first of these chapters takes on the economic growth inquiry by attempting to incorporate cultural geography within a cross-country formal spatial econometric growth framework. By introducing the global cultural dynamics of languages and ethnic groups as spatial network mechanisms, this chapter is able to distinguish economic growth effects accruing from own-country productive efforts from those accruing from interconnections within a global productive network chain. From this, discussions and deductions about the implications for both developed and developing countries are made as regards potentials for gains and losses from such types and levels of productive integration. The second and third chapters take a different spin to the economic development inquiry. They both focus on economic activity in Africa, tackling the relevant issues from a geo-intersected dimension involving historic regional tribal homelands and modern national and subnational administrative territories.

Map by Steve Huffman Data from World Language Mapping System 16

[Map: Languages of the Indian Subcontinent. Language labels include Tajiki, Wakhi, Khowar, Shina, Kashmiri, Ladakhi, Western Panjabi, Eastern Panjabi, Brahui, Seraiki, Nepali and Urdu. The full label list is omitted.]

Introducing the Sylheti Language and Its Speakers, and the SOAS Sylheti Project

Language Documentation and Description, ISSN 1740-6234. This article appears in: Language Documentation and Description, vol 18. Editors: Candide Simard, Sarah M. Dopierala & E. Marie Thaut. Introducing the Sylheti language and its speakers, and the SOAS Sylheti project. CANDIDE SIMARD, SARAH M. DOPIERALA & E. MARIE THAUT. Cite this article: Simard, Candide, Sarah M. Dopierala & E. Marie Thaut. 2020. Introducing the Sylheti language and its speakers, and the SOAS Sylheti project. In Candide Simard, Sarah M. Dopierala & E. Marie Thaut (eds.) Language Documentation and Description 18, 1-22. London: EL Publishing. Link to this article: http://www.elpublishing.org/PID/196 This electronic version first published: August 2020. This article is published under a Creative Commons License CC-BY-NC (Attribution-NonCommercial). The licence permits users to use, reproduce, disseminate or display the article provided that the author is attributed as the original creator and that the reuse is restricted to non-commercial purposes i.e. research or educational use. See http://creativecommons.org/licenses/by-nc/4.0/ EL Publishing. For more EL Publishing articles and services: Website: http://www.elpublishing.org Submissions: http://www.elpublishing.org/submissions Candide Simard, The University of the South Pacific; Sarah M. Dopierala, Goethe University Frankfurt; E. Marie Thaut, SOAS, University of London. Abstract: Sylheti is a minoritised, politically unrecognised, and understudied Eastern Indo-Aryan language with approximately 11 million speakers worldwide, with high speaker concentrations in the Surma and Barak river basins in north-eastern Bangladesh and south Assam, India, and in several diasporic communities around the world (especially UK, USA, and Middle East).

Languages of India Being a Reprint of Chapter on Languages

THE LANGUAGES OF INDIA, BEING A REPRINT OF THE CHAPTER ON LANGUAGES CONTRIBUTED BY GEORGE ABRAHAM GRIERSON, C.I.E., PH.D., D.LITT., HIS MAJESTY'S INDIAN CIVIL SERVICE, TO THE REPORT ON THE CENSUS OF INDIA, 1901, TOGETHER WITH THE CENSUS STATISTICS OF LANGUAGE. CALCUTTA: OFFICE OF THE SUPERINTENDENT OF GOVERNMENT PRINTING, INDIA. 1903. CALCUTTA: GOVERNMENT OF INDIA CENTRAL PRINTING OFFICE, HASTINGS STREET. CONTENTS. INTRODUCTION: Present Knowledge 1; The Linguistic Survey 1; Number of Languages spoken 1; Ethnology and Philology 2; Tribal dialects 3; Identification and Nomenclature of Indian Languages 3; General arrangement of Chapter 4. THE MALAYO-POLYNESIAN FAMILY. THE MALAY GROUP: Selung 4; Nicobarese 5. THE INDO-CHINESE FAMILY: Early investigations 5; Latest investigations 5; Principles of classification 5; Original home 6; Mon-Khmers 6; Tibeto-Burmans 7; Two main branches 7; Tibeto-Himalayan Branch 7; Assam-Burmese Branch. Its probable lines of migration 7; Siamese-Chinese 7; Karen 7; Chinese 7; Tai 7; Summary 8; General characteristics of the Indo-Chinese languages 8; Isolating languages 8; Agglutinating languages 9; Inflecting languages 9; Expression of abstract and concrete ideas 9; Tones 10; Order of words 11. THE MON-KHMER SUB-FAMILY: In Further India 11; In Assam 11; In Burma 11; Connection with Munda, Nicobar, and Malacca languages 12; Connection with Australia 12; Palaung a Mon-Khmer dialect 12; Mon 12; Palaung-Wa group 12; Khassi 12. THE TIBETO-BURMAN SUB-FAMILY: Tibeto-Himalayan and Assam-Burmese branches 13; North Assam branch 13; Mutual relationship of the three branches 13. Tibeto-Himalayan Branch.

Map by Steve Huffman; Data from World Language Mapping System 16 - New Zealand English French Southern and Antarctic Lands

[Map labels for the Arctic and northern Eurasia: countries and territories (e.g. Greenland, Svalbard, Iceland, Norway, Sweden, Finland, Russia) and languages (e.g. Greenlandic Inuktitut, Nenets, Nganasan, North Saami, Evenki, Koryak, Chukchi). The full label list is omitted.]

The Interference of the Mother Tongue on English Speaking Sylheti Bengali

English as an International Language, Vol. 15, Issue 2. Changing Contours: The Interference of the Mother Tongue on English Speaking Sylheti Bengali. Arpita Goswami, Kalinga Institute of Industrial Technology, Bhubaneswar, India (Erratum: Republication of previously published paper in EILJ, 2020, Volume 15, Issue 1, pp. 100-134 due to IPA font distortions that occurred in the publication process). Abstract: The Sylheti Bangla, a variety of Bangla language, is primarily spoken in the Sylhet District of Bangladesh, Barak Valley of Assam, Tripura, especially in North Tripura. The systematic perusal on English spoken by Sylheti Bangla speakers demonstrates that it carries a huge difference with English (RP) specially in respect to pronunciation, syllable structure, stress, intonation. The disparities between Sylheti English and English (RP) are because of a number of divergent variances between Sylheti Bangla and English which often makes the acquisition of English an arduous job for the Sylheti speakers. The errors committed by Sylheti speakers during their speaking in English can be attributed to the interference of the rule of mother tongue in learning the second language. This interference can be of phonological, morphological and sentence structure. However, the domain of this paper is exclusive to phonological interference of L1 on L2 through the contrastive analysis of the phonological aspects of Sylheti Bangla and English (RP). This paper intends to improve the status of English teaching-learning process compared to contemporary practices. Thus, it aspires to contribute to the theory of second language acquisition with a particular focus on learning English by the Sylheti speakers. Keywords: Mother tongue interference, Sylheti English, phonological interference, contrastive analysis. Introduction: All languages have their own patterns of linguistic system and the nature of this system differs from one another.

Research on Folklore in Sylhet Region of Bangladesh: a Study of Chowdhury Harun Akbor

International Journal on Studies in English Language and Literature (IJSELL) Volume 2, Issue 8, August 2014, PP 131-134 ISSN 2347-3126 (Print) & ISSN 2347-3134 (Online) www.arcjournals.org Research on Folklore in Sylhet Region of Bangladesh: A Study of Chowdhury Harun Akbor Sofe Ahmed PhD Fellow, Islamic University of Kushtia Abstract: Folklore seems to be a deep-rooted tree in the garden of world literature. Its origin, appeal, and practice are quite antique and popular in the realm of literary culture of Bangladesh particularly of Sylhet region. Sylhet has a diversified geographic, cultural, and literary tradition from early stage of ‘Sylheti’ as well as Bengali literature which vividly influence the language and the theme of ‘Charjapod’, the oldest symphony or sign of Bengali literature (Ali, 2002). This affluent and progressive literary convention pervades and enlightens the mind of dwellers of every level of this area. Consequently literature becomes an inevitable part of the life of ‘Sylheti’ people. Folklore is the child of that masses’ mind of aptitude. ‘Sylheti’ folk-poets basically belong to religious commoners and self educated class, and thus their works thematically largely cover the arena of socio-religious functions of the inhabitant of this region. The modern higher educated successors of those traditional folk-poets get greatly impressed by the folk tradition of predecessor and they feel indebted and obliged to pursue research on their works for making eternal them in the memory of their generations. And they are contributing in this field successfully through continuous efforts. Mr. Harun Akbor is one of those competent and successful successor scholars.