Estudio, Análisis E Implementación Del Vocoder Mediante Procesado

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


DOXA Festival 2004

2 table of contents General Festival Information - Tickets, Venues 3 The Documentary Media Society 5 Acknowledgements 6 Partnership Opportunities 7 Greetings 9 Welcome from DOXA 11 Opening Night - The Take 13 Gals of the Great White North: Movies by Canadian Women 14 Inheritance: A Fisherman’s Story 15 Mumbai, India. January, 2004. The World Social Forum. by Arlene Ami 16 Activist Documentaries 18 Personal Politics by Ann Marie Fleming 19 Sherman’s March 20 NFB Master Class with Alanis Obomsawin 21 Festival Schedule 23 Fragments of a Journey in Palestine-Israel 24 Born Into Brothels 25 The Cucumber Incident 26 No Place Called Home 27 Word Wars 29 Trouble in the Image by Alex MacKenzie 30 The Exhibitionists 31 Haack: The King of Techno + Sid Vision 33 A Night Out with the Guys (Reeking of Humanity) 34 Illustrating the Point: The Use of Animation in Documentary 36 Closing Night - Screaming Men 39 Sources 43 3 4 general festival information Tickets Venues Opening Night Fundraising Gala: The Vogue Theatre (VT) 918 Granville Street $20 regular / $10 low income (plus $1.50 venue fee) Pacific Cinémathèque (PC) 1131 Howe Street low income tickets ONLY available at DOXA office (M-F, 10-5pm) All programs take place at Pacific Cinémathèque except Tuesday May Matinee (before 6 pm) screenings: $7 25 - The Take, which is at the Vogue Theatre. Evening (after 6 pm) screenings: $9 Closing Night: $15 screening & reception The Vogue Theatre and Pacific Cinémathèque are Festival Pass: $69 includes closing gala screening & wheelchair accessible. reception (pass excludes Opening Gala) Master Class: Free admission Festival Information www.doxafestival.ca Festival passes are available at Ticketmaster only (pass 604.646.3200 excludes opening night). -

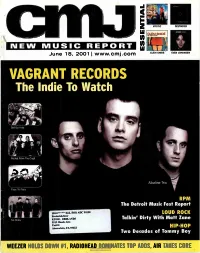

VAGRANT RECORDS the Indie to Watch

VAGRANT RECORDS The Indie To Watch Get Up Kids Rocket From The Crypt Alkaline Trio Face To Face RPM The Detroit Music Fest Report LOUD ROCK Talkin' Dirty With Matt Zane No Motiv HIP-HOP Two Decades of Tommy Boy WEEZER HOLDS DOWN el, RADIOHEAD DOMINATES TOP ADDS AIR TAKES CORE "Tommy's one of the most creative and versatile multi-instrumentalists of our generation." _BEN HARPER HINTO THE "Geggy Tah has a sleek, pointy groove, hitching the melody to one's psyche with the keen handiness of a hat pin." _BILLBOARD AT RADIO NOW RADIO: TYSON HALLER RETAIL: ON FEDDOR BILLY ZARRO 212-253-3154 310-288-2711 201-801-9267 www.virginrecords.com [email protected] [email protected] [email protected] 2001 Virgin Records America, Inc. FEATURING "LAPDANCE" PARENTAL ADVISORY IN SEARCH OF... EXPLICIT CONTENT 6/18/2001 Issue 719 • Vol 68 • No 1 FEATURES 8 Vagrant Records: The Little Indie That Could Boasting a roster that includes the likes of the Get Up Kids, Alkaline Trio and Rocket From The Crypt, Vagrant Records has become one of the preeminent punk labels of the new decade. But thanks to a new distribution deal with TVT, the label's sales are proving it to be the indie, punk or otherwise, to watch in 2001. DEPARTMENTS 4 Essential Our picks for the best new music of the week: Mystic, Clem Snide, Destroyer, and Even Johansen. 24 New World An obit on Cameroonian music legend Francis Bebey, the return of the Free Reed

Autotune Bc.Pdf

MASARYK UNIVERSITY Faculty of Arts Department of Musicology Theory of Interactive Media BACHELOR'S DIPLOMA THESIS The Auto-Tune Phenomenon as a Manifestation of the Automation and Dehumanization of Music Václav Římánek Supervisor: PhDr. Aleš Opekar, CSc. 2016 DECLARATION I declare that I wrote this bachelor's thesis independently. In it I used sources and literature that are duly and properly cited in the footnotes, all of which are listed in the bibliography of this thesis. Brno, 11 May 2016 Václav Římánek ACKNOWLEDGEMENTS I would like to thank PhDr. Aleš Opekar, CSc. for his supervision, consultations, and valuable comments on this thesis. I would also like to thank everyone who supported me while I was writing it. Contents Introduction 1. What is Auto-Tune? 1.1. General definition 1.2. How Auto-Tune works 2. History 2.1. Predecessors of Auto-Tune

Perspectives on the Aesthetics & Phenomenology Of

A LIBERATED SONIC SUBLIME: Perspectives On The Aesthetics & Phenomenology Of Sound Synthesis Anders Bach Pedersen IT University, Copenhagen, Denmark [email protected] ABSTRACT In this paper I will investigate the aesthetics of electronic sound synthesis, materiality and the contemporary sublime in an analysis and discussion of interrelated phenomenological, philosophical and cultural considerations through chosen sound and music examples. I argue that the aesthetic experience of sonic timbres that seem unearthly to us resembles that of a transcendental sublime in the uncanny experience of the synthesis of both known and unknown sounds. Both experimental music and “switched-on” reinterpretations are addressed through explorations of sound in time, space and technology and I discuss if we as listeners are able to differentiate materiality from its superficial cognates when challenged by sonic doppelgängers. of music, or we can ultimately try to fathom the sounds in their own existence and in conjunction with one another to provoke a mood, emotional and/or sensory relation within the listener. The objectification and paradox in the effort of materializing sound becomes a personal aesthetic judgment of timbre and Kantian aesthetics [4], but I will later in this paper argue that it is in fact an active participation in a phenomenological liberation of sound in continuum of the idea presented by Edgard Varèse [5] in 1936. I do acknowledge specific types of synthesis – additive, subtractive, FM etc. – with regards to their different timbral qualities, albeit theory in this area does not serve any major importance for the points made in this discussion as it is mainly based on aesthetics. In this paper I will firstly discuss the nature of sound

BEAUTIFUL NOISE Directions in Electronic Music

BEAUTIFUL NOISE Directions in Electronic Music www.ele-mental.org/beautifulnoise/ A WORK IN PROGRESS (3rd rev., Oct 2003) Comments to [email protected] 1 A Few Antecedents The Age of Inventions The 1800s produce a whole series of inventions that set the stage for the creation of electronic music, including the telegraph (1839), the telephone (1876), the phonograph (1877), and many others. Many of the early electronic instruments come about by accident: Elisha Gray’s ‘musical telegraph’ (1876) is an extension of his research into telephone technology; William Du Bois Duddell’s ‘singing arc’ (1899) is an accidental discovery made from the sounds of electric street lights. (Figure caption: “The musical telegraph”, Elisha Gray’s interesting instrument, 1876) The Telharmonium Thaddeus Cahill's telharmonium (aka the dynamophone) is the most important of the early electronic instruments. Its first public performance is given in Massachusetts in 1906. It is later moved to NYC in the hopes of providing soothing electronic music to area homes, restaurants, and theatres. However, the enormous size, cost, and weight of the instrument (it weighed 200 tons and occupied an entire warehouse), not to mention its interference of local phone service, ensure the telharmonium’s swift demise. No recordings of the instrument survive, but some of its principles are later incorporated into the Hammond organ. More importantly, Cahill’s idea of ‘canned music,’ later taken up by Muzak in the 1960s and more recent cable-style systems, is now an inescapable feature of the contemporary landscape. (Figure caption: Telharmonic Hall, Cahill’s 200-ton experiment in canned music, ca. 1910)

The Snow Miser Song 6Ix Toys - Tomorrow's Children (Feat

(Sandy) Alex G - Brite Boy 1910 Fruitgum Company - Indian Giver 2 Live Jews - Shake Your Tuchas 45 Grave - The Snow Miser Song 6ix Toys - Tomorrow's Children (feat. MC Kwasi) 99 Posse;Alborosie;Mama Marjas - Curre curre guagliò still running A Brief View of the Hudson - Wisconsin Window Smasher A Certain Ratio - Lucinda A Place To Bury Strangers - Straight A Tribe Called Quest - After Hours Édith Piaf - Paris Ab-Soul;Danny Brown;Jhene Aiko - Terrorist Threats (feat. Danny Brown & Jhene Aiko) Abbey Lincoln - Lonely House - Remastered Abbey Lincoln - Mr. Tambourine Man Abner Jay - Woke Up This Morning ACID MOTHERS TEMPLE - Are We Experimental? Adolescents - Democracy Adrian Sherwood - No Dog Jazz Afro Latin Vintage Orchestra - Ayodegi Afrob;Telly Tellz;Asmarina Abraha - 808 Walza Afroman - I Wish You Would Roll A New Blunt Afternoons in Stereo - Kalakuta Republik Afu-Ra - Whirlwind Thru Cities Against Me! - Transgender Dysphoria Blues Aim;Qnc - The Force Al Jarreau - Boogie Down Alabama Shakes - Joe - Live From Austin City Limits Albert King - Laundromat Blues Alberta Cross - Old Man Chicago Alex Chilton - Boplexity Alex Chilton;Ben Vaughn;Alan Vega - Fat City Alexia;Aquilani A. - Uh La La La AlgoRythmik - Everybody Gets Funky Alice Russell - Humankind All Good Funk Alliance - In the Rain Allen Toussaint - Yes We Can Can Alvin Cash;The Registers - Doin' the Ali Shuffle Amadou & Mariam - Mon amour, ma chérie Ananda Shankar - Jumpin' Jack Flash Andrew Gold - Thank You For Being A Friend Andrew McMahon in the Wilderness - Brooklyn, You're -

Electronic Music

ELECTRONIC MUSIC Definitions Electronic music refers to music that emphasizes the use of electronic musical instruments or electronic music technology as a central aspect of the sound of the music. Basics Historically, electronic music was considered to be any music created with the use of electronic musical instruments or electronic processing, but in modern times that distinction has been lost because almost all recorded music today, and the majority of live music performances, depend on extensive use of electronics. Today, the term electronic music serves to differentiate music that uses electronics as its focal point or inspiration from music that uses electronics mainly in service of creating an intended production that may have some electronic elements in the sound but does not focus upon them. Contemporary electronic music includes both art music forms, such as electronic art music, experimental music, musique concrète, and others, and popular music forms, including multiple styles of dance music such as techno, house, trance, electro, breakbeat, drum and bass, industrial music, synth pop, etc. A distinction can be made between instruments that produce sound through electromechanical means and instruments that produce sound using electronic components. Examples of electromechanical instruments are the telharmonium, Hammond B3, and the electric guitar, whereas examples of electronic instruments are a Theremin, synthesizer, and a computer. History Late 19th century to early 20th century Before electronic music, there was a growing desire for composers to use emerging technologies for musical purposes.

Youtube Nightstick

Iron Claw - Skullcrusher - 1970 - YouTube Damon -[1]- Song Of A Gypsy - YouTube Chromakey Dreamcoat - YouTube Nightstick - Dream of the Witches' Sabbath/Massacre of Innocence (Air Attack) - YouTube Nightstick - Four More Years - YouTube Orange Goblin - The Astral Project - YouTube Orange Goblin - Nuclear Guru - YouTube Skepticism - Sign of the Storm - YouTube Cavity - Chase - YouTube Supercollider - YouTube Satin Black - YouTube Today Is The Day - Willpower - 2 - My First Knife - YouTube Alex G - Gnaw - YouTube Stereo Total - Baby Revolution - YouTube Add N To (X) Metal Fingers In My Body - YouTube 'Samurai' by X priest X (Official) - YouTube Bachelorette - I Want to be Your Girlfriend (Bored to Death ending credits) - YouTube Bachelorette - Her Rotating Head (Official) - YouTube The Bug - 'Fuck a Bitch' ft. Death Grips - YouTube Stumble On Tapes - Girlpool - YouTube Joanna Gruesome - Talking To Yr Dick - YouTube Joanna Gruesome - Lemonade Grrl - YouTube BLACK DIRT OAK "Demon Directive" - YouTube Black Dirt Oak - From The Jaguar Priest - YouTube alternative tv how much longer - YouTube Earthless - Lost in the Cold Sun - YouTube Electric Moon - The Doomsday Machine (2011) [Full Album] - YouTube CWC Music Video Holding Out For a Hero - YouTube La Femme - Psycho Tropical Berlin (Full Album) - YouTube Reverend Alicia - YouTube The Phi Mu Washboard Band - Love Hurts - YouTube BJ SNOWDEN IN CANADA - YouTube j-walk - plastic face (prod. cat soup) - YouTube BABY KAELY "SNEAKERHEAD" AMAZING 9 YEAR OLD RAPPER - YouTube Bernard + Edith - Heartache -

Seagram Completes Polygram Buy Job Cuts Seen Starting in January

DECEMBER 28 1998 ISSUE 600 VOL. 57 NO.1 WWW.CMJ.COM Seagram Completes PolyGram Buy: Job Cuts Seen Starting In January On December 10, Seagram formally completed its $10.2 billion acquisition of PolyGram, creating the combined company Universal Music Group. In a statement on the company's restructuring, UMG CEO Doug Morris didn't disclose the number of layoffs, but industry watchers have speculated that 2,000-3,000 jobs (of the combined 15,500) will be cut. Announcements about staff cuts and artist drops are expected in January. "The integration of these two companies presents a rare opportunity to create an organization that is well positioned for profitable growth," Morris said. "UMG will be a lean, flexible organization that will benefit from economies of scale, while nurturing a strong local entrepreneurial spirit in its management team around the world." UMG's worldwide executive team will also include Vice Chairman Bruce Hack, President/COO Zach Horowitz and International Chairman/CEO Jorgen Larsen. The Universal restructuring plans, many of which were first outlined in a Los Angeles Times article in November, include the merger of Island Records and Mercury Records. Jim Caparro and John Reid will run (continued on page 14) Snot Singer Killed In Car Crash Lynn Strait, the 30-year-old singer and lyricist of the hard rock band Snot, died in a six-vehicle car crash December 11. Reportedly, Strait was exiting California Highway 101 between his native Santa Barbara and Springsteen To Tour With E Street Band Bruce Springsteen has announced plans to launch a worldwide tour with his legendary E Street Band in summer 1999.

Alternative Histories of Electronic Music 14-16 April 2016 International Conference Staged As Part of the AHRC Funded Project Hugh Davies: Electronic Music Innovator

Alternative Histories of Electronic Music 14-16 April 2016 International conference staged as part of the AHRC funded project Hugh Davies: Electronic Music Innovator Science Museum Dana Research Centre, Queen’s Gate, London The Hugh Davies Project This first international conference on ‘Alternative Histories of Electronic Music’ (AHEM) is being staged as part of an AHRC-funded project exploring the work of the English musician and musicologist Hugh Davies (1943-2005). The project is led by Dr James Mooney (University of Leeds) in partnership with Dr Tim Boon (Science Museum). http://hughdaviesproject.wordpress.com In the late 1960s, Davies produced a comprehensive inventory of electronic music compositions, entitled International Electronic Music Catalog (1968), in which he documented the output of 560 studios in 39 countries. This challenged the hegemony of the Paris, Cologne, and New York schools, whose activities had dominated the literature of the 1950s and 60s. As such, Davies provided what was perhaps the first alternative version of electronic music’s history. While this conference is not directly ‘about’ Hugh Davies, then, it does explore some of the broader issues raised by his work. 2 Table of Contents Schedule ............................................................................................................................................ 4 Session 1: Discourses, narratives and canon formation 1 ............................................ 7 Session 2A: Live Electronics .................................................................................................. -

Mm__L * Liiillenniumprojecu I-Or I-Ou HEAL ALOITI Afld H2

THAT "ALTERNATIVE OR MARGINAL" MAGAZINE FROM CITR 101.9 FM unuon <WEM jomion o-ucc D MIPE**? FUR-*" _\mm__L_* liiiLLEnnium PROJECU i-or i-ou HEAL ALOITI AflD H2» PACCAGE MIAPCA^E FLOORS aFFUM VlslTDUR CANADA'S LARGEST AND BEST KNOWN RECORD STORE «RbAW^ FLOOR 568 SEYMOUR ST. www.samscd.com EEn________™_3_SS_i- Features HOT HOT HEAT MILLENNIUM PROJECT SNAPCASE ATOM AND HIS PACKAGE CHUCK D SUPER FURRY ANIMALS LINTON KWESI JOHNSON the editing times: barbara andersen the ad times: maren hancock the art times: robert horsman the production times: DAS BOOK tristan winch LOUDER THAN A BOMB the design times: VlDEOPHILTER UNDER REVIEW chad christie, rob horsman, REAL LIVE ACTION mike josephson, ken paul, ON THE DIAL tristan winch CHARTS the photography and DATEBOOK illustrations times: rob brownridge, jason da silva, christine gfroerer, ann goncalves, patrick I DIDN'T WANT ANYTHING IN THIS ISSUE TO MAKE REFERENCE TO hemingway, wonder knack OUR ARBITRARY DATING SYSTEM'S IMMINENT TURNOVER, BUT THE the proofing times: CONTRIBUTORS ARE THE BOSS OF ME SO WHAT CAN I DO. nick bradley, chris dryden, NEVERTHELESS, I LOVE THIS COVER BY LOCAL STAY AS You ARE ann goncalves, hancunt, CREATOR BRAD YUNG. DESIGN BY ROB HORSMAN. jannine lasaleta, dorerta "DiSCORDER" 1999 by the Student Radi lau, rowan lipkovits, rsity of British Columbia. All rights reserved. Ci duncan mchugh, christa i, anthony i, payabl* schrag, erin shaw, $15 fore graeme worthy the contributor times: ke cheques tania a, rob b, chris c, DEADLINES: Copy deadline for the February issue is January julie c, mike c, mike d, 1 2. -

The History of Rock Music: 1966-1969

The History of Rock Music: 1966-1969 Genres and musicians of the Sixties (Copyright © 2009 Piero Scaruffi) Electronics and Rock 1968-70 (These are excerpts from my book "A History of Rock and Dance Music") The single event that would eventually revolutionize rock music down to the deepest fiber of its nature was the advent of electronic instruments. In 1966 the USA inventor Robert Moog began selling his "synthesizer", a new kind of instrument, the first instrument that could play more than one "voice" and even imitate the voices of all the other instruments. The avantgarde was quick to seize on the idea. Morton Subotnick, for example, published a free improvisation on synthesizer, Silver Apples of the Moon (1967), which was simply the classical equivalent of acid-rock. Until then, electronic music had been a luxury that very few popular musicians could afford. Most synthesizers were owned by classical music centers or by large recording studios. Despite the practical difficulties, a few visionary composers introduced electronic arrangements in popular music, following the success of the Beach Boys' Good Vibrations (1966). German-born Palestine-raised composer Gershon Kingsley (Goetz-Gustav Ksinski) relocated to the USA in 1946 and in 1955 moved to New York, where he began to work on Broadway musicals.