GPU Architecture Part 2: SIMT Control Flow Management

Recommended publications

-

Reviving the Development of Openchrome

Reviving the Development of OpenChrome Kevin Brace OpenChrome Project Maintainer / Developer XDC2017 September 21st, 2017 Outline ● About Me ● My Personal Story Behind OpenChrome ● Background on VIA Chrome Hardware ● The History of OpenChrome Project ● Past Releases ● Observations about Standby Resume ● Developmental Philosophy ● Developmental Challenges ● Strategies for Further Development ● Future Plans 09/21/2017 XDC2017 2 About Me ● EE (Electrical Engineering) background (B.S.E.E.) who specialized in digital design / computer architecture in college (pretty much the only undergraduate student “still” doing this stuff where I attended college) ● Graduated recently ● First time conference presenter ● Very experienced with Xilinx FPGA (Spartan-II through 7 Series FPGA) ● Fluent in Verilog / VHDL design and verification ● Interest / design experience with external communication interfaces (PCI / PCIe) and external memory interfaces (SDRAM / DDR3 SDRAM) ● Developed a simple DMA engine for PCI I/F validation w/Windows WDM (Windows Driver Model) kernel device driver ● Almost all the knowledge I have is self taught (university engineering classes were not very useful) 09/21/2017 XDC2017 3 Motivations Behind My Work ● General difficulty in obtaining meaningful employment in the digital hardware design field (too many students in the field, difficulty obtaining internship, etc.) ● Collects and repairs abandoned computer hardware (It’s like rescuing puppies!) ● Owns 100+ desktop computers and 20+ laptop computers (mostly abandoned old stuff I -

Inside Intel® Core™ Microarchitecture Setting New Standards for Energy-Efficient Performance

White Paper Inside Intel® Core™ Microarchitecture Setting New Standards for Energy-Efficient Performance Ofri Wechsler Intel Fellow, Mobility Group Director, Mobility Microprocessor Architecture Intel Corporation White Paper Inside Intel®Core™ Microarchitecture Introduction Introduction 2 The Intel® Core™ microarchitecture is a new foundation for Intel®Core™ Microarchitecture Design Goals 3 Intel® architecture-based desktop, mobile, and mainstream server multi-core processors. This state-of-the-art multi-core optimized Delivering Energy-Efficient Performance 4 and power-efficient microarchitecture is designed to deliver Intel®Core™ Microarchitecture Innovations 5 increased performance and performance-per-watt—thus increasing Intel® Wide Dynamic Execution 6 overall energy efficiency. This new microarchitecture extends the energy efficient philosophy first delivered in Intel's mobile Intel® Intelligent Power Capability 8 microarchitecture found in the Intel® Pentium® M processor, and Intel® Advanced Smart Cache 8 greatly enhances it with many new and leading edge microar- Intel® Smart Memory Access 9 chitectural innovations as well as existing Intel NetBurst® microarchitecture features. What’s more, it incorporates many Intel® Advanced Digital Media Boost 10 new and significant innovations designed to optimize the Intel®Core™ Microarchitecture and Software 11 power, performance, and scalability of multi-core processors. Summary 12 The Intel Core microarchitecture shows Intel’s continued Learn More 12 innovation by delivering both greater energy efficiency Author Biographies 12 and compute capability required for the new workloads and usage models now making their way across computing. With its higher performance and low power, the new Intel Core microarchitecture will be the basis for many new solutions and form factors. 
In the home, these include higher performing, ultra-quiet, sleek and low-power computer designs, and new advances in more sophisticated, user-friendly entertainment systems. -

POWER-AWARE MICROARCHITECTURE: Design and Modeling Challenges for Next-Generation Microprocessors

POWER-AWARE MICROARCHITECTURE: Design and Modeling Challenges for Next-Generation Microprocessors THE ABILITY TO ESTIMATE POWER CONSUMPTION DURING EARLY-STAGE DEFINITION AND TRADE-OFF STUDIES IS A KEY NEW METHODOLOGY ENHANCEMENT. OPPORTUNITIES FOR SAVING POWER CAN BE EXPOSED VIA MICROARCHITECTURE-LEVEL MODELING, PARTICULARLY THROUGH CLOCK- GATING AND DYNAMIC ADAPTATION. Power dissipation limits have Thus far, most of the work done in the area David M. Brooks emerged as a major constraint in the design of high-level power estimation has been focused of microprocessors. At the low end of the per- at the register-transfer-level (RTL) description Pradip Bose formance spectrum, namely in the world of in the processor design flow. Only recently have handheld and portable devices or systems, we seen a surge of interest in estimating power Stanley E. Schuster power has always dominated over perfor- at the microarchitecture definition stage, and mance (execution time) as the primary design specific work on power-efficient microarchi- Hans Jacobson issue. Battery life and system cost constraints tecture design has been reported.2-8 drive the design team to consider power over Here, we describe the approach of using Prabhakar N. Kudva performance in such a scenario. energy-enabled performance simulators in Increasingly, however, power is also a key early design. We examine some of the emerg- Alper Buyuktosunoglu design issue in the workstation and server mar- ing paradigms in processor design and com- kets (see Gowan et al.)1 In this high-end arena ment on their inherent power-performance John-David Wellman the increasing microarchitectural complexities, characteristics. clock frequencies, and die sizes push the chip- Victor Zyuban level—and hence the system-level—power Power-performance efficiency consumption to such levels that traditionally See the “Power-performance fundamentals” Manish Gupta air-cooled multiprocessor server boxes may box. -

Intel® G31 Express Chipset Product Brief

Product Brief Intel® G31 Express Chipset Intel® G31 Express Chipset Flexibility and scalability for essential computing The Intel® G31 Express Chipset supports Intel’s upcoming 45nm processors and enables Windows Vista* premium experience for value conscious consumers. The Intel G31 Express Chipset Desktop PC platforms, combined with either the Intel® Core™2 Duo or the Intel® Core™2 Quad processor, deliver new technologies and innovating capabilities for all consumers. With a 1333MHz system bus, DDR2 memory technology and support for Windows Vista Premium, the Intel G31 Express chipset enables scalability and performance for essential computing. Support for 45nm processor technology and Intel® Fast Memory Access (Intel® FMA) provide increased system performance for today’s computing needs. The Intel G31 Express Chipset enables a Intel® I/O Controller Hub (Intel® ICH7/R) balanced platform for everyday computing needs. The Intel G31 Express Chipset elevates storage performance with Serial Intel® Viiv™ processor technology ATA (SATA) and enhancements to Intel® Matrix Storage Technology2. Intel® Viiv™ processor technology1 is a set of PC technologies designed This chipset has four integrated SATA ports for transfer rates up to 3 for the enjoyment of digital entertainment in the home. The Intel G31 Gb/s (300 MB/s) to SATA hard drives or optical devices. Support for RAID Express Chipset supports Intel Viiv processor technology with either the 0, 1, 5 and 10 allows for different RAID capabilities that address specific Intel® ICH7R or ICH7DH SKUs. needs and usages. For example, critical data can be stored on one array designed for high reliability, while performance-intensive applications like Faster System Performance games can reside on a separate array designed for maximum The Intel® G31 Express Chipset Graphics Memory Controller Hub (GMCH) performance. -

Hardware Architecture

Hardware Architecture Components Computing Infrastructure Components Servers Clients LAN & WLAN Internet Connectivity Computation Software Storage Backup Integration is the Key ! Security Data Network Management Computer Today’s Computer Computer Model: Von Neumann Architecture Computer Model Input: keyboard, mouse, scanner, punch cards Processing: CPU executes the computer program Output: monitor, printer, fax machine Storage: hard drive, optical media, diskettes, magnetic tape Von Neumann architecture - Wiki Article (15 min YouTube Video) Components Computer Components Components Computer Components CPU Memory Hard Disk Mother Board CD/DVD Drives Adaptors Power Supply Display Keyboard Mouse Network Interface I/O ports CPU CPU CPU – Central Processing Unit (Microprocessor) consists of three parts: Control Unit • Execute programs/instructions: the machine language • Move data from one memory location to another • Communicate between other parts of a PC Arithmetic Logic Unit • Arithmetic operations: add, subtract, multiply, divide • Logic operations: and, or, xor • Floating point operations: real number manipulation Registers CPU Processor Architecture See How the CPU Works In One Lesson (20 min YouTube Video) CPU CPU CPU speed is influenced by several factors: Chip Manufacturing Technology: nm (2002: 130 nm, 2004: 90nm, 2006: 65 nm, 2008: 45nm, 2010:32nm, Latest is 22nm) Clock speed: Gigahertz (Typical : 2 – 3 GHz, Maximum 5.5 GHz) Front Side Bus: MHz (Typical: 1333MHz , 1666MHz) Word size : 32-bit or 64-bit word sizes Cache: Level 1 (64 KB per core), Level 2 (256 KB per core) caches on die. Now Level 3 (2 MB to 8 MB shared) cache also on die Instruction set size: X86 (CISC), RISC Microarchitecture: CPU Internal Architecture (Ivy Bridge, Haswell) Single Core/Multi Core Multi Threading Hyper Threading vs. -

Multiprocessing Contents

Multiprocessing Contents 1 Multiprocessing 1 1.1 Pre-history .............................................. 1 1.2 Key topics ............................................... 1 1.2.1 Processor symmetry ...................................... 1 1.2.2 Instruction and data streams ................................. 1 1.2.3 Processor coupling ...................................... 2 1.2.4 Multiprocessor Communication Architecture ......................... 2 1.3 Flynn’s taxonomy ........................................... 2 1.3.1 SISD multiprocessing ..................................... 2 1.3.2 SIMD multiprocessing .................................... 2 1.3.3 MISD multiprocessing .................................... 3 1.3.4 MIMD multiprocessing .................................... 3 1.4 See also ................................................ 3 1.5 References ............................................... 3 2 Computer multitasking 5 2.1 Multiprogramming .......................................... 5 2.2 Cooperative multitasking ....................................... 6 2.3 Preemptive multitasking ....................................... 6 2.4 Real time ............................................... 7 2.5 Multithreading ............................................ 7 2.6 Memory protection .......................................... 7 2.7 Memory swapping .......................................... 7 2.8 Programming ............................................. 7 2.9 See also ................................................ 8 2.10 References ............................................. -

Microcontroller Serial Interfaces

Microcontroller Serial Interfaces Dr. Francesco Conti [email protected] Microcontroller System Architecture Each MCU (micro-controller unit) is characterized by: • Microprocessor • 8,16,32 bit architecture • Usually “simple” in-order microarchitecture, no FPU Example: STM32F101 MCU Microcontroller System Architecture Each MCU (micro-controller unit) is characterized by: • Microprocessor • 8,16,32 bit architecture • Usually “simple” in-order microarchitecture, no FPU • Memory • RAM (from 512B to 256kB) • FLASH (from 512B to 1MB) Example: STM32F101 MCU Microcontroller System Architecture Each MCU (micro-controller unit) is characterized by: • Microprocessor • 8,16,32 bit architecture • Usually “simple” in-order microarchitecture, no FPU • Memory • RAM (from 512B to 256kB) • FLASH (from 512B to 1MB) • Peripherals • DMA • Timer • Interfaces • Digital Interfaces • Analog Timer DMAs Example: STM32F101 MCU Microcontroller System Architecture Each MCU (micro-controller unit) is characterized by: • Microprocessor • 8,16,32 bit architecture • Usually “simple” in-order microarchitecture, no FPU • Memory • RAM (from 512B to 256kB) • FLASH (from 512B to 1MB) • Peripherals • DMA • Timer • Interfaces • Digital • Analog • Interconnect Example: STM32F101 MCU • AHB system bus (ARM-based MCUs) • APB peripheral bus (ARM-based MCUs) Microcontroller System Architecture Each MCU (micro-controller unit) is characterized by: • Microprocessor • 8,16,32 bit architecture • Usually “simple” in-order microarchitecture, no FPU • Memory • RAM (from 512B to 256kB) • FLASH -

A Programming Model and Processor Architecture for Heterogeneous Multicore Computers

A PROGRAMMING MODEL AND PROCESSOR ARCHITECTURE FOR HETEROGENEOUS MULTICORE COMPUTERS A DISSERTATION SUBMITTED TO THE DEPARTMENT OF ELECTRICAL ENGINEERING AND THE COMMITTEE ON GRADUATE STUDIES OF STANFORD UNIVERSITY IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY Michael D. Linderman February 2009 c Copyright by Michael D. Linderman 2009 All Rights Reserved ii I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. (Professor Teresa H. Meng) Principal Adviser I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. (Professor Mark Horowitz) I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. (Professor Krishna V. Shenoy) Approved for the University Committee on Graduate Studies. iii Abstract Heterogeneous multicore computers, those systems that integrate specialized accelerators into and alongside multicore general-purpose processors (GPPs), provide the scalable performance needed by computationally demanding information processing (informatics) applications. However, these systems often feature instruction sets and functionality that significantly differ from GPPs and for which there is often little or no sophisticated compiler support. Consequently developing applica- tions for these systems is difficult and developer productivity is low. This thesis presents Merge, a general-purpose programming model for heterogeneous multicore systems. 
The Merge programming model enables the programmer to leverage different processor- specific or application domain-specific toolchains to create software modules specialized for differ- ent hardware configurations; and provides language mechanisms to enable the automatic mapping of processor-agnostic applications to these processor-specific modules. -

Reverse Engineering X86 Processor Microcode

Reverse Engineering x86 Processor Microcode Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz, Ruhr-University Bochum https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/koppe This paper is included in the Proceedings of the 26th USENIX Security Symposium August 16–18, 2017 • Vancouver, BC, Canada ISBN 978-1-931971-40-9 Open access to the Proceedings of the 26th USENIX Security Symposium is sponsored by USENIX Reverse Engineering x86 Processor Microcode Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz Ruhr-Universitat¨ Bochum Abstract hardware modifications [48]. Dedicated hardware units to counter bugs are imperfect [36, 49] and involve non- Microcode is an abstraction layer on top of the phys- negligible hardware costs [8]. The infamous Pentium fdiv ical components of a CPU and present in most general- bug [62] illustrated a clear economic need for field up- purpose CPUs today. In addition to facilitate complex and dates after deployment in order to turn off defective parts vast instruction sets, it also provides an update mechanism and patch erroneous behavior. Note that the implementa- that allows CPUs to be patched in-place without requiring tion of a modern processor involves millions of lines of any special hardware. While it is well-known that CPUs HDL code [55] and verification of functional correctness are regularly updated with this mechanism, very little is for such processors is still an unsolved problem [4, 29]. known about its inner workings given that microcode and the update mechanism are proprietary and have not been Since the 1970s, x86 processor manufacturers have throughly analyzed yet. -

Intel(R) Software Guard Extensions Developer Guide

Intel® Software Guard Extensions (Intel® SGX) Developer Guide Intel(R) Software Guard Extensions Developer Guide Legal Information No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest forecast, sched- ule, specifications and roadmaps. The products and services described may contain defects or errors known as errata which may cause deviations from published specifications. Current char- acterized errata are available on request. Intel technologies features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at Intel.com, or from the OEM or retailer. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting www.in- tel.com/design/literature.htm. Intel, the Intel logo, Xeon, and Xeon Phi are trademarks of Intel Corporation in the U.S. and/or other countries. Optimization Notice Intel's compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel micro- processors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. -

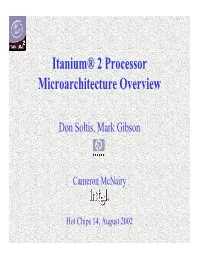

Itanium® 2 Processor Microarchitecture Overview

Itanium® 2 Processor Microarchitecture Overview Don Soltis, Mark Gibson Cameron McNairy Hot Chips 14, August 2002 Itanium® 2 Processor Overview 16KB16KB L1L1 I-cacheI-cache BranchBranch PredictPredict 2 bundles (128 bits each) InstrInstr 22 InstrInstr 11 InstrInstr 00 TemplateTemplate Issue up to 6 instructions to the 11 pipes FF FF M/AM/A M/AM/A M/AM/A M/AM/A I/AI/A I/AI/A BB BB BB 22 FMACsFMACs 66 ALUsALUs BranchBranch UnitUnit 6 Opnds 2 Preds 16KB16KB L1L1 D-cacheD-cache 12 Opnds 2 Results 4 Preds 6 Results 2 Loads, 2 Stores 6 Predicates 128 FP GPRs 128 Int GPRs Consumed 1 Predicate 3 Predicates 128 FP GPRs 4 Predicates 128 Int GPRs 12 Predicates Produced Block Diagram 64 Predicate Registers 4 Loads 64 Predicate Registers 2 Stores 256KB256KB L2L2 CacheCache 3MB3MB L3L3 CacheCache BusBus InterfaceInterface Hot Chips 14 Itanium® 2 Processor Overview EXEEXE DETDET WRBWRB IPGIPG ROTROT EXPEXP RENREN REGREG FP1FP1 FP2FP2 FP3FP3 FP4FP4 WRBWRB IPG: Instruction Pointer Generate, Instruction address to L1 I-cache ROT: Present 2 Instruction Bundles from L1 I-cache to dispersal hardware EXP: Disperse up to 6 instruction syllables from the 2 instruction bundles REN: Rename (or convert) virtual register IDs to physical register IDs REG: Register file read, or bypass results in flight as operands EXE: Execute integer instructions; generate results and predicates DET: Detect exceptions, traps, etc. FP1-4: Execute floating point instructions; generate results and predicates Main Execution Unit Pipeline WRB: Write back results to the register file -

Microarchitecture-Level Soc Design 27 Young-Hwan Park, Amin Khajeh, Jun Yong Shin, Fadi Kurdahi, Ahmed Eltawil, and Nikil Dutt

Microarchitecture-Level SoC Design 27 Young-Hwan Park, Amin Khajeh, Jun Yong Shin, Fadi Kurdahi, Ahmed Eltawil, and Nikil Dutt Abstract In this chapter we consider the issues related to integrating microarchitectural IP blocks into complex SoCs while satisfying performance, power, thermal, and reliability constraints. We first review different abstraction levels for SoC design that promote IP reuse, and which enable fast simulation for early functional validation of the SoC platform. Since SoCs must satisfy a multitude of interrelated constraints, we then present high-level power, thermal, and reliability models for predicting these constraints. These constraints are not unrelated and their interactions must be considered, modeled and evaluated. Once constraints are modeled, we must explore the design space trading off performance, power and reliability. Several case studies are presented illustrating how the design space can be explored across layers, and what modifications could be applied at design time and/or runtime to deal with reliability issues that may arise. Acronyms AHB Advanced High-performance Bus APB Advanced Peripheral Bus ASIC Application-Specific Integrated Circuit BER Bit Error Rate BLB Bit Lock Block Y.-H. Park Digital Media and Communications R&D Center, Samsung Electronics, Seoul, Korea e-mail: [email protected] A. Khajeh Broadcom Corp., San Jose, CA, USA e-mail: [email protected] J. Yong Shin • F. Kurdahi () • A. Eltawil • N. Dutt Center for Embedded and Cyber-Physical Systems, University of California Irvine, Irvine, CA, USA e-mail: [email protected]; [email protected]; [email protected]; [email protected] © Springer Science+Business Media Dordrecht 2017 867 S.