Design & Simulation of 32-Bit Floating Point

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Chapter 2 Data Representation in Computer Systems 2.1 Introduction

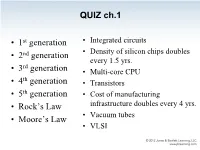

QUIZ ch.1: Match the terms with the descriptions.
Terms: • 1st generation • 2nd generation • 3rd generation • 4th generation • 5th generation • Rock’s Law • Moore’s Law
Descriptions: • Integrated circuits • Density of silicon chips doubles every 1.5 yrs. • Multi-core CPU • Transistors • Cost of manufacturing infrastructure doubles every 4 yrs. • Vacuum tubes • VLSI

QUIZ ch.1: The highest # of transistors in a CPU commercially available today is about: • 100 million • 500 million • 1 billion • 2 billion • 2.5 billion • 5 billion

“As of 2012, the highest transistor count in a commercially available CPU is over 2.5 billion transistors, in Intel's 10-core Xeon Westmere-EX. Xilinx currently holds the "world-record" for an FPGA containing 6.8 billion transistors.” Source: Wikipedia – Transistor_count

Chapter 2 Data Representation in Computer Systems

2.1 Introduction

• A bit is the most basic unit of information in a computer.
– It is a state of “on” or “off” in a digital circuit.
– Sometimes these states are “high” or “low” voltage instead of “on” or “off.”
• A byte is a group of eight bits.
– A byte is the smallest possible addressable unit of computer storage.
– The term “addressable” means that a particular byte can be retrieved according to its location in memory.
• A word is a contiguous group of bytes.
– Words can be any number of bits or bytes.
– Word sizes of 16, 32, or 64 bits are most common.
– Intel: 16 bits = 1 word, 32 bits = double word, 64 bits = quad word
– In a word-addressable system, a word is the smallest addressable unit of storage.

Data Representation in Computer Systems

© Jones & Bartlett Learning, LLC. NOT FOR SALE OR DISTRIBUTION.

There are 10 kinds of people in the world: those who understand binary and those who don’t. (Anonymous)

CHAPTER 2: Data Representation in Computer Systems

2.1 INTRODUCTION

The organization of any computer depends considerably on how it represents numbers, characters, and control information. The converse is also true: Standards and conventions established over the years have determined certain aspects of computer organization. This chapter describes the various ways in which computers can store and manipulate numbers and characters. The ideas presented in the following sections form the basis for understanding the organization and function of all types of digital systems.

The most basic unit of information in a digital computer is called a bit, which is a contraction of binary digit. In the concrete sense, a bit is nothing more than a state of “on” or “off” (or “high” and “low”) within a computer circuit. In 1964, the designers of the IBM System/360 mainframe computer established a convention of using groups of 8 bits as the basic unit of addressable computer storage.

Chap02: Data Representation in Computer Systems

CHAPTER 2 Data Representation in Computer Systems

2.1 Introduction
2.2 Positional Numbering Systems
2.3 Converting Between Bases
2.3.1 Converting Unsigned Whole Numbers
2.3.2 Converting Fractions
2.3.3 Converting between Power-of-Two Radices
2.4 Signed Integer Representation
2.4.1 Signed Magnitude
2.4.2 Complement Systems
2.4.3 Excess-M Representation for Signed Numbers
2.4.4 Unsigned Versus Signed Numbers
2.4.5 Computers, Arithmetic, and Booth’s Algorithm
2.4.6 Carry Versus Overflow
2.4.7 Binary Multiplication and Division Using Shifting
2.5 Floating-Point Representation
2.5.1 A Simple Model
2.5.2 Floating-Point Arithmetic
2.5.3 Floating-Point Errors
2.5.4 The IEEE-754 Floating-Point Standard
2.5.5 Range, Precision, and Accuracy
2.5.6 Additional Problems with Floating-Point Numbers
2.6 Character Codes
2.6.1 Binary-Coded Decimal
2.6.2 EBCDIC
2.6.3 ASCII
2.6.4 Unicode
2.7 Error Detection and Correction
2.7.1 Cyclic Redundancy Check
2.7.2 Hamming Codes
2.7.3 Reed-Solomon
Chapter Summary

CMPS375 Class Notes (Chap02), Dr. Kuo-pao Yang

2.1 Introduction

This chapter describes the various ways in which computers can store and manipulate numbers and characters. Bit: The most basic unit of information in a digital computer is called a bit, which is a contraction of binary digit.

Representing Information in English Until 1598

In the twenty-first century, digital computers are everywhere. You almost certainly have one with you now, although you may call it a “phone.” You are more likely to be reading this book on a digital device than on paper. We do so many things with our digital devices that we may forget the original purpose: computation. When Dr. Howard Aiken was in charge of the huge, mechanical Harvard Mark I calculator during World War II, he wasn’t happy unless the machine was “making numbers,” that is, completing the numerical calculations needed for the war effort.

Figure 1-1: Howard Aiken, center, and Grace Murray Hopper, on Aiken’s left, with other members of the Bureau of Ordnance Computation Project, in front of the Harvard Mark I computer at Harvard University, 1944. (U.S. Department of Defense)

We still use computers for purely computational tasks, to make numbers. It may be less obvious, but the other things for which we use computers today are, underneath, also computational tasks. To make that phone call, your phone, really a computer, converts an electrical signal that’s an analog of your voice to numbers, encodes and compresses them, and passes them onward to a cellular radio which is itself controlled by numbers. Numbers are fundamental to our digital devices, and so to understand those digital devices, we have to understand the numbers that flow through them.

1.1 Numbers as Abstractions

It is easy to suppose that as soon as humans began to accumulate possessions, they wanted to count them and make a record of the count.

Textbook Section 6.1 Draft

Chapter Six: A Computing Machine

6.1 Representing Information
6.2 TOY Machine
6.3 Machine Language Programming
6.4 Simulator

OUR GOAL IN THIS CHAPTER IS to show you how simple the computer that you’re using really is. We will describe in detail a simple imaginary machine that has many of the characteristics of real processors at the heart of the computational devices that surround us. You may be surprised to learn that many, many machines share these same properties, even some of the very first computers that were developed. Accordingly, we are able to tell the story in historical context. Imagine a world without computers, and what sort of device might be received with enthusiasm, and that is not far from what we have! We tell our story from the standpoint of scientific computing; there is an equally fascinating story from the standpoint of commercial computing that we touch on just briefly.

Next, our aim is to convince you that the basic concepts and constructs that we covered in Java programming are not so difficult to implement on a simple machine, using its own machine language. We will consider in detail conditionals, loops, functions, arrays, and linked structures. Since these are the same basic tools that we examined for Java, it follows that several of the computational tasks that we addressed in the first part of this book are not difficult to address at a lower level. This simple machine is a link on the continuum between your computer and the actual hardware circuits that change state to reflect the action of your programs.

ISLA112P50 Datasheet

DATASHEET ISLA112P50: 12-Bit, 500MSPS A/D Converter. FN7604, Rev.3.0, Jul 6, 2021

The ISLA112P50 is a low-power, high-performance, 500MSPS analog-to-digital converter designed with Renesas’ proprietary FemtoCharge™ technology on a standard CMOS process. The ISLA112P50 is part of a pin-compatible portfolio of 8, 10 and 12-bit A/Ds. This device is an upgrade of the KAD551XP-50 product family and is pin similar.

The device utilizes two time-interleaved 250MSPS unit A/Ds to achieve the ultimate sample rate of 500MSPS. A single 500MHz conversion clock is presented to the converter, and all interleave clocking is managed internally. The proprietary Interleave Engine (I2E) performs automatic fine correction of offset, gain, and sample time skew mismatches between the unit A/Ds to optimize performance. No external interleaving algorithm is required.

A serial peripheral interface (SPI) port allows for extensive configurability of the A/D. The SPI also controls the interleave correction circuitry, allowing the system to issue continuous calibration commands as well as configure many dynamic parameters.

Digital output data is presented in selectable LVDS or CMOS formats. The ISLA112P50 is available in a 72 Ld QFN package with an exposed paddle.

Features
• 1.15GHz Analog Input Bandwidth
• 90fs Clock Jitter
• Automatic Fine Interleave Correction Calibration
• Multiple Chip Time Alignment Support via the Synchronous Clock Divider Reset
• Programmable Gain, Offset and Skew Control
• Over-Range Indicator
• Clock Phase Selection
• Nap and Sleep Modes
• Two’s Complement, Gray Code or Binary Data Format
• DDR LVDS-Compatible or LVCMOS Outputs
• Programmable Test Patterns and Internal Temperature Sensor

Applications
• Radar and Electronic/Signal Intelligence

Topic 1: Signed Number Representations

Topic 1: Signed number representations

In computing, signed number representations are required to encode negative numbers in binary number systems. In mathematics, negative numbers in any base are represented by prefixing them with a minus sign ("−"). However, in computer hardware, numbers are represented only as sequences of bits, without extra symbols. The four best-known methods of extending the binary numeral system to represent signed numbers are: sign-and-magnitude, ones' complement, two's complement, and offset binary. Some of the alternative methods use implicit instead of explicit signs, such as negative binary, using the base −2. Corresponding methods can be devised for other bases, whether positive, negative, fractional, or other elaborations on such themes. There is no definitive criterion by which any of the representations is universally superior. For integers, the representation used in most current computing devices is two's complement, although the Unisys ClearPath Dorado series mainframes use ones' complement.

Representation of Signed Binary Numbers

The most significant bit (MSB) of a signed binary number indicates the sign of the number, so it is also called the sign bit. A positive sign is represented by placing ‘0’ in the sign bit, and a negative sign by placing ‘1’ in the sign bit. If the signed binary number contains N bits, only N−1 bits represent the magnitude of the number, since one bit (the MSB) is reserved for the sign. There are three types of representations for signed binary numbers:

• Sign-magnitude form
• 1’s complement form
• 2’s complement form

The representation of a positive number is the same in all three forms.
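The three forms listed above can be compared side by side with a small sketch. The function names and the default 8-bit width are illustrative assumptions, not part of the quoted material; the code only shows one plausible way to produce each bit pattern.

```python
def sign_magnitude(value: int, bits: int = 8) -> str:
    """Sign bit (MSB) followed by the magnitude in the remaining bits-1 bits."""
    magnitude_bits = bits - 1
    if abs(value) >= 1 << magnitude_bits:
        raise ValueError("magnitude does not fit in the available bits")
    sign = "1" if value < 0 else "0"
    return sign + format(abs(value), f"0{magnitude_bits}b")


def ones_complement(value: int, bits: int = 8) -> str:
    """Negative numbers are the bitwise inverse of the positive pattern."""
    if abs(value) >= 1 << (bits - 1):
        raise ValueError("value does not fit in the available bits")
    mask = (1 << bits) - 1
    pattern = value if value >= 0 else ~(-value) & mask
    return format(pattern, f"0{bits}b")


def twos_complement(value: int, bits: int = 8) -> str:
    """Negative numbers are the inverted positive pattern plus one."""
    if not -(1 << (bits - 1)) <= value < (1 << (bits - 1)):
        raise ValueError("value does not fit in the available bits")
    return format(value & ((1 << bits) - 1), f"0{bits}b")


if __name__ == "__main__":
    for n in (5, -5):
        print(n, sign_magnitude(n), ones_complement(n), twos_complement(n))
```

For +5 all three functions return the same pattern, while for −5 they differ only in how the magnitude bits are encoded; the sign bit is 1 in every case.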

MT-009: Data Converter Codes-Can You Decode Them?

MT-009 TUTORIAL (Rev.A, 10/08, WK)

Data Converter Codes: Can You Decode Them? by Walt Kester

INTRODUCTION

Analog-to-digital converters (ADCs) translate analog quantities, which are characteristic of most phenomena in the "real world," to digital language, used in information processing, computing, data transmission, and control systems. Digital-to-analog converters (DACs) are used in transforming transmitted or stored data, or the results of digital processing, back to "real-world" variables for control, information display, or further analog processing. The relationships between inputs and outputs of DACs and ADCs are shown in Figure 1.

Figure 1: Digital-to-Analog Converter (DAC) and Analog-to-Digital Converter (ADC) Input and Output Definitions

Analog input variables, whatever their origin, are most frequently converted by transducers into voltages or currents. These electrical quantities may appear (1) as fast or slow "dc" continuous direct measurements of a phenomenon in the time domain, (2) as modulated ac waveforms (using a wide variety of modulation techniques), (3) or in some combination, with a spatial configuration of related variables to represent shaft angles. Examples of the first are outputs of thermocouples, potentiometers on dc references, and analog computing circuitry; of the second, "chopped" optical measurements, ac strain gage or bridge outputs, and digital signals buried in noise; and of the third, synchros and resolvers.

The analog variables to be dealt with in this article are those involving voltages or currents representing the actual analog phenomena.

TI II: Computer Architecture Data Representation and Computer Arithmetic

Prof. Dr.-Ing. Jochen Schiller, Computer Systems & Telematics

TI II: Computer Architecture. Data Representation and Computer Arithmetic

[Title-slide figure: 32-bit floating-point layout with bit 31 = Sg (sign), bits 30 to 23 = Characteristic/Exponent, bits 22 to 0 = Significand/Mantissa/Fraction; diagram labels: Systems, Representations, Basic Arithmetic, ALU; annotations: (x + y) + z ≠ x + (y + z); 1 + ε = 1, ε > 0; x + y < x, y > 0]

Content

1. Introduction
- Single Processor Systems
- Historical overview
- Six-level computer architecture
2. Data representation and Computer arithmetic
- Data and number representation
- Basic arithmetic
3. Microarchitecture
- Microprocessor architecture
- Microprogramming
- Pipelining
4. Instruction Set Architecture
- CISC vs. RISC
- Data types, Addressing, Instructions
- Assembler
5. Memories
- Hierarchy, Types
- Physical & Virtual Memory
- Segmentation & Paging
- Caches

Computer Arithmetic

Basics: How to operate on single bits?
- Combinational (or: combinatorial) circuits (pure logic), sequential circuits (includes memory)
- See lectures on Boolean algebra, circuits, semiconductors etc.
https://en.wikipedia.org/wiki/Logic_gate
https://en.wikipedia.org/wiki/Flip-flop_(electronics)

Here: computer arithmetic serves as an example for handling larger data units (tables, graphics, …)
1. Some more formal basics
2. Methods and circuits for the implementation of the four basic operations +, -, *, /
3. Operation of an ALU (Arithmetic Logic Unit) of a computer

Formal Basics

Humans: typically use the decimal system for calculations (although other systems exist)
Computers:
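The first two relations on the title slide, (x + y) + z ≠ x + (y + z) and 1 + ε = 1 for ε > 0, can be reproduced with ordinary floating-point numbers. The following is a minimal sketch that assumes Python floats are IEEE 754 binary64 values, which holds on common platforms; the specific operands are chosen for illustration and do not come from the slides.

```python
# Non-associativity: regrouping the same three addends changes the rounding.
x, y, z = 0.1, 0.2, 0.3
left = (x + y) + z
right = x + (y + z)
print(left == right)   # the two groupings round differently

# Absorption: a positive epsilon far below the spacing of floats near 1.0
# is lost entirely when added to 1.0.
eps = 1e-17
absorbed = (1.0 + eps == 1.0)
print(absorbed)
```

The third relation, x + y < x with y > 0, is not covered here, since the slide does not say which number format it refers to.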

APOLOS Prerequisite

APOLOS: Prerequisite for Subjects Computer Architectures & Logic Systems and Processors

Richard Šusta, Department of Control Engineering, CTU-FEE in Prague. Version 1.1 from September 25, 2017

Contents

1 Introduction to Prerequisite
2 Logic functions
2.1 Description of logic functions by truth tables
2.2 Value X - don't care
2.3 Truth table defined by sets of values
2.4 Karnaugh map
2.4.1 Karnaugh maps for different sizes
2.5 Overview of the main logic functions
2.6 Operators and logic functions
2.7 Logical diagram
2.7.1 Bubbles of negations
2.7.2 Implementation of logical diagrams
2.7.3 Conversion

Double Precision Floating-Point Format

Double precision floating-point format

In computing, double precision is a computer numbering format that occupies two adjacent storage locations in computer memory. A double precision number, sometimes simply called a double, may be defined to be an integer, fixed point, or floating point (in which case it is often referred to as FP64). Modern computers with 32-bit storage locations use two memory locations to store a 64-bit double precision number (a single storage location can hold a single precision number). Double precision floating point is an IEEE 754 standard for encoding binary or decimal floating point numbers in 64 bits (8 bytes).

Floating point precisions
IEEE 754:
• 16-bit: Half (binary16)
• 32-bit: Single (binary32), decimal32
• 64-bit: Double (binary64), decimal64
• 128-bit: Quadruple (binary128), decimal128
Other: Minifloat · Extended precision · Arbitrary-precision

Double precision binary floating-point format

Double precision binary floating-point is a commonly used format on PCs, due to its wider range over single precision floating point, even if at a performance and bandwidth cost. As with single precision floating point format, it lacks precision on integer numbers when compared with an integer format of the same size. It is commonly known simply as double. The IEEE 754 standard defines a double as:

• Sign bit: 1 bit
• Exponent width: 11 bits
• Significand precision: 53 bits (52 explicitly stored)

The format is written with the significand having an implicit integer bit of value 1, unless the written exponent is all zeros. With the 52 bits of the fraction significand appearing in the memory format, the total precision is therefore 53 bits (approximately 16 decimal digits, 53 log10(2) ≈ 15.955).
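The field widths given above (1 sign bit, 11 exponent bits, 52 stored fraction bits) can be checked by unpacking a double into its raw 64-bit pattern. This is a minimal sketch using Python's standard `struct` module; the helper name `decode_double` is hypothetical, and the exponent bias of 1023 mentioned in the comments is a property of IEEE 754 binary64 that the excerpt itself does not state.

```python
import struct


def decode_double(x: float) -> tuple:
    """Split a binary64 value into (sign, biased exponent, stored fraction)."""
    # Pack as a big-endian double, then reinterpret the 8 bytes as a 64-bit integer.
    (raw,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = raw >> 63                  # 1 bit
    exponent = (raw >> 52) & 0x7FF    # 11 bits, biased by 1023 in binary64
    fraction = raw & ((1 << 52) - 1)  # 52 explicitly stored bits
    return sign, exponent, fraction


if __name__ == "__main__":
    print(decode_double(1.0))
    print(decode_double(-2.0))
    # With 53 bits of precision, 2**53 + 1 has no exact double representation.
    print(float(2**53 + 1) == float(2**53))
```

The last line illustrates the remark about integers: a 64-bit integer format can hold 2**53 + 1 exactly, while a double rounds it to a neighbouring representable value.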

Chapter 2 Fundamentals of Sampled Data Systems

ANALOG-DIGITAL CONVERSION

1. Data Converter History
2. Fundamentals of Sampled Data Systems
2.1 Coding and Quantizing
2.2 Sampling Theory
2.3 Data Converter AC Errors
2.4 General Data Converter Specifications
2.5 Defining the Specifications
3. Data Converter Architectures
4. Data Converter Process Technology
5. Testing Data Converters
6. Interfacing to Data Converters
7. Data Converter Support Circuits
8. Data Converter Applications
9. Hardware Design Techniques
I. Index

CHAPTER 2: FUNDAMENTALS OF SAMPLED DATA SYSTEMS

SECTION 2.1: CODING AND QUANTIZING

Walt Kester, Dan Sheingold, James Bryant

Analog-to-digital converters (ADCs) translate analog quantities, which are characteristic of most phenomena in the "real world," to digital language, used in information processing, computing, data transmission, and control systems. Digital-to-analog converters (DACs) are used in transforming transmitted or stored data, or the results of digital processing, back to "real-world" variables for control, information display, or further analog processing. The relationships between inputs and outputs of DACs and ADCs are shown in Figure 2.1.

Figure 2.1: Digital-to-Analog Converter (DAC) and Analog-to-Digital Converter (ADC) Input and Output Definitions

Analog input variables, whatever their origin, are most frequently converted by transducers into voltages or currents. These electrical quantities may appear (1) as fast or slow "dc" continuous direct measurements of a phenomenon in the time domain, (2) as modulated ac waveforms (using a wide variety of modulation techniques), (3) or in some combination, with a spatial configuration of related variables to represent shaft angles.