Double-Precision Floating-Point Format

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Arithmetic Algorithms for Extended Precision Using Floating-Point Expansions Mioara Joldes, Olivier Marty, Jean-Michel Muller, Valentina Popescu

Mioara Joldes, Olivier Marty, Jean-Michel Muller, Valentina Popescu. Arithmetic algorithms for extended precision using floating-point expansions. IEEE Transactions on Computers, Institute of Electrical and Electronics Engineers, 2016, 65 (4), pp. 1197-1210. DOI: 10.1109/TC.2015.2441714. HAL Id: hal-01111551v2, https://hal.archives-ouvertes.fr/hal-01111551v2. Submitted on 2 Jun 2015.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Abstract: Many numerical problems require a higher computing precision than the one offered by standard floating-point (FP) formats. One common way of extending the precision is to represent numbers in a multiple-component format. By using the so-called floating-point expansions, real numbers are represented as the unevaluated sum of standard machine-precision FP numbers. This representation offers the simplicity of using directly available, hardware-implemented and highly optimized FP operations.

Floating Points

Jin-Soo Kim ([email protected]), Systems Software & Architecture Lab., Seoul National University. 4190.308: Computer Architecture, Fall 2018.

Floating Points

▪ How to represent fractional values with a finite number of bits?
• 0.1
• 0.612
• 3.14159265358979323846264338327950288...

▪ Wide ranges of numbers
• 1 light-year = 9,460,730,472,580.8 km
• The radius of a hydrogen atom: 0.000000000025 m

▪ Representation
• Bits to the right of the "binary point" represent fractional powers of 2
• The bit string $b_i b_{i-1} \cdots b_2 b_1 b_0 \,.\, b_{-1} b_{-2} b_{-3} \cdots b_{-j}$, whose place values are $2^i, 2^{i-1}, \dots, 4, 2, 1, 1/2, 1/4, 1/8, \dots, 2^{-j}$, represents the rational number $\sum_{k=-j}^{i} b_k \times 2^k$

▪ Examples:

| Value | Representation |
| --- | --- |
| 5 3/4 | $101.11_2$ |
| 2 7/8 | $10.111_2$ |
| 63/64 | $0.111111_2$ |

▪ Observations
• Divide by 2 by shifting right
• Multiply by 2 by shifting left
• Numbers of the form $0.111111\ldots_2$ are just below 1.0
– $1/2 + 1/4 + 1/8 + \dots + 1/2^i + \dots \to 1.0$
– Use the notation $1.0 - \epsilon$

▪ Representable numbers
• Can only exactly represent numbers of the form $x / 2^k$
• Other numbers have repeating bit representations

| Value | Representation |
| --- | --- |
| 1/3 | $0.0101010101[01]\ldots_2$ |
| 1/5 | $0.001100110011[0011]\ldots_2$ |
| 1/10 | $0.0001100110011[0011]\ldots_2$ |

Fixed Points

▪ p.q fixed-point representation
• Use the rightmost q bits of an integer to represent a fraction
• Example: 17.14 fixed-point representation
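The binary-point expansions in the excerpt above can be reproduced with a short sketch. This is only an illustration, not code from the slides: the function names `to_binary` and `is_exact_in_binary` are hypothetical, and the input is assumed to be a non-negative `Fraction`. It uses the repeated-doubling method, where each doubling of the fractional part yields the next bit to the right of the binary point.

```python
from fractions import Fraction


def to_binary(value: Fraction, frac_bits: int) -> str:
    """Binary expansion of a non-negative value, truncated to frac_bits fractional bits.

    Hypothetical helper for illustration only.
    """
    whole = value.numerator // value.denominator
    frac = value - whole
    digits = []
    for _ in range(frac_bits):
        frac *= 2                                  # shift left by one binary place
        bit = frac.numerator // frac.denominator   # the bit that crossed the point: 0 or 1
        digits.append(str(bit))
        frac -= bit
    return f"{whole:b}." + "".join(digits)


def is_exact_in_binary(value: Fraction) -> bool:
    """True if value has the form x / 2**k, i.e. its reduced denominator is a power of two."""
    d = value.denominator
    return d & (d - 1) == 0


print(to_binary(Fraction(23, 4), 2))     # 5 3/4
print(to_binary(Fraction(1, 10), 13))    # 1/10, truncated after 13 bits
print(is_exact_in_binary(Fraction(1, 10)))
```

Using exact `Fraction` arithmetic rather than `float` keeps the sketch from inheriting the very rounding it is meant to show.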

Decimal Layouts for IEEE 754 Strawman3

IEEE 754-2008. ECMA TC39/TG1, 25 September 2008. Mike Cowlishaw, IBM Fellow. Copyright © IBM Corporation 2008. All rights reserved.

Overview
• Summary of the new standard
• Binary and decimal specifics
• Support in hardware, standards, etc.
• Questions?

IEEE 754 revision
• Started in December 2000 – 7.7 years
– 5.8 years in committee (92 participants + e-mail)
– 1.9 years in ballot (101 voters, 8 ballots, 1200+ comments)
• Removes many ambiguities from 754-1985
• Incorporates IEEE 854 (radix-independent)
• Recommends or requires more operations (functions) and more language support

Formats
• Separates sets of floating-point numbers (and the arithmetic on them) from their encodings ('interchange formats')
• Includes the 754-1985 basic formats:
– binary32, 24 bits ('single')
– binary64, 53 bits ('double')
• Adds three new basic formats:
– binary128, 113 bits ('quad')
– decimal64, 16-digit ('double')
– decimal128, 34-digit ('quad')

Why decimal? A web page…
• Parking at Manchester airport…
• £4.20 per day, for 10 days, calculated on-page using ECMAScript
• Answer shown: £41.99 (Programmer must have truncated to two places.)

Where it costs real money…
• Add 5% sales tax to a $0.70 telephone call, rounded to the nearest cent
• 1.05 × 0.70 using binary double is exactly 0.73499999999999998667732370449812151491641998291015625 (should have been 0.735)
• rounds to $0.73, instead of $0.74
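The sales-tax example above can be checked with a minimal sketch. It assumes Python's standard `decimal` module as a stand-in for the decimal formats the slides discuss, and the variable names are illustrative. `Decimal(float)` converts a stored binary double to its exact decimal value, which makes the hidden error visible before rounding to the nearest cent.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

# Binary double: the literals 1.05 and 0.70 are already binary approximations.
binary_product = 1.05 * 0.70
binary_exact = Decimal(binary_product)  # exact decimal value of the stored double
binary_cents = binary_exact.quantize(CENT, rounding=ROUND_HALF_UP)

# Decimal arithmetic: the operands are represented exactly.
decimal_product = Decimal("1.05") * Decimal("0.70")
decimal_cents = decimal_product.quantize(CENT, rounding=ROUND_HALF_UP)

print(binary_exact)     # slightly below 0.735
print(binary_cents)     # rounded from the binary result
print(decimal_product)  # exactly 0.735 (printed with trailing zero)
print(decimal_cents)    # rounded from the decimal result
```

Rounding half up is an assumption made here so that the tie at 0.735 resolves the way the slide expects. The binary result never reaches the tie, which is why it lands one cent lower.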

Nios II Custom Instruction User Guide

Nios II Custom Instruction User Guide. UG-20286 | 2020.04.27

Contents

1. Nios II Custom Instruction Overview
1.1. Custom Instruction Implementation
1.1.1. Custom Instruction Hardware Implementation
1.1.2. Custom Instruction Software Implementation
2. Custom Instruction Hardware Interface
2.1. Custom Instruction Types
2.1.1. Combinational Custom Instructions
2.1.2. Multicycle Custom Instructions
2.1.3. Extended Custom Instructions
2.1.4. Internal Register File Custom Instructions
2.1.5. External Interface Custom Instructions
3. Custom Instruction Software Interface
3.1. Custom Instruction Software Examples

Hacking in C 2020 the C Programming Language Thom Wiggers

Hacking in C 2020: The C programming language. Thom Wiggers.

Table of Contents
• Introduction
• Undefined behaviour
• Abstracting away from bytes in memory
• Integer representations

The C programming language
• Invented by Dennis Ritchie in 1972–1973
– Another predecessor is B.
• Not one of the first programming languages: ALGOL for example is older.
• Closely tied to the development of the Unix operating system
• Unix and Linux are mostly written in C
• Compilers are widely available for many, many, many platforms
• Still in development: latest release of standard is C18. Popular versions are C99 and C11.
• Many compilers implement extensions, leading to versions such as gnu18, gnu11.
• Default version in GCC: gnu11

Decimal Rounding

Mathematics Instructional Plan – Grade 5

Decimal Rounding

Strand: Number and Number Sense

Topic: Rounding decimals, through the thousandths, to the nearest whole number, tenth, or hundredth.

Primary SOL: 5.1 The student, given a decimal through thousandths, will round to the nearest whole number, tenth, or hundredth.

Materials
• Base-10 blocks
• Decimal Rounding activity sheet (attached)
• "Highest or Lowest" Game (attached)
• Open Number Lines activity sheet (attached)
• Ten-sided number generators with digits 0–9, or decks of cards

Vocabulary: approximate, between, closer to, decimal number, decimal point, hundredth, rounding, tenth, thousandth, whole

Student/Teacher Actions: What should students be doing? What should teachers be doing?

1. Begin with a review of decimal place value: Display a decimal in the thousandths place, such as 34.726, using base-10 blocks. In pairs, have students discuss how to read the number using place-value names, and review the decimal place each digit holds. Briefly have students share their thoughts with the class by asking: What digit is in the tenths place? The hundredths place? The thousandths place? Who would like to share how to say this decimal using place value?

2. Ask, "If this were a monetary amount, $34.726, how would we determine the amount to the nearest cent?" Allow partners or small groups to discuss this question. It may be helpful for students to underline the place that indicates cents (the hundredths place) in order to keep track of the rounding place. Distribute the Open Number Lines activity sheet and display this number line on the board. Guide students to recall that the nearest cent is the same as the nearest hundredth of a dollar.

Scilab Textbook Companion for Numerical Methods for Engineers by S

Scilab Textbook Companion for Numerical Methods For Engineers by S. C. Chapra And R. P. Canale¹

Created by Meghana Sundaresan, Numerical Methods, Chemical Engineering, VNIT

College Teacher: Kailas L. Wasewar

Cross-Checked by

July 31, 2019

¹ Funded by a grant from the National Mission on Education through ICT, http://spoken-tutorial.org/NMEICT-Intro. This Textbook Companion and the Scilab codes written in it can be downloaded from the "Textbook Companion Project" section at the website http://scilab.in

Book Description

Title: Numerical Methods For Engineers
Author: S. C. Chapra And R. P. Canale
Publisher: McGraw Hill, New York
Edition: 5
Year: 2006
ISBN: 0071244298

Scilab numbering policy used in this document and the relation to the above book.

Exa: Example (solved example)
Eqn: Equation (particular equation of the above book)
AP: Appendix to Example (Scilab code that is an appendix to a particular example of the above book)

For example, Exa 3.51 means solved example 3.51 of this book. Sec 2.3 means a Scilab code whose theory is explained in Section 2.3 of the book.

Contents

List of Scilab Codes
1 Mathematical Modelling and Engineering Problem Solving
2 Programming and Software
3 Approximations and Round off Errors
4 Truncation Errors and the Taylor Series
5 Bracketing Methods
6 Open Methods
7 Roots of Polynomials
9 Gauss Elimination
10 LU Decomposition and matrix inverse
11 Special Matrices and gauss seidel
13 One dimensional unconstrained optimization
14 Multidimensional Unconstrained Optimization
15 Constrained

The MPFR Team [email protected] This Manual Documents How to Install and Use the Multiple Precision Floating-Point Reliable Library, Version 2.2.0

MPFR: The Multiple Precision Floating-Point Reliable Library. Edition 2.2.0, September 2005. The MPFR team, [email protected]

This manual documents how to install and use the Multiple Precision Floating-Point Reliable Library, version 2.2.0.

Copyright 1991, 1993, 1994, 1995, 1996, 1997, 1998, 1999, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with the Front-Cover Texts being "A GNU Manual", and with the Back-Cover Texts being "You have freedom to copy and modify this GNU Manual, like GNU software". A copy of the license is included in Appendix A [GNU Free Documentation License], page 30.

Table of Contents

MPFR Copying Conditions
1 Introduction to MPFR
1.1 How to use this Manual
2 Installing MPFR
2.1 How to install
2.2 Other make targets
2.3 Known Build Problems
2.4 Getting the Latest Version of MPFR
3 Reporting Bugs
4 MPFR Basics

Extended Precision Floating Point Arithmetic

Extended Precision Floating Point Numbers for Ill-Conditioned Problems. Daniel Davis, Advisor: Dr. Scott Sarra, Department of Mathematics, Marshall University.

Floating Point Number Systems

Floating point representation is based on scientific notation, where a nonzero real decimal number, $x$, is expressed as $x = \pm S \times 10^E$, where $1 \le S < 10$. The values of $S$ and $E$ are known as the significand and exponent, respectively. When discussing floating points, we are interested in the computer representation of numbers, so we must consider base 2, or binary, rather than base 10.

Precision

• The precision, $p$, of a floating point number system is the number of bits in the significand. This means that any normalized floating point number with precision $p$ can be written as: $x = \pm (1.b_1 b_2 \ldots b_{p-2} b_{p-1})_2 \times 2^E$
• The smallest $x$ such that $x > 1$ is then:

Extended Precision

An increasing number of problems exist for which IEEE double is insufficient. These include modeling of dynamical systems such as our solar system or the climate, and numerical cryptography. Several arbitrary precision libraries have been developed, but are too slow to be practical for many complex applications. David Bailey's QD library may be used

Patriot Missile Failure

Patriot missile defense modules have been used by the U.S. Army since the mid-1960s. On February 25, 1991, a Patriot protecting an Army barracks in Dhahran, Saudi Arabia failed to intercept a SCUD missile, leading to the death of 28 Americans. This failure was caused by floating point rounding error. The system measured time in tenths of seconds, using

Chapter 2 Data Representation in Computer Systems 2.1 Introduction

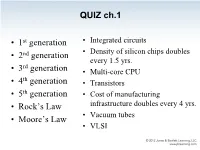

QUIZ ch.1

Terms:
• 1st generation
• 2nd generation
• 3rd generation
• 4th generation
• 5th generation
• Rock's Law
• Moore's Law

Descriptions:
• Integrated circuits
• Density of silicon chips doubles every 1.5 yrs.
• Multi-core CPU
• Transistors
• Cost of manufacturing infrastructure doubles every 4 yrs.
• Vacuum tubes
• VLSI

QUIZ ch.1

The highest # of transistors in a CPU commercially available today is about:
• 100 million
• 500 million
• 1 billion
• 2 billion
• 2.5 billion
• 5 billion

"As of 2012, the highest transistor count in a commercially available CPU is over 2.5 billion transistors, in Intel's 10-core Xeon Westmere-EX. Xilinx currently holds the "world-record" for an FPGA containing 6.8 billion transistors." Source: Wikipedia – Transistor_count

Chapter 2: Data Representation in Computer Systems

2.1 Introduction

• A bit is the most basic unit of information in a computer.
– It is a state of "on" or "off" in a digital circuit.
– Sometimes these states are "high" or "low" voltage instead of "on" or "off."
• A byte is a group of eight bits.
– A byte is the smallest possible addressable unit of computer storage.
– The term "addressable" means that a particular byte can be retrieved according to its location in memory.
• A word is a contiguous group of bytes.
– Words can be any number of bits or bytes.
– Word sizes of 16, 32, or 64 bits are most common.
– Intel: 16 bits = 1 word, 32 bits = double word, 64 bits = quad word
– In a word-addressable system, a word is the smallest addressable unit of storage.

Introduction & Floating Point NMMC §1.4.1, Atkinson §1.2 1 Floating

Introduction & Floating Point. NMMC §1.4.1, Atkinson §1.2

1 Floating-point representation, IEEE 754.

In binary, $11.01 = 1 \times 2^{1} + 1 \times 2^{0} + 0 \times 2^{-1} + 1 \times 2^{-2}$.

Double-precision floating-point format has 64 bits: $\pm, a_1, \dots, a_{11}, b_1, \dots, b_{52}$. The associated number is

$$\# = \pm 1.b_1 \cdots b_{52} \times 2^{a_1 \cdots a_{11} - 1023}.$$

If all the $a$ are zero, then the number is 'subnormal' and the representation is

$$\# = \pm 0.b_1 \cdots b_{52} \times 2^{-1022}.$$

If the $a$ are all 1 and the $b$ are all 0 then it's $\pm\infty$ ($\pm 1/0$). If the $a$ are all 1 and the $b$ are not all 0 then it's NaN ($\pm 0/0$).

Machine epsilon is the difference between 1 and the smallest representable number larger than 1, i.e. $\epsilon = 2^{-52} \approx 2 \times 10^{-16}$. The smallest and largest positive numbers are $\approx 10^{\pm 308}$. Numbers larger than the max are set to $\infty$.

2 Rounding & Arithmetic.

Round-toward-nearest rounds a number towards the nearest floating-point number. For nonzero numbers $x$ within the range of max/min, the relative errors due to rounding are

$$\frac{|\mathrm{round}(x) - x|}{|x|} \le 2^{-53} \approx 10^{-16} = u,$$

which is half of machine epsilon.

Floating-point arithmetic: add, subtract, multiply, divide. IEEE 754: If you start with 2 exactly-represented floating-point numbers $x$ and $y$ and compute any arithmetic operation 'op', the magnitude of the relative error in the result is at most half of machine epsilon. I.e. there is some $\delta$ with $|\delta| \le u$ such that

$$\mathrm{fl}(x \ \mathrm{op} \ y) = (x \ \mathrm{op} \ y)(1 + \delta).$$

The exception is underflow/overflow, where the result is either larger or smaller than can be represented.
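The 64-bit layout described above (one sign bit, 11 exponent bits, 52 fraction bits) can be inspected directly. The following is a sketch, assuming Python's standard `struct` module. The helper names `decode_double` and `classify` are hypothetical, and zero is reported separately from the subnormals even though both have an all-zero exponent field.

```python
import struct
import sys


def decode_double(x: float) -> tuple[int, int, int]:
    """Split a double into (sign, biased exponent a_1..a_11, fraction b_1..b_52)."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))  # reinterpret the 8 bytes as an integer
    sign = bits >> 63
    exponent = (bits >> 52) & 0x7FF        # 11 exponent bits, biased by 1023
    fraction = bits & ((1 << 52) - 1)      # low 52 bits
    return sign, exponent, fraction


def classify(x: float) -> str:
    """Name the case from the exponent and fraction fields."""
    _, exponent, fraction = decode_double(x)
    if exponent == 0x7FF:                  # all exponent bits set
        return "inf" if fraction == 0 else "nan"
    if exponent == 0:                      # all exponent bits clear
        return "zero" if fraction == 0 else "subnormal"
    return "normal"


print(decode_double(1.0))                  # biased exponent 1023 means 2**0
print(decode_double(1.0 + 2.0 ** -52))     # next double after 1.0: fraction field is 1
print(classify(5e-324), classify(float("inf")), classify(float("nan")))
print(sys.float_info.epsilon == 2.0 ** -52)
```

Packing in big-endian order (`">d"`) keeps the sign bit in the most significant position of the unpacked integer regardless of the host byte order.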

Design of 32 Bit Floating Point Addition and Subtraction Units Based on IEEE 754 Standard Ajay Rathor, Lalit Bandil Department of Electronics & Communication Eng

International Journal of Engineering Research & Technology (IJERT), ISSN: 2278-0181, Vol. 2 Issue 6, June 2013.

Design of 32 Bit Floating Point Addition and Subtraction Units Based on IEEE 754 Standard. Ajay Rathor, Lalit Bandil, Department of Electronics & Communication Eng., Acropolis Institute of Technology and Research, Indore, India.

Abstract

A floating point arithmetic implementation covers arithmetic operations such as addition, subtraction, multiplication, and division. Such a circuit is useful in scientific computation, where a general purpose arithmetic unit is required for all operations. In this paper, single precision floating point addition and subtraction are described. This paper includes the single precision floating point format representation and provides an implementation technique for addition and subtraction. A low precision custom format is useful for reducing the associated circuit costs and increasing their speed. I consider the implementation of a 32 bit addition and subtraction unit for floating point arithmetic.

Keywords: Addition, Subtraction, single precision, double precision, VERILOG HDL

1. Precision format

Single precision format is the most basic format of the ANSI/IEEE 754-1985 binary floating-point arithmetic standard. Double precision and quad precision describe operations on more bits, so 32 and 64 bit operations of the arithmetic unit can be performed at the same time. Floating point includes the single precision, double precision and quad precision floating point format representations and provides implementation techniques for various arithmetic calculations. Normalization and alignment are useful for operation, and a floating point number should be normalized before any calculation [3].

2. Floating Point format Representation

Floating point numbers have mainly three formats, which are single precision, double precision and quad precision.

Single Precision Format: Single-precision floating-point format is a computer number