MT-009: Data Converter Codes-Can You Decode Them?

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Chapter 2 Data Representation in Computer Systems 2.1 Introduction

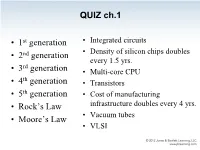

QUIZ ch.1

Terms: 1st generation • 2nd generation • 3rd generation • 4th generation • 5th generation • Rock’s Law • Moore’s Law

Descriptions: Integrated circuits • Density of silicon chips doubles every 1.5 yrs. • Multi-core CPU • Transistors • Cost of manufacturing infrastructure doubles every 4 yrs. • Vacuum tubes • VLSI

QUIZ ch.1 The highest # of transistors in a CPU commercially available today is about: • 100 million • 500 million • 1 billion • 2 billion • 2.5 billion • 5 billion

“As of 2012, the highest transistor count in a commercially available CPU is over 2.5 billion transistors, in Intel's 10-core Xeon Westmere-EX. Xilinx currently holds the "world-record" for an FPGA containing 6.8 billion transistors.” Source: Wikipedia – Transistor_count

Chapter 2 Data Representation in Computer Systems

2.1 Introduction

- A bit is the most basic unit of information in a computer.
  - It is a state of “on” or “off” in a digital circuit.
  - Sometimes these states are “high” or “low” voltage instead of “on” or “off.”
- A byte is a group of eight bits.
  - A byte is the smallest possible addressable unit of computer storage.
  - The term “addressable” means that a particular byte can be retrieved according to its location in memory.
- A word is a contiguous group of bytes.
  - Words can be any number of bits or bytes.
  - Word sizes of 16, 32, or 64 bits are most common.
  - Intel: 16 bits = 1 word, 32 bits = double word, 64 bits = quad word.
  - In a word-addressable system, a word is the smallest addressable unit of storage.

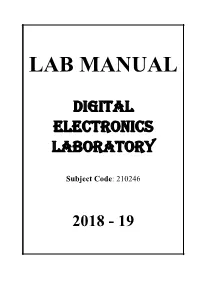

Final-Del-Lab-Manual-2018-19

LAB MANUAL, DIGITAL ELECTRONICS LABORATORY. Subject Code: 210246. 2018 - 19

INDEX (columns: Sr. No, Title, Page No, Date of Conduction, Date of Submission, Signature of Staff)

GROUP - A

1. Realize Full Adder and Subtractor using a) Basic Gates and b) Universal Gates
2. Design and implement Code converters: Binary to Gray and BCD to Excess-3
3. Design of n-bit Carry Save Adder (CSA) and Carry Propagation Adder (CPA). Design and Realization of BCD Adder using 4-bit Binary Adder (IC 7483).
4. Realization of Boolean Expression for suitable combination logic using MUX 74151 / DMUX 74154
5. Verify the truth table of one bit and two bit comparators using logic gates and comparator IC
6. Design & Implement Parity Generator using EX-OR.

GROUP - B

7. Flip Flop Conversion: Design and Realization
8. Design of Ripple Counter using suitable Flip Flops
9. a. Realization of 3 bit Up/Down Counter using MS JK Flip Flop / D-FF; b. Realization of Mod-N counter using (7490 and 74193)
10. Design and Realization of Ring Counter and Johnson Ring counter
11. Design and implement Sequence generator using JK flip-flop
12. Design and implement pseudo random sequence generator.
13. Design and implement Sequence detector using JK flip-flop
14. Design of ASM chart using MUX controller Method.

GROUP - C

15. Design and Implementation of Combinational Logic using PLAs.
16. Design and simulation of Full adder, Flip flop, MUX using VHDL (Any 2). Use different modeling styles.
17. Design & simulate asynchronous 3-bit counter using VHDL.
18. Design and Implementation of Combinational Logic using PALs

GROUP - D

19. Study of Shift Registers (SISO, SIPO, PISO, PIPO)
20. Study of TTL Logic Family: Feature, Characteristics and Comparison with CMOS Family
21. Study of Microcontroller 8051: Features, Architecture and Programming Model

Digital Electronics Lab (Pattern 2015) GROUP - A Assignment: 1 Title: Adder and Subtractor Objective: 1.

A New Approach to the Snake-In-The-Box Problem

ECAI 2012, Luc De Raedt et al. (Eds.). © 2012 The Author(s). This article is published online with Open Access by IOS Press and distributed under the terms of the Creative Commons Attribution Non-Commercial License. doi:10.3233/978-1-61499-098-7-462

A New Approach to the Snake-In-The-Box Problem. David Kinny

Abstract. The “Snake-In-The-Box” problem, first described more than 50 years ago, is a hard combinatorial search problem whose solutions have many practical applications. Until recently, techniques based on Evolutionary Computation have been considered the state-of-the-art for solving this deterministic maximization problem, and held most significant records. This paper reviews the problem and prior solution techniques, then presents a new technique, based on Monte-Carlo Tree Search, which finds significantly better solutions than prior techniques, is considerably faster, and requires no tuning.

1 INTRODUCTION

Research on the SIB problem initially focused on applications in coding [14]. Coils are spread-k circuit codes for k = 2, in which n-words k or more positions apart in the code differ in at least k bit positions [12]. (The well-known Gray codes are spread-1 circuit codes.) Longest snakes and coils provide the maximum number of code words for a given word size (i.e., hypercube dimension). A related application is in encoding schemes for analogue-to-digital converters including shaft (rotation) encoders. Longest SIB codes provide greatest resolution, and single bit errors are either recognised as such or map to an adjacent codeword causing an off-by-one error.

Note on Gray Codes for Permutation Lists

PUBLICATIONS DE L’INSTITUT MATHÉMATIQUE, Nouvelle série, tome 78(92) (2005), 87–92

NOTE ON GRAY CODES FOR PERMUTATION LISTS. Seymour Lipschutz, Jie Gao, and Dianjun Wang. Communicated by Slobodan Simić

Abstract. Robert Sedgewick [5] lists various Gray codes for the permutations in Sn including the classical algorithm by Johnson and Trotter. Here we give an algorithm which constructs many families of Gray codes for Sn, which closely follows the construction of the Binary Reflexive Gray Code for the n-cube Qn.

1. Introduction

There are (n!)! ways to list the n! permutations in Sn. Some such permutation lists have been generated by a computer, and some such permutation lists are Gray codes (where successive permutations differ by a transposition). One such famous Gray code for Sn is by Johnson [4] and Trotter [6]. In fact, each new permutation in the Johnson–Trotter (JT) list for Sn is obtained from the preceding permutation by an adjacent transposition. Sedgewick [5] gave a survey of various Gray codes for Sn in 1977. Subsequently, Conway, Sloane and Wilks [1] gave a new Gray code CSW for Sn in 1989 while proving the existence of Gray codes for the reflection groups. Recently, Gao and Wang [2] gave simple algorithms, each with an efficient implementation, for two new permutation lists GW1 and GW2 for Sn, where the second such list is a Gray code for Sn. The four lists, JT, CSW, GW1 and GW2, for n = 4, are pictured in Figure 1. This paper gives an algorithm for constructing many families of Gray codes for Sn which uses an idea from the construction of the Binary Reflected Gray Code (BRGC) of the n-cube Qn.
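The adjacent-transposition property of the Johnson–Trotter list can be illustrated with a short Python sketch. This is a textbook-style recursive formulation (sweep the largest element back and forth through each permutation of the smaller elements), not code from the paper; the function names `johnson_trotter` and `differs_by_adjacent_swap` are hypothetical.

```python
def johnson_trotter(n):
    """Yield the permutations of 1..n so that successive permutations
    differ by a swap of two neighbouring elements."""
    if n == 1:
        yield (1,)
        return
    for k, perm in enumerate(johnson_trotter(n - 1)):
        # Sweep n from right to left on even steps, left to right on odd steps.
        positions = range(n - 1, -1, -1) if k % 2 == 0 else range(n)
        for pos in positions:
            yield perm[:pos] + (n,) + perm[pos:]


def differs_by_adjacent_swap(a, b):
    """Return True if b is obtained from a by swapping two neighbours."""
    diff = [i for i in range(len(a)) if a[i] != b[i]]
    return (
        len(diff) == 2
        and diff[1] == diff[0] + 1
        and a[diff[0]] == b[diff[1]]
        and a[diff[1]] == b[diff[0]]
    )


if __name__ == "__main__":
    for p in johnson_trotter(3):
        print(p)
```

For n = 3 the sketch lists 123, 132, 312, 321, 231, 213, and each step is a swap of two neighbouring entries.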

Other Binary Codes (3A)

Other Binary Codes (3A). Young Won Lim, 1/3/14

Copyright (c) 2011-2013 Young W. Lim. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Please send corrections (or suggestions) to [email protected]. This document was produced by using OpenOffice and Octave.

Coding: A code is a rule for converting a piece of information (for example, a letter, word, phrase, or gesture) into another form or representation (one sign into another sign), not necessarily of the same type. In communications and information processing, encoding is the process by which information from a source is converted into symbols to be communicated. Decoding is the reverse process, converting these code symbols back into information understandable by a receiver.

Character Coding: ASCII code: definitions for 128 characters, of which 33 are non-printing control characters (many now obsolete) and 95 are printable characters. BCD (Binary Coded Decimal): number characters (0-9).

Representation of Numbers: Fixed Point Number representation (examples: +1234, 0, -582978), coded as 2's complement, 1's complement, or sign-magnitude. Floating Point Number representation (examples: +23.84380, -1.388E+08), coded as IEEE 754.
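The three fixed-point codings named in this excerpt can be compared side by side with a small Python sketch. The function names and the default 8-bit width are assumptions made for illustration; they do not come from the slides.

```python
def _check_range(value, low, high):
    """Raise ValueError if value does not fit in the chosen coding."""
    if not low <= value <= high:
        raise ValueError(f"{value} is outside the range {low}..{high}")


def sign_magnitude(value, bits=8):
    """Sign bit followed by the magnitude in the remaining bits."""
    limit = (1 << (bits - 1)) - 1
    _check_range(value, -limit, limit)
    sign = "1" if value < 0 else "0"
    return sign + format(abs(value), f"0{bits - 1}b")


def ones_complement(value, bits=8):
    """Negative values are the bitwise inverse of the magnitude."""
    limit = (1 << (bits - 1)) - 1
    _check_range(value, -limit, limit)
    mask = (1 << bits) - 1
    pattern = value if value >= 0 else mask - (-value)
    return format(pattern, f"0{bits}b")


def twos_complement(value, bits=8):
    """Negative values are the bitwise inverse of the magnitude, plus one."""
    half = 1 << (bits - 1)
    _check_range(value, -half, half - 1)
    return format(value & ((1 << bits) - 1), f"0{bits}b")


if __name__ == "__main__":
    for encode in (sign_magnitude, ones_complement, twos_complement):
        print(encode.__name__, encode(-5))
```

With these assumptions, -5 in 8 bits is 10000101 in sign-magnitude, 11111010 in 1's complement, and 11111011 in 2's complement; positive values look the same in all three.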

Loopless Gray Code Enumeration and the Tower of Bucharest

Loopless Gray Code Enumeration and the Tower of Bucharest. Felix Herter and Günter Rote, Institut für Informatik, Freie Universität Berlin, Takustr. 9, 14195 Berlin, Germany. [email protected], [email protected]. arXiv:1604.06707v1 [cs.DM] 22 Apr 2016

Abstract. We give new algorithms for generating all n-tuples over an alphabet of m letters, changing only one letter at a time (Gray codes). These algorithms are based on the connection with variations of the Towers of Hanoi game. Our algorithms are loopless, in the sense that the next change can be determined in a constant number of steps, and they can be implemented in hardware. We also give another family of loopless algorithms that is based on the idea of working ahead and saving the work in a buffer.

1998 ACM Subject Classification: F.2.2 Nonnumerical Algorithms and Problems

Keywords and phrases: Tower of Hanoi, Gray code, enumeration, loopless generation

Contents

- 1 Introduction: the binary reflected Gray code and the Towers of Hanoi
  - 1.1 The Gray code
  - 1.2 Loopless algorithms
  - 1.3 The Tower of Hanoi
  - 1.4 Connections between the Towers of Hanoi and Gray codes
  - 1.5 Loopless Tower of Hanoi and binary Gray code
  - 1.6 Overview
- 2 Ternary Gray codes and the Towers of Bucharest
- 3 Gray codes with general radixes
- 4 Generating the m-ary Gray code with odd m
- 5 Generating the m-ary Gray code with even m
- 6 The Towers of Bucharest++
- 7 Simulation
- 8 Working ahead
  - 8.1 An alternative STEP procedure

Data Representation in Computer Systems

© Jones & Bartlett Learning, LLC. NOT FOR SALE OR DISTRIBUTION

There are 10 kinds of people in the world: those who understand binary and those who don’t. (Anonymous)

CHAPTER 2 Data Representation in Computer Systems

2.1 INTRODUCTION

The organization of any computer depends considerably on how it represents numbers, characters, and control information. The converse is also true: Standards and conventions established over the years have determined certain aspects of computer organization. This chapter describes the various ways in which computers can store and manipulate numbers and characters. The ideas presented in the following sections form the basis for understanding the organization and function of all types of digital systems.

The most basic unit of information in a digital computer is called a bit, which is a contraction of binary digit. In the concrete sense, a bit is nothing more than a state of “on” or “off” (or “high” and “low”) within a computer circuit. In 1964, the designers of the IBM System/360 mainframe computer established a convention of using groups of 8 bits as the basic unit of addressable computer storage.

Algorithms for Generating Binary Reflected Gray Code Sequence: Time Efficient Approaches

2009 International Conference on Future Computer and Communication

Algorithms for Generating Binary Reflected Gray Code Sequence: Time Efficient Approaches. Md. Mohsin Ali, Muhammad Nazrul Islam, and A. B. M. Foysal, Dept. of Computer Science and Engineering, Khulna University of Engineering & Technology, Khulna – 9203, Bangladesh. e-mail: [email protected], [email protected], and [email protected]

Abstract. In this paper, we propose two algorithms for generating a complete n-bit binary reflected Gray code sequence. The first one is called Backtracking. It generates a complete n-bit binary reflected Gray code sequence by generating only a sub-tree instead of the complete tree. In this approach, both the sequence and its reflection for n-bit is generated by concatenating a “0” and a “1” to the most significant bit position of the n-1 bit result generated at each leaf node of the sub-tree. The second one is called MOptimal. It is the modification of a Space and time optimal approach [8] by considering the minimization of the total number of outer and inner loop execution for this purpose.

From the introduction: Coxeter groups [4], campanology (the study of bell-ringing) [5], continuous space-filling curves [6], classification of Venn diagrams [7]. Today, Gray codes are widely used to facilitate error correction in digital communications such as digital terrestrial television and some cable TV systems. In this paper, we present the derivation and implementation of two algorithms for generating the complete binary reflected Gray code sequence $G(i)$, $0 \le i \le 2^n - 1$, for a given number of bits n. We evaluate the performance of these algorithms and other
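The reflect-and-prefix construction mentioned in the abstract (prefix "0" to the (n-1)-bit sequence and "1" to its reflection) can be sketched in a few lines of Python. This is the standard textbook construction together with the well-known closed form `i ^ (i >> 1)`; it is not the paper's Backtracking or MOptimal algorithm, and the function names are hypothetical.

```python
def brgc_reflect(n):
    """Build the n-bit binary reflected Gray code (n >= 1) by repeatedly
    prefixing '0' to the current list and '1' to its reflection."""
    codes = [""]
    for _ in range(n):
        codes = ["0" + c for c in codes] + ["1" + c for c in reversed(codes)]
    return codes


def brgc_formula(n):
    """Build the same sequence (n >= 1) from the closed form i XOR (i >> 1)."""
    return [format(i ^ (i >> 1), f"0{n}b") for i in range(1 << n)]


if __name__ == "__main__":
    print(brgc_reflect(3))
```

For n = 3 both functions return 000, 001, 011, 010, 110, 111, 101, 100.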

Graphs Induced by Gray Codes

GRAPHS INDUCED BY GRAY CODES. ELIZABETH L. WILMER AND MICHAEL D. ERNST

Abstract. We disprove a conjecture of Bultena and Ruskey [1], that all trees which are cyclic graphs of cyclic Gray codes have diameter 2 or 4, by producing codes whose cyclic graphs are trees of arbitrarily large diameter. We answer affirmatively two other questions from [1], showing that strongly $P_n \times P_n$-compatible codes exist and that it is possible for a cyclic code to induce a cyclic digraph with no bidirectional edge. A major tool in these proofs is our introduction of supercomposite Gray codes; these generalize the standard reflected Gray code by allowing shifts. We find supercomposite Gray codes which induce large diameter trees, but also show that many trees are not induced by supercomposite Gray codes. We also find the first infinite family of connected graphs known not to be induced by any Gray code: trees of diameter 3 with no vertices of degree 2.

1. Introduction

An n-bit Gray code $B = (b_1, b_2, \ldots, b_N)$, $N = 2^n$, lists all the binary n-tuples ("codewords") so that consecutive n-tuples differ in one bit. In a cyclic code, the first and last n-tuples also differ in one bit. Gray codes can be viewed as Hamiltonian paths on the hypercube graph; cyclic codes correspond to Hamiltonian cycles. Two Gray codes are isomorphic when one is carried to the other by a hypercube isomorphism. The transition sequence $\tau(B) = (\tau_1, \tau_2, \ldots, \tau_{N-1})$ of an n-bit Gray code B lists the bit positions $\tau_i \in [n] = \{1, 2, \ldots, n\}$ where $b_i$ and $b_{i+1}$ differ.
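The transition sequence defined in this excerpt can be computed directly from a list of codewords. The Python sketch below represents codewords as integers and numbers bit positions from 1 at the least significant bit; that numbering is an assumption, since the excerpt does not fix a convention, and the function names are hypothetical.

```python
def changed_bit(a, b):
    """Return the 1-indexed position (1 = least significant bit) of the
    single bit in which integers a and b differ."""
    diff = a ^ b
    if diff == 0 or (diff & (diff - 1)) != 0:
        raise ValueError("codewords must differ in exactly one bit")
    return diff.bit_length()


def transition_sequence(code):
    """List the changed bit position for each consecutive codeword pair."""
    return [changed_bit(a, b) for a, b in zip(code, code[1:])]


def is_cyclic(code):
    """Return True if the last and first codewords also differ in one bit."""
    diff = code[0] ^ code[-1]
    return diff != 0 and (diff & (diff - 1)) == 0


if __name__ == "__main__":
    reflected = [i ^ (i >> 1) for i in range(8)]  # 3-bit reflected Gray code
    print(transition_sequence(reflected), is_cyclic(reflected))
```

For the 3-bit reflected Gray code this gives the transition sequence 1, 2, 1, 3, 1, 2, 1, and the code is cyclic because 100 and 000 differ in one bit.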

Lab Manual Exp Code Converter.Pdf

Hybrid Electronics Laboratory: Design and Simulation of Various Code Converters

Aim: To design and simulate Binary to Gray, Gray to Binary, BCD to Excess-3, and Excess-3 to BCD code converters.

Objectives: 1. To understand different codes. 2. To design various code converters using logic gates. 3. To simulate various code converters. 4. To understand the importance of code converters in real-life applications.

Theory

Binary Codes: A symbolic representation of data or information is called a code. The base or radix of the binary number system is 2. Hence, it has two independent symbols, 0 and 1. A binary digit is called a bit. A binary number consists of a sequence of bits, each of which is either a 0 or a 1. Each bit carries a weight based on its position relative to the binary point. The weight of each bit position is one power of 2 greater than the weight of the position to its immediate right. For example, the binary number 100011 is equivalent to the decimal number 35.

BCD Codes: Numeric codes represent numeric information, i.e. only numbers, as a series of 0’s and 1’s. Numeric codes used to represent decimal digits are called Binary Coded Decimal (BCD) codes. A BCD code is one in which the digits of a decimal number are encoded, one at a time, into groups of four binary digits. There are a large number of BCD codes. In order to represent the decimal digits 0, 1, 2, …, 9, it is necessary to use a sequence of at least four binary digits.
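The four converters named in the aim can be sketched in software before they are built from gates. The Python below is a minimal illustration using the usual XOR relations for Gray code and the add-3 rule for Excess-3; the function names are hypothetical and the lab manual's own gate-level designs are not reproduced here.

```python
def binary_to_gray(b):
    """Each Gray bit is the XOR of a binary bit and its left neighbour."""
    return b ^ (b >> 1)


def gray_to_binary(g):
    """Undo binary_to_gray by XOR-ing together all right shifts of g."""
    b = 0
    while g:
        b ^= g
        g >>= 1
    return b


def bcd_to_excess3(digit):
    """Excess-3 code of one decimal digit: its 4-bit BCD value plus 3."""
    if not 0 <= digit <= 9:
        raise ValueError("a BCD digit must be in 0..9")
    return digit + 3


def excess3_to_bcd(code):
    """Inverse of bcd_to_excess3; valid Excess-3 codes are 3..12."""
    if not 3 <= code <= 12:
        raise ValueError("an Excess-3 code must be in 3..12")
    return code - 3


if __name__ == "__main__":
    print(int("100011", 2))  # positional weights: 32 + 2 + 1
    for value in range(10):
        print(value,
              format(binary_to_gray(value), "04b"),
              format(bcd_to_excess3(value), "04b"))
```

As a check on the theory text, `int("100011", 2)` evaluates to 35, and binary 0100 (decimal 4) maps to Gray 0110 and to Excess-3 0111.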

Chap02: Data Representation in Computer Systems

CHAPTER 2 Data Representation in Computer Systems

- 2.1 Introduction
- 2.2 Positional Numbering Systems
- 2.3 Converting Between Bases
  - 2.3.1 Converting Unsigned Whole Numbers
  - 2.3.2 Converting Fractions
  - 2.3.3 Converting between Power-of-Two Radices
- 2.4 Signed Integer Representation
  - 2.4.1 Signed Magnitude
  - 2.4.2 Complement Systems
  - 2.4.3 Excess-M Representation for Signed Numbers
  - 2.4.4 Unsigned Versus Signed Numbers
  - 2.4.5 Computers, Arithmetic, and Booth’s Algorithm
  - 2.4.6 Carry Versus Overflow
  - 2.4.7 Binary Multiplication and Division Using Shifting
- 2.5 Floating-Point Representation
  - 2.5.1 A Simple Model
  - 2.5.2 Floating-Point Arithmetic
  - 2.5.3 Floating-Point Errors
  - 2.5.4 The IEEE-754 Floating-Point Standard
  - 2.5.5 Range, Precision, and Accuracy
  - 2.5.6 Additional Problems with Floating-Point Numbers
- 2.6 Character Codes
  - 2.6.1 Binary-Coded Decimal
  - 2.6.2 EBCDIC
  - 2.6.3 ASCII
  - 2.6.4 Unicode
- 2.7 Error Detection and Correction
  - 2.7.1 Cyclic Redundancy Check
  - 2.7.2 Hamming Codes
  - 2.7.3 Reed-Solomon
- Chapter Summary

CMPS375 Class Notes (Chap02), Dr. Kuo-pao Yang

2.1 Introduction

This chapter describes the various ways in which computers can store and manipulate numbers and characters. Bit: The most basic unit of information in a digital computer is called a bit, which is a contraction of binary digit.

A Review of Binary to Gray and Gray to Binary Code Conversion

Volume 3, Issue 10, October – 2018, International Journal of Innovative Science and Research Technology, ISSN No:-2456-2165

A Review of Binary to Gray and Gray to Binary Code Conversion. Pratibha Soni, M. TECH. Scholar, Apex Institute of Engineering and Technology, Jaipur, Rajasthan, India. Vimal Kumar Agarwal, Associate Professor, Apex Institute of Engineering and Technology, Jaipur, Rajasthan, India.

Abstract:- In today’s world of electronics industries low power has emerged as a principal theme. Power dissipation has become an essential consideration as area for VLSI Chip design and performance. With shrinking technology reducing overall power management and power consumption on chip are the main challenges below 100nm as a result of increased complexity. When it comes to many designs available, power optimization is essential as timing as a result to the demand to extend the battery life and reduce package cost. As to reduce the power consumption we have many techniques from those techniques, lets we implement on Reverse Logic. In reversible logic it as greater advantage and executing in high speed, with less power dissipation which is implemented by using basic logic gates in less area.

II. LITERATURE REVIEW

One of the promising computing technologies assuring zero power dissipation is the Reversible Logic. Reversible Logic has a wide spectrum of applications like Nanotechnology based systems, Bio Informatics optical Circuits, quantum computing, and Low Power VLSI. Therefore, this paper represents the 4 bit reversible comparator based on classical logic circuit which uses the existing reversible gates. The design simply aims at reducing optimization parameters like quantum cost, garbage outputs, and the number of constant inputs. The results obtained in this research work indicate that the proposed comparator has one constant input and 4 quantum